Red Hat Enterprise Linux OpenStack Platform 6: SR-IOV Networking - Part I: Understanding the Basics

Red Hat Enterprise Linux OpenStack Platform 6 introduces support for single root I/O virtualization (SR-IOV) networking. This is done through a new SR-IOV mechanism driver for the OpenStack Networking (Neutron) Modular Layer 2 (ML2) plugin, as well as necessary enhancements for PCI support in the Compute service (Nova).

In this blog post I would like to provide an overview of SR-IOV, and highlight why SR-IOV networking is an important addition to RHEL OpenStack Platform 6. We will also follow up with a second blog post going into the configuration details, describing the current implementation, and discussing some of the current known limitations and expected enhancements going forward.

PCI Passthrough: The Basics

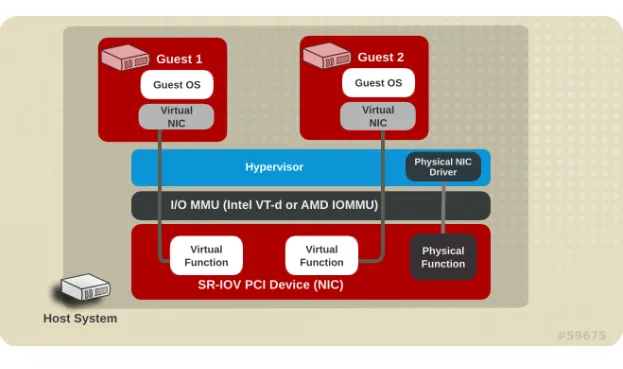

PCI Passthrough allows direct assignment of a PCI device to a guest operating system (OS). One prerequisite for doing this is that the hypervisor host must support either the Intel VT-d or AMD IOMMU extensions. Standard passthrough gives a virtual machine (VM) exclusive access to a PCI device, which then appears and behaves as if it were physically attached to the guest OS. In the case of networking, it is possible to utilize PCI passthrough to dedicate an entire network device (i.e., a physical port on a network adapter) to a guest OS running within a VM.
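As a rough illustration of the IOMMU prerequisite, the sketch below lists the IOMMU groups that a Linux host exposes under `/sys/kernel/iommu_groups`. The function name `iommu_groups` and the `sysfs_root` parameter are my own, added so the function can be pointed at a test directory. An empty result suggests that the IOMMU is disabled or unsupported, but treat it as a hint rather than a definitive check.

```python
from pathlib import Path


def iommu_groups(sysfs_root="/sys"):
    """Return the sorted IOMMU group numbers visible under sysfs.

    An empty list usually means the IOMMU (Intel VT-d or AMD IOMMU)
    is not active on this host. This is a heuristic, not a guarantee.
    """
    base = Path(sysfs_root) / "kernel" / "iommu_groups"
    if not base.is_dir():
        return []
    # Each active group appears as a directory named after its number.
    return sorted(int(p.name) for p in base.iterdir() if p.name.isdigit())
```

Calling `iommu_groups()` on a Compute node and getting back a non-empty list is a quick sanity check before attempting any device assignment.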

What is SR-IOV?

Single root I/O virtualization, officially abbreviated as SR-IOV, is a specification that allows a PCI device to separate access to its resources among various PCI hardware functions: a Physical Function (PF) and one or more Virtual Functions (VFs). SR-IOV provides a standard way for a single physical I/O device to present itself to the PCIe bus as multiple virtual devices. While PFs are full-featured PCIe functions, VFs are lightweight functions that lack configuration resources of their own. VF configuration and management is done through the PF, so the VFs can concentrate on data movement only. It is important to note that the overall bandwidth available to the PF is shared between all VFs associated with it.

In the case of networking, SR-IOV allows a physical network adapter to appear as multiple PCIe network devices. Each physical port on the network interface card (NIC) is represented as a Physical Function (PF), and each PF can be associated with a configurable number of Virtual Functions (VFs). Allocating a VF to a virtual machine instance enables network traffic to bypass the software layer of the hypervisor and flow directly between the VF and the virtual machine. This way, the logic for I/O operations resides in the network adapter itself, and the virtual machines think they are interacting with multiple separate network devices. This allows near line-rate performance without the need to dedicate a separate physical NIC to each individual virtual machine. Compared with standard PCI Passthrough, which dedicates an entire physical port to a single guest, SR-IOV offers more flexibility because one port can be shared by many virtual machines.
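To make the PF/VF relationship a bit more concrete, here is a minimal sketch that reads how many VFs are currently configured on a PF and how many the device supports. It relies on the `sriov_numvfs` and `sriov_totalvfs` attributes that the Linux kernel exposes in sysfs for SR-IOV capable devices. The function name `read_vf_counts`, the `sysfs_root` parameter, and the interface name in the usage note are assumptions of mine for illustration.

```python
from pathlib import Path


def read_vf_counts(iface, sysfs_root="/sys"):
    """Return (configured_vfs, max_vfs) for the PF behind `iface`.

    Returns None when the device does not expose the SR-IOV
    attributes, e.g. because the adapter or driver lacks SR-IOV support.
    """
    dev = Path(sysfs_root) / "class" / "net" / iface / "device"
    num_path = dev / "sriov_numvfs"
    total_path = dev / "sriov_totalvfs"
    if not num_path.is_file() or not total_path.is_file():
        return None
    configured = int(num_path.read_text().strip())
    maximum = int(total_path.read_text().strip())
    return configured, maximum
```

For example, `read_vf_counts("eth0")` on a host where `eth0` is an SR-IOV capable port would return a pair such as the number of VFs enabled so far and the upper limit the adapter advertises.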

Since the network traffic completely bypasses the software layer of the hypervisor, including the software switch typically used in virtualization environments, the physical network adapter is responsible for managing the traffic flows, including proper separation and bridging. This means that the network adapter must provide support for SR-IOV and implement some form of hardware-based Virtual Ethernet Bridge (VEB).

In Red Hat Enterprise Linux 7, which provides the base operating system for RHEL OpenStack Platform 6, driver support for SR-IOV network adapters has been expanded to cover more device models from known vendors. In addition, the number of available SR-IOV Virtual Functions has been increased for capable network adapters, resulting in the expanded capability to configure up to 128 VFs per PF. Please refer to the following article for details on supported drivers.

SR-IOV in OpenStack

Starting with Red Hat Enterprise Linux OpenStack Platform 4, it is possible to boot a virtual machine instance with standard, general purpose PCI device passthrough. However, SR-IOV and PCI Passthrough for networking devices are available only from Red Hat Enterprise Linux OpenStack Platform 6 onward, where proper networking awareness was added.

Traditionally, a Neutron port is a virtual port that is typically attached to a virtual bridge (e.g., Open vSwitch) on a Compute node. With the introduction of SR-IOV networking support, it is now possible to associate a Neutron port with a Virtual Function that resides on the network adapter. For those Neutron ports, a virtual bridge on the Compute node is no longer required.
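As a small sketch of what "associating a Neutron port with a VF" looks like from the API side, the snippet below builds the body of a port-create request that sets the `binding:vnic_type` port attribute. The helper name `build_sriov_port_request` and the network ID in the usage note are hypothetical, and the set of accepted values reflects my understanding of this release (`normal` for a regular virtual port, `direct` and `macvtap` for VF-backed ports). The actual configuration steps are the subject of the follow-up post.

```python
# vNIC types assumed for this sketch; "normal" is a regular virtual port.
VNIC_TYPES = {"normal", "direct", "macvtap"}


def build_sriov_port_request(network_id, vnic_type="direct"):
    """Build a Neutron port-create request body for the given vNIC type."""
    if vnic_type not in VNIC_TYPES:
        raise ValueError("unsupported vnic_type: %r" % (vnic_type,))
    return {
        "port": {
            "network_id": network_id,
            # Asks Neutron to bind the port to a VF rather than to a
            # virtual bridge when the type is "direct" or "macvtap".
            "binding:vnic_type": vnic_type,
        }
    }
```

A call such as `build_sriov_port_request("my-network-id")` yields a dictionary that could be sent to the Neutron ports endpoint; the resulting port would then be passed to Nova when booting the instance.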

When a packet arrives at the physical port on the network adapter, it is placed into a specific VF pool based on the MAC address or VLAN tag. This enables a direct memory access transfer of packets to and from the virtual machine. The hypervisor is not involved in the packet processing to move the packet, thus removing bottlenecks in the path. Virtual machine instances using SR-IOV ports and virtual machine instances using regular ports (e.g., linked to an Open vSwitch bridge) can communicate with each other across the network as long as the appropriate configuration (i.e., flat, VLAN) is in place.
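The classification step described above can be pictured with a toy model. The class below is purely illustrative: real adapters do this in hardware, and the name `VirtualEthernetBridge`, its methods, and the choice to key the table on a (MAC, VLAN) pair are simplifying assumptions of mine rather than a description of any particular NIC.

```python
from typing import Dict, Optional, Tuple


class VirtualEthernetBridge:
    """Toy model of a NIC's embedded switch steering frames to VFs."""

    def __init__(self) -> None:
        # Maps (destination MAC, VLAN ID or None) to a VF index.
        self._table: Dict[Tuple[str, Optional[int]], int] = {}

    def assign(self, vf_index: int, mac: str, vlan: Optional[int] = None) -> None:
        """Record that frames for this MAC/VLAN belong to the given VF."""
        self._table[(mac.lower(), vlan)] = vf_index

    def classify(self, dst_mac: str, vlan: Optional[int] = None) -> Optional[int]:
        """Return the VF index for an incoming frame, or None if no VF matches."""
        return self._table.get((dst_mac.lower(), vlan))


veb = VirtualEthernetBridge()
veb.assign(0, "fa:16:3e:00:00:01", vlan=100)
veb.assign(1, "fa:16:3e:00:00:02")
```

With this setup, a frame for `fa:16:3e:00:00:01` tagged with VLAN 100 is steered to VF 0, while the same MAC without the tag matches no VF. In hardware, a matched frame is then moved into the guest's memory by DMA without the hypervisor touching it.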

While Ethernet is the most common networking technology deployed in today's data centers, it is also possible to use SR-IOV pass-through for ports using other networking technologies, such as InfiniBand (IB). However, the current SR-IOV Neutron ML2 driver supports Ethernet ports only.

Why SR-IOV and OpenStack?

The main motivation for using SR-IOV networking is to provide enhanced performance characteristics (e.g., throughput, delay) for specific networks or virtual machines. The feature is extremely popular among our telecommunications customers and those seeking to implement virtual network functions (VNFs) on top of RHEL OpenStack Platform, a common use case for Network Functions Virtualization (NFV).

Each network function has a unique set of performance requirements. These requirements may vary based on the function's role as we consider control plane virtualization (e.g., signalling, session control, and subscriber databases), management plane virtualization (e.g., OSS, off-line charging, and network element managers), and data plane virtualization (e.g., media gateways, routers, and firewalls). SR-IOV is one of the popular techniques available today for reaching the high performance characteristics required mostly by data plane functions.
