In this post:

- Understand how pipelines can be used to automate infrastructure or platform lifecycle management within a service provider’s environment
- Learn how pipelines play a key role in delivering frequent and reliable software deployment and upgrades for smoother day 2 operations
- Find out how new versions of vendor software can be obtained and validated for use based on a service provider’s current configuration
- Read how the Red Hat OpenShift-hosted control plane capability is used to facilitate software testing

In Pipelines for cloud-native network functions (CNFs) Part 1: Pipelines for onboarding CNFs, I discussed how modern telecommunications processes, including infrastructure as code (IaC), development and operations (DevOps), development, security and operations (DevSecOps), network operations (NetOps) and GitOps, use pipelines to achieve automation, consistency and reliability.

In this article, I discuss how pipelines can be used for lifecycle management (LCM) of software components of an infrastructure or platform. The key objective is to achieve more frequent and reliable deployments and upgrades of the associated infrastructure or platform. This helps to accelerate the adoption of new software that matches a service provider’s requirements. The lack of service provider adoption of this type of pipeline is one reason why day 2 operations of cloud-native deployments are often challenging.

Pipelines for LCM

Design begins with the definition of stages to obtain new versions of software components. The level of design complexity depends on the particular requirements of the software vendor, ranging from a simple script to an advanced automation workflow. The automated workflow would interact with APIs provided by the vendor to obtain the software and mirror it locally within the service provider’s environment.

As an example, I will describe the concept of a pipeline for identifying available Red Hat OpenShift releases. The pipeline has to validate whether a specific release can be deployed on the service provider’s current configuration. The pipeline’s goal is to achieve reliable upgrades of air-gapped OpenShift clusters (that is, clusters with no connection to the internet or with restricted access to online vendor registries).

As depicted below, using OpenShift 4.7 as a baseline, an OpenShift release is supported for up to 18 months. During that time, micro versions or "z-releases" are provided with security patches and bug fixes.

Pipeline design has to consider as many combinations as the service provider may choose to have. For this example, and as depicted below, I am only considering a simple pipeline to be used for micro updates, or for upgrades from one OpenShift release to the next.
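The two cases this simple pipeline handles can be told apart with plain version arithmetic. The following is a minimal Python sketch, not the actual pipeline logic: the names `parse_version` and `classify_update` and the labels `"micro"`, `"minor"` and `"rejected"` are hypothetical, and a real pipeline would also need to check the vendor's published upgrade paths, which this sketch does not do.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    micro: int


def parse_version(text: str) -> Version:
    """Parse an 'x.y.z' string such as '4.7.13' into a comparable tuple."""
    parts = text.split(".")
    if len(parts) != 3:
        raise ValueError(f"expected x.y.z, got {text!r}")
    major, minor, micro = (int(part) for part in parts)
    return Version(major, minor, micro)


def classify_update(current: str, candidate: str) -> str:
    """Classify a candidate relative to the currently accepted version.

    Returns 'micro' for a z-release within the same release, 'minor' for
    the next release, and 'rejected' for anything else (downgrades, the
    same version, or skipping a release).
    """
    cur, cand = parse_version(current), parse_version(candidate)
    if cand <= cur:
        return "rejected"
    if (cand.major, cand.minor) == (cur.major, cur.minor):
        return "micro"
    if cand.major == cur.major and cand.minor == cur.minor + 1:
        return "minor"
    return "rejected"
```

A service provider that allows more combinations would extend the final branch rather than reject them outright.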

There is considerable overlap between these pipelines and the pipelines for onboarding CNFs that were identified in part 1. The initial pipeline (A) is responsible for mirroring a new OpenShift version to the service provider’s registry. Once mirrored, this pipeline creates a new candidate version to be used over subsequent pipelines.
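As a rough illustration of what pipeline A produces, the Python sketch below plans where a vendor image would land in the service provider's registry and records it as a candidate. It only computes names and does not copy anything. The `Candidate` type, the `plan_mirror` function, the `"candidate"` status value and the registry hostnames in the usage note are all assumptions made for this example, and the sketch handles tagged references only, not digests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Record created once a release has been mirrored (hypothetical type)."""
    version: str
    source_image: str
    mirrored_image: str
    status: str = "candidate"


def plan_mirror(source_image: str, internal_registry: str) -> Candidate:
    """Map 'host/repository:tag' onto the internal registry.

    The tag is assumed to carry the release version.
    """
    _host, slash, rest = source_image.partition("/")
    if not slash or ":" not in rest:
        raise ValueError(f"expected host/repository:tag, got {source_image!r}")
    repository, _, tag = rest.rpartition(":")
    return Candidate(
        version=tag,
        source_image=source_image,
        mirrored_image=f"{internal_registry}/{repository}:{tag}",
    )
```

For instance, `plan_mirror("vendor.example.com/releases/platform:4.7.13", "registry.sp.example")` yields a candidate whose `mirrored_image` keeps the repository path and tag but points at the internal registry.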

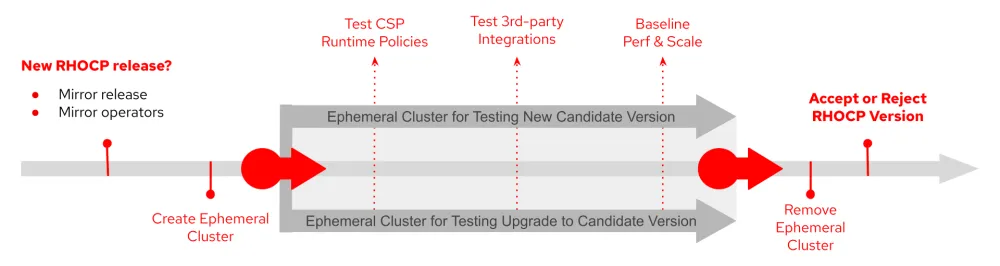

Best practice dictates that software artifacts provided by any vendor, or those generated outside of the service provider, should be scanned and security checked. The next pipeline (B) is used to provide a baseline for comparison, or can be used for audit purposes. The service provider’s vetting process is where the actual validation and verification work happens (C and D). To understand these steps, a different representation of these pipelines is depicted below:

First, an ephemeral cluster is created. As I discussed in part 1, the OpenShift hosted control plane capability is an ideal approach for instantiating an ephemeral cluster for this purpose.

There are differences between the initial stages of these pipelines. One pipeline (C) is responsible for deploying the new OpenShift candidate version into the ephemeral cluster. The other pipeline (D) executes an upgrade procedure on the ephemeral cluster that has the previously accepted version. After these initial stages, the remaining pipeline stages proceed to execute the following steps:

- Validation to better adhere to the service provider’s policies
- A defined functional and integration test
- A load test for the creation of a baseline associated with the new OpenShift candidate version

When all the tests and validations are successful, the ephemeral cluster is removed and resources are made available for future use.
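The flow of pipelines C and D can be sketched in Python as one differing first stage followed by a shared list of checks. This is a simplified model under stated assumptions, not how any particular product implements it: `EphemeralCluster`, `VettingResult`, `install_stage`, `upgrade_stage` and `run_vetting` are hypothetical names, each check is reduced to a function returning `True` or `False`, and leaving a failed cluster in place for inspection is a choice made for this sketch rather than something the process above prescribes.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class EphemeralCluster:
    """Stand-in for a short-lived test cluster (hypothetical type)."""
    name: str
    version: Optional[str] = None  # None means nothing is installed yet
    removed: bool = False

    def remove(self) -> None:
        # A real pipeline would release the cluster's resources here.
        self.removed = True


Stage = Tuple[str, Callable[[EphemeralCluster], bool]]


@dataclass
class VettingResult:
    passed: bool = True
    completed: List[str] = field(default_factory=list)
    failed_stage: Optional[str] = None


def install_stage(candidate: str) -> Stage:
    """Pipeline C: deploy the candidate version onto an empty cluster."""
    def run(cluster: EphemeralCluster) -> bool:
        if cluster.version is not None:
            return False
        cluster.version = candidate
        return True
    return ("install-candidate", run)


def upgrade_stage(accepted: str, candidate: str) -> Stage:
    """Pipeline D: upgrade a cluster running the previously accepted version."""
    def run(cluster: EphemeralCluster) -> bool:
        if cluster.version != accepted:
            return False
        cluster.version = candidate
        return True
    return ("upgrade-to-candidate", run)


def run_vetting(cluster: EphemeralCluster, first: Stage,
                common: List[Stage]) -> VettingResult:
    """Run the first stage, then the shared stages, stopping at a failure."""
    result = VettingResult()
    for name, check in [first, *common]:
        if not check(cluster):
            result.passed = False
            result.failed_stage = name
            return result  # the cluster is left in place in this sketch
        result.completed.append(name)
    cluster.remove()  # all stages passed: free the resources
    return result
```

The shared list would hold the policy validation, the functional and integration test, and the load test, in that order. Keeping them in one list means pipelines C and D cannot drift apart in what they verify.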

The next pipeline (E) tags and labels the software components as a baseline for new clusters defined in the environment. In many service provider environments, this final stage mirrors the software artifact into internal repositories where it can be consumed by other specialized pipelines.
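The tagging step of pipeline E might look like the following Python sketch, which only models the bookkeeping. The `ReleaseRecord` type, the `promote_to_baseline` function and the label keys are hypothetical, and the copy into internal repositories is deliberately left out.

```python
from dataclasses import dataclass, field, replace
from typing import Dict


@dataclass(frozen=True)
class ReleaseRecord:
    """Bookkeeping entry for a mirrored release (hypothetical type)."""
    version: str
    image: str
    labels: Dict[str, str] = field(default_factory=dict)


def promote_to_baseline(record: ReleaseRecord, vetting_passed: bool) -> ReleaseRecord:
    """Return a copy of the record labeled as the baseline for new clusters.

    Only a release that passed vetting may be promoted.
    """
    if not vetting_passed:
        raise ValueError("only vetted candidates can become a baseline")
    labels = {**record.labels, "status": "baseline", "baseline-version": record.version}
    return replace(record, labels=labels)
```

Returning a new record instead of mutating the old one keeps the candidate entry available for audit.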

How Red Hat can help

Lifecycle management enables more frequent and reliable deployment and upgrades of the associated infrastructure or platforms. Using pipelines to deploy and validate software releases and any incremental versions, and to check whether the software adheres to a service provider’s policies, is important for consistency, reliability and faster time-to-market.

Service providers that use Red Hat OpenShift will benefit from simplified workflows that can be used to optimize their operational model and reduce their total cost of ownership (TCO).

The adoption of pipelines can be achieved with Red Hat OpenShift Pipelines, a Kubernetes-native CI/CD solution based on Tekton. It builds on Tekton to provide a CI/CD experience through tight integration with Red Hat OpenShift and Red Hat developer tools. Red Hat OpenShift Pipelines is designed to run each step of the CI/CD pipeline in its own container, allowing each step to scale independently to meet the demands of the pipeline.

To help implement best practices and adhere to a service provider’s own security requirements, Red Hat Advanced Cluster Security for Kubernetes provides built-in controls for security policy enforcement across the entire software development cycle, helping reduce operational risk and increase developer productivity. Safeguarding cloud-native applications and their underlying infrastructure prevents operational and scalability issues and helps organizations keep pace with increasingly rapid release schedules.

In my next post I will cover pipelines for multitenant end-to-end integrations and how they are used in conjunction with the pipelines described here to capture incompatibility and other issues before they are adopted into a service provider’s production environment.

About the author

William is a Product Manager in Red Hat's AI Business Unit and is a seasoned professional and inventor at the forefront of artificial intelligence. With expertise spanning high-performance computing, enterprise platforms, data science, and machine learning, William has a track record of introducing cutting-edge technologies across diverse markets. He now leverages this comprehensive background to drive innovative solutions in generative AI, addressing complex customer challenges in this emerging field. Beyond his professional role, William volunteers as a mentor to social entrepreneurs, guiding them in developing responsible AI-enabled products and services. He is also an active participant in the Cloud Native Computing Foundation (CNCF) community, contributing to the advancement of cloud native technologies.
