In this post:

- Understand how pipelines can be used to automate processes within telco service provider environments
- Learn how different pipelines can be used to create an onboarding experience
- Read how pipelines enforce compatibility with a service provider’s requirements
- Find out how Red Hat OpenShift can be used to create a digital twin for onboarded application testing

Using pipelines to achieve greater automation, improved consistency and enhanced reliability of a process is a well-established practice. We can find pipelines within telecommunication and IT processes including Infrastructure as Code (IaC), development and operations (DevOps), development, security and operations (DevSecOps), network operations (NetOps) and GitOps. A common characteristic among these processes is that they express the intent, or desired state, in a declarative, versioned and immutable manner.

Because they drive predictive workflows while enforcing security and policy guardrails, pipelines can be used to take control of time-consuming processes. Examples include onboarding applications or cloud-native network functions (CNFs), and pre-qualifying new software releases as candidates for telco environments. In a series of posts I am going to discuss these examples from three perspectives:

- Part 1 (this post) will focus on onboarding pipelines
- Part 2 will focus on pipelines for lifecycle management
- Part 3 will cover pipelines for multi-tenant end-to-end integrations

Onboarding pipelines

Onboarding pipelines allow third-party applications to be onboarded into a telco environment. They do so by automatically running standard security policy validations on a digital twin, enforcing organizational best practices and, when needed, forcing application vendors to decouple their software development artifact definitions from the lifecycle management of the platform.

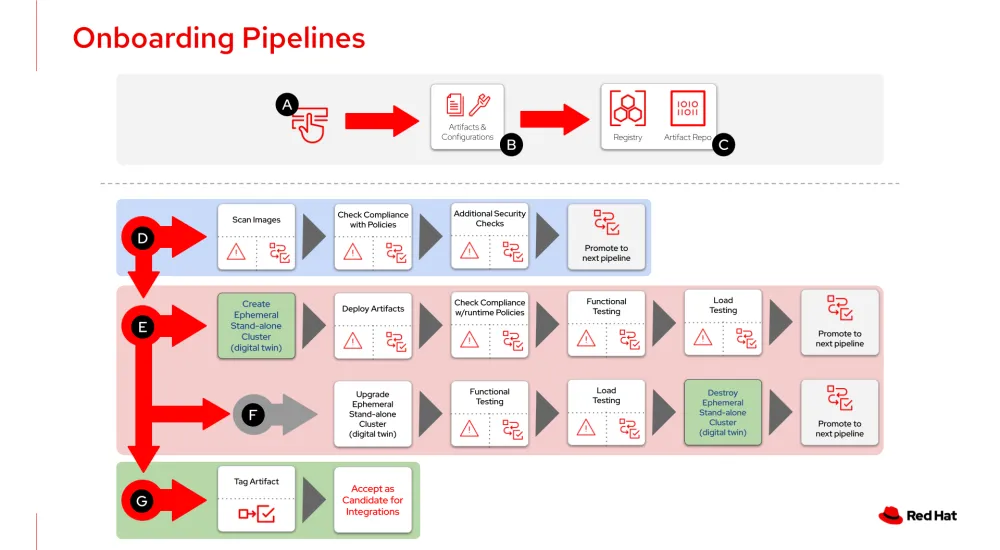

An onboarding pipeline is a collection of pipelines that are used to create an onboarding experience. There is no definitive design, but there are certain patterns that can be considered. For example, the following diagram depicts an onboarding pipeline consisting of four pipelines and a number of stages.

The onboarding process starts with a flow in which the application or CNF vendor uploads the artifacts (A), configuration and a software bill of materials (SBOM) for their application relevant to the telco environment (B), and other artifact repositories and image registries if needed (C).

The beginning of the process is a simple transfer of artifacts between the vendor and service provider. A key component of these artifacts is the SBOM, and enforcing this (or manifests associated with the artifacts) provides a clear statement of application programming interface (API) dependencies.
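As a rough illustration of enforcing the SBOM at upload time, here is a minimal Python sketch. It assumes the vendor delivers a CycloneDX-style JSON document under the file name `sbom.json`; both the format choice and the file name are my assumptions for the example, not something the process above prescribes.

```python
import json


def sbom_components(sbom_text):
    """Parse a CycloneDX-style JSON SBOM and return sorted (name, version) pairs."""
    sbom = json.loads(sbom_text)
    if sbom.get("bomFormat") != "CycloneDX":
        raise ValueError("unsupported or missing SBOM format")
    return sorted((c["name"], c.get("version", "")) for c in sbom.get("components", []))


def validate_upload(files):
    """Reject an upload bundle (file name -> text content) that lacks a usable SBOM."""
    sbom_text = files.get("sbom.json")  # hypothetical required file name
    if sbom_text is None:
        raise ValueError("upload rejected: no SBOM provided")
    components = sbom_components(sbom_text)
    if not components:
        raise ValueError("upload rejected: SBOM lists no components")
    return components


# Example bundle with made-up component names.
bundle = {
    "values.yaml": "replicas: 2",
    "sbom.json": json.dumps({
        "bomFormat": "CycloneDX",
        "components": [
            {"type": "library", "name": "openssl", "version": "3.0.13"},
            {"type": "library", "name": "libfoo", "version": "1.2.3"},
        ],
    }),
}
print(validate_upload(bundle))
```

Rejecting the upload before any pipeline runs keeps the later stages simple, since they can rely on the component list being present.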

The first pipeline of the onboarding process (D) begins with the following checks:

- Scans the images and continues validating the artifacts following the service provider’s base policies, which may include a definition of an unprivileged run and no dependencies on deprecated API versions
- Performs additional security checks including no hard coding of passwords, no consumption of upstream binaries without the proper vetting process by the vendor, and integration with the service provider’s identity providers
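To make a few of these checks concrete, here is a minimal Python sketch that inspects Kubernetes manifests already loaded as dictionaries. The list of rejected API versions and the secret-name pattern are assumptions standing in for a service provider's real base policy, and a production pipeline would rely on dedicated scanning tools rather than a hand-written function like this one.

```python
import re

# Assumption: API versions this hypothetical service provider rejects.
DEPRECATED_API_VERSIONS = {"extensions/v1beta1", "policy/v1beta1"}
# Assumption: environment variable names that suggest a credential.
SECRET_NAME = re.compile(r"(PASSWORD|PASSWD|SECRET|TOKEN)", re.IGNORECASE)


def containers_of(manifest):
    """Return the container specs of a Pod or of a workload with a pod template."""
    spec = manifest.get("spec", {})
    pod_spec = spec.get("template", {}).get("spec", spec)
    return pod_spec.get("containers", [])


def check_manifest(manifest):
    """Return a list of human-readable policy violations for one manifest."""
    name = manifest.get("metadata", {}).get("name", "<unnamed>")
    violations = []
    if manifest.get("apiVersion") in DEPRECATED_API_VERSIONS:
        violations.append(f"{name}: deprecated apiVersion {manifest['apiVersion']}")
    for container in containers_of(manifest):
        cname = container.get("name", "<unnamed>")
        context = container.get("securityContext", {})
        if context.get("privileged") or context.get("runAsUser") == 0:
            violations.append(f"{name}/{cname}: runs privileged or as root")
        for env in container.get("env", []):
            # A literal "value" is hard-coded; "valueFrom" references a secret.
            if SECRET_NAME.search(env.get("name", "")) and "value" in env:
                violations.append(f"{name}/{cname}: hard-coded secret in {env['name']}")
    return violations


# Example manifest with made-up names that breaks all three rules.
deployment = {
    "apiVersion": "extensions/v1beta1",
    "kind": "Deployment",
    "metadata": {"name": "cnf-example"},
    "spec": {"template": {"spec": {"containers": [{
        "name": "app",
        "securityContext": {"privileged": True},
        "env": [
            {"name": "DB_PASSWORD", "value": "changeme"},
            {"name": "API_TOKEN", "valueFrom": {"secretKeyRef": {"name": "s", "key": "k"}}},
        ],
    }]}}},
}
for violation in check_manifest(deployment):
    print(violation)
```

An empty list means the manifest passes these particular rules and can move on to the next stage.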

The first pipeline enforces compatibility with existing service provider platforms, catching incompatible APIs early in the process rather than after deployment. Once these initial stages are completed successfully, the application and artifacts are tagged or labeled and promoted to the next pipeline (E).

Two pipelines are associated with runtime compatibility and compliance (E and F). The first of these (E) begins by creating a digital twin. In this example, a discardable (or ephemeral) stand-alone cluster is created, where the application can be tested without affecting other applications in the service provider’s network.

As depicted below, Red Hat OpenShift can create the digital twin with its hosted control planes capability. A management cluster is used to create other clusters with a containerized control plane, giving the ability to more easily create and destroy digital twins.

Once the digital twin is ready, the application is deployed and configured. The pipeline (E) proceeds to execute compliance checks for validating adherence to the service provider’s runtime policies. Once the check is cleared, automated tests relevant to the functionalities of the application being onboarded are performed, including load testing for defining a baseline for the particular version and configuration of the application.
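One way to turn a load test into a baseline is to reduce the raw samples to a few summary metrics recorded against the application version. The following Python sketch assumes the load test yields a list of request latencies in milliseconds plus error and request counts; the choice of metrics (median, 95th percentile, error rate) is mine for illustration.

```python
import statistics


def build_baseline(app, version, latencies_ms, errors, requests):
    """Summarize one load-test run as a baseline record for this app version."""
    if requests <= 0 or len(latencies_ms) < 2:
        raise ValueError("load test produced too few samples")
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=20, method="inclusive")[-1]
    return {
        "app": app,
        "version": version,
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": p95,
        "error_rate": errors / requests,
    }


# Example with made-up measurements.
baseline = build_baseline("cnf-example", "1.4.0", [10, 20, 30, 40], errors=1, requests=100)
print(baseline)
```

Storing the version alongside the metrics matters because the baseline is only meaningful for that particular version and configuration.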

To deliver a comprehensive testing process, an onboarding pipeline should also test the application during a cluster upgrade. This forces the application to be decoupled from the lifecycle management of the platform, resulting in longer-term stability and usability of the onboarded application. Upgrading the discardable cluster (F) and then executing the functional and load testing validation once again provides confidence that the application continues to work. Only if all the checks are successfully completed can the application and its artifacts be accepted.
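The comparison after the upgrade can be as simple as checking each metric against the value recorded before it. This Python sketch assumes metrics where lower is better and a 10% tolerance; both the tolerance and the metric names are illustrative assumptions.

```python
def regressions(before_upgrade, after_upgrade, tolerance=0.10):
    """Return metrics that got worse than before the upgrade by more than `tolerance`.

    Both arguments map a metric name to a value where lower is better.
    A metric missing after the upgrade is also reported as a regression.
    """
    failed = {}
    for metric, before in before_upgrade.items():
        after = after_upgrade.get(metric)
        if after is None or after > before * (1 + tolerance):
            failed[metric] = (before, after)
    return failed


# Example with made-up measurements.
before = {"p95_ms": 40.0, "error_rate": 0.0}
print(regressions(before, {"p95_ms": 42.0, "error_rate": 0.0}))
print(regressions(before, {"p95_ms": 50.0, "error_rate": 0.0}))
```

Note that a metric recorded as zero before the upgrade, such as the error rate here, fails on any increase, which is a deliberately strict choice in this sketch.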

The digital twin is then destroyed, freeing the resources for the next application being onboarded, and the artifacts are promoted to the final pipeline (G). Artifacts are then tagged or labeled and accepted as candidates for integrations. For many service providers this final stage mirrors the artifacts into internal repositories. There, they can be consumed by pipelines testing multi-tenant and end-to-end integrations, combining the onboarded application with other third-party applications or services.
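The create, test and destroy lifecycle described above can be sketched in Python with a context manager, so the digital twin is torn down even when a stage fails. The `create` and `destroy` callables are hypothetical stand-ins for whatever provisions and removes the cluster; nothing here calls a real OpenShift API.

```python
from contextlib import contextmanager


@contextmanager
def digital_twin(create, destroy):
    """Create an ephemeral cluster and always destroy it, even if a stage fails."""
    cluster = create()
    try:
        yield cluster
    finally:
        destroy(cluster)


def run_runtime_pipelines(create, destroy, stages):
    """Run stages in order on a fresh twin; return True only if all of them pass."""
    with digital_twin(create, destroy) as cluster:
        for name, stage in stages:
            if not stage(cluster):
                print(f"stage failed: {name}")
                return False
    return True


# Example with fake provisioning functions that only record what happened.
log = []


def fake_create():
    log.append("create")
    return "twin-1"


def fake_destroy(cluster):
    log.append(f"destroy {cluster}")


accepted = run_runtime_pipelines(
    fake_create,
    fake_destroy,
    stages=[
        ("deploy and configure", lambda cluster: True),
        ("compliance checks", lambda cluster: True),
        ("upgrade and retest", lambda cluster: False),
    ],
)
print(accepted, log)
```

Only when the function returns `True` would the artifacts be tagged and promoted to the final pipeline; in every case the twin's resources are released for the next application.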

How Red Hat can help

A digital twin helps service providers test third-party applications before they are released into their production environments. The use of a digital twin mitigates the risk of deployment and helps enforce compatibility and compliance. With the hosted control planes capability provided by Red Hat OpenShift, service providers can build dedicated clusters for a digital twin, and use them with a number of pipelines to facilitate the onboarding of third-party applications. Once testing is complete, the digital twin can be quickly destroyed, freeing up resources to be used for future testing.

The adoption of pipelines can be achieved with Red Hat OpenShift Pipelines, a Kubernetes-native CI/CD solution based on Tekton. It builds on Tekton to provide a CI/CD experience through tight integration with Red Hat OpenShift and Red Hat developer tools. Red Hat OpenShift Pipelines is designed to run each step of the CI/CD pipeline in its own container, allowing each step to scale independently to meet the demands of the pipeline.

Red Hat OpenShift Serverless is a service based on the open source Knative project. It provides an enterprise-grade serverless platform which brings portability and greater consistency across hybrid and multi-cloud environments. This enables developers to create cloud-native, source-centric applications using a series of Custom Resource Definitions (CRDs) and associated controllers in Kubernetes which can then be used by pipelines to mitigate or outright eliminate conflicts among the configurations and CRDs.

To implement best practices and adhere to a service provider’s own IT security requirements, Red Hat Advanced Cluster Security for Kubernetes provides built-in controls for security policy enforcement across the entire software development cycle. This helps to reduce operational risk and increase developer productivity while still providing greater safeguards for cloud-native applications and the underlying infrastructure. It also helps to prevent operational and scalability issues while keeping pace with increasingly rapid release schedules.

In the next post, I will cover pipelines for lifecycle management and how those can be used to streamline the process to identify new software versions and validate compatibility with existing services.

About the author

William is a Product Manager in Red Hat's AI Business Unit and is a seasoned professional and inventor at the forefront of artificial intelligence. With expertise spanning high-performance computing, enterprise platforms, data science, and machine learning, William has a track record of introducing cutting-edge technologies across diverse markets. He now leverages this comprehensive background to drive innovative solutions in generative AI, addressing complex customer challenges in this emerging field. Beyond his professional role, William volunteers as a mentor to social entrepreneurs, guiding them in developing responsible AI-enabled products and services. He is also an active participant in the Cloud Native Computing Foundation (CNCF) community, contributing to the advancement of cloud native technologies.
