Thanks for joining me again as we continue to look at cgroup v2, which became available with Red Hat Enterprise Linux 8. This time around, I’d like to take a very deep look at the virtual file system used to control the cgroup controllers and the special files inside. Understanding this will be necessary for doing custom work that used to be the domain of libcgroup (first introduced in RHEL 6, and not recommended for use in RHEL 8). We’re also going to try some fun with cpusets, which are now fully working with RHEL and systemd for the first time ever!

Important: this erratum fixes a bug involving the SELinux policies and cpusets: RHBA-2020:2467. The erratum, released on June 10th, 2020, must be installed on your system before any of the cpuset examples will work properly, or at all if SELinux is in enforcing mode. And it should be in enforcing mode, so that Dan Walsh does not begin to weep. If your system has selinux-policy-3.14.3-41.el8_2.4 or higher installed (check via ‘rpm -q selinux-policy’), then you’re all set.
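If you manage more than a handful of machines, the version check can be scripted. The Python sketch below compares the installed selinux-policy version-release against the minimum named above. It uses a simplified, rpmvercmp-style comparison (numeric segments compare as integers, a numeric segment beats an alphabetic one, and more segments wins a tie). It ignores epochs and the `~`/`^` operators, so treat it as an approximation rather than rpm’s exact algorithm. The `rpm -q --qf` query format is real; the function names are made up for illustration.

```python
import re
import subprocess

# Minimum fixed version-release named in the text (from RHBA-2020:2467).
MIN_POLICY = "3.14.3-41.el8_2.4"


def _segments(s):
    # rpm-style tokenizing: runs of digits or letters; separators are dropped.
    return re.findall(r"\d+|[A-Za-z]+", s)


def vercmp(a, b):
    """Simplified rpmvercmp: -1, 0 or 1. Ignores epochs and '~'/'^'."""
    sa, sb = _segments(a), _segments(b)
    for x, y in zip(sa, sb):
        if x.isdigit() and y.isdigit():
            if int(x) != int(y):
                return -1 if int(x) < int(y) else 1
        elif x.isdigit() != y.isdigit():
            # A numeric segment counts as newer than an alphabetic one.
            return 1 if x.isdigit() else -1
        elif x != y:
            return -1 if x < y else 1
    # All shared segments are equal: more segments means newer.
    return (len(sa) > len(sb)) - (len(sa) < len(sb))


def compare_vr(a, b):
    """Compare 'VERSION-RELEASE' strings: version first, then release."""
    va, ra = a.rsplit("-", 1)
    vb, rb = b.rsplit("-", 1)
    return vercmp(va, vb) or vercmp(ra, rb)


def installed_policy():
    # Query only VERSION-RELEASE; avoids capture_output so Python 3.6 works too.
    result = subprocess.run(
        ["rpm", "-q", "--qf", "%{VERSION}-%{RELEASE}", "selinux-policy"],
        stdout=subprocess.PIPE, universal_newlines=True, check=True,
    )
    return result.stdout.strip()


def policy_is_fixed(version_release):
    return compare_vr(version_release, MIN_POLICY) >= 0
```

On a RHEL 8 host, `policy_is_fixed(installed_policy())` should return `True` once the fixed package (or anything newer) is installed.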

Drop-In Files

The preferred method for changing cgroup settings is the “systemctl set-property” command. It applies the change to the virtual file system and also makes it persistent by creating a drop-in file.
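For scripts, it can help to generate the set-property call and to know where its persistent drop-in ends up on RHEL 8 (/etc/systemd/system.control/<unit>.d/). This Python sketch builds the argument list, which avoids shell-quoting problems when passed as a list to `subprocess.run`. `--runtime` is a real `systemctl` option that makes the change temporary; the helper names are hypothetical.

```python
import subprocess
from pathlib import Path

# Persistent set-property drop-ins land here on RHEL 8 (per the article).
CONTROL_DIR = Path("/etc/systemd/system.control")


def set_property_cmd(unit, properties, runtime=False):
    """Build the argument list for `systemctl set-property`."""
    cmd = ["systemctl", "set-property"]
    if runtime:
        # Temporary change: it is lost on the next reboot.
        cmd.append("--runtime")
    cmd.append(unit)
    cmd.extend(f"{key}={value}" for key, value in properties.items())
    return cmd


def persistent_dropin_dir(unit):
    # Drop-in directories are named after the full unit name plus ".d".
    return CONTROL_DIR / f"{unit}.d"


def set_property(unit, properties, runtime=False):
    # Needs root; raises CalledProcessError if systemctl rejects the change.
    subprocess.run(set_property_cmd(unit, properties, runtime), check=True)
```

For example, `set_property("user-1000.slice", {"CPUQuota": "50%"})` runs `systemctl set-property user-1000.slice CPUQuota=50%`.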

If you use systemctl set-property to modify cgroup settings, a drop-in file is automatically created in /etc/systemd/system.control/slicename.slice.d/. If you’ve used drop-in files in the past, you will immediately recognize that this is “the wrong place!” Well, not necessarily wrong, but different, as drop-ins for RHEL 7 landed in /etc/systemd/system/slicename.slice.d/.

For instance, here is the folder for user 1000 after his CPU quota is changed:

These drop-in files take precedence over any that live in /etc/systemd/system, which is where all user-defined drop-ins used to live and where systemctl set-property used to write them. Again, please keep this in mind if you have scripts or playbooks that manipulate drop-ins.
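Scripts that inspect drop-ins need to look in both places. This Python sketch collects a unit’s `*.conf` drop-ins across a search path ordered from highest to lowest priority. It follows the rules in systemd.unit(5): a same-named file in a higher-priority directory hides the lower one, and drop-ins with different names are applied in lexical filename order regardless of their directory. The directory list is an assumed, simplified subset of systemd’s real search path, which varies between versions. `systemctl cat <unit>` shows what systemd actually merged.

```python
from pathlib import Path

# Simplified subset of the system-mode search path, highest priority first.
# Assumption: the full list differs between systemd versions.
SEARCH_PATH = [
    Path("/etc/systemd/system.control"),
    Path("/run/systemd/system.control"),
    Path("/etc/systemd/system"),
    Path("/run/systemd/system"),
    Path("/usr/lib/systemd/system"),
]


def effective_dropins(unit, search_path=SEARCH_PATH):
    """Return [(filename, path), ...] in the order systemd would apply them."""
    winners = {}
    for base in search_path:
        dropin_dir = Path(base) / f"{unit}.d"
        if not dropin_dir.is_dir():
            continue
        for conf in dropin_dir.glob("*.conf"):
            # First hit wins: a higher-priority directory masks same-named files.
            winners.setdefault(conf.name, conf)
    # Different names are applied in lexical order, whatever their directory.
    return sorted(winners.items())
```

Calling `effective_dropins("user-1000.slice")` on a live system lists which file supplies each setting, which makes a set-property drop-in shadowing a hand-written one easy to spot.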

Our Virtual File System: Where Things Actually Happen

It may be worth reviewing the fundamentals of cgroup v2, so feel free to jump back in time and re-read my prior entry in this series. I know, it’s been a while; I can wait right here while you do. Here’s the link: World domination with cgroups in RHEL 8: welcome cgroups v2!

Taking a look at /sys/fs/cgroup on Roland, we can see what the top level structure is for him, since he’s running cgroup v2.

The directories (which appear in blue) are, of course, our top-level slices, along with init.scope, where systemd itself (PID 1) lives. All of the other files are interface files holding settings for the cgroups at the current level of the directory tree.
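The same split between child cgroups and interface files can be checked programmatically. This Python sketch lists a cgroup directory, separating subdirectories (child cgroups such as slices and scopes) from interface files. It also treats the presence of `cgroup.controllers` at the mount point as a quick hint that the unified (v2) hierarchy is mounted there. That is a common heuristic, not an official API.

```python
import os
from pathlib import Path

CGROUP_ROOT = Path("/sys/fs/cgroup")


def is_cgroup_v2(root=CGROUP_ROOT):
    # Heuristic: cgroup.controllers only exists on the unified (v2) hierarchy.
    return (Path(root) / "cgroup.controllers").is_file()


def split_cgroup_dir(path=CGROUP_ROOT):
    """Return (child cgroups, interface files), each sorted by name."""
    children, files = [], []
    with os.scandir(path) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                children.append(entry.name)
            else:
                files.append(entry.name)
    return sorted(children), sorted(files)
```

On Roland, `split_cgroup_dir()` would return the slices as the first list and files such as `cgroup.controllers` and `cpuset.cpus.effective` as the second.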

Let’s see which CPUs are part of the cpuset for everyone.

Roland is a two core VM, so that makes all the sense in the world.

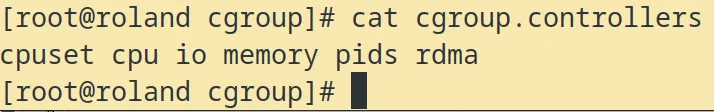
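The cpuset files use the kernel’s list format: comma-separated CPU numbers or dash ranges, such as `0-1` or `0-3,6`. This Python sketch reads `cpuset.cpus.effective` for a cgroup and expands it into a set of CPU numbers. The less common strided range syntax is not handled.

```python
from pathlib import Path


def parse_cpu_list(text):
    """Expand a kernel list string such as '0-3,6' into {0, 1, 2, 3, 6}."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue  # an empty file means an empty set
        lo, _, hi = part.partition("-")
        cpus.update(range(int(lo), int(hi or lo) + 1))
    return cpus


def effective_cpus(cgroup="/sys/fs/cgroup"):
    # cpuset.cpus.effective is read-only and reflects what the cgroup really gets.
    return parse_cpu_list((Path(cgroup) / "cpuset.cpus.effective").read_text())
```

On a two-CPU machine like Roland, `effective_cpus()` would be expected to return `{0, 1}`.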

Here are the active controllers:

Ahhh. It does feel good to see cpuset taking its rightful place with all its brethren.
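`cgroup.controllers` lists the controllers available in a cgroup, while `cgroup.subtree_control` lists the ones enabled for its children. Both hold a single space-separated line. This small Python sketch reads them to check whether cpuset is both available and passed down. The function names are illustrative.

```python
from pathlib import Path


def read_controllers(cgroup="/sys/fs/cgroup", name="cgroup.controllers"):
    # Both files hold one space-separated line, e.g. "cpuset cpu io memory pids".
    return set((Path(cgroup) / name).read_text().split())


def cpuset_status(cgroup="/sys/fs/cgroup"):
    """Return (available in this cgroup, enabled for its children)."""
    available = "cpuset" in read_controllers(cgroup)
    enabled = "cpuset" in read_controllers(cgroup, "cgroup.subtree_control")
    return available, enabled
```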

Let’s check out the current user.slice.

Yeah, looks like mrichter is on and about. No doubt causing trouble. Remember, a user’s slice is labeled with their UID.
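Because the slice name carries the UID rather than the login name, a reverse lookup is handy. This Python sketch scans `user.slice` for `user-<UID>.slice` directories and resolves each UID with the standard `pwd` module. It falls back to the bare UID when there is no passwd entry.

```python
import pwd
import re
from pathlib import Path

USER_SLICE = re.compile(r"user-(\d+)\.slice$")


def logged_in_users(user_slice="/sys/fs/cgroup/user.slice"):
    """Map UID -> login name for every user-<UID>.slice under user.slice."""
    users = {}
    for child in Path(user_slice).iterdir():
        m = USER_SLICE.match(child.name)
        if m and child.is_dir():
            uid = int(m.group(1))
            try:
                users[uid] = pwd.getpwuid(uid).pw_name
            except KeyError:
                users[uid] = str(uid)  # no passwd entry for this UID
    return users
```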

Now, let’s find mrichter’s ssh session. We could just use systemd-cgls, but what fun is that?

Looks like we won our game of “guess the PID” on the first try. Let’s talk about what we’re actually seeing here.

With systemd, every user gets a slice named user-[UID].slice. However, this is not where the actual processes “live” in the cgroup tree. Rather, each login session spawns a scope sub-unit (in this case, session-7.scope).
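To go the other way, from a PID to its cgroup, you can read `/proc/<PID>/cgroup`. On a pure cgroup v2 system it has a single line of the form `0::<path>`, where the path is relative to `/sys/fs/cgroup`. This Python sketch extracts that path and then picks out the user slice and session scope, if any.

```python
import re
from pathlib import Path


def cgroup_v2_path(pid="self"):
    """Return the v2 cgroup path of a PID, e.g. '/user.slice/.../session-7.scope'."""
    for line in Path(f"/proc/{pid}/cgroup").read_text().splitlines():
        hierarchy_id, _controllers, path = line.split(":", 2)
        if hierarchy_id == "0":  # "0::<path>" is the unified hierarchy entry
            return path
    return None


def session_of(path):
    """Return (user slice, session scope) found in a cgroup path, or None for each."""
    user_slice = re.search(r"user-\d+\.slice", path or "")
    scope = re.search(r"session-[^/]+\.scope", path or "")
    return (user_slice.group(0) if user_slice else None,
            scope.group(0) if scope else None)
```

For the ssh session above, `session_of(cgroup_v2_path(pid))` would be expected to return the user’s slice together with `session-7.scope`.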

Let’s have mrichter log in another session.

And now let’s check his slice.

Look at that...another session scope (good ol’ number 9) has spawned. Let’s look at the systemd-cgls output for that.

We see the two session scopes plus another unit that systemd fired up when mrichter logged in: user@[UID].service, his per-user systemd instance. Some interesting bits live in there, but it’s mostly used for backend systemd stuff and honestly probably not something we want to muck with... at least not today.
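A rough, text-only stand-in for `systemd-cgls` on a single user slice is to walk the slice and read each child’s `cgroup.procs`. This Python sketch does exactly that. Process names come from `/proc/<PID>/comm`, and any process that exits between the two reads is skipped.

```python
from pathlib import Path


def comm(pid):
    try:
        return Path(f"/proc/{pid}/comm").read_text().strip()
    except OSError:
        return None  # the process exited in the meantime


def tree(cgroup, depth=0):
    """Yield (depth, cgroup name, [(pid, command name), ...]) recursively."""
    cgroup = Path(cgroup)
    procs = []
    for pid in map(int, (cgroup / "cgroup.procs").read_text().split()):
        name = comm(pid)
        if name is not None:
            procs.append((pid, name))
    yield depth, cgroup.name, procs
    for child in sorted(p for p in cgroup.iterdir() if p.is_dir()):
        yield from tree(child, depth + 1)
```

For example, with a hypothetical UID of 1000: `for depth, name, procs in tree("/sys/fs/cgroup/user.slice/user-1000.slice"): print("  " * depth + name, procs)`.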

We’ve looked at a few interface files. Some of them are read-only (which allows you to check the current status of something) and some of them are read/write (which is fundamentally how you change the behavior of a cgroup or the processes inside it). The interface files found within the directories are documented at kernel.org; check that out if you want an overview of pretty much everything that can be read or changed. At that link, you’ll see the core interface files common to all cgroups, followed by the controller-specific files.
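The read-only versus read/write distinction also shows up in the file permissions, because cgroupfs creates read-only interface files without any write bit. This Python sketch classifies the interface files in a cgroup directory on that basis. A write bit only means that root may attempt a write; the kernel can still reject the value.

```python
import stat
from pathlib import Path


def classify_interface_files(cgroup="/sys/fs/cgroup"):
    """Return (read_only, writable) lists of interface file names."""
    read_only, writable = [], []
    for entry in sorted(Path(cgroup).iterdir()):
        if entry.is_dir():
            continue  # child cgroups, not interface files
        mode = entry.stat().st_mode
        if mode & (stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH):
            writable.append(entry.name)
        else:
            read_only.append(entry.name)
    return read_only, writable
```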

Oh Happy Day! Now cpusets “Just Work”!

The cpuset controller has been around for quite some time already. If you recall, I spun a tale of how to use it under RHEL 7, but it required some shenanigans.

No more, my friends! Now systemd has complete control over the cpuset controller. Let’s see how that works.

First of all, systemctl set-property supports two cpuset settings: AllowedCPUs and AllowedMemoryNodes. Let’s talk about AllowedMemoryNodes first. This setting restricts processes to specific memory NUMA nodes, meaning their memory is allocated only from those nodes. You pass it a list of memory NUMA node indices or ranges separated by commas. Ranges are specified by the lower and upper node indices separated by a dash.

Right. Huh?

If you’re not familiar with our friend non-uniform memory access (NUMA), allow me to explain. It’s a technology that gives each physical processor (which today will contain multiple cores) a local memory bank. Any core can access any piece of memory in the entire system, but access to its local NUMA bank is faster.

For workloads requiring the absolute best performance, one can use tools to make sure that processes access the local NUMA bank of the physical CPU they run on. To use this setting properly, you need to know which of your system’s cores are associated with which NUMA bank. There is no shortcut here; you need to dig around and actually learn the hardware. Not all systems are NUMA capable, so you’ll sometimes see only node 0. Like poor Roland... he’s a VM on a Red Hat Virtualization system, without NUMA support.
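One place to start that digging is sysfs. On Linux, each NUMA node appears as `/sys/devices/system/node/node<N>/`, and its `cpulist` file names the CPUs local to that node. This Python sketch maps node numbers to CPU sets, then builds `AllowedCPUs=`/`AllowedMemoryNodes=` values that keep a cgroup on a single node. The pinning helper reflects one reasonable policy and is hypothetical. On a VM like Roland you would typically see a single node 0. `lscpu` reports similar information.

```python
import re
from pathlib import Path

NODE_DIR = Path("/sys/devices/system/node")


def parse_list(text):
    """Expand a kernel list string like '0-3,8' into a set of ints."""
    values = set()
    for part in filter(None, text.strip().split(",")):
        lo, _, hi = part.partition("-")
        values.update(range(int(lo), int(hi or lo) + 1))
    return values


def format_list(values):
    """Collapse e.g. {0, 1, 2, 5} into '0-2,5' for AllowedCPUs=/AllowedMemoryNodes=."""
    nums, parts, i = sorted(set(values)), [], 0
    while i < len(nums):
        j = i
        while j + 1 < len(nums) and nums[j + 1] == nums[j] + 1:
            j += 1  # extend the run of consecutive numbers
        parts.append(str(nums[i]) if i == j else f"{nums[i]}-{nums[j]}")
        i = j + 1
    return ",".join(parts)


def numa_topology(node_dir=NODE_DIR):
    """Return {node number: set of CPUs local to that node}."""
    topology = {}
    for node in Path(node_dir).iterdir():
        m = re.fullmatch(r"node(\d+)", node.name)
        if m:
            topology[int(m.group(1))] = parse_list((node / "cpulist").read_text())
    return dict(sorted(topology.items()))


def pin_to_node(node, topology):
    """Hypothetical policy: keep a cgroup's CPUs and memory on one node."""
    return {"AllowedCPUs": format_list(topology[node]),
            "AllowedMemoryNodes": str(node)}
```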

Oh yeah. Let’s talk about the concept of “allowed” versus “effective”. Remember, a child cgroup inherits settings from the parent above. Perhaps you’re running on an 8 core system. You would expect that a cgroup would have processors 0-7 available. It’s possible that a parent cgroup might have a more restrictive setting, so the “effective” interface file for the child shows the actual value. You’ll also see situations where a cgroup doesn’t have the setting explicitly set, but the “effective” file shows what the reality is. In the following example, the user slice doesn’t have cpuset.cpus set, so it inherits the global parent setting:

And no, the child may NOT modify the setting to use resources above and beyond what the parent can use. In other words, we can’t go ahead and set user.slice to allow cpus 0-2, since the parent only allows 0-1.
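The relationship between the two files can be modeled in a few lines. This Python sketch follows the rules in the kernel’s cgroup-v2 documentation. An empty `cpuset.cpus` inherits the parent’s effective CPUs. Otherwise the child gets the overlap with its parent, and if there is no overlap at all it falls back to the parent’s set. This is a simplified model that ignores CPU hotplug and cpuset partitions.

```python
def effective_cpus(requested, parent_effective):
    """Model cpuset.cpus.effective from cpuset.cpus and the parent's effective set."""
    if not requested:
        # Nothing set explicitly: inherit everything the parent has.
        return set(parent_effective)
    granted = set(requested) & set(parent_effective)
    # If none of the requested CPUs can be granted, behave as if unset.
    return granted or set(parent_effective)


all_cpus = {0, 1, 2, 3}
print(effective_cpus(set(), all_cpus))  # {0, 1, 2, 3}: inherited
print(effective_cpus({1, 2}, {0, 1}))   # {1}: capped by the parent
```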

Now let’s talk about the star of the show, AllowedCPUs. Processes in a cgroup will only be scheduled on a CPU that is part of the list. The most common use case for this would be “I have a latency sensitive application. It’s important to make sure that this application has plenty of processing power and is not starved by other applications that may also need CPU cycles.” For example, many financial trading systems are super time sensitive. Failing to execute a transaction in time can result in a real world loss of money and angry customers.

By using cpusets, we can place workloads on specific processors. We can also assign default cpusets for top level slices. So for our example on Roland, we’re going to do a few things.

- I’m going to give Roland 2 more virtual CPUs, so he’ll now be a 4-core VM.
- A new user will be created to represent the latency sensitive application.
- We’ll lock down both the system daemons and mrichter so they can’t use our new user’s CPUs.

Bear with me for a minute while I fire up Red Hat Virtualization manager and fiddle with Roland’s settings.

Ok, back. Roland is ready to rock!

Let’s proceed with a new user.

We’ll just let the “password too short” error message go.

Anyway, the application that Elsa will be running is good old “foo,” which is really a Bash script that pumps numbers endlessly from /dev/zero to sha1sum. It’s great for generating processor load. But let’s pretend it’s actually performing critical functions to predict regional snow storms to feed to multiple clients. Delays in processing will cause a cascade effect of nasty failures, probably leading to the land being covered in endless ice.
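If you want a stand-in for foo without writing the Bash script, this Python sketch does the same kind of work: it feeds blocks of zero bytes to SHA-1 until a time limit is reached. It is an illustrative substitute, not the author’s script. A single Python process keeps roughly one CPU busy, so start several to load several CPUs.

```python
import hashlib
import time

BLOCK = bytes(1 << 20)  # 1 MiB of zeros, like reading from /dev/zero


def burn(seconds):
    """Hash zero blocks for `seconds`; return the number of bytes hashed."""
    digest = hashlib.sha1()
    deadline = time.monotonic() + seconds
    hashed = 0
    while time.monotonic() < deadline:
        digest.update(BLOCK)
        hashed += len(BLOCK)
    return hashed
```

For example, `burn(600)` keeps one CPU busy for ten minutes.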

With 4 cores available, the following plan seems reasonable. Let’s give Elsa cores 2 and 3. Let’s give the other users (mrichter and root) core 1. Finally, we’ll place system services, such as daemons, on core 0. In fact, let’s do that now.
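Written down as data, the layout can be sanity-checked in a script. This Python sketch encodes the plan as slice-to-CPU assignments, verifies that no other slice may use Elsa’s CPUs, and prints matching `systemctl set-property` commands. Root’s slice is `user-0.slice`; the UIDs for mrichter and Elsa are hypothetical placeholders, not the ones from Roland.

```python
import shlex

# Layout from the plan above. Root's UID is 0; the other UIDs are hypothetical.
PLAN = {
    "system.slice": {0},
    "user-0.slice": {1},        # root
    "user-1000.slice": {1},     # mrichter (hypothetical UID)
    "user-1001.slice": {2, 3},  # Elsa (hypothetical UID)
}


def check_reserved(plan, reserved_slice):
    """Raise ValueError if any other slice may run on the reserved CPUs."""
    reserved = plan[reserved_slice]
    for name, cpus in plan.items():
        shared = cpus & reserved
        if name != reserved_slice and shared:
            raise ValueError(f"{name} shares CPUs {sorted(shared)} with {reserved_slice}")


def commands(plan):
    """Yield one `systemctl set-property ... AllowedCPUs=...` argv per slice."""
    for name, cpus in plan.items():
        value = ",".join(str(cpu) for cpu in sorted(cpus))
        yield ["systemctl", "set-property", name, f"AllowedCPUs={value}"]


check_reserved(PLAN, "user-1001.slice")
for argv in commands(PLAN):
    print(" ".join(shlex.quote(arg) for arg in argv))
```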

When using the set-property command, the change is immediate. In fact, anything under the system.slice that was scheduled on a CPU other than 0 is now running on just 0.
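One way to confirm that the change took effect is to ask the scheduler which CPUs each process is allowed to use. This Python sketch walks a slice, collects every PID from the `cgroup.procs` files beneath it, and reports any process whose affinity reaches outside the expected set. The affinity comes from `os.sched_getaffinity`, a Linux-only standard-library call. Processes that exit mid-scan are skipped.

```python
import os
from pathlib import Path


def pids_under(cgroup):
    """Yield every PID in cgroup and all of its descendants."""
    for procs in Path(cgroup).rglob("cgroup.procs"):
        for field in procs.read_text().split():
            yield int(field)


def stray_processes(cgroup, allowed):
    """Return {pid: cpus} for processes allowed on CPUs outside `allowed`."""
    strays = {}
    for pid in pids_under(cgroup):
        try:
            cpus = os.sched_getaffinity(pid)
        except ProcessLookupError:
            continue  # exited since we read cgroup.procs
        if not cpus <= set(allowed):
            strays[pid] = cpus
    return strays
```

After the change above, `stray_processes("/sys/fs/cgroup/system.slice", {0})` should return an empty dict.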

Now we’ll move our other users to CPU 1.

Let’s test that with root. We’ll have him fire up foo and then check top.

It’s running, what does top say?

Looks good. Let’s kill the job and set Elsa up.

Now we’ll login as Elsa and start some work.

Perfect!
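To check programmatically where processes actually run, one option is `/proc/<PID>/stat`, whose 39th field (“processor” in proc(5)) is the CPU the task last executed on. This Python sketch groups PIDs with a given command name by that CPU. Assuming foo’s pipeline runs a `sha1sum` process, as described above, `cpus_by_command("sha1sum")` should show only Elsa’s CPUs. The command name in the stat line is wrapped in parentheses and may contain spaces, so the parser splits after the last `)`.

```python
from collections import defaultdict
from pathlib import Path


def last_cpu(stat_line):
    """Return field 39 ('processor') from a /proc/<pid>/stat line."""
    # Fields after the ')' that closes comm start at field 3 (state).
    rest = stat_line[stat_line.rfind(")") + 2:].split()
    return int(rest[39 - 3])


def cpus_by_command(name):
    """Map CPU number -> PIDs whose command name (comm) equals `name`."""
    result = defaultdict(list)
    for entry in Path("/proc").iterdir():
        if not entry.name.isdigit():
            continue
        try:
            stat = (entry / "stat").read_text()
        except OSError:
            continue  # the process vanished
        comm = stat[stat.find("(") + 1:stat.rfind(")")]
        if comm == name:
            result[last_cpu(stat)].append(int(entry.name))
    return dict(result)
```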

Another way to check which processor a process is on is the ps -eo pid,psr,user,cmd command. You’ll get four columns: the PID, the processor it is currently assigned to (PSR), the user and the command line. Here’s an example with two instances of “foo” running.

Remember, drop-ins can be pre-populated into /etc/systemd/system for new users. That way, as new folk join the team, they will have the proper cpuset (or other cgroup) settings. Be warned, though: using systemctl set-property to change a setting that such a drop-in provides writes a new drop-in under /etc/systemd/system.control, which overrides the one in /etc/systemd/system. That might be tricky to catch.
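Pre-populating a drop-in is just a matter of writing a small unit-file fragment. This Python sketch writes `[Slice]` settings for a user slice under `/etc/systemd/system/<unit>.d/`. The drop-in file name and the UID in the usage line are hypothetical. systemd only picks up hand-written drop-ins after a `systemctl daemon-reload`.

```python
from pathlib import Path


def write_dropin(unit, filename, settings, section="Slice", root="/etc/systemd/system"):
    """Write settings as <root>/<unit>.d/<filename> and return its path."""
    dropin_dir = Path(root) / f"{unit}.d"
    dropin_dir.mkdir(parents=True, exist_ok=True)
    lines = [f"[{section}]"] + [f"{key}={value}" for key, value in settings.items()]
    path = dropin_dir / filename
    path.write_text("\n".join(lines) + "\n")
    return path
```

For example, running `write_dropin("user-1002.slice", "50-cpuset.conf", {"AllowedCPUs": "1"})` as root, followed by `systemctl daemon-reload`, would pin a future user with the hypothetical UID 1002 to CPU 1.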

Thanks once again for joining me on a fun nerd-fest. I’ll be sure to keep everyone updated as cgroups change or as we discover new and exciting use cases for them. Until then, be well!

About the author

Marc Richter (RHCE) is a Principal Technical Account Manager (TAM) in the US Northeast region. Prior to coming to Red Hat in 2015, Richter spent 10 years as a Linux administrator and engineer at Merck. He has been a Linux user since the late 1990s and a computer nerd since his first encounter with the Apple 2 in 1978. His focus at Red Hat is RHEL Platform, especially around performance and systems management.
