This article highlights the benefits of using the pull model to manage multiple Kubernetes clusters with a CD system such as Argo CD. It explains how to enhance Argo CD without significant code changes to your existing applications and how to migrate existing Open Cluster Management (OCM) AppSubscription users to Argo CD. The article focuses mainly on the upstream version of Red Hat Advanced Cluster Management (RHACM), but it also explains how to use the pull model with RHACM 2.8, where the feature is introduced as a Technology Preview.

Introducing the pull model

Argo CD is a CNCF-graduated project that uses a GitOps approach to continuously manage and deploy applications on Kubernetes clusters. Open Cluster Management (OCM) is a CNCF Sandbox project that focuses on managing a fleet of Kubernetes clusters at scale.

By utilizing OCM, users can now enable the optional Argo CD pull model architecture, which offers flexibility that may be better suited for certain scenarios.

Argo CD currently utilizes a push model architecture where the workload is pushed from a centralized cluster to remote clusters, requiring a connection from the centralized cluster to the remote destinations.

In the pull model, the Argo CD Application CR is distributed from the centralized cluster to the remote clusters. Each remote cluster independently reconciles and deploys the application using the received CR. Subsequently, the application status is reported back from the remote clusters to the centralized cluster, resulting in a user experience (UX) that is similar to the push model.

One of the main use cases for the optional pull model is a network topology where the centralized cluster cannot reach the remote clusters, but the remote clusters can communicate with the centralized cluster. In such situations, the push model is not practical.

Another advantage of the pull model is decentralized control, where each cluster has its own copy of the configuration and is responsible for pulling updates independently. The hub-managed architecture using Argo CD and the pull model can reduce the need for a centralized system to manage the configurations of all target clusters, making the system more scalable and easier to manage. However, note that the hub cluster itself still represents a potential single point of failure, which you should address through redundancy or other means.

Additionally, the pull model offers better security by reducing the risk of unauthorized access and eliminating the need for storing remote cluster credentials in a centralized environment. The pull model also provides more flexibility, allowing clusters to pull updates on their own schedule and reducing the risk of conflicts or disruptions.

OCM key components and Argo CD pull model integration

The following sections describe the OCM components that the pull model relies on and how to integrate them with Argo CD.

Configure managed clusters

Run the following command to list the managed clusters, as seen in the example:

oc get managedclusters -l cloud=AWS
NAME             HUB ACCEPTED   JOINED   AVAILABLE   AGE
demo-managed-0   True           True     True        17h
demo-managed-1   True           True     True        16h

Use Placement to dynamically select a set of managed clusters

See the OCM documentation for a detailed description of the Placement CRD, which is a central component of the multicluster solution.

Architecture and dependencies

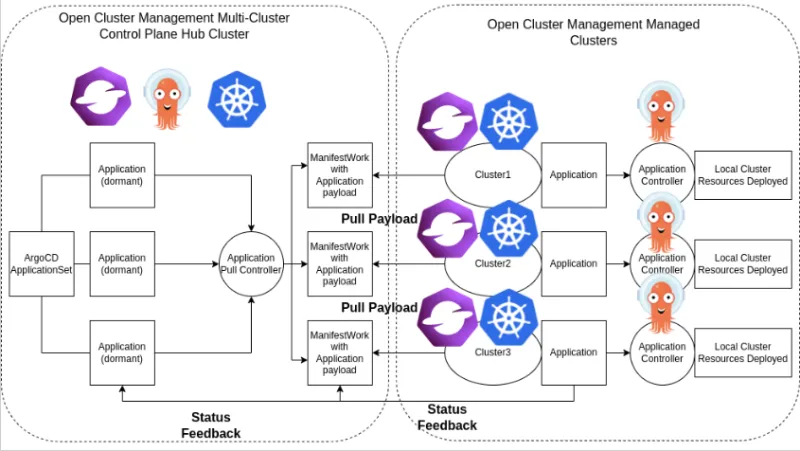

The Argo CD pull model controller on the hub cluster creates ManifestWork objects, wrapping Application objects as payload.

See the OCM documentation for more information about ManifestWork, which is a central concept for delivering workloads to the managed clusters.

The Open Cluster Management agent on the managed cluster notices the ManifestWork on the hub cluster and pulls the Application from there.
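As a rough illustration of the wrapping step, the following Python sketch builds a ManifestWork around an Application. It is not the controller's actual code: the function name `wrap_in_manifestwork` and its parameters are hypothetical, and the field layout simply mirrors the sample ManifestWork that appears later in this article. The generated name suffix is passed in by the caller because the article does not describe how the controller derives it.

```python
# Feedback rules that report the Application's health and sync status
# back to the hub (same JSON paths as the sample ManifestWork in this article).
FEEDBACK_PATHS = {
    "healthStatus": ".status.health.status",
    "syncStatus": ".status.sync.status",
}


def wrap_in_manifestwork(application: dict, cluster: str, work_name: str) -> dict:
    """Wrap an Argo CD Application in a ManifestWork for one managed cluster.

    Hypothetical helper for illustration only.
    """
    meta = application["metadata"]
    return {
        "apiVersion": "work.open-cluster-management.io/v1",
        "kind": "ManifestWork",
        # On the hub, the ManifestWork is created in the namespace
        # that is named after the managed cluster.
        "metadata": {"name": work_name, "namespace": cluster},
        "spec": {
            # The Application itself is the payload.
            "workload": {"manifests": [application]},
            "manifestConfigs": [
                {
                    "resourceIdentifier": {
                        "group": "argoproj.io",
                        "resource": "applications",
                        "name": meta["name"],
                        "namespace": meta["namespace"],
                    },
                    "feedbackRules": [
                        {"type": "JSONPaths", "jsonPaths": [{"name": name, "path": path}]}
                        for name, path in FEEDBACK_PATHS.items()
                    ],
                }
            ],
        },
    }
```

The agent on the managed cluster would then apply the single manifest in `spec.workload.manifests`, which is how the Application reaches the local Argo CD instance.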

The following image is an overview of the new pull model architecture:

Set up the solution by using Open Cluster Management

First, set up your Open Cluster Management (OCM) multicluster environment.

Note: Currently, OCM does not have a UI.

See the OCM website to set up a quick demo environment based on kind.

In the overall solution, the OCM hub cluster provides the cluster inventory and ability to deliver workloads to the managed clusters.

Both the hub cluster and the managed clusters need Argo CD installed. See the Argo CD website for more details.

Install Argo CD

1. Install Argo CD on both the hub cluster and the managed clusters:

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

2. Clone the Argo CD Pull Integration project and install the pull controller on the hub cluster:

git clone https://github.com/open-cluster-management-io/argocd-pull-integration

cd argocd-pull-integration

kubectl apply -f deploy/install.yaml

If your controller starts successfully, you should see the following output:

$ kubectl -n argocd get deploy | grep pull

argocd-pull-integration-controller-manager 1/1 1 1 106s

3. On the hub cluster, create an Argo CD cluster secret that represents the managed cluster.

Note: Replace <cluster-name> with the name of the registered managed cluster.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: <cluster-name>-secret # cluster1-secret
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: <cluster-name> # cluster1
  server: https://<cluster-name>-control-plane:6443 # https://cluster1-control-plane:6443
EOF

4. On the hub cluster, apply the manifests in the example/hub folder in the repository:

kubectl apply -f example/hub

5. On the managed cluster, apply the manifests in the example/managed folder:

kubectl apply -f example/managed

6. On the hub cluster, apply the guestbook-app-set manifest:

kubectl apply -f example/guestbook-app-set.yaml
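The cluster secret created earlier in this procedure has to exist once per managed cluster. When several clusters are registered, a small generator can save typing. The following Python sketch renders the same Secret for a list of cluster names as a JSON `List` that `kubectl apply -f -` accepts. The function names are hypothetical, and the server URL pattern is an assumption taken from the kind-based example above; adjust it for other environments.

```python
import json


def cluster_secret(cluster_name: str) -> dict:
    """Build the Argo CD cluster secret for one managed cluster."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": f"{cluster_name}-secret",
            "namespace": "argocd",
            "labels": {"argocd.argoproj.io/secret-type": "cluster"},
        },
        "type": "Opaque",
        "stringData": {
            "name": cluster_name,
            # Assumption: kind-style control plane host name, as in the example.
            "server": f"https://{cluster_name}-control-plane:6443",
        },
    }


def render_secret_list(cluster_names) -> str:
    """Render all secrets as one JSON List document."""
    items = [cluster_secret(name) for name in cluster_names]
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2)


if __name__ == "__main__":
    # Hypothetical cluster names; replace with your registered managed clusters.
    print(render_secret_list(["cluster1", "cluster2"]))
```

Saved as a script (for example under the hypothetical name render_secrets.py), its output could be piped into `kubectl apply -f -` on the hub cluster.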

Note: The Application template inside the ApplicationSet must contain the following content:

labels:
  apps.open-cluster-management.io/pull-to-ocm-managed-cluster: 'true'
annotations:
  argocd.argoproj.io/skip-reconcile: 'true'
  apps.open-cluster-management.io/ocm-managed-cluster: '{{name}}'

- The pull-to-ocm-managed-cluster label allows the pull model controller to select the Application for processing.

- The skip-reconcile annotation prevents the Application from reconciling on the hub cluster.

- The ocm-managed-cluster annotation allows the ApplicationSet to generate multiple Applications, one for each cluster generator target.
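Forgetting one of these three entries is an easy mistake to make, so it can help to check a template before applying it. The following Python sketch reports which required entries are missing from the `metadata` of an Application template. The function name is hypothetical, and the check on the value `true` is an assumption based on the example above.

```python
PULL_LABEL = "apps.open-cluster-management.io/pull-to-ocm-managed-cluster"
SKIP_RECONCILE = "argocd.argoproj.io/skip-reconcile"
MANAGED_CLUSTER = "apps.open-cluster-management.io/ocm-managed-cluster"


def missing_pull_model_metadata(template_metadata: dict) -> list:
    """Return a description of each required label or annotation that is missing."""
    labels = template_metadata.get("labels") or {}
    annotations = template_metadata.get("annotations") or {}
    missing = []
    if labels.get(PULL_LABEL) != "true":
        missing.append(f"label {PULL_LABEL}")
    if annotations.get(SKIP_RECONCILE) != "true":
        missing.append(f"annotation {SKIP_RECONCILE}")
    # Any non-empty value is accepted here, for example the '{{name}}' placeholder.
    if not annotations.get(MANAGED_CLUSTER):
        missing.append(f"annotation {MANAGED_CLUSTER}")
    return missing
```

An empty result means the template carries everything the note above asks for.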

When this guestbook ApplicationSet reconciles, it generates an Application for the registered managed cluster, as seen in the following example:

$ kubectl -n argocd get appset
NAME            AGE
guestbook-app   84s

$ kubectl -n argocd get app
NAME                     SYNC STATUS   HEALTH STATUS
cluster1-guestbook-app

The pull controller wraps the Application on the hub cluster with a ManifestWork. See the following example:

$ kubectl -n cluster1 get manifestwork
NAME                          AGE
cluster1-guestbook-app-d0e5   2m41s

On the managed cluster, you should see that the Application is pulled down successfully. For example:

$ kubectl -n argocd get app
NAME                     SYNC STATUS   HEALTH STATUS
cluster1-guestbook-app   Synced        Healthy

$ kubectl -n guestbook get deploy
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
guestbook-ui   1/1     1            1           7m36s

On the hub cluster, the status controller syncs the Application with the ManifestWork status feedback. For example:

$ kubectl -n argocd get app
NAME                     SYNC STATUS   HEALTH STATUS
cluster1-guestbook-app   Synced        Healthy

Set up pull model

1. Install the OpenShift GitOps operator on the hub cluster and on every target managed cluster, in the openshift-gitops namespace.

2. Create a GitOpsCluster resource that references a Placement resource. The Placement resource selects all the managed clusters that need to support the pull model. As a result, managed cluster secrets are created in the Argo CD server namespace. Every managed cluster needs a cluster secret in the Argo CD server namespace on the hub cluster, because the Argo CD ApplicationSet controller requires it to propagate the Argo CD application template for that managed cluster. See the following example:

apiVersion: apps.open-cluster-management.io/v1beta1
kind: GitOpsCluster
metadata:
  name: argo-acm-importer
  namespace: openshift-gitops
spec:
  argoServer:
    cluster: notused
    argoNamespace: openshift-gitops
  placementRef:
    kind: Placement
    apiVersion: cluster.open-cluster-management.io/v1beta1
    name: bgdk-app-placement # the placement can select all clusters
    namespace: openshift-gitops

3. Make sure that every namespace on each managed cluster where the Argo CD application will be deployed is managed by the Argo CD Application controller service account. To do so, add the argocd.argoproj.io/managed-by label to the namespace, as in the following example:

apiVersion: v1
kind: Namespace
metadata:
  name: mortgage2
  labels:
    argocd.argoproj.io/managed-by: openshift-gitops

4. Configure the Argo CD Application controller on the hub cluster to ignore the Argo CD applications that the pull model propagates. For OpenShift GitOps operator 1.9.0 and later, add the following annotation to the template annotations of the Argo CD ApplicationSet:

argocd.argoproj.io/skip-reconcile: "true"

For more details, see the OpenShift GitOps operator documentation.

5. Explicitly declare all application destination namespaces in the Git repo or Helm repo for the application, and include the managed-by label in each namespace, as in the Namespace manifest shown in step 3.

To deploy applications using the pull model, the Argo CD application controllers on the hub cluster must ignore these application resources. The recommended way to achieve this is to add the argocd.argoproj.io/skip-reconcile annotation to the template section of the ApplicationSet. On the Red Hat Advanced Cluster Management (RHACM) hub cluster, the OpenShift GitOps operator must be version 1.9.0 or later. On the managed clusters, the OpenShift GitOps operator should be at the same version level as on the hub cluster.
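The version requirements can be expressed as a small check. The following Python sketch is only an illustration: the function names are hypothetical, it assumes plain `X.Y.Z` version strings, and it interprets "the same level" as an exact version match, which may be stricter than what is actually required.

```python
MINIMUM_VERSION = (1, 9, 0)


def parse_version(version: str) -> tuple:
    """Turn a plain 'X.Y.Z' string into a tuple of integers for comparison."""
    return tuple(int(part) for part in version.split("."))


def gitops_version_problems(hub_version: str, managed_versions: dict) -> list:
    """List violations of the operator version requirements for the pull model."""
    problems = []
    if parse_version(hub_version) < MINIMUM_VERSION:
        problems.append(f"hub: {hub_version} is older than 1.9.0")
    # Assumption: a managed cluster matches the hub only on an identical version.
    for cluster, version in sorted(managed_versions.items()):
        if parse_version(version) != parse_version(hub_version):
            problems.append(f"{cluster}: {version} differs from hub version {hub_version}")
    return problems
```

For example, a hub at 1.9.1 with a managed cluster at 1.9.0 would be reported as a mismatch under these assumptions.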

ApplicationSet CRD architecture

The Argo CD ApplicationSet CRD is used to deploy applications on the managed clusters using the push or pull model. It uses a placement resource in the generator field to get a list of managed clusters. The template field supports parameter substitution of specifications for the application. The Argo CD ApplicationSet controller on the hub cluster manages the application creation for each target cluster.
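To make the parameter substitution concrete, the following Python sketch expands a template once per cluster name, the way a generator result with a `name` parameter would be applied. This is a simplified stand-in, not Argo CD's templating implementation, and the function names are hypothetical.

```python
def render_template(template, params: dict):
    """Recursively replace {{key}} placeholders in all string values."""
    if isinstance(template, str):
        for key, value in params.items():
            template = template.replace("{{" + key + "}}", value)
        return template
    if isinstance(template, dict):
        return {k: render_template(v, params) for k, v in template.items()}
    if isinstance(template, list):
        return [render_template(v, params) for v in template]
    # Numbers, booleans, and None are returned unchanged.
    return template


def generate_applications(template: dict, cluster_names) -> list:
    """Produce one rendered Application per managed cluster selected by the placement."""
    return [render_template(template, {"name": name}) for name in cluster_names]
```

With a template whose `metadata.name` is `{{name}}-guestbook-app` and the clusters `pcluster1` and `pcluster2`, this yields the Applications `pcluster1-guestbook-app` and `pcluster2-guestbook-app`.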

For the pull model, the destination for the application must be the default local Kubernetes server (https://kubernetes.default.svc) because the application controller on the managed cluster deploys the application locally. By default, the push model is used to deploy the application unless the apps.open-cluster-management.io/ocm-managed-cluster annotation and the apps.open-cluster-management.io/pull-to-ocm-managed-cluster label are added to the template section of the ApplicationSet.

The following is a sample ApplicationSet YAML that uses the pull model:

apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: guestbook-allclusters-app-set
  namespace: openshift-gitops
spec:
  generators:
  - clusterDecisionResource:
      configMapRef: ocm-placement-generator
      labelSelector:
        matchLabels:
          cluster.open-cluster-management.io/placement: aws-app-placement
      requeueAfterSeconds: 30
  template:
    metadata:
      annotations:
        apps.open-cluster-management.io/ocm-managed-cluster: '{{name}}'
        apps.open-cluster-management.io/ocm-managed-cluster-app-namespace: openshift-gitops
        argocd.argoproj.io/skip-reconcile: "true"
      labels:
        apps.open-cluster-management.io/pull-to-ocm-managed-cluster: "true"
      name: '{{name}}-guestbook-app'
    spec:
      destination:
        namespace: guestbook
        server: https://kubernetes.default.svc
      project: default
      source:
        path: guestbook
        repoURL: https://github.com/argoproj/argocd-example-apps.git
      syncPolicy:
        automated: {}
        syncOptions:
        - CreateNamespace=true

Controller architecture

There are two sets of controllers on the hub cluster watching the ApplicationSet resources:

- The existing Argo CD application controllers

- The new propagation controller

Annotations in the application resource determine which controller reconciles and deploys the application. The Argo CD application controllers, used for the push model, ignore applications that contain the argocd.argoproj.io/skip-reconcile annotation. The propagation controller, which supports the pull model, reconciles only applications that contain the apps.open-cluster-management.io/ocm-managed-cluster annotation. It generates a ManifestWork to deliver the application to the managed cluster, and the value of the ocm-managed-cluster annotation determines which managed cluster receives it.
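The annotation-based split between the two controllers can be summarized in a few lines. The following Python sketch models only the decision described above. The controller labels and function names are hypothetical, and treating only the value `true` as "skip" is an assumption about how the skip-reconcile annotation is evaluated.

```python
SKIP_RECONCILE = "argocd.argoproj.io/skip-reconcile"
MANAGED_CLUSTER = "apps.open-cluster-management.io/ocm-managed-cluster"


def _annotations(application: dict) -> dict:
    return application.get("metadata", {}).get("annotations") or {}


def reconciled_by(application: dict) -> list:
    """Return which hub controllers would act on this Application."""
    annotations = _annotations(application)
    controllers = []
    # Push model: the Argo CD application controller acts unless told to skip.
    if annotations.get(SKIP_RECONCILE) != "true":
        controllers.append("argocd-application-controller")
    # Pull model: the propagation controller acts only on annotated Applications.
    if MANAGED_CLUSTER in annotations:
        controllers.append("propagation-controller")
    return controllers


def target_cluster(application: dict):
    """Return the managed cluster that receives the ManifestWork, if any."""
    return _annotations(application).get(MANAGED_CLUSTER)
```

An Application that carries both annotations is handled by the propagation controller alone, while an Application without either annotation follows the default push path.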

The following is a sample ManifestWork YAML that the propagation controller generates to create the guestbook application on the managed cluster pcluster2:

apiVersion: work.open-cluster-management.io/v1
kind: ManifestWork
metadata:
  annotations:
    apps.open-cluster-management.io/hosting-applicationset: openshift-gitops/guestbook-allclusters-app-set
  name: pcluster2-guestbook-app-4a491
  namespace: pcluster2
spec:
  manifestConfigs:
  - feedbackRules:
    - jsonPaths:
      - name: healthStatus
        path: .status.health.status
      type: JSONPaths
    - jsonPaths:
      - name: syncStatus
        path: .status.sync.status
      type: JSONPaths
    resourceIdentifier:
      group: argoproj.io
      name: pcluster2-guestbook-app
      namespace: openshift-gitops
      resource: applications
  workload:
    manifests:
    - apiVersion: argoproj.io/v1alpha1
      kind: Application
      metadata:
        annotations:
          apps.open-cluster-management.io/hosting-applicationset: openshift-gitops/guestbook-allclusters-app-set
        finalizers:
        - resources-finalizer.argocd.argoproj.io
        labels:
          apps.open-cluster-management.io/application-set: "true"
        name: pcluster2-guestbook-app
        namespace: openshift-gitops
      spec:
        destination:
          namespace: guestbook
          server: https://kubernetes.default.svc
        project: default
        source:
          path: guestbook
          repoURL: https://github.com/argoproj/argocd-example-apps.git
        syncPolicy:
          automated: {}
          syncOptions:
          - CreateNamespace=true

As a result of the feedback rules specified in manifestConfigs, the health status and the sync status of the Argo CD application are synced to the statusFeedback of the ManifestWork.
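Reading those values back on the hub is a matter of walking the ManifestWork status. The following Python sketch assumes that the feedback appears under `status.resourceStatus.manifests[].statusFeedback.values[]`, with string results in `fieldValue.string`. That layout is an assumption about the ManifestWork status API rather than something shown in this article, so verify it against a real object before relying on it. The function name is hypothetical.

```python
def feedback_values(manifestwork: dict) -> dict:
    """Collect named status feedback values from a ManifestWork object."""
    result = {}
    manifests = (
        manifestwork.get("status", {})
        .get("resourceStatus", {})
        .get("manifests", [])
    )
    for manifest in manifests:
        for value in manifest.get("statusFeedback", {}).get("values", []):
            # Assumption: string results are stored under fieldValue.string.
            result[value["name"]] = value.get("fieldValue", {}).get("string")
    return result
```

For a ManifestWork whose Application is deployed and healthy, the result would be a mapping such as `healthStatus` to `Healthy` and `syncStatus` to `Synced`.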

Deploying an application with the local Argo CD server on the managed cluster

After the Argo CD application is created on the managed cluster through ManifestWorks, the local Argo CD controllers reconcile to deploy the application. The controllers deploy the application through the following sequence of operations:

- Connect and pull resources from the specified Git/Helm repository

- Deploy the resources on the local managed cluster

- Generate the Argo CD application status

Multicluster Application report: aggregate application status from the managed clusters

A new multicluster ApplicationSet report CRD provides an aggregate status of the ApplicationSet on the hub cluster. The report is created only for ApplicationSets that are deployed using the new pull model. It includes the list of resources and the overall status of the application from each managed cluster. A separate multicluster ApplicationSet report resource is created for each Argo CD ApplicationSet resource, in the same namespace as the ApplicationSet. The MulticlusterApplicationSetReport includes:

- List of resources for the Argo CD application

- Overall sync and health status for one Argo CD application

- Error message for each cluster where the overall status is out of sync or unhealthy

- Summary status of the overall application status from all the managed clusters

To support the generation of the MulticlusterApplicationSetReport, two new controllers have been added to the hub cluster: the resource sync controller and the aggregation controller.

The resource sync controller runs every 10 seconds, and its purpose is to query the OCM search V2 component on each managed cluster to retrieve the resource list and any error messages for each Argo CD application.

It then produces an intermediate report for each ApplicationSet, which the aggregation controller uses to generate the final MulticlusterApplicationSetReport.

The aggregation controller also runs every 10 seconds, and it uses the report generated by the resource sync controller to add the health and sync status of the application on each managed cluster. The status for each application is retrieved from the status feedback in the manifestwork for the application. Once the aggregation is complete, the final MulticlusterApplicationSetReport is saved in the same namespace as the Argo CD ApplicationSet, with the same name as the ApplicationSet.

The two new controllers, along with the propagation controller, all run in separate containers in the same multicluster-integrations pod, as shown in the example below:

NAMESPACE                 NAME                                        READY   STATUS
open-cluster-management   multicluster-integrations-7c46498d9-fqbq4   3/3     Running

The following is a sample multicluster ApplicationSet report YAML for the guestbook ApplicationSet:

apiVersion: apps.open-cluster-management.io/v1alpha1
kind: MulticlusterApplicationSetReport
metadata:
  labels:
    apps.open-cluster-management.io/hosting-applicationset: openshift-gitops.guestbook-allclusters-app-set
  name: guestbook-allclusters-app-set
  namespace: openshift-gitops
statuses:
  clusterConditions:
  - cluster: cluster1
    conditions:
    - message: 'Failed sync attempt to 53e28ff20cc530b9ada2173fbbd64d48338583ba: one or more objects failed to apply, reason: services is forbidden: User "system:serviceaccount:openshift-gitops:openshift-gitops-argocd-application-controller" cannot create resource "services" in API group "" in the namespace "guestbook",deployments.apps is forbidden: User "system:serviceaccount:openshift-gitops:openshift-gitops-argocd-application-controller" cannot create resource "deployments" in API group "apps" in the namespace "guestboo...'
      type: SyncError
    healthStatus: Missing
    syncStatus: OutOfSync
  - cluster: pcluster1
    healthStatus: Progressing
    syncStatus: Synced
  - cluster: pcluster2
    healthStatus: Progressing
    syncStatus: Synced
  summary:
    clusters: "3"
    healthy: "0"
    inProgress: "2"
    notHealthy: "3"
    notSynced: "1"
    synced: "2"

All the resources listed in the MulticlusterApplicationSetReport are actually deployed on the managed cluster(s). If a resource fails to be deployed, it won't be included in the resource list. However, the error message would indicate why the resource failed to be deployed.
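The summary block of the sample report can be reproduced from its clusterConditions with simple counting. The following Python sketch is an inference from that sample, not the aggregation controller's actual logic: it assumes that notHealthy counts every cluster whose health status is not Healthy (which includes clusters still Progressing) and that notSynced counts every cluster that is not Synced. The function name is hypothetical.

```python
def summarize(cluster_conditions: list) -> dict:
    """Derive the report summary from per-cluster health and sync status."""
    total = len(cluster_conditions)
    healthy = sum(1 for c in cluster_conditions if c.get("healthStatus") == "Healthy")
    in_progress = sum(1 for c in cluster_conditions if c.get("healthStatus") == "Progressing")
    synced = sum(1 for c in cluster_conditions if c.get("syncStatus") == "Synced")
    # The sample report stores the counts as strings, so this sketch does too.
    return {
        "clusters": str(total),
        "healthy": str(healthy),
        "inProgress": str(in_progress),
        "notHealthy": str(total - healthy),  # assumption: everything not Healthy
        "notSynced": str(total - synced),    # assumption: everything not Synced
        "synced": str(synced),
    }
```

Applied to the three clusters in the sample report (one Missing and OutOfSync, two Progressing and Synced), these rules give the same six values as the sample summary.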

Limitations

- Resources are only deployed on the managed cluster(s).

- If a resource fails to deploy, it won't be included in the MulticlusterApplicationSetReport.

- The pull model excludes the local-cluster as a target managed cluster.

- There might be use cases where the managed clusters cannot reach the Git server. In this case, the push model is the only solution.


Wrap up

We want to thank RHACM's Application Lifecycle Management team for their huge efforts in making this important new feature possible. We would love to get feedback on this RHACM 2.8 feature, and we would like to collaborate with you in the Open Cluster Management community. We would also like to thank Brandi Swope for her kind review.