This article is the first in a six-part series in which we present various usage models for confidential computing, a set of technologies designed to protect data in use (for example, by using memory encryption), as well as the requirements for obtaining the expected security and trust benefits from the technology.

In the series, we will focus on four primary use cases: confidential virtual machines, confidential workloads, confidential containers and finally confidential clusters. In all use cases, we will see that establishing a solid chain of trust uses similar, if subtly different, attestation methods, which make it possible for a confidential platform to attest to some of its properties. We will discuss various implementations of this idea, as well as alternatives that were considered.

In this first article, we will provide some background about confidential computing and its history, and establish some terminology that we will need to cover the topic.

A brief history of trusted computing and attestation

More than two decades ago, the computing industry saw a need to trust the components running on a remote computer (PC, laptop or even server) without necessarily having control of it. The solution was to build a chain of trust beginning with a small physical “root of trust”, using a one-way hash function. This works as follows:

- The root of trust first measures itself, meaning that it computes a cryptographic hash, either over its own code (for software) or over a well-known version identifier (for hardware).

- Then it measures the next stage of code to boot, for example the boot ROM for x86 platforms.

- Once this is done, control is transferred to the boot ROM, which finds and measures the next stage of code to run, and so on.

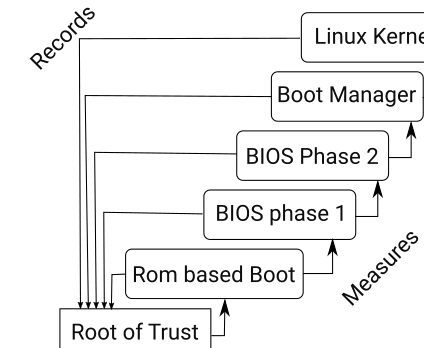

The principle is that before execution, each next stage is measured by the prior stage, meaning that if all hashes are of trusted components then the entire boot sequence must be trustworthy because the chain of hashes is grounded in the root of trust. An attacker could inject bogus code, or substitute their own code to the expected original code at any stage (except in the root of trust). However, should this happen, the measurement by the prior stage would indicate a wrong hash. The following diagram demonstrates this flow:
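The measure-then-execute chain can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the stage contents are hypothetical byte strings standing in for firmware and OS images, and the measurements are folded into a single register using the TPM-style “extend” operation (new value = SHA-256 of the old value concatenated with the new measurement), which the text itself does not describe. Because extend is one-way and order-sensitive, substituting any stage changes the final value, and an attacker cannot pick a later measurement that restores the expected one.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def extend(register: bytes, measurement: bytes) -> bytes:
    """TPM-style extend: new value = H(old value || measurement)."""
    return sha256(register + measurement)


def measure_boot(stages: list[bytes]) -> bytes:
    """Measure each stage before it 'runs' and fold it into one register."""
    register = bytes(32)  # starts as all zeros, as many TPM PCRs do at reset
    for code in stages:
        register = extend(register, sha256(code))
    return register


# Hypothetical stage contents standing in for real firmware and OS images.
trusted = [b"root-of-trust", b"boot-rom", b"bootloader", b"kernel"]
tampered = [b"root-of-trust", b"boot-rom", b"evil-bootloader", b"kernel"]

expected = measure_boot(trusted)            # known-good reference value
print(measure_boot(trusted) == expected)    # True
print(measure_boot(tampered) == expected)   # False: the substitution shows up
```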

The mechanism we just described is a form of attestation: it proves to a third party some important properties about the system being used, in this case that the boot sequence only contains known components. There are many other properties that can be attested, many attestation mechanisms, and the root of trust itself can take many forms.
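Measurements only become attestation once they are reported to a third party in a form it can trust. A common pattern is challenge-response: the verifier sends a fresh random nonce, the root of trust returns the measurement bound to that nonce with a key only it holds, and the verifier checks the binding, the freshness and the measurement against known-good reference values. In this minimal sketch, `ATTESTATION_KEY`, `make_quote` and `verify_quote` are hypothetical names, and HMAC with a shared key stands in for the asymmetric, vendor-certified signatures that real TPMs and confidential computing platforms use.

```python
import hashlib
import hmac
import secrets

# Assumption: a key held only by the root of trust. Real platforms sign with an
# asymmetric key certified by the hardware vendor; HMAC keeps this stdlib-only.
ATTESTATION_KEY = secrets.token_bytes(32)


def make_quote(measurement: bytes, nonce: bytes) -> bytes:
    """Attester side: bind the measurement to the verifier's fresh nonce."""
    return hmac.new(ATTESTATION_KEY, measurement + nonce, hashlib.sha256).digest()


def verify_quote(measurement: bytes, nonce: bytes, quote: bytes,
                 reference_values: set[bytes]) -> bool:
    """Verifier side: check authenticity, freshness, then the measurement."""
    expected = hmac.new(ATTESTATION_KEY, measurement + nonce,
                        hashlib.sha256).digest()
    if not hmac.compare_digest(expected, quote):
        return False  # forged, altered in transit, or answering an old nonce
    return measurement in reference_values


good = hashlib.sha256(b"known-good boot chain").digest()
bad = hashlib.sha256(b"modified boot chain").digest()
nonce = secrets.token_bytes(16)  # chosen by the verifier for this request only

print(verify_quote(good, nonce, make_quote(good, nonce), {good}))  # True
print(verify_quote(bad, nonce, make_quote(bad, nonce), {good}))    # False
old_quote = make_quote(good, secrets.token_bytes(16))  # answer to another nonce
print(verify_quote(good, nonce, old_quote, {good}))                # False
```

With a shared HMAC key the verifier could forge quotes itself, which is exactly why real platforms use asymmetric keys whose certificates chain back to the hardware vendor.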

In the beginning was the physical root of trust

A long time ago, security researchers realized that the component forming the root of trust would have to be a separate, tamper-proof piece, distinct from the system being measured. This led to the development in 2000 of the Trusted Platform Module (TPM): a fairly inexpensive, tiny chip that could be inserted into any complex system to provide a tamper-resistant root of trust, which could then reliably form the base of the measurement chain. Over the years, the functionality of the TPM has grown, so that it is now more like a cryptographic coprocessor, but its fundamental job as the root of trust remains the same, and so does the construction of the chain of trust.

All confidential computing platforms presented here inherit this core idea of an isolated, independent physical root of trust using cryptographic methods to confirm the validity of an entire chain of trust.

Confidential computing

Confidential computing provides a set of technologies designed to protect data in use, such as the data currently being processed by the machine, or currently stored in memory. This complements existing technologies that protect data at rest (e.g. disk encryption) and data in transit (e.g. network encryption).

Confidential computing is a core technology, now built into many mainstream processors and systems:

- AMD introduced Secure Encrypted Virtualization (SEV) in 2017. The technology originally featured memory encryption only. A later iteration, SEV-ES (Encrypted State), added protection for the CPU state. Finally, the current version of the technology, SEV-SNP (Secure Nested Paging), adds memory integrity protection.

- Intel offers a similar technology called Trust Domain Extensions (TDX), which just started shipping with the Sapphire Rapids processor family. An older, related technology called Software Guard Extensions (SGX), introduced in 2015, encrypts the memory of enclaves, which are protected regions inside an application process. However, SGX is now deprecated on client processors and remains supported only on Xeon server processors.

- IBM Z mainframes feature Secure Execution (SE), which takes a slightly different, more firmware-centric approach, owing to the architecture’s long virtualization-centric history.

- IBM Power has a Protected Execution Facility (PEF), which introduces what IBM calls an ultravisor: a component that runs with higher privilege than both the hypervisor (for virtual machines) and the supervisor (for the operating system), and that controls access to secure memory.

- ARM is developing the Confidential Compute Architecture (CCA), which introduces the Realm Management Extension (RME) in the hardware and allows firmware to partition resources between realms that cannot access one another.

While all these technologies share the same goal, they differ widely in architecture, design and implementation details. Even the two major x86 vendors take very different approaches to the same problem. Among the primary differences are the respective roles and importance of firmware, hardware and adjunct security processors in the overall security model. This complex landscape makes it difficult for software vendors to present a uniform user experience across the board.

Host, guest, tenant and owner

In confidential computing, the host platform is no longer trusted: it belongs to a different trust domain than the guest operating system. This forces us to introduce new terminology. When talking about virtualization, we usually distinguish between host and guest, and this implies, correctly, that the guest has no special confidentiality rights or guarantees for anything inside the host.

By contrast, in a way reminiscent of lodging, we will talk about a tenant for a confidential virtual machine. The tenant does have additional rights to confidentiality, similar to the restrictions preventing a building manager from accessing a tenant’s private apartment.

We can also talk about the owner of the virtual machine, notably when we refer to components that are outside of the virtual machine but belong to the same trust domain, such as a server providing secrets to the virtual machine. In other words, the tenant’s trust domain may extend beyond the virtual machine itself, and in that case, we prefer to talk about ownership. Notably, we will see that the integrity of confidential computing, and the security benefits it can provide, often rely on controlled access to external resources, collectively known as the relying party. These include, in particular, services that support various forms of attestation to ensure that the execution environment is indeed trusted, as well as other services that deliver secrets or keys.
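One of the relying party’s services can be sketched as a key broker that hands a secret to a workload only if a verifier has accepted its evidence and the attested measurement is one the owner has approved. This is a minimal sketch: `KeyBroker`, `AttestationResult`, `approve` and the measurement strings are hypothetical, and real deployments add authenticated, encrypted channels that terminate inside the guest, as well as richer policies.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AttestationResult:
    verified: bool    # did a verifier accept the evidence (signature, freshness)?
    measurement: str  # hex digest of the attested workload


class KeyBroker:
    """Owner-side relying-party service: releases a secret only to workloads
    whose evidence was verified and whose measurement the owner approved."""

    def __init__(self) -> None:
        self._secrets: dict[str, bytes] = {}

    def approve(self, measurement: str, secret: bytes) -> None:
        self._secrets[measurement] = secret

    def release(self, result: AttestationResult) -> Optional[bytes]:
        if not result.verified:
            return None  # unverified evidence: never release anything
        return self._secrets.get(result.measurement)  # None if not approved


broker = KeyBroker()
broker.approve("a1b2c3", b"disk-encryption-key")  # hypothetical values

print(broker.release(AttestationResult(True, "a1b2c3")))   # b'disk-encryption-key'
print(broker.release(AttestationResult(True, "ffffff")))   # None
print(broker.release(AttestationResult(False, "a1b2c3")))  # None
```

Keeping the approval list on the owner’s side is the point of the design: the host runs the workload but never decides which workloads receive secrets.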

The diagram below, corresponding to a confidential containers scenario, illustrates these three trust domains of interest using different colors:

- In red, the trusted platform offers services that must be relied on not just to execute the software components, but also to provide cryptographic-level guarantees.

- In blue, the host manages the physical resources, but it is not trusted with any data that resides on it. Data sent to disk or network devices must be encrypted, for example.

- In green, the owner, which in the diagram plays two roles: a tenant on the host, inside the virtual machine, and the operator of the external resources that collectively form the relying party.

For more details on the different building blocks of the confidential containers flow, we recommend reading this blog post.

What does confidential computing guarantee?

In the marketing literature, you will often see vague attributes such as “security” touted as expected benefits of confidential computing. However, in reality, the additional security is limited to one particular aspect, namely confidentiality of data in use. And even that limited benefit requires some care.

The only thing that confidential computing guarantees is … confidentiality, most notably the confidentiality of data in use, including data stored in random-access memory, in the processors’ internal registers and in the hypervisor’s data structures used to manage the virtual machine. In other words, the technology helps protect the data being processed by the virtual machine from being accessed outside of the trust domain. Most notably, the host, the hypervisor, other processes on the same host, other virtual machines and physical devices with DMA capabilities should all remain unable to access cleartext data. Note that some platforms, like previous-generation IBM Z, may offer some level of confidential computing without necessarily implementing it through physical memory encryption.

Confidentiality can be understood as protecting the data from being read from outside of the trusted domain. However, it was quickly understood that this also requires integrity protection, to make sure that a malicious actor cannot tamper with the trusted domain. Such tampering would make it all too easy not just to corrupt trusted data, but possibly even to take over the execution flow sufficiently to cause a data leak.
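To see why integrity matters alongside confidentiality, consider a host that silently flips bits in guest memory or swaps one valid page for another. One way to detect this is a message authentication code (MAC) over each page, keyed with a secret the host never sees and bound to the page’s address. The minimal sketch below assumes exactly that; `HW_KEY`, `tag` and `guest_read` are hypothetical. Real platforms use different mechanisms: SEV-SNP, for example, tracks page ownership in a hardware-enforced table rather than attaching a MAC to every page.

```python
import hashlib
import hmac
import secrets

PAGE_SIZE = 4096
HW_KEY = secrets.token_bytes(32)  # assumption: never leaves the hardware


def tag(page_addr: int, data: bytes) -> bytes:
    """MAC over the page contents, bound to the page's address so that a
    host cannot swap two individually valid pages."""
    msg = page_addr.to_bytes(8, "little") + data
    return hmac.new(HW_KEY, msg, hashlib.sha256).digest()


def guest_read(page_addr: int, data: bytes, stored_tag: bytes) -> bytes:
    if not hmac.compare_digest(tag(page_addr, data), stored_tag):
        raise RuntimeError(f"integrity violation at page {page_addr:#x}")
    return data


page = bytearray(PAGE_SIZE)
page[:6] = b"secret"
page_tag = tag(0x1000, bytes(page))

guest_read(0x1000, bytes(page), page_tag)  # unmodified page: read succeeds
page[0] ^= 0x01                            # the host flips a single bit
try:
    guest_read(0x1000, bytes(page), page_tag)
except RuntimeError as err:
    print(err)                             # integrity violation at page 0x1000
```

Failing loudly on a mismatch, rather than consuming the altered data, is the point: the guest may stop, but it never acts on data the host chose.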

In practical terms, this means that a malicious system administrator on a public cloud can no longer dump the memory of a virtual machine to try and steal passwords as they are being processed. What they would get from such a dump would, at best, be encrypted versions of the password. This is illustrated in this demo of confidential workloads, where a password in memory is shown to be accessible to a host administrator if not using confidential computing (at time marker 01:23), and no longer when confidential computing encrypts the memory (at time marker 03:50).
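The effect of memory encryption on such a dump can be illustrated with a toy model: the CPU encrypts data on its way to DRAM with a key that never leaves the hardware, so a host reading DRAM sees only ciphertext, while reads from inside the guest are transparently decrypted. The cipher below (a SHA-256 keystream tweaked by the physical address) is a deliberately simple stand-in chosen so the example only needs the standard library; it is not how any real platform encrypts memory, and reusing the same keystream every time an address is rewritten would be insecure in practice. `MEMORY_KEY`, `cpu_write` and `cpu_read` are hypothetical names.

```python
import hashlib
import secrets

MEMORY_KEY = secrets.token_bytes(32)  # assumption: held by the security processor


def keystream(key: bytes, addr: int, length: int) -> bytes:
    """Toy keystream: SHA-256 in counter mode, tweaked by the physical address."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = key + addr.to_bytes(8, "little") + counter.to_bytes(8, "little")
        out += hashlib.sha256(block).digest()
        counter += 1
    return bytes(out[:length])


def xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))


def cpu_write(addr: int, plaintext: bytes) -> bytes:
    """Encrypt on the way from the CPU to DRAM; returns what DRAM holds."""
    return xor(plaintext, keystream(MEMORY_KEY, addr, len(plaintext)))


def cpu_read(addr: int, dram: bytes) -> bytes:
    """Decrypt on the way back into the CPU, inside the trust domain."""
    return xor(dram, keystream(MEMORY_KEY, addr, len(dram)))


dram = cpu_write(0x7F00, b"password=hunter2")
print(b"hunter2" in dram)      # host's dump: False (with overwhelming probability)
print(cpu_read(0x7F00, dram))  # guest's view: b'password=hunter2'
```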

On many platforms, the memory encryption key resides in hardware (which may be a dedicated security processor running its own firmware, as with AMD SEV, or a separate hardware-protected area of memory, as with ARM CCA), and barring a hardware-level exploit that manages to extract it, there is no practical way to decrypt encrypted memory. Most importantly, the keys cannot be obtained by exploiting human error, for example through social engineering. That matters, since human error is one of the most common ways systems get compromised.

The only failure mode of concern for confidential computing is a data leak. Denial of service (DoS) is specifically out of scope, and for a good reason: the host manages physical resources and can legitimately deny access to them at any time and for any purpose, ranging from the mundane (throttling for cost reasons) to the catastrophic (device failure, power outage or datacenter flooding). Similarly, from a confidentiality point of view, a crash is an acceptable result, as long as that crash cannot be exploited to leak confidential data.

This upends the usual security model for the guest operating system. In a traditional model, the execution environment is globally trusted: it makes no sense to think about the security damage that could result if program instructions start misbehaving, if register data is altered or if memory content changes at random. In a confidential computing scenario, however, some of these questions become relevant.

The community, beginning with Intel, started documenting various new potential threats, and it is fair to say that there was some sharp pushback from the kernel community against confidential computing as a whole, and against this new attack model in particular, even after significant rework to show how important the concepts were.

One of the most obvious attack vectors in the new model is the hypervisor. A malicious hypervisor can relatively easily inject random data or otherwise disturb the execution of the guest, facilitating timing attacks or lying about the state and capabilities of the supporting platform to suppress necessary mitigations. Emulation of I/O devices, notably access to device registers in the PCI space, may require additional scrutiny if bad data can lead to controlled guest crashes that would expose confidential data.
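In practice, this means guest code that consumes host-provided data, such as values read from emulated device registers or shared buffers, has to treat them as untrusted input. Below is a minimal sketch of that hardening style for a hypothetical descriptor format (a little-endian 32-bit length followed by a payload) and a hypothetical `MAX_PAYLOAD` limit. It is written in Python for readability, whereas the real work happens in kernel drivers written in C. It shows two habits: taking a private snapshot before validating, so the host cannot change the data between check and use, and bounds-checking every host-supplied length.

```python
import struct

MAX_PAYLOAD = 1500  # hypothetical limit the driver is designed for


def parse_descriptor(shared: "bytes | bytearray | memoryview") -> bytes:
    """Parse a host-written (length, payload) record from shared memory.

    Every byte of `shared` is controlled by the untrusted host, so it is
    validated before use instead of being trusted like real hardware.
    """
    # Take a private copy first: the host could otherwise change the length
    # after it has been checked (a time-of-check/time-of-use race).
    snapshot = bytes(shared)
    if len(snapshot) < 4:
        raise ValueError("descriptor too short")
    (length,) = struct.unpack_from("<I", snapshot, 0)
    if length > MAX_PAYLOAD or 4 + length > len(snapshot):
        raise ValueError(f"host reported invalid length {length}")
    return snapshot[4:4 + length]


print(parse_descriptor(struct.pack("<I", 5) + b"hello"))  # b'hello'
try:
    parse_descriptor(struct.pack("<I", 0xFFFFFFFF) + b"hi")
except ValueError as err:
    print(err)  # host reported invalid length 4294967295
```

Rejecting bad input with an error is consistent with the threat model: a refused request or even a crash is acceptable, an out-of-bounds read that leaks guest memory is not.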

This is a massive change in the threat model for the kernel, which historically, except in the case of known bugs, has always tended to trust hardware in general and I/O devices in particular. This shift in viewpoint explains the pushback from several kernel developers against the new threat model that confidential computing requires. It is still an active area of research.

Various kinds of proof

Like all security technologies, confidential computing relies on a chain of trust which maintains the security of the whole system. That chain of trust starts with a root of trust, i.e. an authoritative source that can vouch for the encryption keys being used. It is built on various cryptographic proofs that provide strong mathematical guarantees.

The most common forms of proof include:

- Certificates, which prove someone’s identity. If you use Secure Boot to start your computer, Microsoft-issued certificates confirm Microsoft’s identity as the publisher of the software being booted.

- Encryption, preventing the data from being understood by anyone except its intended recipient. When you connect to any e-commerce site today, HTTPS encrypts the data between your computer and the web server.

- Integrity, to ensure that data has not been tampered with. When you use the Git source code management system, the “hash” that identifies each commit also guarantees its integrity, something that developers with a failing disk sometimes discover the hard way. Integrity is often proven by means of cryptographic measurements, such as a cryptographic hash computed over the contents of an area of memory.
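Git’s integrity guarantee can be reproduced in a few lines. In Git’s default object format, a file’s contents are stored as a “blob” whose identifier is the SHA-1 hash of a short header (`blob`, the size in bytes and a NUL byte) followed by the contents; trees hash blob identifiers and commits hash tree identifiers, so a single corrupted byte anywhere changes the commit hash. The sketch below covers only the blob step and ignores Git’s newer SHA-256 object format.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Object ID Git assigns to file contents (SHA-1 object format)."""
    header = b"blob " + str(len(content)).encode() + b"\0"
    return hashlib.sha1(header + content).hexdigest()


original = b"print('hello')\n"
corrupted = b"print('hellp')\n"  # one byte changed, e.g. by a failing disk

print(git_blob_id(original) == git_blob_id(corrupted))  # False
print(git_blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391 (the empty blob)
```

The identifiers produced this way match what `git hash-object` reports for the same contents.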

Conclusion

Like any emerging technology, confidential computing has introduced a large number of concepts and, in doing so, is creating its own acronym-filled jargon. In the next article, we will focus on one particular concept: attestation.
