TLDR;-)

This is the final blog of the series on Ingress Controllers for OpenShift. In this one, we’re covering Red Hat OpenShift Service on AWS (ROSA). As you will see, there are some subtle differences, even though under the hood the same technology is used to achieve the result we are after.

Anything different with a ROSA private cluster?

As with our ARO setup in the previous blog, we first need to build a private ROSA cluster. The step-by-step guide for this is available here.

For a private ROSA cluster, several load balancers are deployed to accommodate the standard API (port 6443), Ingress access for HTTP and HTTPS (ports 80 and 443), and SRE team access to the cluster. This is presented in the figure below (taken from the ROSA official documentation).

I’ve included here the view where SREs use a private link; there is also an option that doesn’t rely on it (see here).

So, I’ve created a private cluster using the ROSA CLI:

rosa create cluster --cluster-name=private-rosa --private

Keep in mind that before you can create the cluster, there is a list of prerequisites to follow (here). I’ve put together a short video showing all the steps to create an OpenShift cluster using the ROSA CLI; you can have a look at it here.

Anyway, after a little bit of time for deployment, I’ve got my private cluster and a cluster-admin user for it.
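The state of the cluster and the cluster-admin user can both be handled from the ROSA CLI. The snippet below is a minimal sketch, assuming the cluster name private-rosa from the create command above; the function name and the guard around the calls are only illustrative, so the snippet does nothing when the rosa binary is not installed.

```shell
CLUSTER_NAME=private-rosa   # same name as in the create command above

rosa_post_install() {
  # Show the cluster details, including its current state
  rosa describe cluster --cluster="$CLUSTER_NAME"
  # Create the cluster-admin user; the CLI prints the matching login details
  rosa create admin --cluster="$CLUSTER_NAME"
}

# Only call the ROSA CLI when it is actually installed
if command -v rosa >/dev/null 2>&1; then
  rosa_post_install
else
  echo "rosa CLI not found, skipping"
fi
```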

Here again, I have created a jumphost so I can access the cluster, and I will then do the following steps:

- Check the default ingress

- Deploy an application into the demo namespace

- Try to reach this application from the internal side and external side

- Deploy a new ingress controller using the Custom Domain Controller to give external access to this application

- Deploy a route associated with this Custom Domain Controller

- Check that the app is now reachable via an external access

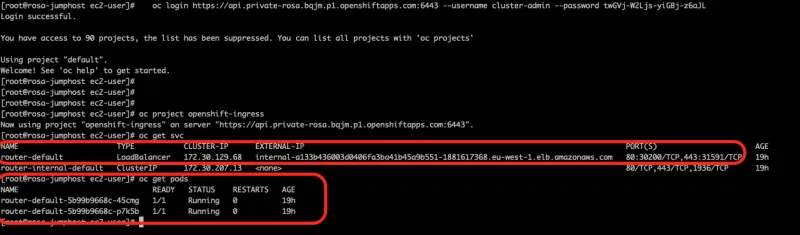

I can now connect to my private cluster and ensure my default ingress controller is running as expected.
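One way to run that check from the jumphost is sketched below. It assumes you are already logged in with oc; openshift-ingress-operator and openshift-ingress are the standard OpenShift namespaces for the ingress operator and the routers, and the function name is only illustrative.

```shell
check_default_ingress() {
  # The default IngressController object is managed by the ingress operator
  oc get ingresscontroller default -n openshift-ingress-operator
  # Router pods and their services live in the openshift-ingress namespace
  oc get pods -n openshift-ingress
  oc get svc -n openshift-ingress
}

# Only call oc when it is actually installed
if command -v oc >/dev/null 2>&1; then
  check_default_ingress
else
  echo "oc not found, run this from the jumphost"
fi
```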

We will now deploy the sample application and create a route for it.

oc new-project demo

oc new-app https://github.com/sclorg/django-ex.git

oc expose service django-ex

We then check that the application is reachable from the jumphost but not from the outside world (e.g. my laptop).
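A minimal sketch of such a reachability check, using curl. The probe helper is hypothetical (it is not part of oc or ROSA); the route name django-ex and the demo namespace come from the commands above.

```shell
NAMESPACE=demo
ROUTE_NAME=django-ex

probe() {
  # Print the HTTP status for a URL, or "unreachable" if no connection could be made
  if code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$1"); then
    echo "$1 -> HTTP $code"
  else
    echo "$1 -> unreachable"
  fi
}

# On the jumphost: read the hostname assigned to the route, then probe it
if command -v oc >/dev/null 2>&1; then
  host=$(oc get route "$ROUTE_NAME" -n "$NAMESPACE" -o jsonpath='{.spec.host}')
  probe "http://$host/"
fi
```

From the laptop, where the private API is not available, call probe with the same URL copied from the jumphost; for a private cluster the expected result there is "unreachable".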

OK, so what is this Custom Domain thingy?

Custom domains are specific wildcard domains that can be used with ROSA. The top-level domains (TLDs) are owned by the customer that is operating the ROSA cluster. The Custom Domains Operator sets up a new ingress controller with a custom certificate as a day-2 operation.

The public DNS record for this ingress controller can then be used by an external DNS to create a wildcard CNAME record for use with a custom domain.

So effectively it’s the same as deploying an ingress controller directly, as in the previous blogs, but this is the officially supported way of getting our scenario to work in a ROSA environment.

The detailed process is available here, but I will now take you through it.

I first have to create a secret in the namespace where the application I want to expose is located. This secret is made up of the private key and the public certificate used for the custom domain. To create the certificate required in this initial step, I followed this blog.

oc create secret tls simon-tls --cert=fullchain.pem --key=privkey.pem -n demo

You then simply check that the secret has been deployed successfully in the right namespace:

oc get secrets -n demo

The next step is then to create the Custom Domain Custom Resource (CR):

apiVersion: managed.openshift.io/v1alpha1
kind: CustomDomain
metadata:
  name: simon
spec:
  domain: apps.simon-public.melbourneopenshift.com
  scope: External
  certificate:
    name: simon-tls
    namespace: demo

Note the External scope, meaning that we will use this CustomDomain to expose applications to the external world.

oc apply -f simon-custom-domain.yaml

Get the status of the Custom Domain by typing:

oc get customdomains

Notice a few things:

- The Custom Domain has created a new ingress-controller called simon

- The External-IP address of the router-simon service points to the newly created AWS Load-balancer.
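Both points can be checked from the jumphost. The sketch below assumes the standard OpenShift namespaces (openshift-ingress-operator for the IngressController object, openshift-ingress for the router service) and the names simon and router-simon mentioned above; the function name is only illustrative.

```shell
CUSTOM_DOMAIN_NAME=simon
ROUTER_SVC="router-${CUSTOM_DOMAIN_NAME}"

show_custom_ingress() {
  # Ingress controller created for the CustomDomain
  oc get ingresscontroller "$CUSTOM_DOMAIN_NAME" -n openshift-ingress-operator
  # Router service; the EXTERNAL-IP column shows the AWS load balancer
  oc get svc "$ROUTER_SVC" -n openshift-ingress
  # Only the load balancer hostname, handy when creating the DNS record
  oc get svc "$ROUTER_SVC" -n openshift-ingress \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}{"\n"}'
}

# Only call oc when it is actually installed
if command -v oc >/dev/null 2>&1; then
  show_custom_ingress
else
  echo "oc not found, run this from the jumphost"
fi
```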

We now simply need to point the DNS entry for the globally routable domain to the address of the load balancer created by the Custom Domain CR. In my setup I am again reusing the same domain name (*.simon-public.melbourneopenshift.com) and pointing it to the newly created load balancer.


Finally, let’s now deploy the new route for the application associated with the new Ingress-controller.
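A minimal sketch of this step, using an edge-terminated route so that TLS ends at the router of the new ingress controller. The route name django-ex-external and the domain come from this blog; the exact hostname (django-ex under the custom domain) and the function name are assumptions for illustration.

```shell
APP=django-ex
NAMESPACE=demo
CUSTOM_DOMAIN=apps.simon-public.melbourneopenshift.com
ROUTE_NAME="${APP}-external"
ROUTE_HOST="${APP}.${CUSTOM_DOMAIN}"   # assumed hostname, any name under the custom domain works

create_external_route() {
  # Edge route: the router terminates TLS and forwards plain HTTP to the service
  oc create route edge "$ROUTE_NAME" --service="$APP" \
    --hostname="$ROUTE_HOST" -n "$NAMESPACE"
  # List the routes to confirm the new one is present
  oc get routes -n "$NAMESPACE"
}

# Only call oc when it is actually installed
if command -v oc >/dev/null 2>&1; then
  create_external_route
else
  echo "oc not found; route would use host $ROUTE_HOST"
fi
```

Since the route itself carries no certificate, the router should serve it with the certificate from the simon-tls secret configured on the Custom Domain.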

And there you go again: as you can see in the screenshot, the django-ex-external route is now associated with the new ingress controller, exposing the application on our private cluster to the world!

A final word?

In this series of blogs (blog 1, blog 2, blog 3), we’ve looked at how Ingress Controllers can be used to expose Applications to the external world for private OCP, ARO and ROSA deployments.

Again, for those interested in trying this out, here’s a link that has all the resources used in this blog. Please give it a try, and if you have improvements, comments, or feedback, open a pull request or just reach out.

About the author

Simon Delord is a Solution Architect at Red Hat. He works with enterprises on their container/Kubernetes practices and driving business value from open source technology. The majority of his time is spent on introducing OpenShift Container Platform (OCP) to teams and helping break down silos to create cultures of collaboration. Prior to Red Hat, Simon worked with many Telco carriers and vendors in Europe and APAC, specializing in networking, data-centres and hybrid cloud architectures. Simon is also a regular speaker at public conferences and has co-authored multiple RFCs in the IETF and other standard bodies.
