The Red Hat OpenShift network architecture provides a robust and scalable front end for a wide range of containerized applications. Services provide simple load balancing based on pod labels, and routes expose those services to the external network. These concepts work very well for microservices, but they can prove challenging for applications running in virtual machines on OpenShift Virtualization, where existing server management infrastructure is already in place and assumes that full access to the virtual machines is always available.

In a previous article, I showed how to install and configure OpenShift Virtualization, and how to run a basic virtual machine. In this article, I present several options for configuring your OpenShift Virtualization cluster so that virtual machines can access the external network in much the same way as on other common hypervisors.

Connecting OpenShift to external networks

OpenShift can be configured to access external networks in addition to the internal pod network. This is made possible by the NMState operator running in the OpenShift cluster. You can install the NMState operator from the OpenShift OperatorHub.

The NMState operator works with Multus, a CNI plugin for OpenShift that allows pods to communicate with multiple networks. Because we already have an excellent article about Multus, I skip the detailed explanation here and focus instead on how to use NMState and Multus to connect virtual machines to multiple networks.

Overview of NMState components

After you install the NMState operator, three CustomResourceDefinitions (CRDs) are added that let you configure network interfaces on your cluster nodes. You interact with these objects whenever you configure network interfaces on OpenShift nodes.

NodeNetworkState (nodenetworkstates.nmstate.io) establishes one NodeNetworkState (NNS) object for each cluster node. The contents of the object detail the current network state of that node.

NodeNetworkConfigurationPolicies (nodenetworkconfigurationpolicies.nmstate.io) is a policy that tells the NMState operator how to configure network interfaces on groups of nodes. In short, these policies represent the configuration changes made to the OpenShift nodes.

NodeNetworkConfigurationEnactments (nodenetworkconfigurationenactments.nmstate.io) stores the results of each applied NodeNetworkConfigurationPolicy (NNCP) in a NodeNetworkConfigurationEnactment (NNCE) object. There is one NNCE per node, per NNCP.
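One way to confirm that the operator installed correctly is to look for the three CRDs listed above. The following is a minimal sketch, assuming a logged-in `oc` session; the helper name `check_nmstate_crds` is my own and not part of any tool.

```shell
# Sketch: confirm the three NMState CRDs named above are registered.
# Assumes a logged-in `oc` session; `check_nmstate_crds` is a hypothetical helper name.
check_nmstate_crds() {
  missing=0
  for crd in \
    nodenetworkstates.nmstate.io \
    nodenetworkconfigurationpolicies.nmstate.io \
    nodenetworkconfigurationenactments.nmstate.io
  do
    if oc get crd "$crd" >/dev/null 2>&1; then
      echo "found:   $crd"
    else
      echo "missing: $crd"
      missing=1
    fi
  done
  # Non-zero exit status when at least one CRD is absent.
  return "$missing"
}

# Usage: check_nmstate_crds && echo "NMState CRDs are installed"
```

The function returns a non-zero status if any CRD is missing, so it can gate the later steps in a script.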

With these definitions out of the way, you can move on to configuring network interfaces on your OpenShift nodes. The lab hardware I use for this article has three network interfaces. The first is enp1s0, which is already configured during cluster installation using the br-ex bridge. This is the bridge and interface I use in Option #1 below. The second interface, enp2s0, is on the same network as enp1s0, and I use it to configure the OVS bridge br0 in Option #2 below. Finally, the interface enp8s0 is connected to a separate network with no internet access and no DHCP server. I use this interface to configure the Linux bridge br1 in Option #3 below.

Option #1: Using an external network with a single NIC

If your OpenShift nodes have only a single NIC for networking, the only option for connecting virtual machines to the external network is to reuse the br-ex bridge, which is the default on all nodes running in an OVN-Kubernetes cluster. This means that this option may not be available to clusters using the older OpenShift-SDN.

Because it is not possible to completely reconfigure the br-ex bridge without affecting the basic operation of the cluster, you must instead add a local network to that bridge. You can accomplish this with the following NodeNetworkConfigurationPolicy:

    $ cat br-ex-nncp.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br-ex-network
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ''
      desiredState:
        ovn:
          bridge-mappings:
          - localnet: br-ex-network
            bridge: br-ex
            state: present

In most cases, the example above is the same in every environment that adds a local network to the br-ex bridge. The only parts that are commonly changed are the name of the NNCP (.metadata.name) and the name of the localnet (.spec.desiredState.ovn.bridge-mappings). In this example, both are br-ex-network, but the names are arbitrary and need not match each other. The value used for the localnet is needed when you configure the NetworkAttachmentDefinition, so remember it for later!

Apply the NNCP configuration to the cluster nodes:

    $ oc apply -f br-ex-nncp.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br-ex-network

Check the progress of the NNCP and NNCE with these commands:

    $ oc get nncp
    NAME            STATUS        REASON
    br-ex-network   Progressing   ConfigurationProgressing
    $ oc get nnce
    NAME                               STATUS        STATUS AGE   REASON
    lt01ocp10.matt.lab.br-ex-network   Progressing   1s           ConfigurationProgressing
    lt01ocp11.matt.lab.br-ex-network   Progressing   3s           ConfigurationProgressing
    lt01ocp12.matt.lab.br-ex-network   Progressing   4s           ConfigurationProgressing

In this case, the single NNCP called br-ex-network has spawned one NNCE for each node. After a few seconds, the process is complete:

    $ oc get nncp
    NAME            STATUS      REASON
    br-ex-network   Available   SuccessfullyConfigured
    $ oc get nnce
    NAME                               STATUS      STATUS AGE   REASON
    lt01ocp10.matt.lab.br-ex-network   Available   83s          SuccessfullyConfigured
    lt01ocp11.matt.lab.br-ex-network   Available   108s         SuccessfullyConfigured
    lt01ocp12.matt.lab.br-ex-network   Available   109s         SuccessfullyConfigured

You can now move on to the NetworkAttachmentDefinition, which defines how your virtual machines attach to the network you just created.
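Rather than polling `oc get nncp` by hand, a script can block until the policy reports the Available status shown above. This is a sketch, not the only way to do it: the helper name `wait_for_nncp` and the 120-second default timeout are my assumptions.

```shell
# Sketch: block until a NodeNetworkConfigurationPolicy reports Available.
# `wait_for_nncp` is a hypothetical helper; the 120s default timeout is an assumption.
wait_for_nncp() {
  policy="$1"
  timeout="${2:-120s}"
  if oc wait "nncp/$policy" --for=condition=Available --timeout="$timeout"; then
    echo "$policy is Available"
  else
    # On failure, list the per-node enactments to see which node is stuck.
    oc get nnce
    return 1
  fi
}

# Usage: wait_for_nncp br-ex-network
```

If the wait times out, the per-node NNCE listing is usually the quickest pointer to the node that failed to apply the policy.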

Configuring the NetworkAttachmentDefinition

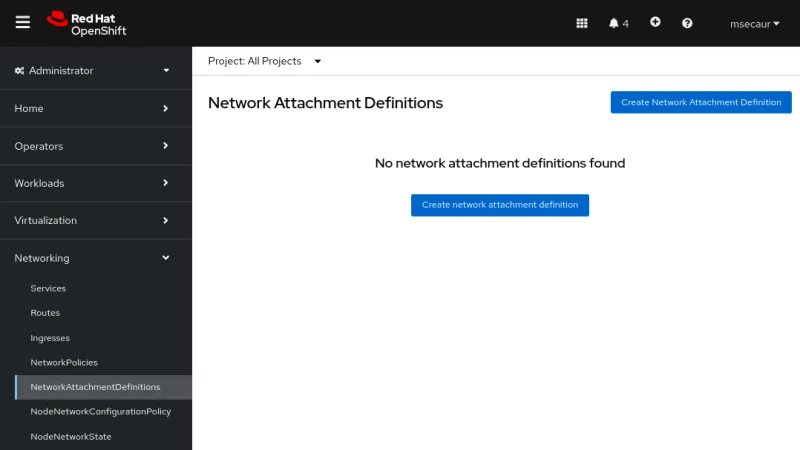

To create a NetworkAttachmentDefinition in the OpenShift console, select the project where you create virtual machines (vmtest in this example) and navigate to Networking > NetworkAttachmentDefinitions. Then click the blue button labeled Create network attachment definition.

The console presents a form that you can use to create the NetworkAttachmentDefinition.

The Name field is arbitrary, but in this example I use the same name I used for the NNCP (br-ex-network). For Network Type, you must choose OVN Kubernetes secondary localnet network.

In the Bridge mapping field, enter the name of the localnet you configured earlier (which is also br-ex-network in this example). Because the field asks for a "bridge mapping", you may be tempted to enter "br-ex", but you must in fact use the localnet you created, which is already connected to br-ex.

Alternatively, you can create the NetworkAttachmentDefinition from a YAML file instead of using the console:

    $ cat br-ex-network-nad.yaml
    apiVersion: k8s.cni.cncf.io/v1
    kind: NetworkAttachmentDefinition
    metadata:
      name: br-ex-network
      namespace: vmtest
    spec:
      config: '{
          "name":"br-ex-network",
          "type":"ovn-k8s-cni-overlay",
          "cniVersion":"0.4.0",
          "topology":"localnet",
          "netAttachDefName":"vmtest/br-ex-network"
        }'
    $ oc apply -f br-ex-network-nad.yaml
    networkattachmentdefinition.k8s.cni.cncf.io/br-ex-network created

In the NetworkAttachmentDefinition YAML above, the name field in .spec.config is the name of the localnet from the NNCP, and netAttachDefName is the namespace/name pair that must match the two corresponding fields in the .metadata section (in this case, vmtest/br-ex-network).
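Because several fields have to agree with each other, it can help to generate the manifest from a single set of inputs. The sketch below renders the same YAML as above from three arguments; `render_localnet_nad` is a hypothetical helper name of my own, and everything else is taken from the manifest shown earlier.

```shell
# Sketch: render a localnet NetworkAttachmentDefinition so that the fields
# that must agree are derived from the same inputs.
# `render_localnet_nad` is a hypothetical helper name.
render_localnet_nad() {
  nad_name="$1"    # .metadata.name of the NetworkAttachmentDefinition
  namespace="$2"   # .metadata.namespace (the project holding the VMs)
  localnet="$3"    # localnet name from the NNCP bridge mapping
  cat <<EOF
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: ${nad_name}
  namespace: ${namespace}
spec:
  config: '{
      "name":"${localnet}",
      "type":"ovn-k8s-cni-overlay",
      "cniVersion":"0.4.0",
      "topology":"localnet",
      "netAttachDefName":"${namespace}/${nad_name}"
    }'
EOF
}

# Usage: render_localnet_nad br-ex-network vmtest br-ex-network | oc apply -f -
```

Deriving netAttachDefName from the namespace and name arguments removes one common source of mismatches when the manifest is copied between projects.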

Virtual machines use static IP addresses or DHCP for IP addressing, so IP address management (IPAM) in a NetworkAttachmentDefinition is not used for virtual machines.

Configuring the virtual machine NIC

To use the new external network with a virtual machine, edit the Network interfaces section of the virtual machine and select the new vmtest/br-ex-network as the Network type. You can also customize the MAC address in this form.
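The same change can be made from the command line by patching the VirtualMachine object. The following is a rough sketch rather than the procedure used in this article: it assumes the VM already has interfaces and networks lists (as VMs created from the default templates do), and the names `vm_nic_patch`, `nic-external`, and `my-vm` are placeholders of mine.

```shell
# Sketch: build a JSON patch that adds a bridged NIC backed by a
# NetworkAttachmentDefinition to an existing VirtualMachine.
# `vm_nic_patch` and the interface name `nic-external` are hypothetical.
vm_nic_patch() {
  nad_ref="$1"               # namespace/name of the NetworkAttachmentDefinition
  nic="${2:-nic-external}"   # must be identical in the interface and network entries
  cat <<EOF
[
  {"op": "add", "path": "/spec/template/spec/domain/devices/interfaces/-",
   "value": {"name": "${nic}", "bridge": {}}},
  {"op": "add", "path": "/spec/template/spec/networks/-",
   "value": {"name": "${nic}", "multus": {"networkName": "${nad_ref}"}}}
]
EOF
}

# Usage (the VM name `my-vm` is a placeholder):
#   oc patch vm my-vm -n vmtest --type=json -p "$(vm_nic_patch vmtest/br-ex-network)"
```

The interface entry and the network entry are linked only by their shared name, which is why the helper takes it as a single argument.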

Continue creating the virtual machine as you normally would. After the virtual machine boots, its virtual NIC is connected to the external network. In this example, the external network has a DHCP server, so an IP address is assigned automatically and network access is available.

Removing the NetworkAttachmentDefinition and the localnet on br-ex

If you want to undo the steps above, first make sure that no virtual machine is using the NetworkAttachmentDefinition, then delete it using the console. Alternatively, use the command:

    $ oc delete network-attachment-definition/br-ex-network -n vmtest
    networkattachmentdefinition.k8s.cni.cncf.io "br-ex-network" deleted

Next, delete the NodeNetworkConfigurationPolicy. Deleting the policy does not undo the changes on the OpenShift nodes!

    $ oc delete nncp/br-ex-network
    nodenetworkconfigurationpolicy.nmstate.io "br-ex-network" deleted

Deleting the NNCP also deletes all associated NNCE resources:

    $ oc get nnce
    No resources found

Finally, edit the NNCP YAML file used earlier, changing the bridge mapping state from present to absent:

    $ cat br-ex-nncp.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br-ex-network
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ''
      desiredState:
        ovn:
          bridge-mappings:
          - localnet: br-ex-network
            bridge: br-ex
            state: absent # Changed from present

Reapply the updated NNCP:

    $ oc apply -f br-ex-nncp.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br-ex-network
    $ oc get nncp
    NAME            STATUS      REASON
    br-ex-network   Available   SuccessfullyConfigured
    $ oc get nnce
    NAME                               STATUS      STATUS AGE   REASON
    lt01ocp10.matt.lab.br-ex-network   Available   2s           SuccessfullyConfigured
    lt01ocp11.matt.lab.br-ex-network   Available   29s          SuccessfullyConfigured
    lt01ocp12.matt.lab.br-ex-network   Available   30s          SuccessfullyConfigured

The localnet configuration has now been removed. You can safely delete the NNCP.
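The first step of this teardown, confirming that no virtual machine still uses the NetworkAttachmentDefinition, is easy to get wrong by eye in a busy project. Here is one possible check, assuming the VMs reference the NAD either by bare name or as namespace/name; the helper name `vms_using_nad` is hypothetical.

```shell
# Sketch: list VirtualMachines in a namespace that still reference a given
# NetworkAttachmentDefinition. `vms_using_nad` is a hypothetical helper name.
vms_using_nad() {
  namespace="$1"
  nad_name="$2"
  # Print "<vm-name><TAB><space-separated multus network names>" per VM,
  # then keep only the VMs whose networks mention the NAD.
  oc get vm -n "$namespace" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.spec.networks[*].multus.networkName}{"\n"}{end}' |
    awk -F '\t' -v ns="$namespace" -v nad="$nad_name" '
      {
        n = split($2, refs, " ")
        for (i = 1; i <= n; i++)
          if (refs[i] == nad || refs[i] == ns "/" nad) { print $1; break }
      }'
}

# Usage: [ -z "$(vms_using_nad vmtest br-ex-network)" ] && echo "safe to delete"
```

An empty result means no VM definition in that project refers to the NAD, so deleting it should not strand a virtual NIC.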

Option #2: Using an external network with an OVS bridge on a dedicated NIC

OpenShift nodes can be connected to multiple networks that use different physical NICs. Although many configuration options exist (such as bonding and VLANs), in this example I simply use a dedicated NIC to configure an OVS bridge. For more information about advanced configuration options, such as creating bonds or using a VLAN, refer to our documentation.

You can see all node interfaces using this command (the output is truncated here for convenience):

    $ oc get nns/lt01ocp10.matt.lab -o jsonpath='{.status.currentState.interfaces[3]}' | jq
    {
      …
      "mac-address": "52:54:00:92:BB:00",
      "max-mtu": 65535,
      "min-mtu": 68,
      "mtu": 1500,
      "name": "enp2s0",
      "permanent-mac-address": "52:54:00:92:BB:00",
      "profile-name": "Wired connection 1",
      "state": "up",
      "type": "ethernet"
    }

In this example, the unused network adapter on all nodes is enp2s0.
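The command above picks one interface by its position in the list, which can differ between nodes. As an alternative sketch, the following prints a compact name, type, and state summary for every interface in the NNS; the helper name `list_node_interfaces` is my own.

```shell
# Sketch: print name, type, and state for every interface a node reports in
# its NodeNetworkState. `list_node_interfaces` is a hypothetical helper name.
list_node_interfaces() {
  node="$1"
  oc get "nns/$node" \
    -o jsonpath='{range .status.currentState.interfaces[*]}{.name}{"\t"}{.type}{"\t"}{.state}{"\n"}{end}'
}

# Usage: show only the ethernet interfaces on one node.
#   list_node_interfaces lt01ocp10.matt.lab | awk -F '\t' '$2 == "ethernet"'
```

Filtering on the type column makes it easier to spot a physical NIC that is not yet attached to any bridge.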

As in the previous example, start with an NNCP that creates a new OVS bridge called br0 on the nodes, using an unused NIC (enp2s0, in this example). The NNCP looks like this:

    $ cat br0-worker-ovs-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br0-ovs
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br0
          description: |-
            A dedicated OVS bridge with enp2s0 as a port
            allowing all VLANs and untagged traffic
          type: ovs-bridge
          state: up
          bridge:
            options:
              stp: true
            port:
            - name: enp2s0
        ovn:
          bridge-mappings:
          - localnet: br0-network
            bridge: br0
            state: present

The first thing to note about the example above is the .metadata.name field, which is arbitrary and identifies the name of the NNCP. You can also see, near the end of the file, that you add a localnet bridge mapping to the new br0 bridge, just as you did with br-ex in the single NIC example.

In the previous example using br-ex as the bridge, it was safe to assume that all OpenShift nodes have a bridge with that name, so you applied the NNCP to all worker nodes. In the current scenario, however, it is possible to have heterogeneous nodes with different NIC names for the same networks. In that case, you must add a label to each node type to identify its configuration. Then, using the .spec.nodeSelector in the example above, you can apply the configuration only to the nodes that work with it. For other node types, you can modify the NNCP and the nodeSelector to create the same bridge on those nodes, even when the underlying NIC name is different.

In this example, the NIC names are all the same, so you can use the same NNCP for all nodes.
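For a heterogeneous cluster, the labeling step described above might look like the following sketch. The label key `example.com/nic-layout` and its values are invented for illustration; any label scheme that distinguishes the node types would do.

```shell
# Sketch: tag nodes by NIC layout so that each NNCP can target one layout.
# The label key `example.com/nic-layout` and its values are hypothetical.
label_nic_layout() {
  layout="$1"; shift
  for node in "$@"; do
    oc label node "$node" "example.com/nic-layout=$layout" --overwrite
  done
}

# Usage: label_nic_layout enp2s0 lt01ocp10.matt.lab lt01ocp11.matt.lab
# The matching NNCP would then use, under .spec.nodeSelector:
#   example.com/nic-layout: enp2s0
```

Each node type then gets its own NNCP, identical except for the nodeSelector value and the port name under the bridge.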

Now apply the NNCP, just as you did for the single NIC example:

    $ oc apply -f br0-worker-ovs-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br0-ovs created

As before, the NNCP and NNCE take some time to be created and applied successfully. You can see the new bridge in the NNS:

    $ oc get nns/lt01ocp10.matt.lab -o jsonpath='{.status.currentState.interfaces[3]}' | jq
    {
      …
        "port": [
          {
            "name": "enp2s0"
          }
        ]
      },
      "description": "A dedicated OVS bridge with enp2s0 as a port allowing all VLANs and untagged traffic",
      …
      "name": "br0",
      "ovs-db": {
        "external_ids": {},
        "other_config": {}
      },
      "profile-name": "br0-br",
      "state": "up",
      "type": "ovs-bridge",
      "wait-ip": "any"
    }

Configuring the NetworkAttachmentDefinition

The process of creating a NetworkAttachmentDefinition for an OVS bridge is identical to the earlier single NIC example, because in both cases you are creating an OVN bridge mapping. In the current example, the bridge mapping name is br0-network, so that is what you use in the NetworkAttachmentDefinition creation form.

Alternatively, you can create the NetworkAttachmentDefinition using a YAML file:

    $ cat br0-network-nad.yaml
    apiVersion: k8s.cni.cncf.io/v1
    kind: NetworkAttachmentDefinition
    metadata:
      name: br0-network
      namespace: vmtest
    spec:
      config: '{
          "name":"br0-network",
          "type":"ovn-k8s-cni-overlay",
          "cniVersion":"0.4.0",
          "topology":"localnet",
          "netAttachDefName":"vmtest/br0-network"
        }'
    $ oc apply -f br0-network-nad.yaml
    networkattachmentdefinition.k8s.cni.cncf.io/br0-network created

Configuring the virtual machine NIC

As before, create a virtual machine as usual, using the new NetworkAttachmentDefinition called vmtest/br0-network for the NIC network.

When the virtual machine boots, the NIC uses the br0 bridge on the node. In this example, the dedicated NIC for br0 is on the same network as the br-ex bridge, so you get an IP address from the same subnet as before, and network access is available.

Removing the NetworkAttachmentDefinition and the OVS bridge

The process for removing the NetworkAttachmentDefinition and the OVS bridge is largely the same as in the previous example. Verify that no virtual machine is using the NetworkAttachmentDefinition, then delete it from the console or the command line:

    $ oc delete network-attachment-definition/br0-network -n vmtest
    networkattachmentdefinition.k8s.cni.cncf.io "br0-network" deleted

Next, delete the NodeNetworkConfigurationPolicy (remember, deleting the policy does not undo the changes on the OpenShift nodes):

    $ oc delete nncp/br0-ovs
    nodenetworkconfigurationpolicy.nmstate.io "br0-ovs" deleted

Deleting the NNCP also deletes the associated NNCE resources:

    $ oc get nnce
    No resources found

Finally, edit the NNCP YAML file used earlier, changing the interface state from "up" to "absent" and the bridge mapping state from "present" to "absent":

    $ cat br0-worker-ovs-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br0-ovs
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br0
          description: |-
            A dedicated OVS bridge with enp2s0 as a port
            allowing all VLANs and untagged traffic
          type: ovs-bridge
          state: absent # Changed from up
          bridge:
            options:
              stp: true
            port:
            - name: enp2s0
        ovn:
          bridge-mappings:
          - localnet: br0-network
            bridge: br0
            state: absent # Changed from present

Reapply the updated NNCP. Once the NNCP has been processed successfully, you can delete it.

    $ oc apply -f br0-worker-ovs-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br0-ovs created

Option #3: Using an external network with a Linux bridge on a dedicated NIC

In Linux networking, OVS bridges and Linux bridges serve the same purpose. Ultimately, the choice between them comes down to the needs of the environment. Countless articles on the internet discuss the advantages and disadvantages of both bridge types. In short, Linux bridges are more mature and simpler than OVS bridges, but they are not as feature rich. OVS bridges offer more tunnel types and other modern features than Linux bridges, but they are a bit harder to troubleshoot. For the purposes of OpenShift Virtualization, you should probably default to OVS bridges over Linux bridges because of features such as MultiNetworkPolicy, but deployments can succeed with either option.

Note that when a virtual machine interface is connected to an OVS bridge, the default MTU is 1400. When a virtual machine interface is connected to a Linux bridge, the default MTU is 1500. You can find more information about the cluster MTU size in the official documentation.

Node configuration

As in the previous example, we use a dedicated NIC to create a new Linux bridge. Note that I connect the Linux bridge to a network that has no DHCP server, so you can see how this affects virtual machines attached to that network.

In this example, I create a Linux bridge called br1 on the interface enp8s0, which is attached to the 172.16.0.0/24 network with no internet access and no DHCP server. The NNCP looks like this:

    $ cat br1-worker-linux-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br1-linux-bridge
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br1
          description: Linux bridge with enp8s0 as a port
          type: linux-bridge
          state: up
          bridge:
            options:
              stp:
                enabled: false
            port:
            - name: enp8s0
    $ oc apply -f br1-worker-linux-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br1-linux-bridge created

As before, it takes a few seconds for the NNCP and NNCE to be created and applied successfully.

Configuring the NetworkAttachmentDefinition

The process of creating a NetworkAttachmentDefinition for a Linux bridge differs slightly from the two earlier examples, because this time you are not connecting to an OVN localnet. For a Linux bridge connection, you connect directly to the new bridge.

In the NetworkAttachmentDefinition creation form, select the Network Type of CNV Linux bridge and enter the name of the actual bridge in the Bridge name field, which is br1 in this example.
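For symmetry with the first two options, here is a rough YAML equivalent of that form, written out by a small shell helper. Treat it as a sketch under assumptions: the CNI type "bridge" and cniVersion "0.3.1" do not come from this article, and some OpenShift Virtualization releases use the type "cnv-bridge" instead, so compare it with what the console generates on your cluster. The helper name `write_br1_nad` is mine; the NAD name br1-network and the vmtest project match the ones used in the next section.

```shell
# Sketch: write a Linux bridge NetworkAttachmentDefinition to a file.
# ASSUMPTIONS: the CNI type "bridge" and cniVersion "0.3.1" are not from
# this article; some OpenShift Virtualization releases use "cnv-bridge".
# `write_br1_nad` is a hypothetical helper name.
write_br1_nad() {
  cat > "${1:-br1-network-nad.yaml}" <<'EOF'
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: br1-network
  namespace: vmtest
spec:
  config: '{
      "name":"br1-network",
      "type":"bridge",
      "cniVersion":"0.3.1",
      "bridge":"br1"
    }'
EOF
}

# Usage: write_br1_nad && oc apply -f br1-network-nad.yaml
```

Unlike the localnet examples, the config names the node bridge (br1) directly, with no bridge mapping in between.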

Configuring the virtual machine NIC

Now create another virtual machine, but use the new NetworkAttachmentDefinition called vmtest/br1-network for the NIC network. This attaches the NIC to the new Linux bridge.

When the virtual machine boots, the NIC uses the br1 bridge on the node. In this example, the dedicated NIC is on a network with no DHCP server and no internet access, so assign the NIC a manual IP address using nmcli and validate connectivity on the local network only.
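Inside the guest, the manual addressing step could look like the sketch below. Only the 172.16.0.0/24 network comes from this article; the connection profile name, the address 172.16.0.10, and the ping target are placeholders that you would replace with values from your own environment.

```shell
# Sketch: assign a static IPv4 address inside the guest with nmcli.
# ASSUMPTIONS: the connection name and the address 172.16.0.10/24 are
# placeholders; only the 172.16.0.0/24 network comes from the article.
set_static_ip() {
  conn="$1"   # NetworkManager connection profile for the new NIC
  addr="$2"   # address in CIDR form
  nmcli connection modify "$conn" ipv4.method manual ipv4.addresses "$addr" &&
    nmcli connection up "$conn"
}

# Usage (run inside the VM, typically as root):
#   nmcli connection show                      # find the profile name
#   set_static_ip "Wired connection 2" 172.16.0.10/24
#   ping -c 3 172.16.0.1                       # hypothetical peer on the same network
```

Because this network has no gateway to the internet, a ping to another host on 172.16.0.0/24 is the relevant connectivity test.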

Removing the NetworkAttachmentDefinition and the Linux bridge

As in the previous examples, to remove the NetworkAttachmentDefinition and the Linux bridge, first make sure that no virtual machine is using the NetworkAttachmentDefinition. Delete it from the console or the command line:

    $ oc delete network-attachment-definition/br1-network -n vmtest
    networkattachmentdefinition.k8s.cni.cncf.io "br1-network" deleted

Next, delete the NodeNetworkConfigurationPolicy:

    $ oc delete nncp/br1-linux-bridge
    nodenetworkconfigurationpolicy.nmstate.io "br1-linux-bridge" deleted

Deleting the NNCP also deletes all associated NNCE resources (remember, deleting the policy does not undo the changes on the OpenShift nodes):

    $ oc get nnce
    No resources found

Finally, edit the NNCP YAML file, changing the interface state from up to absent:

    $ cat br1-worker-linux-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br1-linux-bridge
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br1
          description: Linux bridge with enp8s0 as a port
          type: linux-bridge
          state: absent # Changed from up
          bridge:
            options:
              stp:
                enabled: false
            port:
            - name: enp8s0

Reapply the updated NNCP. Once the NNCP has been processed successfully, it can be deleted.

    $ oc apply -f br1-worker-linux-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br1-linux-bridge created

Advanced networking in OpenShift Virtualization

Many OpenShift Virtualization users can benefit from the advanced networking features already built into Red Hat OpenShift. However, as more workloads are migrated from traditional hypervisors, you may want to leverage existing infrastructure. This may require connecting OpenShift Virtualization workloads directly to their external networks. The NMState operator, together with Multus networking, provides a flexible way to achieve the performance and connectivity needed to ease your transition from a traditional hypervisor to Red Hat OpenShift Virtualization.

To learn more about OpenShift Virtualization, see another blog post on the topic or look at the product on our website. For more information about the networking topics covered in this article, the Red Hat OpenShift documentation has all the details you need. Finally, if you would like to see a demo or try OpenShift Virtualization yourself, contact your account executive.

About the author

Matthew Secaur is a Red Hat Principal Technical Account Manager (TAM) for Canada and the Northeast United States. He has expertise in Red Hat OpenShift Platform, Red Hat OpenShift Virtualization, Red Hat OpenStack Platform, and Red Hat Ceph Storage.
