Automation Controller API:

Automation controller has a rich RESTful API. REST stands for Representational State Transfer and is sometimes written as "ReST". It relies on a stateless, client-server, cacheable communications protocol, usually HTTP. REST APIs provide access to resources (data entities) via URI paths. You can browse the automation controller REST API in a web browser at: http://<server name>/api/

API Usage:

Many automation controller customers use the API to build their own event-driven automation solutions that draw data from their environment and then trigger jobs in automation controller. This type of architecture can generate a very high frequency and volume of requests, pushing the controller API to its breaking point.

The API is the most straightforward way for an external system to interact with automation controller. External systems and tools integrate with the API mainly to launch jobs and retrieve job results. In addition, inventory information is often stored in an external system and pushed to automation controller through the API. Because enterprises manage thousands of hosts with automation controller, the number of API calls can be huge. The load can arrive as a short burst of many calls or as a sustained stream of calls over a long period. In both cases, it can push the API to the point where it starts rejecting requests. Both patterns are common among enterprise customers of Red Hat Ansible Automation Platform.

API Usage Issues

Customers that run this kind of high-frequency, high-concurrency automation against the automation controller API have run into its limits. Automation controller can serve only a limited number of concurrent API requests: in our experiments, with 16 uWSGI workers, it handled about 150 concurrent API requests. In the past, to overcome these limits, we recommended that customers horizontally scale the control plane and rate limit clients. See: Scaling Automation Controller for API Driven Workloads. Most of those recommendations aimed either to let automation controller handle more concurrent web requests or to help the customer make fewer web requests.

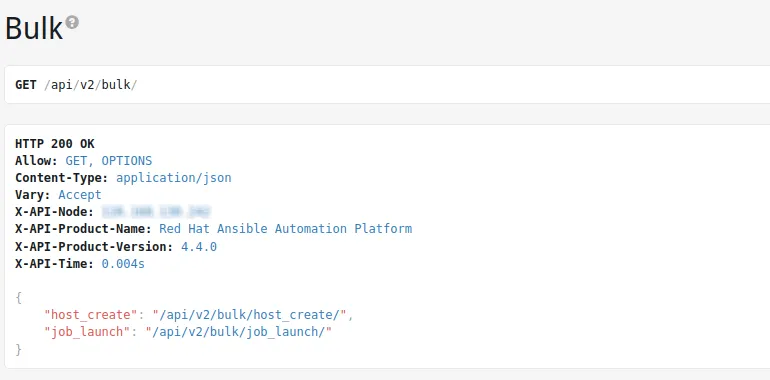

What is Bulk API

Bulk API optimizes your automation controller operations by consolidating tasks. It is designed to achieve the same outcomes with fewer API calls, improving efficiency and performance. The feature is included in Ansible Automation Platform 2.4 as a tech preview, with two endpoints that each implement one bulk operation: adding multiple hosts to an inventory, and launching multiple jobs. Based on feedback, we may add more operations in the future.

/api/v2/bulk/host_create/

/api/v2/bulk/job_launch/

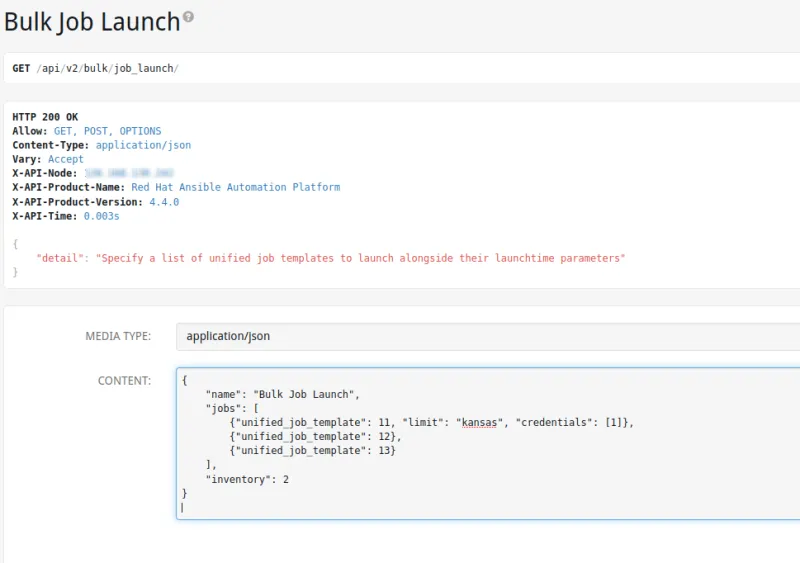

Bulk Job Launch

Bulk job launch lets a single web request launch multiple jobs. It creates a workflow job whose nodes are the individual jobs. It also accepts promptable fields such as inventory and credentials.

The following is an example of a POST request to /api/v2/bulk/job_launch/:

    {
        "name": "Bulk Job Launch",
        "jobs": [
            {"unified_job_template": 7},
            {"unified_job_template": 8},
            {"unified_job_template": 9}
        ]
    }

The above will launch a workflow job with 3 workflow nodes in it.
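The request above can be sketched in Python with only the standard library. This is a minimal illustration, not the official client: the host name `controller.example.com`, the token value, and the helper name `build_bulk_launch_request` are hypothetical, and the sketch assumes the controller accepts an OAuth2 token in a Bearer header. The request is built but not sent, so the example runs without a controller.

```python
import json
import urllib.request


def build_bulk_launch_request(base_url, token, name, template_ids):
    """Build (but do not send) a POST request for /api/v2/bulk/job_launch/."""
    payload = {
        "name": name,
        "jobs": [{"unified_job_template": tid} for tid in template_ids],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/v2/bulk/job_launch/",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: an OAuth2 token passed as a Bearer header.
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Hypothetical host and token, for illustration only.
request = build_bulk_launch_request(
    "https://controller.example.com",
    "HYPOTHETICAL_TOKEN",
    "Bulk Job Launch",
    [7, 8, 9],
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# To actually send it (requires a reachable controller):
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Separating request construction from sending keeps the payload easy to inspect and test before it reaches a real controller.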

The maximum number of jobs allowed to be launched in one bulk launch is controlled by the setting BULK_JOB_MAX_LAUNCH. The default is set to 100 jobs. This can be modified from /api/v2/settings/bulk/.
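A client that needs to launch more jobs than the limit allows can split them across several bulk requests. The sketch below assumes the default limit of 100; the helper names `chunk_jobs` and `build_payloads` and the numbered workflow names are hypothetical choices for illustration.

```python
def chunk_jobs(jobs, max_launch=100):
    """Split a list of job entries into batches of at most max_launch."""
    if max_launch < 1:
        raise ValueError("max_launch must be at least 1")
    return [jobs[i:i + max_launch] for i in range(0, len(jobs), max_launch)]


def build_payloads(name, jobs, max_launch=100):
    """Return one bulk job launch payload per batch of jobs."""
    batches = chunk_jobs(jobs, max_launch)
    return [
        # Hypothetical naming scheme: number each parent workflow job.
        {"name": f"{name} ({index}/{len(batches)})", "jobs": batch}
        for index, batch in enumerate(batches, start=1)
    ]


# 1000 launches of (hypothetical) job template 7 become 10 bulk requests.
jobs = [{"unified_job_template": 7} for _ in range(1000)]
payloads = build_payloads("Bulk Job Launch", jobs)
print(len(payloads), "requests,", len(payloads[0]["jobs"]), "jobs each")
```

Each payload produces its own parent workflow job, so 1000 jobs launched this way result in 10 workflow jobs rather than one.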

Important Note: A bulk job launch generates a workflow job that contains the requested jobs. This parent workflow job is visible in the jobs section of the UI only to system and organization administrators, although the individual jobs within a bulk job can be seen by any user who would normally be able to see them.

If the job template has fields marked as prompt on launch, those can be provided for each job in the bulk job launch as well:

    {
        "name": "Bulk Job Launch",
        "jobs": [
            {"unified_job_template": 11, "limit": "kansas", "credentials": [1], "inventory": 1}
        ]
    }

In the above example, job template 11 has limit, credentials, and inventory marked as prompt on launch, and those are provided as parameters to the job.

Prompted field values can also be provided at the top level. For example:

    {
        "name": "Bulk Job Launch",
        "jobs": [
            {"unified_job_template": 11, "limit": "kansas", "credentials": [1]},
            {"unified_job_template": 12},
            {"unified_job_template": 13}
        ],
        "inventory": 2
    }

In the above example, inventory 2 is used for each of the job templates (11, 12, and 13) in which inventory is marked as prompt on launch.

RBAC For Bulk Job Launch

Who can bulk launch?

Anyone who is logged in and has access to view the job template can view the launch point. To launch a unified_job_template, you need either the update or the execute permission, depending on the type of unified job (job template, project update, and so on).

Launching using the bulk endpoint results in a workflow job. For auditing purposes, in general we require the client to include an organization in the bulk job launch payload. This organization is used to assign an organization to the resulting workflow job. The logic for assigning this organization is as follows:

- Super users may assign any organization or none. If they do not assign one, they will be the only user able to see the parent workflow.

- Users that are members of exactly 1 organization do not need to specify an organization, as their single organization will be used to assign to the resulting workflow.

- Users that are members of multiple organizations must specify the organization to assign to the resulting workflow. If they do not specify, an error will be returned indicating this requirement.

Example of specifying the organization:

    {
        "name": "Bulk Job Launch with org specified",
        "jobs": [
            {"unified_job_template": 12},
            {"unified_job_template": 13}
        ],
        "organization": 2
    }
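The organization rules listed above can be mirrored in a small client-side check, which helps a client decide whether it must include `organization` before it sends a request. This is a sketch of the rules as described here, not the controller's actual implementation; the function name `resolve_organization` is hypothetical, and the membership check for regular users is an assumption that the text does not spell out.

```python
def resolve_organization(is_superuser, member_org_ids, requested_org=None):
    """Return the organization the resulting workflow job would be assigned.

    Returns None when a superuser assigns no organization.
    Raises ValueError when an organization is required but missing.
    """
    if is_superuser:
        # Superusers may assign any organization, or none at all.
        return requested_org
    if requested_org is not None:
        # Assumption: regular users may only name an organization they belong to.
        if requested_org not in member_org_ids:
            raise ValueError("user is not a member of the requested organization")
        return requested_org
    if len(member_org_ids) == 1:
        # Members of exactly one organization get it assigned implicitly.
        return next(iter(member_org_ids))
    raise ValueError("an organization must be specified in the payload")


print(resolve_organization(True, [], None))       # superuser, no organization
print(resolve_organization(False, [2], None))     # single membership
print(resolve_organization(False, [1, 2], 2))     # multiple memberships, explicit
```

Running the check on the client avoids a round trip that would only return the "organization required" error.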

Who can see bulk jobs that have been run?

System and organization admins will see bulk jobs in the workflow jobs list and the unified jobs list. They can additionally see these individual workflow jobs.

Regular users can only see the individual workflow jobs that were launched by their bulk job launch. These jobs do not appear in the unified jobs list, nor do they show in the workflow jobs list. This is important because the response to a bulk job launch includes a link to the parent workflow job.

Bulk Host Create

Bulk host create lets a single web request create multiple hosts in an inventory.

The following is an example of a POST request to /api/v2/bulk/host_create/:

    {
        "inventory": 1,
        "hosts": [
            {"name": "host1", "variables": "ansible_connection: local"},
            {"name": "host2"},
            {"name": "host3"},
            {"name": "host4"},
            {"name": "host5"},
            {"name": "host6"}
        ]
    }

The above will add 6 hosts in the inventory.

The setting BULK_HOST_MAX_CREATE controls the number of hosts that a user can add in one request. The default is 100 hosts. In addition, Nginx limits the maximum payload size, and a request that posts a large number of hosts with variable data attached is quite likely to hit that limit. The maximum payload size is 1MB unless overridden in your Nginx config.
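Both limits can be respected on the client by batching hosts by count and by serialized size. The following is a minimal sketch under stated assumptions: the helper names `body_size` and `batch_hosts` are hypothetical, the host limit is the default of 100, and the byte limit is set to a conservative 1,000,000 bytes to stay under a 1MB Nginx limit.

```python
import json

MAX_HOSTS = 100             # default BULK_HOST_MAX_CREATE
MAX_BODY_BYTES = 1_000_000  # assumption: conservative value below 1MB


def body_size(inventory_id, hosts):
    """Size in bytes of the JSON body for one bulk host create request."""
    body = {"inventory": inventory_id, "hosts": hosts}
    return len(json.dumps(body).encode("utf-8"))


def batch_hosts(inventory_id, hosts, max_hosts=MAX_HOSTS, max_bytes=MAX_BODY_BYTES):
    """Split hosts into payloads that respect both the count and size limits.

    A single host larger than max_bytes still gets its own payload; the
    caller has to handle that case separately. The body is re-serialized
    for every host, which is fine for batches of this size.
    """
    batches, current = [], []
    for host in hosts:
        candidate = current + [host]
        too_many = len(candidate) > max_hosts
        too_big = body_size(inventory_id, candidate) > max_bytes
        if current and (too_many or too_big):
            batches.append(current)
            current = [host]
        else:
            current = candidate
    if current:
        batches.append(current)
    return [{"inventory": inventory_id, "hosts": batch} for batch in batches]


small = [{"name": f"host{i}"} for i in range(250)]
print([len(p["hosts"]) for p in batch_hosts(1, small)])

# Hosts with large variable data are limited by size rather than by count.
big = [{"name": f"big{i}", "variables": "x" * 300_000} for i in range(10)]
print([len(p["hosts"]) for p in batch_hosts(1, big)])
```

If your Nginx config raises the payload limit, or BULK_HOST_MAX_CREATE is changed, pass the matching values as `max_bytes` and `max_hosts`.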

Performance Improvements:

The bulk API delivers significant performance gains compared with launching jobs through the normal job launch endpoint (/api/v2/job_templates/<id>/launch).

To evaluate these gains, we launched 100 and 1000 jobs through the job template launch endpoint and the same number of jobs through the bulk API endpoint. For each run we compared two measurements: the time the client spent launching the jobs, and the time until all jobs finished.

We executed these requests both as a superuser and as a normal user with appropriate permissions, because the additional complexity of evaluating RBAC rules can affect request performance. We used Grafana k6 to drive the requests. The results are shown in the following table (see the note below the table).

Job Launch Results:

| Launch Method | Number of jobs | Number of requests necessary | Wall clock time | Created Time | Finished Time | Total Time Taken |
|---|---|---|---|---|---|---|
| Serial BulkJob Launch | 100 | 1 | 0m01.2s | 17:52:40 | 17:58:34 | 5m54s |
| Serial Normal Job Launch | 100 | 100 | 02m42.9s | 09:19:26 | 09:24:47 | 5m21s |
| 16 Concurrent Client Normal Job Launch | 100 | 100 | 01m43.1s | 10:11:21 | 10:16:39 | 5m18s |
| Serial BulkJob Launch | 1000 | 10 | 0m01.5s | 08:44:43 | 09:47:18 | 1h2m35s |
| Serial Normal Job Launch | 1000 | 1000 | 10m42.9s | 07:52:47 | 07:55:36 | 1h2m49s |
| 16 Concurrent Client Normal Job Launch | 1000 | 1000 | 08m43.1s | 09:18:16 | 10:20:38 | 1h2m22s |

Note: We compare the bulk API both with serial "normal" requests and with requests made by 16 concurrent clients. By default, a single controller instance has 16 web server workers able to handle requests, so making 16 launch requests concurrently is the fastest that a single instance can process web requests.

The wall clock time column shows that launching jobs through the bulk API is roughly 85 to 430 times faster for the client than launching them through the normal job launch endpoint. The total time until all jobs finish stays about the same in every case.
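The ratios behind this comparison can be recomputed directly from the wall clock times in the job launch table. The sketch below only parses those values and divides them; the helper name `to_seconds` and the dictionary keys are hypothetical labels for the table rows.

```python
import re


def to_seconds(text):
    """Parse a duration written like '02m42.9s' or '0m01.2s' into seconds."""
    match = re.fullmatch(r"(\d+)m(\d+(?:\.\d+)?)s", text)
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    return int(match.group(1)) * 60 + float(match.group(2))


# Wall clock times copied from the job launch results table.
launch_times = {
    100: {"bulk": "0m01.2s", "serial": "02m42.9s", "concurrent16": "01m43.1s"},
    1000: {"bulk": "0m01.5s", "serial": "10m42.9s", "concurrent16": "08m43.1s"},
}

speedups = {}
for job_count, times in launch_times.items():
    bulk_seconds = to_seconds(times["bulk"])
    for method in ("serial", "concurrent16"):
        ratio = to_seconds(times[method]) / bulk_seconds
        speedups[(job_count, method)] = ratio
        print(f"{job_count} jobs, bulk vs {method}: {ratio:.0f}x faster")
```

The smallest ratio comes from the 100-job run against 16 concurrent clients and the largest from the 1000-job serial run, which is why the gain is better described as a range than as a single figure.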

Host Create Results:

| Create method | Number of hosts | Number of requests necessary | Wall clock time | User type |
|---|---|---|---|---|
| BulkHostCreate | 100 | 1 | 0m0.1s | admin |
| BulkHostCreate | 100 | 1 | 0m0.2s | regular |
| Manual | 100 | 100 | 0m12.0s | admin |
| Manual | 100 | 100 | 0m12.1s | regular |
| BulkHostCreate | 1000 | 1 | 0m0.7s | admin |
| BulkHostCreate | 1000 | 1 | 0m0.7s | regular |
| Manual | 1000 | 1000 | 2m01.2s | admin |
| Manual | 1000 | 1000 | 2m03.8s | regular |
| BulkHostCreate | 10000 | 10 | 0m06.7s | admin |
| BulkHostCreate | 10000 | 10 | 0m06.7s | regular |
| BulkHostCreate | 100000 | 100 | 2m02.3s | admin |
| BulkHostCreate | 100000 | 100 | 2m05.5s | regular |

The wall clock time column shows that adding hosts through bulk host create is roughly 60 to 175 times faster than adding them one at a time through the /api/v2/hosts/ endpoint.

Takeaways & where to go next

The bulk API lets you make fewer API calls to do the same work you were already doing on automation controller. That reduces the load on the automation controller API and makes it less likely to reach a breaking point.

If you're interested in detailed information on automation controller, then the automation controller documentation is a must-read. To download and install the latest version, please visit the automation controller installation guide. To view the release notes of recent automation controller releases, please visit release notes 4.4. If you are interested in more details about Ansible Automation Platform, be sure to check out our e-books.
