Kubernetes is a popular choice among many developers for application deployments, and many of these deployments can benefit from a shared storage layer with greater persistency. Red Hat OpenShift Service on AWS (ROSA) is a managed OpenShift integration on AWS developed by Red Hat and jointly supported by AWS and Red Hat.

ROSA clusters typically store data on locally attached Amazon Elastic Block Store (EBS) volumes. Some customers need the underlying data to be persistent and shared across multiple containers, including containers deployed across multiple Availability Zones (AZs). These customers are looking for a storage solution that scales automatically and provides a more consistent interface to run workloads across on-prem and cloud environments.

ROSA offers an integration with Amazon FSx for NetApp ONTAP NAS – a scalable, fully managed shared storage service built on NetApp's ONTAP file system. With FSx for ONTAP, customers have access to popular ONTAP features like snapshots, FlexClones, cross-region replication with SnapMirror, and a highly available file server with seamless failover.

FSx for ONTAP NAS is integrated with the NetApp Trident driver, a dynamic Container Storage Interface (CSI) driver that handles Kubernetes Persistent Volume Claims (PVCs) on storage disks. The Trident CSI driver manages on-demand provisioning of storage volumes across different deployment environments and makes it easier to scale and protect data for your applications.

In this blog, I demonstrate the use of FSx for ONTAP as a persistent storage layer for ROSA applications. I'll walk through a step-by-step installation of the NetApp Trident CSI driver on a ROSA cluster, provision an FSx for ONTAP NAS file system, deploy a sample stateful application, and demonstrate pod scaling across multi-AZ nodes using dynamic persistent volumes. Finally, I'll cover backup and restore for your application. With this solution, you can set up shared storage that spans AZs and makes it easier to scale, protect, and restore your data using the Trident CSI driver.

Prerequisites

You need the following resources:

- AWS account

- A Red Hat account

- IAM user with appropriate permissions to create and access ROSA cluster

- AWS CLI

- ROSA CLI

- OpenShift command-line interface (oc)

- Helm 3

- A ROSA cluster

- Access to Red Hat OpenShift web console

The diagram below shows the ROSA cluster deployed in multiple availability zones (AZs). The ROSA cluster's master nodes, infrastructure nodes and worker nodes run in a private subnet of a customer's Virtual Private Cloud (VPC). You'll create an FSx for ONTAP NAS file system within the same VPC and install the Trident driver in the ROSA cluster, allowing all the subnets of this VPC to connect to the file system.

Figure 1 – ROSA integration with Amazon FSx for NetApp ONTAP NAS

1. Create a ROSA cluster and clone the GitHub repository

First, install the ROSA cluster. Use Git to clone the GitHub repository. If you don't have Git, install it with the following command:

sudo dnf install git

Clone the Git repository:

git clone https://github.com/aws-samples/rosa-fsx-netapp-ontap.git

2. Provision FSx for ONTAP

Create a multi-AZ FSx for ONTAP NAS file system in the same VPC as your ROSA cluster.

If you want to provision the file system with a different storage capacity and throughput, you can override the default values by setting the StorageCapacity and ThroughputCapacity parameters in the AWS CloudFormation template.

The value for FSxAllowedCIDR is the Classless Inter-Domain Routing (CIDR) range allowed by the ingress rules of the FSx for ONTAP security groups. Using 0.0.0.0/0 allows traffic from any address to reach the FSx for ONTAP ports; a narrower range, such as the CIDR of your VPC, restricts access accordingly.

Also, take note of the VPC ID, the two subnet IDs corresponding to the subnets you want your file system to be in, and all route table IDs associated with the ROSA VPC subnets. Enter those values in the command below.
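The IDs mentioned above can also be looked up from the command line. The following is a minimal sketch, assuming the AWS CLI is already configured for the account and Region that host the ROSA cluster. The function names are hypothetical helpers and are not part of the GitHub repository. Note that the route table lookup lists every route table in the VPC, so review the result before passing it to the template.

```shell
# Hypothetical helpers for collecting the values the CloudFormation command needs.
# Each function takes the ROSA VPC ID as its first argument.

# Print the VPC's primary CIDR block (one candidate value for FSxAllowedCIDR).
vpc_cidr() {
  aws ec2 describe-vpcs --vpc-ids "$1" \
    --query 'Vpcs[0].CidrBlock' --output text
}

# Print each subnet ID in the VPC together with its Availability Zone.
vpc_subnets() {
  aws ec2 describe-subnets --filters "Name=vpc-id,Values=$1" \
    --query 'Subnets[].[SubnetId,AvailabilityZone]' --output text
}

# Print the IDs of all route tables that belong to the VPC.
vpc_route_tables() {
  aws ec2 describe-route-tables --filters "Name=vpc-id,Values=$1" \
    --query 'RouteTables[].RouteTableId' --output text
}
```

For example, after loading these functions into your shell, running vpc_subnets with your VPC ID lists the subnets so you can pick two in different AZs.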

Run this command in a terminal to create the FSx for ONTAP file system:

cd rosa-fsx-netapp-ontap/fsx

aws cloudformation create-stack \

--stack-name ROSA-FSXONTAP \

--template-body file://./FSxONTAP.yaml \

--region <region-name> \

--parameters \

ParameterKey=Subnet1ID,ParameterValue=[subnet1_ID] \

ParameterKey=Subnet2ID,ParameterValue=[subnet2_ID] \

ParameterKey=myVpc,ParameterValue=[VPC_ID] \

ParameterKey=FSxONTAPRouteTable,ParameterValue=[routetable1_ID,routetable2_ID] \

ParameterKey=FileSystemName,ParameterValue=ROSA-myFSxONTAP \

ParameterKey=ThroughputCapacity,ParameterValue=256 \

ParameterKey=FSxAllowedCIDR,ParameterValue=[your_allowed_CIDR] \

ParameterKey=FsxAdminPassword,ParameterValue=[Define password] \

ParameterKey=SvmAdminPassword,ParameterValue=[Define password] \

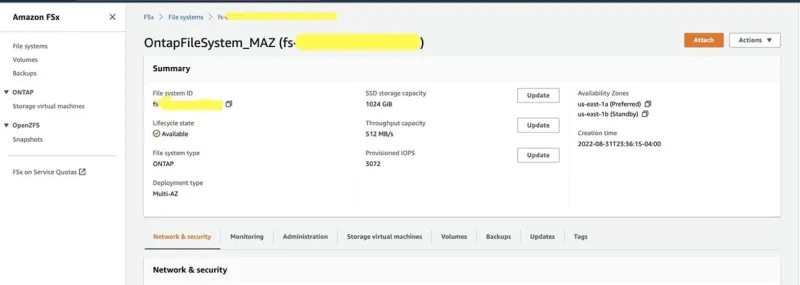

--capabilities CAPABILITY_NAMED_IAM Verify that your file system and storage virtual machine (SVM) has been created using the Amazon FSx console, shown below:

Figure 2 – Amazon FSx Console
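If you prefer to check from the terminal rather than the console, the sketch below waits for the CloudFormation stack to finish and then lists the ONTAP file systems. It assumes the stack name ROSA-FSXONTAP used above; the function names are hypothetical and not part of the repository.

```shell
# Block until the stack finishes creating, then print its final status.
# Arguments: stack name, AWS Region.
wait_for_stack() {
  aws cloudformation wait stack-create-complete \
    --stack-name "$1" --region "$2" &&
  aws cloudformation describe-stacks \
    --stack-name "$1" --region "$2" \
    --query 'Stacks[0].StackStatus' --output text
}

# List FSx for ONTAP file systems in a Region with their lifecycle state.
# Argument: AWS Region.
list_ontap_file_systems() {
  aws fsx describe-file-systems --region "$1" \
    --query "FileSystems[?FileSystemType=='ONTAP'].[FileSystemId,Lifecycle]" \
    --output text
}
```

Call wait_for_stack with ROSA-FSXONTAP and your Region, then list_ontap_file_systems with the same Region. A file system is ready for the next step once its lifecycle state is AVAILABLE.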

3. Install and configure the Trident CSI driver for the ROSA cluster

Use the following Helm command to install the Trident CSI driver in the "trident" namespace on the OpenShift cluster.

First, add the Astra Trident Helm repository:

helm repo add netapp-trident https://netapp.github.io/trident-helm-chart

Next, use helm install and specify a name for your deployment as in the following example, where 23.01.1 is the version of Astra Trident you are installing:

helm install <name> netapp-trident/trident-operator --version 23.01.1 --create-namespace --namespace trident

Verify the Trident driver installation:

$ helm status trident -n trident
NAME: trident
LAST DEPLOYED: Fri Dec 23 23:17:26 2022
NAMESPACE: trident
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Thank you for installing trident-operator, which deploys and manages NetApp's Trident CSI storage provisioner for Kubernetes.

Finally, verify the installation:

oc get pods -n trident
NAME                                 READY   STATUS    RESTARTS   AGE
trident-controller-cdb6ccbc5-hfp42   6/6     Running   0          85s
trident-node-linux-7gtbr             2/2     Running   0          84s
trident-node-linux-7tjdj             2/2     Running   0          84s
trident-node-linux-gpnb9             2/2     Running   0          85s
trident-node-linux-hwj67             2/2     Running   0          85s
trident-node-linux-kq9k2             2/2     Running   0          84s
trident-node-linux-kxsct             2/2     Running   0          84s
trident-node-linux-n86rc             2/2     Running   0          84s
trident-node-linux-p2j8g             2/2     Running   0          85s
trident-node-linux-t7vpv             2/2     Running   0          84s
trident-operator-74977bc66d-xxh8n    1/1     Running   0          110s

4. Create a secret for the SVM username and password in the ROSA cluster

Create a new file with the SVM username and admin password and save it as svm_secret.yaml. A sample svm_secret.yaml file is included in the fsx folder.

svm_secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: backend-fsx-ontap-nas-secret
  namespace: trident
type: Opaque
stringData:
  username: vsadmin
  password: step#2 password

The SVM username and its admin password were created in Step 2. You can retrieve the password from AWS Secrets Manager:

Figure 3 – AWS Secrets Manager Console

Add the secrets to the ROSA cluster with the following command:

oc apply -f svm_secret.yaml

To verify that the secrets have been added to the ROSA cluster, run the following command:

oc get secrets -n trident | grep backend-fsx-ontap-nas

You see the following output:

backend-fsx-ontap-nas-secret   Opaque   2   16d

5. Configure the Trident CSI backend to FSx for ONTAP NAS

The Trident back-end configuration tells Trident how to communicate with the storage system (in this case, FSx for ONTAP). You'll use the ontap-nas driver to provision storage volumes.

To get started, move into the fsx directory of your cloned Git repository. Open the file backend-ontap-nas.yaml. Replace the managementLIF and dataLIF in that file with the Management DNS name and NFS DNS name of the Amazon FSx SVM and svm with the SVM name, as shown in the screenshot below.

Note: ManagementLIF and DataLIF can be found in the Amazon FSx Console under "Storage virtual machines" as shown below (highlighted as "Management DNS name" and "NFS DNS name").

Figure 4 - Amazon FSx console - SVM page
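For orientation, a TridentBackendConfig for the ontap-nas driver generally has the shape sketched below. This is an illustration only and the backend-ontap-nas.yaml file in the repository remains the authoritative version. The example file name and the metadata name are hypothetical, and the values in angle brackets are placeholders for the details shown on the SVM page.

```shell
# Write an illustrative TridentBackendConfig to a separate example file so the
# repository's backend-ontap-nas.yaml is left untouched.
cat > backend-ontap-nas.example.yaml <<'EOF'
apiVersion: trident.netapp.io/v1
kind: TridentBackendConfig
metadata:
  name: example-backend-fsx        # hypothetical name
  namespace: trident
spec:
  version: 1
  backendName: fsx-ontap
  storageDriverName: ontap-nas
  managementLIF: <Management DNS name of the SVM>
  dataLIF: <NFS DNS name of the SVM>
  svm: <SVM name>
  credentials:
    name: backend-fsx-ontap-nas-secret   # the secret created in step 4
EOF
```

The credentials entry points at the Kubernetes secret rather than embedding the password, which keeps the SVM credentials out of the backend definition.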

Now execute the following commands in the terminal to configure the Trident backend using management DNS and SVM name configuration in the ROSA cluster.

cd fsx

oc apply -f backend-ontap-nas.yaml

Run the following command to verify backend configuration.

oc get tbc -n trident
NAME           BACKEND NAME   BACKEND UUID   PHASE   STATUS
backend-fsx…   fsx-ontap      586106c8…      Bound   Success

Now that Trident is configured, you can create a storage class to use the backend you've created. A storage class is a resource object that describes and classifies the type of storage you can request from the different storage types available to the Kubernetes cluster. Review the file storage-class-csi-nas.yaml in the fsx folder.
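As a rough guide to what such a storage class looks like, here is a minimal sketch. It is not a copy of the repository file: the example file name is hypothetical, the provisioner is the same csi.trident.netapp.io driver used later for snapshots, and the reclaim policy and binding mode simply mirror what this walkthrough reports for the trident-csi class.

```shell
# Write an illustrative StorageClass that routes claims to an ontap-nas backend.
cat > storage-class-csi-nas.example.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: trident-csi
provisioner: csi.trident.netapp.io
parameters:
  backendType: "ontap-nas"    # selects backends that use the ontap-nas driver
reclaimPolicy: Retain         # keep the backing volume when the PVC is deleted
volumeBindingMode: Immediate  # provision as soon as the PVC is created
EOF
```

With a Retain policy, deleting a PVC leaves the underlying FSx for ONTAP volume in place, so clean up volumes you no longer need.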

6. Create storage class in ROSA cluster

Now, create a storage class:

oc apply -f storage-class-csi-nas.yaml

Verify the status of the trident-csi storage class creation by running the following command:

oc get sc
NAME          PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE…
gp2           kubernetes.io/a…   Delete          WaitForFirstConsumer…
gp2-csi       ebs.csi.aws.com    Delete          WaitForFirstConsumer…
gp3           ebs.csi.aws.com    Delete          WaitForFirstConsumer…
gp3-csi       ebs.csi.aws.com    Delete          WaitForFirstConsumer…
trident-csi   csi.trident…       Retain          Immediate…

This completes the installation of the Trident CSI driver and its connectivity to the FSx for ONTAP file system. Now you can deploy a sample MySQL stateful application on ROSA using file volumes on FSx for ONTAP.

If you want to verify that applications can create a PV using the Trident operator, create a PVC using the pvc-trident.yaml file provided in the fsx folder.

Deploy a sample MySQL stateful application

In this section, you deploy a highly-available MySQL application onto the ROSA cluster using a Kubernetes StatefulSet and have the PersistentVolume provisioned by Trident.

A Kubernetes StatefulSet ensures that the original PersistentVolume (PV) is mounted on the same pod identity when the pod is rescheduled, which preserves data integrity and consistency. For more information about the MySQL application replication configuration, refer to the official MySQL documentation.

1. Create MySQL Secret

Before you begin with the MySQL application deployment, store the application's sensitive information, such as the username and password, in a Secret.

Create the mysql namespace and make it the current project, so that the commands below that omit -n run in it:

oc create namespace mysql

oc project mysql

Next, create a mysql secret file called mysql-secrets.yaml:

apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
type: Opaque
stringData:
  password: <SQL Password>

Apply the YAML to your cluster:

oc apply -f mysql-secrets.yaml

Verify that the secrets were created:

$ oc get secrets -n mysql | grep mysql
mysql-password opaque 1

2. Create application PVC

Next, you must create the PVC for your MySQL application. Save the following manifest in a file named mysql-pvc.yaml:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: mysql-volume
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi
  storageClassName: trident-csi

Apply the YAML to your cluster:

oc apply -f mysql-pvc.yaml

Confirm that the PVC exists:

$ oc get pvc
NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mysql-volume   Bound    pvc-26319553-f29b-4616-b2bb-c700c8416a6b   50Gi       RWO            trident-csi    7s

3. Run the MySQL application

To deploy your MySQL application on your ROSA cluster, save the following manifest to a file named mysql-deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-fsx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-fsx
  template:
    metadata:
      labels:
        app: mysql-fsx
    spec:
      containers:
        - image: mysql:5.7
          name: mysql
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-secret
                  key: password
          volumeMounts:
            - mountPath: /var/lib/mysql
              name: mysqlvol
      volumes:
        - name: mysqlvol
          persistentVolumeClaim:
            claimName: mysql-volume

Apply the YAML to your cluster:

oc apply -f mysql-deployment.yaml

Verify that the application has been deployed:

$ oc get pods -n mysql
NAME                         READY   STATUS    RESTARTS   AGE
mysql-fsx-6c4d9f6fcb-mzm82   1/1     Running   0          15d

4. Create a service for the MySQL application

A Kubernetes service defines a logical set of pods and a policy to access pods. StatefulSet currently requires a headless service to control the domain of its pods, directly reaching each pod with stable DNS entries. By specifying None for the clusterIP, you can create a headless service.
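To make the headless service concrete, here is a minimal sketch of such a manifest. It is an assumption about what mysql-service.yaml contains, not a copy of it: the service name and namespace are inferred from the DNS name mysql.mysql.svc.cluster.local used in this walkthrough, the selector matches the app: mysql-fsx label from the deployment, and the example file name is hypothetical.

```shell
# Write an illustrative headless Service for the MySQL pods.
cat > mysql-service.example.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: mysql
  namespace: mysql
spec:
  clusterIP: None          # headless: DNS resolves directly to the pod IPs
  selector:
    app: mysql-fsx         # matches the pod label set in the deployment
  ports:
    - port: 3306
      targetPort: 3306
EOF
```

Because no cluster IP is allocated, clients that look up the service name receive the pod addresses themselves rather than a load-balanced virtual IP.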

$ oc apply -f mysql-service.yaml

Verify the service:

$ oc get svc -n mysql
NAME    TYPE        CLUSTER-IP   EXTERNAL-IP   PORTS
mysql   ClusterIP   None         <none>        3306/TCP

5. Create a MySQL client

You need a MySQL client so you can access the MySQL applications that you just deployed. Review the content of mysql-client.yaml, and then deploy it:
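If you want a feel for what a client pod like this involves before opening the repository file, the sketch below shows one possible shape. It is an assumption, not the contents of mysql-client.yaml: the Alpine image is inferred from the apk command used later in this section, the namespace from the later oc exec command, and the example file name is hypothetical.

```shell
# Write an illustrative long-running client pod that you can oc exec into.
cat > mysql-client.example.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: mysql-client
  namespace: mysql
spec:
  containers:
    - name: mysql-client
      image: alpine      # assumed image; apk is Alpine's package manager
      # Keep the container alive so there is a shell to attach to.
      command: ["sh", "-c", "while true; do sleep 3600; done"]
EOF
```

The pod does nothing on its own. It only provides a place inside the cluster network from which the MySQL service name resolves.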

oc apply -f mysql-client.yaml

Verify the pod status:

$ oc get pods
NAME           READY   STATUS
mysql-client   1/1     Running

Log in to the MySQL client pod:

$ oc exec --stdin --tty mysql-client -- sh

Install the MySQL client tool:

$ apk add mysql-client

Within the mysql-client pod, connect to the MySQL server using the following command:

$ mysql -u root -p -h mysql.mysql.svc.cluster.local

Enter the password stored in mysql-secrets.yaml. Once connected, create a database on the MySQL server:

MySQL [(none)]> CREATE DATABASE erp;
MySQL [(none)]> CREATE TABLE erp.Persons ( ID int, FirstName varchar(255),Lastname varchar(255));
MySQL [(none)]> INSERT INTO erp.Persons (ID, FirstName, LastName) values (1234 , "John" , "Doe");
MySQL [(none)]> commit;
MySQL [(none)]> select * from erp.Persons;
+------+-----------+----------+
| ID   | FirstName | Lastname |
+------+-----------+----------+
| 1234 | John      | Doe      |
+------+-----------+----------+

Backup and Restore

Now create a Kubernetes VolumeSnapshotClass so you can snapshot the persistent volume claim (PVC) used for the MySQL deployment.

1. Create a snapshot

Save the manifest in a file called volume-snapshot-class.yaml:

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: fsx-snapclass
driver: csi.trident.netapp.io
deletionPolicy: Delete

Run the manifest:

$ oc create -f volume-snapshot-class.yaml
volumesnapshotclass.snapshot.storage.k8s.io/fsx-snapclass created

Next, create a snapshot of the existing PVC by creating a VolumeSnapshot to take a point-in-time copy of your MySQL data. This creates an FSx snapshot that takes almost no space in the file system backend. Save this manifest in a file called volume-snapshot.yaml:

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: mysql-volume-snap-01
spec:
  volumeSnapshotClassName: fsx-snapclass
  source:
    persistentVolumeClaimName: mysql-volume

Apply it:

$ oc create -f volume-snapshot.yaml
volumesnapshot.snapshot.storage.k8s.io/mysql-volume-snap-01 created

And confirm:

$ oc get volumesnapshot
NAME                   READYTOUSE   SOURCEPVC      SOURCESNAPSHOTCONTENT   RESTORESIZE   SNAPSHOTCLASS   SNAPSHOTCONTENT                                    CREATIONTIME   AGE
mysql-volume-snap-01   true         mysql-volume                           50Gi          fsx-snapclass   snapcontent-bce1f186-7786-4f4a-9f3a-e8bf90b7c126   13s            14s

2. Delete the database erp

Now you can delete the database erp. Log in to the container console using a new terminal:

$ oc exec --stdin --tty mysql-client -n mysql -- sh

mysql -u root -p -h mysql.mysql.svc.cluster.local

Delete the database erp:

MySQL [(none)]> DROP DATABASE erp;
Query OK, 1 row affected

3. Restore the snapshot

To restore the volume to its previous state, you must create a new PVC based on the data in the snapshot you took. To do this, save the following manifest in a file named pvc-clone.yaml:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-volume-clone
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: trident-csi
  resources:
    requests:
      storage: 50Gi
  dataSource:
    name: mysql-volume-snap-01
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io

Apply it:

$ oc create -f pvc-clone.yaml
persistentvolumeclaim/mysql-volume-clone created

And confirm:

$ oc get pvc
NAME                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mysql-volume         Bound    pvc-a3f98de0-06fe-4036-9a22-0d6bd697781a   50Gi       RWO            trident-csi    40m
mysql-volume-clone   Bound    pvc-9784d513-8d45-4996-abe3-7372cd879151   50Gi       RWO            trident-csi    36s

4. Redeploy the database

Now you can redeploy your MySQL application with the restored volume. Save the following manifest to a file named mysql-deployment-restore.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-fsx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-fsx
  template:
    metadata:
      labels:
        app: mysql-fsx
    spec:
      containers:
        - image: mysql:5.7
          name: mysql
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-secret
                  key: password
          volumeMounts:
            - mountPath: /var/lib/mysql
              name: mysqlvol
      volumes:
        - name: mysqlvol
          persistentVolumeClaim:
            claimName: mysql-volume-clone

Apply the YAML to your cluster:

$ oc apply -f mysql-deployment-restore.yaml

Verify the application has been deployed with the following command:

oc get pods -n mysql
NAME                         READY   STATUS    RESTARTS   AGE
mysql-fsx-6c4d9f6fcb-mzm82   1/1     Running   0          15d

To validate that the database has been restored as expected, go back to the container console and show the existing databases:

MySQL [(none)]> SHOW DATABASES;
+--------------------+
| Database           |
+--------------------+
| erp                |
+--------------------+

MySQL [(none)]> select * from erp.Persons;
+------+-----------+----------+
| ID   | FirstName | Lastname |
+------+-----------+----------+
| 1234 | John      | Doe      |
+------+-----------+----------+

Summary

In this article, you've seen how quickly and easily you can restore a stateful application from a snapshot. With its sub-millisecond latencies and multi-AZ availability, FSx for ONTAP NAS is a strong storage option for your containerized applications running in ROSA on AWS.

For more information on this solution, refer to the NetApp Trident documentation. If you would like to improve upon the solution provided in this post, follow the instructions in the GitHub repository.

About the authors

Mayur Shetty is a Principal Solution Architect with Red Hat’s Global Partners and Alliances (GPA) organization, working closely with cloud and system partners. He has been with Red Hat for more than five years and was part of the OpenStack Tiger Team.

George James came to Red Hat with more than 20 years of experience in IT for financial services companies. He specializes in network and Windows automation with Ansible.
