Integrating AI into applications requires an MLOps (Machine Learning Operations) ready platform that often needs to work on premises as well as in the cloud. Businesses looking to put AI into production will want to understand this methodology in detail.

Operationalizing AI is no small feat. In a recent survey sponsored by Red Hat with Gartner Peer Community, 50% of respondents said that it takes them 7-12 months on average to get a model deployed. That’s a long time in today’s generative AI space.

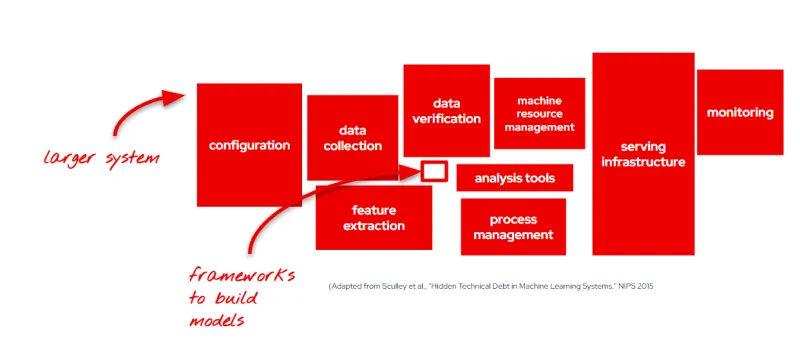

Research supports these findings. In Google’s seminal paper from 2015, Sculley et al. argue that “Only a small fraction of real-world ML systems is composed of the ML code, as shown by the small white (edit) box in the middle.”

Maintaining a model is costly. In many environments, data scientists spend their time handling issues outside the core machine learning work, or outside their area of expertise, and the time they spend managing the system increases the time it takes to get models up and running. Tasks such as running a container or environment belong to Developer Operations (DevOps). Technical debt accumulates when a system is designed so that data scientists must create their own DevOps methodology instead of inheriting it from the company.

Utilizing a hybrid application platform extended with AI tooling lets you serve MLOps-ready models. The models inherit the platform’s DevOps methodology, which allows them to feed easily into an application. They are also portable: they can be trained on data on a customer’s premises and then moved to a cloud platform such as Google Cloud.

Let’s explore how to train a model on-prem and register it on Vertex AI in Google Cloud.

Red Hat OpenShift on Google Cloud

Red Hat OpenShift is the leading enterprise application platform for organizations that want to build, deploy and run cloud-native applications from the hybrid cloud to the edge. It provides full-stack automated operations, brings security to the entire application development process, and offers a consistent experience across all environments along with self-service provisioning for developers.

Google Cloud is one of the leading cloud vendors, enabling you to quickly and easily get access to cloud computing. It has especially homed in on AI workloads with its Vertex AI offering, which makes it easy to build, train, manage, and deploy AI models in the cloud.

Red Hat OpenShift is available on Google Cloud in multiple ways: self-managed with a bring-your-own-subscription model, or purchased directly from the Google Cloud Marketplace, where the spend counts against your Google Cloud consumption commitments.

Customers can also use the managed cloud service offering, Red Hat OpenShift Dedicated, which runs on Google Cloud and is available on console.redhat.com and soon from the Google Cloud Marketplace. This lets them focus more on the application lifecycle while a team of Site Reliability Engineers maintains the application platform.

Note that all the different Red Hat OpenShift flavors offer a very similar user experience and functionality, whether running on Google Cloud or in the datacenter.

Have a look at the following demo for more details on how to provision a Red Hat OpenShift cluster running on Google Cloud:

The benefits of a Hybrid Strategy

Imagine having petabytes of valuable data that could be used to greatly improve customer experience, for example through a recommendations engine for shoppers.

The challenge? Your data cannot leave on-prem for any reason.

What about building new image recognition software for healthcare using patient data?

You can probably see where this is going: training models often means running the training where the data is, to make sure no sensitive data leaks outside your premises. This is especially true when the data is challenging to mask or anonymize.

Not wanting to move the data for compliance, cost, or other reasons causes an effect called data gravity, where operations are run near the data.

Many enterprises are staying on-premises or moving back to it, or are onshoring or nearshoring, to de-risk compliance management, minimize cloud egress costs, and maximize the value potential of their data.

However, for many, on-premises infrastructure alone does not provide the optimal solution. Cloud computing and storage offer numerous advantages, such as access to virtually limitless resources and the ability to easily expose any application hosted there.

This is where a hybrid cloud strategy comes into play. By utilizing tools and solutions that function consistently regardless of their operating environment, you can distribute your MLOps (Machine Learning Operations) workflow and run specific functions where they fit best.

For instance, keep raw data on-premises to comply with data policies, then anonymize the data before bursting a training session to the cloud to accelerate it with additional resources. Alternatively, handle both data processing and training on-premises and serve the model in the cloud, simplifying integration into cloud-based applications.
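As a rough illustration of the first option, the sketch below prepares records before they leave the premises. It is a minimal example under stated assumptions, not a compliance recipe: the column names (`name`, `email`, `customer_id`) and the helper names are hypothetical, and a keyed hash is pseudonymization, which is weaker than full anonymization. Whether it satisfies a given data policy is a question for your compliance team.

```python
import hashlib
import hmac

# Hypothetical column names; adapt them to your own schema.
DIRECT_IDENTIFIERS = {"name", "email"}   # dropped entirely
PSEUDONYMIZED_KEYS = {"customer_id"}     # replaced by a keyed hash


def pseudonymize(value: str, secret: bytes) -> str:
    """Return a stable keyed hash so records can still be joined."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_cloud(record: dict, secret: bytes) -> dict:
    """Drop direct identifiers and pseudonymize join keys in one record."""
    cleaned = {}
    for key, value in record.items():
        if key in DIRECT_IDENTIFIERS:
            continue  # never leaves the premises
        if key in PSEUDONYMIZED_KEYS:
            cleaned[key] = pseudonymize(str(value), secret)
        else:
            cleaned[key] = value
    return cleaned
```

The secret stays on-premises, so the cloud side sees consistent but opaque keys and cannot reverse them by hashing guessed identifiers.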

A Hybrid Architecture: Google Cloud and Red Hat OpenShift Integration

Combining Red Hat OpenShift and Google Cloud enables a hybrid strategy. This approach capitalizes on Red Hat OpenShift’s ability to provide consistent behaviour in both environments, and Google Cloud’s advanced cloud computing and AI capabilities.

One key component of this architecture is OpenShift AI, a modular MLOps platform that offers AI tooling as well as flexible integration options with various independent software vendors (ISVs) and open source components.

The Vertex AI Model Registry is a central repository for managing the lifecycle of ML models. It provides an overview from which you can organize and track models and train new versions. To deploy a model version, assign it to an endpoint directly from the registry, or use aliases to select which version is deployed to an endpoint.
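To make the alias idea concrete, here is a minimal sketch using the Vertex AI SDK for Python (`google-cloud-aiplatform`). The helper names, the `champion` alias, and the default machine type are assumptions for illustration only. The SDK accepts a model reference in `model@version-or-alias` form, and `Model.deploy` creates a new endpoint when none is passed.

```python
def versioned_model_name(model_id: str, alias_or_version: str) -> str:
    """Build a 'model@alias' reference for a registered model."""
    if not model_id or "@" in model_id:
        raise ValueError("model_id must be non-empty and carry no version suffix")
    return f"{model_id}@{alias_or_version}"


def deploy_by_alias(project: str, location: str, model_id: str,
                    alias: str, machine_type: str = "n1-standard-4"):
    """Deploy whichever version currently holds the alias (hypothetical helper)."""
    # Imported here so the helper above works without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)
    model = aiplatform.Model(model_name=versioned_model_name(model_id, alias))
    # With no endpoint argument, deploy() creates a new endpoint.
    return model.deploy(machine_type=machine_type)
```

A call such as `deploy_by_alias("my-project", "us-central1", "456", "champion")` (all values hypothetical) would deploy whichever version carries that alias at the time of the call, so promoting a new version becomes a matter of moving the alias.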

Combined, the architecture looks like this:

In this example workflow, we gather and process the data on our on-premises servers. We then experiment with that data and develop a model architecture. Finally, we train that model on-premises.

If we just need to retrain an existing model, we could skip the “Develop model” stage.

When the model is trained, we push it to the model registry on Google Cloud, where it is easy to access for testing and serving.
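One way to do the push is with the Vertex AI SDK for Python. The sketch below is an assumption about how this step could look, not the exact procedure used in this example: the helper names are hypothetical, the serving container image is whatever image suits your framework, and the trained artifacts are assumed to have already been copied from the on-premises cluster to a Cloud Storage bucket, since `artifact_uri` expects a `gs://` path.

```python
from typing import Optional, Sequence


def build_upload_args(display_name: str, artifact_uri: str, serving_image: str,
                      parent_model: Optional[str] = None,
                      aliases: Optional[Sequence[str]] = None) -> dict:
    """Assemble keyword arguments for aiplatform.Model.upload()."""
    if not artifact_uri.startswith("gs://"):
        raise ValueError("artifact_uri must point to a Cloud Storage path")
    args = {
        "display_name": display_name,
        "artifact_uri": artifact_uri,
        "serving_container_image_uri": serving_image,
    }
    if parent_model:
        # Registers the upload as a new version of an existing model.
        args["parent_model"] = parent_model
    if aliases:
        args["version_aliases"] = list(aliases)
    return args


def register_model(project: str, location: str, upload_args: dict):
    """Upload the model to the Vertex AI Model Registry."""
    # Imported here so build_upload_args() works without the SDK installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)
    return aiplatform.Model.upload(**upload_args)
```

Passing `parent_model` on a retraining run keeps every retrained version under the same registry entry instead of creating a new model each time.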

While the model is serving, we gather metrics to make sure it performs well in production. We also collect data and push it back to our on-premises data lake.
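The workflow described so far can be summarized as a small placement plan. This is only an illustrative model of the stages and where each one runs in this example; the `Stage` type and the stage names are hypothetical and do not correspond to any OpenShift AI or Vertex AI API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Stage:
    name: str
    location: str  # "on-prem" or "cloud"


WORKFLOW = [
    Stage("gather and process data", "on-prem"),
    Stage("develop model", "on-prem"),
    Stage("train model", "on-prem"),
    Stage("register model", "cloud"),
    Stage("serve model", "cloud"),
    Stage("monitor and collect data", "cloud"),
]


def plan(retrain_only: bool = False) -> List[Stage]:
    """Return the stages to run; retraining skips model development."""
    return [s for s in WORKFLOW
            if not (retrain_only and s.name == "develop model")]
```

Calling `plan(retrain_only=True)` returns the same sequence without the development stage, which matches the retraining shortcut mentioned earlier.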

Because Red Hat OpenShift is the underlying application platform for both the on-premises and cloud clusters, we can transition between them smoothly, as the environment and tools will be familiar to us.

What’s next?

While what we presented above is a simple example of what a hybrid architecture can look like, it can expand much further. A Hybrid MLOps platform has the capability to span across multiple cloud environments or be deployed to various edge locations, all while maintaining centralized management of the deployed models.

The MLOps workflow can be further enhanced to incorporate automated pipelines that retrain the models in response to data drift or at specific intervals. The workflow can also integrate with a multitude of additional components that can contribute to the overall model development and deployment lifecycle.
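A retraining trigger of this kind could be sketched as follows. This is one possible approach under stated assumptions, not a description of a built-in feature: it measures drift on a single numeric feature with the Population Stability Index (PSI), and the threshold of 0.2 and the 30-day maximum age are common rules of thumb chosen here for illustration.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def fractions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(psi_value: float, days_since_training: int,
                   psi_threshold: float = 0.2, max_age_days: int = 30) -> bool:
    """Retrain when drift is high or the model is older than the interval."""
    return psi_value > psi_threshold or days_since_training >= max_age_days
```

In a pipeline, a scheduled job could compute `psi` on the data collected from the serving environment and start the on-premises training stage whenever `should_retrain` returns true.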

No matter which direction you choose to take, a hybrid cloud MLOps architecture serves as a robust foundation for expanding AI into production applications and business-critical data.

Visit red.ht/datascience to get started with your hybrid MLOps system.

About the authors

Seasoned AI/ML practitioner focused on AI platforms, customer collaboration, and shaping product direction with real-world insight. With 10+ years building models and platforms—and experience founding an ML startup—he helps teams stand up AI platforms that shorten the path from idea to impact and power intelligent applications.
