These are exciting times for AI. Enterprises are blending AI capabilities with enterprise data to deliver better outcomes for employees, customers, and partners. But as organizations weave AI deeper into their systems, that data and infrastructure also become more attractive targets for cybercriminals and other adversaries.

Generative AI (gen AI), in particular, introduces new risks by significantly expanding an organization’s attack surface. That means enterprises must carefully evaluate potential threats, vulnerabilities, and the risks they bring to business operations. Deploying AI with a strong security posture, in compliance with regulations, and in a more trustworthy way requires more than patchwork defenses; it demands a strategic shift. Security can’t be an afterthought. It must be built into the entire AI strategy.

AI security vs. AI safety

AI security and AI safety are related but distinct concepts. Both are necessary to reduce risk, but they address different challenges.

AI security

AI security focuses on protecting the confidentiality, integrity, and availability of AI systems. The goal is to prevent malicious actors from attacking or manipulating those systems. Threats can arise from:

- Exploiting vulnerabilities in software components

- Misconfigured systems or broken authentication allowing unauthorized access to sensitive enterprise data

- Prompt injection or model “jailbreaking”

In other words, AI security looks much like traditional IT security, but applied to new types of systems.

AI safety

AI safety helps maintain the intended behavior of AI systems, keeping them aligned with company policies, regulations, and ethical standards. The risks here are not about system compromise, but about what the system produces. Safety issues include:

- Harmful or toxic language

- Bias or discrimination in responses

- Hallucinations (plausible-sounding but false answers)

- Dangerous or misleading recommendations

A safety lapse can erode trust, cause reputational damage, and even create legal or ethical liabilities.

Examples of security and safety risks

| AI security | AI safety |
| --- | --- |
| Memory safety issues: Inference software and other AI system components may be vulnerable to traditional memory safety issues. For example, a heap overflow in an image manipulation library may allow an attacker to manipulate the classification done by a model. | Bias: The model’s responses discriminate against a social group based on race, religion, gender, or other personal characteristics. |
| Insecure configurations: The MCP server of a tool is exposed to the Internet without authentication, and attackers are able to access sensitive information through it. | Hallucination: The model invents responses that are not based on facts. |
| Broken authentication or authorization: It’s possible to access the MCP server functionality by using the user token instead of a specific, limited token for the MCP client. | Harmful responses: The model provides responses that can cause damage to users, for example by giving unsafe medical advice. |
| Malware: A supply chain attack in which malicious code is added to a trusted open source model. | Toxic language: The model’s responses contain offensive or abusive content. |
| Jailbreaking: Bypassing an LLM’s built-in safety controls to extract restricted content. | |

The new risk frontier: Demystifying “safety”

While foundational security is critical, large language models (LLMs) introduce an additional risk domain: safety. Unlike the traditional use of the term, in AI contexts safety means trustworthiness, fairness, and ethical alignment.

- A security failure might expose sensitive data.

- A safety failure might produce biased hiring recommendations, toxic responses in a customer chatbot, or other outputs that undermine trust.

Why does this happen? LLMs are trained to predict the “next word” based on training data, not to distinguish between truth and falsehood. They also try to answer every question, even ones they weren’t trained for. Add in their non-deterministic nature (responses can vary even for the same prompt), and you get unpredictable results.
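To make the "next word" point concrete, here is a minimal sketch of temperature-based sampling, one common way a next token is chosen from a model's scores. Everything in it is an illustrative assumption: the `TOY_LOGITS` scores are invented, and `sample_next_token` and `sample_many` are hypothetical helpers, not part of any real inference library.

```python
import math
import random

# Hypothetical scores for the word following "The capital of Australia is".
# A real model scores tens of thousands of tokens; these numbers are made up.
TOY_LOGITS = {"Canberra": 2.0, "Sydney": 1.6, "Melbourne": 0.5}


def sample_next_token(logits, temperature=1.0, rng=None):
    """Pick one token using softmax weights scaled by temperature."""
    rng = rng or random
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


def sample_many(n, temperature, seed):
    """Draw n next-token samples for the same prompt."""
    rng = random.Random(seed)
    return [sample_next_token(TOY_LOGITS, temperature, rng) for _ in range(n)]


if __name__ == "__main__":
    # The same "prompt" yields different continuations, some of them wrong.
    print(sample_many(10, temperature=1.0, seed=0))
    # A very low temperature makes the highest-scoring token dominate.
    print(sample_many(10, temperature=0.01, seed=0))
```

The sampler never checks whether "Sydney" is true; it only follows the scores. That is the gap between plausibility and correctness that safety evaluation has to cover.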

This unpredictability creates governance challenges. Even with safe training data, a model can still generate harmful content. Fine-tuning can also weaken built-in safeguards, sometimes unintentionally.

Managing these risks requires a new toolset:

- Pre-training and alignment focused on safety

- Continuous evaluation and benchmarking of safety outcomes

- “LLM evaluation harnesses” that measure the likelihood of harmful outputs and run as routinely as performance testing
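As a rough illustration of the evaluation idea in the list above, the sketch below samples each prompt several times and reports the fraction of responses flagged as harmful. It is a sketch under stated assumptions, not a real harness: `toy_generate` stands in for a model call, `toy_is_harmful` stands in for a proper safety classifier, and the 1% threshold in `passes_gate` is an arbitrary example value.

```python
def harmful_output_rate(generate, is_harmful, prompts, samples_per_prompt=5):
    """Fraction of sampled responses that the checker flags as harmful."""
    total = 0
    harmful = 0
    for prompt in prompts:
        # Sample repeatedly because responses can vary for the same prompt.
        for _ in range(samples_per_prompt):
            total += 1
            if is_harmful(generate(prompt)):
                harmful += 1
    return harmful / total if total else 0.0


# Hypothetical stand-in for a model endpoint (deterministic for clarity).
def toy_generate(prompt):
    return f"Here is how to {prompt}"


# Hypothetical stand-in for a safety classifier: a naive phrase blocklist.
BLOCKLIST = ("disable the smoke detector",)


def toy_is_harmful(response):
    return any(phrase in response.lower() for phrase in BLOCKLIST)


def passes_gate(rate, threshold=0.01):
    """Release gate: fail when the harmful rate exceeds the threshold."""
    return rate <= threshold


if __name__ == "__main__":
    prompts = ["bake bread", "disable the smoke detector"]
    rate = harmful_output_rate(toy_generate, toy_is_harmful, prompts, 2)
    print(f"harmful rate: {rate:.2f}, gate passed: {passes_gate(rate)}")
```

Treating the rate as a pass/fail gate, the way a latency budget would be treated, is one way to make safety checks as routine as performance tests.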

The key is recognizing that a harmful output is usually a safety issue, not a traditional security flaw.

Should the AI model get all the attention?

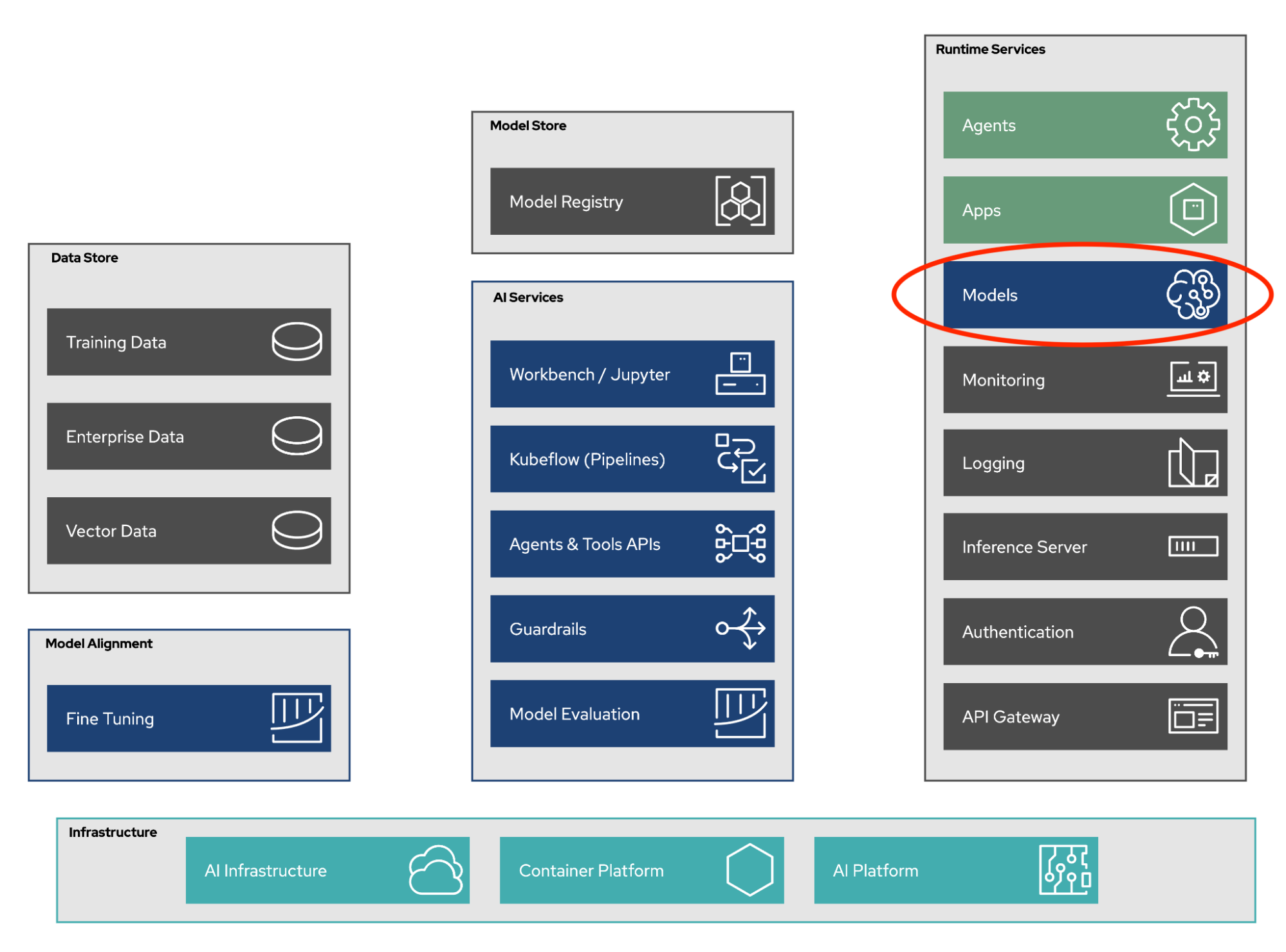

AI models often attract the spotlight, but they’re only one component of a broader AI system. In fact, non-AI components (servers, APIs, orchestration layers) often make up the bulk of the system and carry their own risks.

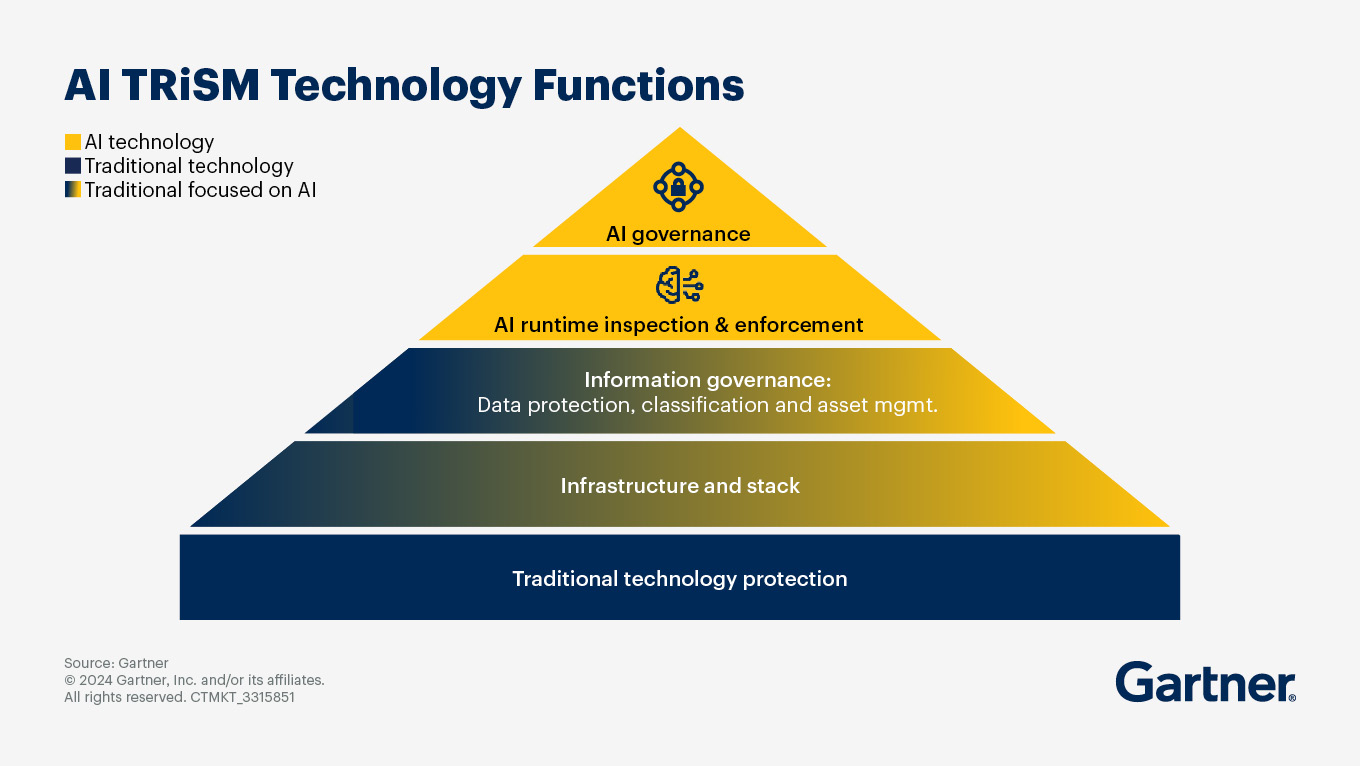

The right approach is a defense-in-depth strategy—strengthen existing enterprise security practices, then layer on additional protections for the novel risks that generative AI introduces.

Unifying AI security with enterprise cybersecurity

AI security isn’t about reinventing the wheel. The same principles that underpin traditional enterprise security also apply here. In fact, your existing investments in cybersecurity are the foundation of your AI security posture.

Security is all about managing risk—reducing the chance of a threat materializing and minimizing its impact if it does. That means:

- Risk modeling to identify and prioritize AI-related risks

- Penetration testing to measure robustness

- Continuous monitoring to detect and respond to incidents
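One simple way to prioritize risks is to score each one by likelihood and impact, the two factors named above. The sketch below assumes a 1 to 5 scale for both and multiplies them, which is a common convention rather than anything this article prescribes. The `Risk` class, the `prioritize` helper, and every rating in `REGISTER` are hypothetical and would come from your own assessment in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self):
        # Likelihood x impact, a common but simplistic scoring convention.
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Illustrative entries only; the ratings are invented for this example.
REGISTER = [
    Risk("Heap overflow in image library", likelihood=2, impact=4),
    Risk("MCP server exposed without authentication", likelihood=3, impact=5),
    Risk("Prompt injection against customer chatbot", likelihood=4, impact=3),
]

if __name__ == "__main__":
    for risk in prioritize(REGISTER):
        print(f"{risk.score:>2}  {risk.name}")
```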

The classic Confidentiality, Integrity, Availability (CIA) triad still applies, whether the system is AI-powered or not. To strengthen AI security, enterprises should extend proven practices, including:

- Secure software development lifecycle (SDLC): Building in security at every phase. Frameworks such as the Red Hat SDLC mean that security is part of the entire development process, not something that's added later.

- Secure supply chain: Vetting every model, library, and container to guard against vulnerabilities or malicious code.

- SIEM (Security Information and Event Management) and SOAR (Security Orchestration, Automation, and Response) tools: Monitoring inputs, system stress, and possible data exfiltration.
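One basic building block of supply chain vetting is checking that a downloaded model file matches a digest published by a source you trust. The sketch below uses only the Python standard library. The file name and the expected digest in the usage note are placeholders, and a digest check alone does not prove a model is free of malicious content; it only shows the file is the one the publisher described.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Return True only if the file's SHA-256 matches the pinned digest."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the hex strings.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A deployment step might call `verify_artifact("model.safetensors", pinned_digest)` and refuse to load the model when it returns `False`, where `pinned_digest` is a value recorded when the model was first reviewed.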

Enterprises should also integrate AI risk into existing governance frameworks, such as an Information Security Management System (ISMS), rather than creating standalone AI programs. By treating AI security as an extension of your existing posture, you can leverage the same skills, tools, and processes to cover most risks more effectively.

Moving forward

Long story short, enterprises should take a holistic approach to AI security and safety. Frameworks highlight the need for integrated risk management, combining security, governance, and compliance with AI-specific safeguards.

The bottom line is that AI brings enormous opportunities, but also unique risks. By unifying AI security with their established cybersecurity practices and layering in safety measures, enterprises can build more trustworthy systems that deliver business value.

Conclusion

AI security and AI safety are two sides of the same coin: one focuses on protecting systems from attack, the other on strengthening responsible and trustworthy behavior. Enterprises that treat AI security and safety as integral to their overall risk management strategy will be best positioned to unlock AI’s business value while maintaining the trust of customers, employees, and regulators.

Navigate your AI journey with Red Hat. Contact Red Hat AI Consulting Services for AI security and safety discussions for your business.

Please reach out to us with any questions or comments.

Learn more

- What is AI Security?

- Red Hat OpenShift AI for managing entire AI/ML lifecycle

- Gartner®, AI Trust and AI Risk: Tackling Trust, Risk and Security in AI Models, Avivah Litan, 24 December 2024

- Red Hat Product Security

About the authors

Ishu Verma is a Technical Evangelist at Red Hat focused on emerging technologies like edge computing, IoT, and AI/ML. He and fellow open source hackers work on building solutions with next-gen open source technologies. Before joining Red Hat in 2015, Verma worked at Intel on IoT Gateways and on building end-to-end IoT solutions with partners. He has been a speaker and panelist at IoT World Congress, DevConf, Embedded Linux Forum, Red Hat Summit, and other on-site and virtual forums. He lives in the Valley of the Sun, Arizona.

Florencio has had cybersecurity in his veins since he was a kid. He started in cybersecurity around 1998 (time flies!), first as a hobby and then professionally. His first job required him to develop a host-based intrusion detection system in Python for Linux for a research group at his university. Between 2008 and 2015 he had his own startup, which offered cybersecurity consulting services. He was CISO and head of security of a big retail company in Spain (more than 100k RHEL devices, including POS systems). Since 2020, he has worked at Red Hat as a Product Security Engineer and Architect.
