Network engineering for the high availability of application services is nothing new. However, as Kubernetes-based environments become more widespread, companies are beginning to look for innovative deployments across multiple types of infrastructure (on-premises, AWS, Azure, IBM Cloud). That raises the question of what successful application delivery looks like for those deployments.

In our series on Davie Street Enterprises (DSE), we've used a fictitious company to illustrate how organizations have implemented protective measures against ransomware, transformed applications, and more.

In this post, we’ll cover how Gloria Fenderson, DSE’s Senior Manager of Network Engineering, plans to use Red Hat OpenShift and the NGINX Ingress Controller to deliver applications on a cluster that spans multiple types of underlying infrastructure. Both NGINX and Red Hat solutions are designed to support container-based deployments across multiple clouds. Let’s look at how that works in practice.

Application Delivery Strategy Across Multiple Clouds

The Value of the Hybrid Cloud

DSE will be migrating to a hybrid cloud environment using Red Hat OpenShift. Red Hat OpenShift uses Red Hat Enterprise Linux CoreOS as its node operating system, so the cluster itself can centrally manage OpenShift deployments across different infrastructures. It doesn’t matter whether the cluster runs in DSE’s on-premises environment, in a public cloud, or in both.

This approach provides the benefits of cloud computing while still leveraging DSE’s existing investment in its on-premises infrastructure. When demand increases, DSE will be able to “burst” into the cloud and scale up applications to match demand. It can then dynamically scale back down to reduce costs and improve efficiency. Red Hat OpenShift will also provide consistency in how developers and administrators interact with the containerized environment. They can trust that an application built to run on OpenShift will run the same way anywhere.
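The post doesn’t say how DSE will automate this scaling. In Kubernetes, and therefore OpenShift, a common mechanism is the HorizontalPodAutoscaler (HPA). Its core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue).

The Python sketch below applies that rule with the HPA’s default 10% tolerance. It makes two simplifications:

- The min/max bounds are hypothetical parameters chosen for illustration.
- Real HPA behavior also includes stabilization windows, which are not modeled here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule (simplified sketch).

    min_replicas and max_replicas are hypothetical bounds for illustration.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band the HPA leaves the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas
    else:
        # Scale proportionally to load, rounding up so capacity is never short.
        proposed = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))
```

For example, two replicas averaging 90% CPU against a 50% target give `desired_replicas(2, 90, 50) == 4`. The same function returns 2 once four replicas drop to 20%, which is the “scale back down” half of bursting.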

Fenderson realizes that getting traffic into and out of the environment (ingress and egress traffic) across different nodes will be critical to a successful deployment. Application delivery in this new environment is her main concern. She wants to keep using NGINX for it, as her team has done in the past, and she knows that the NGINX Ingress Controller is certified on Red Hat OpenShift for handling application delivery in OpenShift clusters.

From a networking perspective, Fenderson is looking for a solution that allows her team to see the health of individual services running across the different infrastructures, scale those services up and down as needed, and do all this consistently and reliably across the different types of infrastructure. To accomplish this, Red Hat and NGINX have combined forces to support the NGINX Ingress Operator for OpenShift, which helps automate the installation and maintenance of the NGINX Ingress Controller.

Utilizing the latest open source technologies is also a big win for Fenderson, who appreciates the dedication both Red Hat and NGINX have to open source software. With that in mind, she feels confident in telling her team to go ahead with the initial deployment and to run a test application.

Installing the Operator

The certified NGINX Ingress Operator for OpenShift is easy to install and automates the installation and deployment of the NGINX Ingress Controller. Red Hat OpenShift also helps you manage all of your operators through the OperatorHub catalog and Operator Lifecycle Manager (OLM).

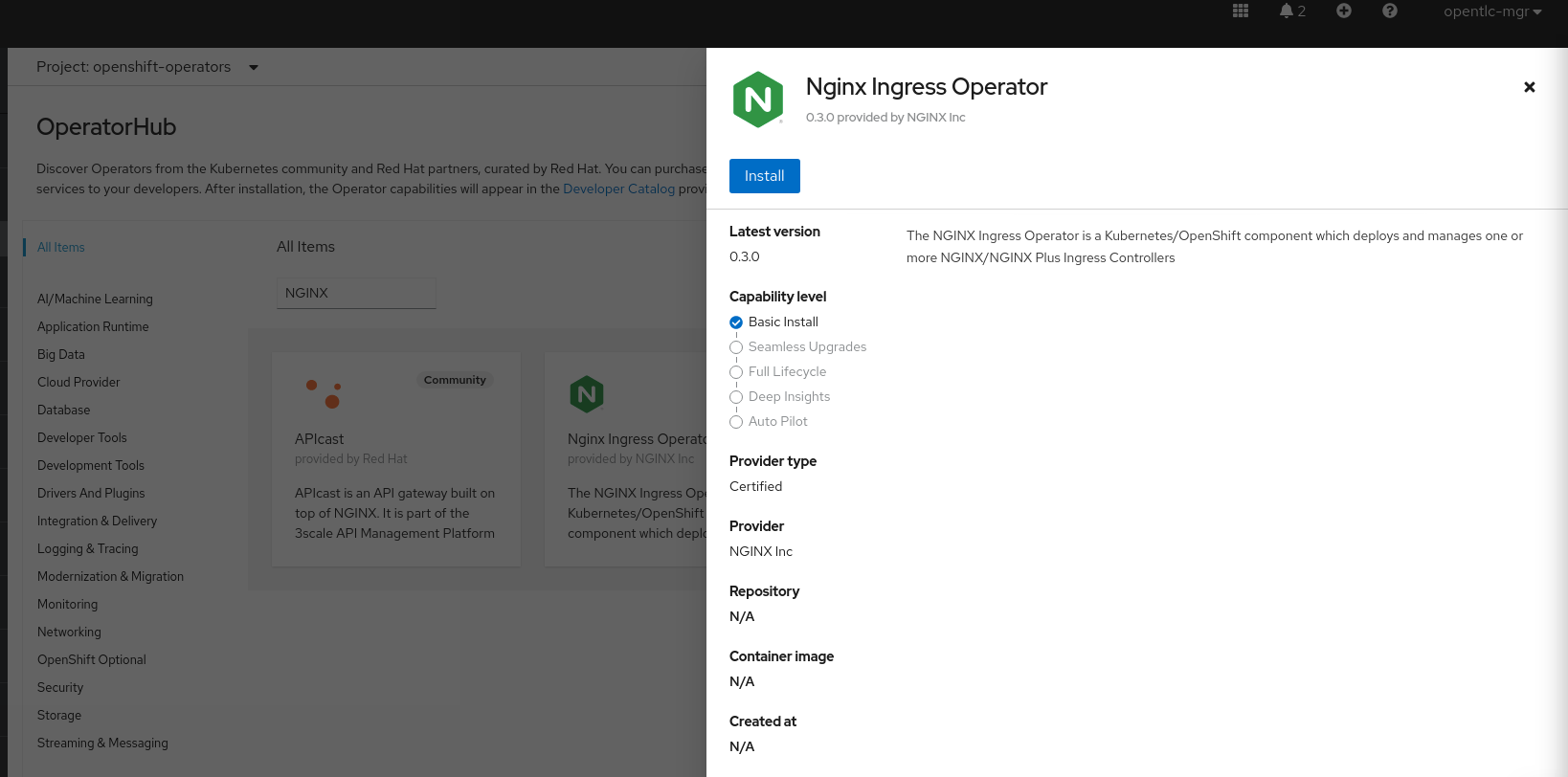

First, Fenderson's team installs the certified NGINX Ingress Operator from OperatorHub in the OpenShift console. OperatorHub lists the certified operators that Red Hat jointly supports with its partners, so you know the software you are using has Red Hat's support behind it. Below is an example of what installing an operator from OperatorHub looks like.

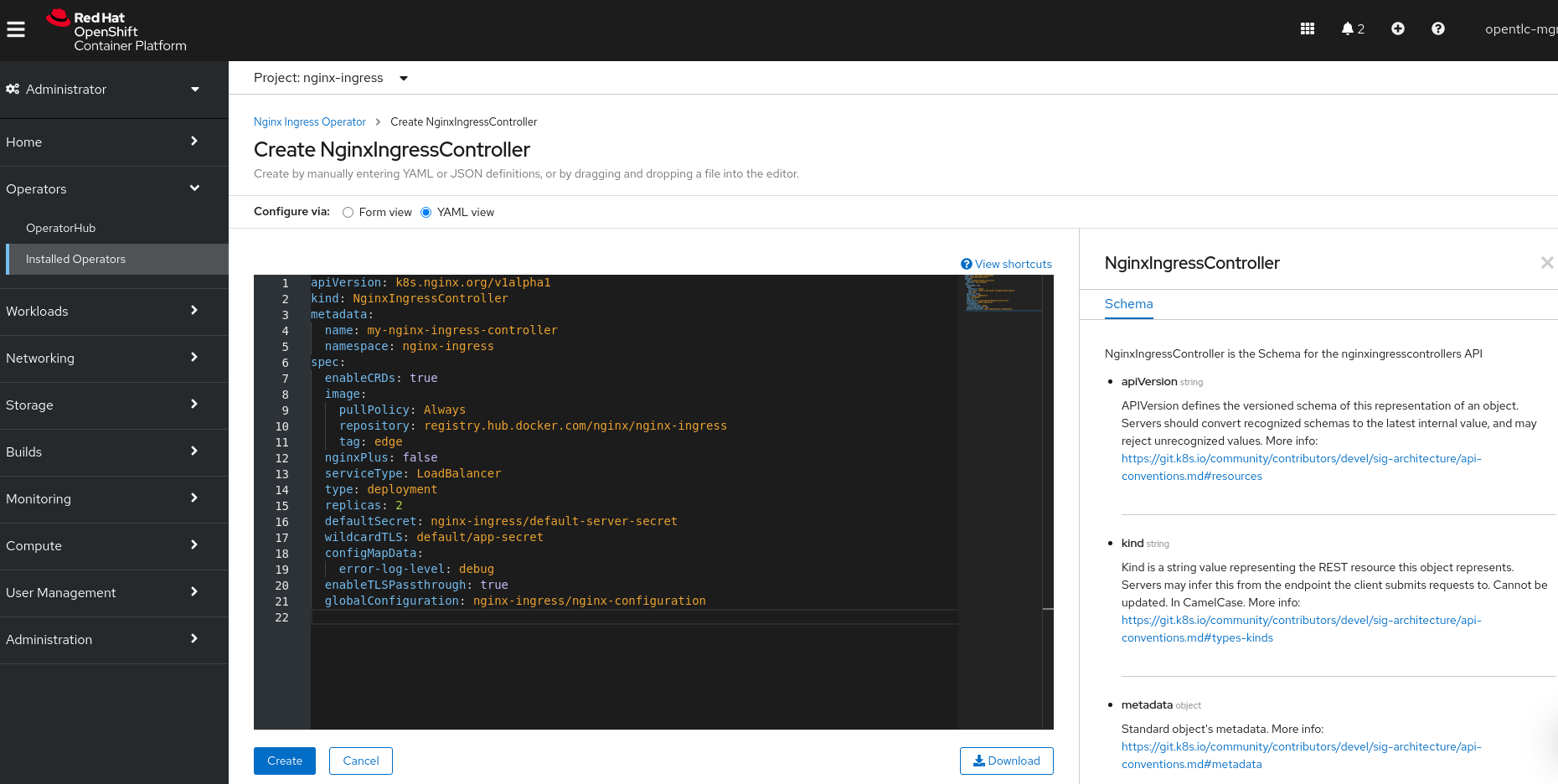
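Behind the OperatorHub console flow, OLM tracks the installation as a `Subscription` object. As a sketch, the Python snippet below builds one as JSON, which `oc apply -f -` accepts.

Two of the values are assumptions: the package name `nginx-ingress-operator` and the `alpha` channel. Confirm both on the operator’s OperatorHub page before using them. Installing into `openshift-operators` relies on the cluster-wide OperatorGroup that OpenShift provides in that namespace; any other namespace would also need its own OperatorGroup.

```python
import json


def operator_subscription(package: str = "nginx-ingress-operator",
                          channel: str = "alpha",
                          namespace: str = "openshift-operators") -> dict:
    """Build an OLM Subscription for a certified operator.

    The package and channel defaults are assumptions; check them in OperatorHub.
    """
    return {
        "apiVersion": "operators.coreos.com/v1alpha1",
        "kind": "Subscription",
        "metadata": {"name": package, "namespace": namespace},
        "spec": {
            "name": package,                      # package name in the catalog
            "channel": channel,                   # update channel to follow
            "source": "certified-operators",      # Red Hat certified catalog
            "sourceNamespace": "openshift-marketplace",
            "installPlanApproval": "Automatic",   # let OLM apply updates
        },
    }


if __name__ == "__main__":
    # Pipe this output to `oc apply -f -` to create the Subscription.
    print(json.dumps(operator_subscription(), indent=2))
```

Running the script and piping it to `oc apply -f -` would create the subscription. OLM would then resolve and install the operator much as the console does. Setting `installPlanApproval` to `Manual` instead would make the team approve each upgrade explicitly.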

Deploying the NGINX Ingress Controller with the Certified Operator

Next, they use the operator to deploy an instance of the NGINX Ingress Controller, starting from the YAML example on GitHub. The team learns that they can modify the NGINX Ingress Controller custom resource in different ways to include NGINX App Protect or other features their environment needs.
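The YAML example describes a `NginxIngress` custom resource whose `spec` mirrors the values of the NGINX Ingress Controller Helm chart. The Python sketch below builds a comparable resource to show where a feature such as NGINX App Protect is switched on.

The `charts.nginx.org/v1alpha1` API version and the `controller.*` keys are assumptions drawn from the Helm chart. They may differ between operator releases, so compare them with the example in the repository. The image tag is deliberately left as a `TAG` placeholder.

```python
import json


def nginx_ingress_cr(name: str, namespace: str, image_repo: str,
                     image_tag: str, nginx_plus: bool = False,
                     app_protect: bool = False, replicas: int = 1) -> dict:
    """Build a NginxIngress custom resource.

    Field names are assumed from the Helm chart values; verify against the
    example shipped with the operator.
    """
    if app_protect and not nginx_plus:
        # NGINX App Protect is only available with NGINX Plus.
        raise ValueError("app_protect requires nginx_plus=True")
    return {
        "apiVersion": "charts.nginx.org/v1alpha1",
        "kind": "NginxIngress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "controller": {
                "kind": "deployment",          # or "daemonset"
                "replicaCount": replicas,
                "nginxplus": nginx_plus,
                "image": {"repository": image_repo, "tag": image_tag},
                "appprotect": {"enable": app_protect},
            }
        },
    }


if __name__ == "__main__":
    # TAG is a placeholder: substitute a real controller release tag.
    cr = nginx_ingress_cr("my-nginx-ingress", "nginx-ingress",
                          "nginx/nginx-ingress", "TAG")
    print(json.dumps(cr, indent=2))
```

App Protect ships only with NGINX Plus. For that reason, the sketch refuses to enable it on the open source image rather than emit a resource the controller could not run.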

Now that the Operator and the NGINX Ingress Controller are installed, the team decides to deploy a test application, using the GitHub repository NGINX has made available for that purpose.
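NGINX’s test application is the “cafe” example, which routes `/tea` and `/coffee` on one host to two services. The sketch below expresses a comparable `networking.k8s.io/v1` Ingress in Python. It adds a small resolver that mimics `Prefix` path matching (element by element, longest match wins) to show which service a request would reach.

The following names are assumptions modeled on that example; check the repository for the exact manifests:

- the host `cafe.example.com`
- the `tea-svc` and `coffee-svc` service names
- the `cafe-secret` TLS secret
- the `nginx` ingress class

```python
from typing import Optional


def cafe_ingress(host: str = "cafe.example.com",
                 ingress_class: str = "nginx") -> dict:
    """networking.k8s.io/v1 Ingress modeled on the NGINX cafe example."""
    def path_rule(path: str, service: str) -> dict:
        return {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": 80}}},
        }

    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "cafe-ingress"},
        "spec": {
            "ingressClassName": ingress_class,
            "tls": [{"hosts": [host], "secretName": "cafe-secret"}],
            "rules": [{
                "host": host,
                "http": {"paths": [path_rule("/tea", "tea-svc"),
                                   path_rule("/coffee", "coffee-svc")]},
            }],
        },
    }


def route(ingress: dict, host: str, path: str) -> Optional[str]:
    """Return the backend service for a request using Prefix semantics:
    whole path elements are compared and the longest match wins."""
    request_parts = [p for p in path.split("/") if p]
    best, best_len = None, -1
    for rule in ingress["spec"]["rules"]:
        if rule.get("host") != host:
            continue
        for entry in rule["http"]["paths"]:
            parts = [p for p in entry["path"].split("/") if p]
            if request_parts[:len(parts)] == parts and len(parts) > best_len:
                best = entry["backend"]["service"]["name"]
                best_len = len(parts)
    return best
```

With this resource, `route(cafe_ingress(), "cafe.example.com", "/tea/green")` returns `"tea-svc"`. A request for `/teapot` matches nothing, because `Prefix` compares whole path elements rather than raw strings.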

Once the team deploys the cafe application, they use the NGINX Plus status page to monitor the services running on the OpenShift nodes, whichever infrastructure those nodes are deployed on.
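The NGINX Plus status dashboard is backed by the NGINX Plus REST API. Its `/api/<version>/http/upstreams` endpoint returns each upstream with a `peers` list, and each peer carries a `state` such as `up`, `down`, `unhealthy`, or `draining`. The sketch below summarizes that JSON into healthy and total peer counts per upstream, which could help flag a service that has lost endpoints on one infrastructure.

A few caveats apply:

- The upstream names and addresses in the sample are hypothetical.
- Real responses contain many more fields.
- The API version in the URL depends on the NGINX Plus release.

```python
import json
from typing import Dict, List, Tuple


def upstream_health(upstreams: Dict[str, dict]) -> Dict[str, Tuple[int, int]]:
    """Map each upstream name to (peers in state "up", total peers)."""
    summary = {}
    for name, upstream in upstreams.items():
        peers = upstream.get("peers", [])
        up = sum(1 for peer in peers if peer.get("state") == "up")
        summary[name] = (up, len(peers))
    return summary


def degraded(summary: Dict[str, Tuple[int, int]]) -> List[str]:
    """Names of upstreams with at least one peer not in state "up"."""
    return sorted(name for name, (up, total) in summary.items() if up < total)


if __name__ == "__main__":
    # Hypothetical, trimmed response from GET /api/<version>/http/upstreams.
    sample = json.loads("""
    {
      "tea-svc":    {"peers": [{"server": "10.128.0.12:8080", "state": "up"},
                               {"server": "10.129.2.7:8080", "state": "up"}]},
      "coffee-svc": {"peers": [{"server": "10.128.0.15:8080", "state": "up"},
                               {"server": "10.131.0.4:8080", "state": "unhealthy"}]}
    }
    """)
    summary = upstream_health(sample)
    print(summary)            # {'tea-svc': (2, 2), 'coffee-svc': (1, 2)}
    print(degraded(summary))  # ['coffee-svc']
```

Keeping the summary logic separate from the HTTP call means the same functions work whether the JSON comes from a live request, a saved file, or a monitoring pipeline.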

Conclusion

Fenderson’s team is only beginning to unlock the capabilities of the open source solutions that Red Hat and NGINX have created. The team is excited to begin migrating its applications onto Red Hat OpenShift and to gain more flexibility in where and how it runs them in a highly available multi-cloud environment.

In case you missed them, you can find all posts in our DSE series in one place. See how Red Hat solutions have helped DSE implement DevSecOps, embrace GitOps, and more.

About the author

Cameron Skidmore is a Global ISV Partner Solution Architect. He has experience in enterprise networking, communications, and infrastructure design. He started his career in Cisco networking, later moving into cloud infrastructure technology. He now works as the Ecosystem Solution Architect for the Infrastructure and Automation team at Red Hat, which includes the F5 Alliance. He likes yoga, tacos and spending time with his niece.
