In this blog series, we will introduce the Red Hat OpenShift sandboxed containers peer-pods feature, which has been released as a dev-preview feature in Red Hat OpenShift 4.12.

In this post, we will provide a high-level solution overview of the new peer-pods feature. Other posts will document a technical deep dive, as well as deployment and hands-on instructions for using peer-pods.

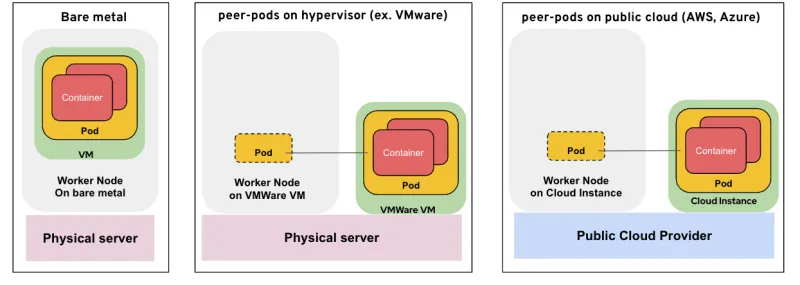

Peer-pods take the existing OpenShift sandboxed containers solution targeted for bare-metal deployments, and extend it to run on public cloud (starting with AWS) and OpenShift deployments running on VMware. Peer-pods are also a key enabler for confidential containers, which we’ll also cover in this blog post.

How does the peer-pods solution work?

As detailed in this article, OpenShift sandboxed containers provides the means to deliver Kata Containers to OpenShift cluster nodes. This adds a new runtime to the platform, which can run workloads in a separate virtual machine (VM) containing a pod, and then starts containers inside that pod. The existing solution is based on QEMU and KVM as the means to launch VMs on bare metal servers. The implication is that this solution is currently only supported on bare metal servers. These bare metal servers are the OpenShift Container Platform worker nodes, and OpenShift sandboxed containers will use QEMU/KVM to spin up VMs on those servers. This is as opposed to a more typical deployment of OpenShift Container Platform where the controller/worker nodes are deployed as VMs on top of the bare metal servers (for example in public cloud deployments).

Why can’t the workloads (pods within VMs) run on OpenShift Container Platform nodes that are themselves VMs? Because each workload VM would then run inside another VM (nested virtualization), and Red Hat does not support such deployments in production environments. Some cloud providers (such as AWS) also don’t support nested virtualization, so even if Red Hat supported it, the workloads could not be deployed on an OpenShift Container Platform cluster running in those clouds.

So the question is: How do we run OpenShift sandboxed container workloads in an OpenShift Container Platform cluster on a public cloud or on a VM-based OpenShift Container Platform deployment (such as a cluster installed over VMware), given these constraints?

Enter peer-pods, also known as the Kata remote hypervisor (note that this repository is in the confidential containers GitHub and not in the Kata GitHub; we will get back to this point later on).

The peer-pods solution enables the creation of Kata VMs in any environment without requiring bare metal servers or nested virtualization support (yep, it’s magic). It does this by extending the Kata Containers runtime to handle VM lifecycle management using cloud provider APIs (e.g., AWS, Azure) or third-party hypervisor APIs (such as VMware vSphere).
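To make the idea of "VM lifecycle management through provider APIs" more concrete, here is a minimal Python sketch. It is not the actual Kata or peer-pods code: the names `RemoteHypervisor`, `PeerVM` and `FakeCloud` are hypothetical, and the in-memory `FakeCloud` only stands in for a real cloud or hypervisor API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
import itertools


@dataclass(frozen=True)
class PeerVM:
    """Handle for a VM created outside the worker node (hypothetical type)."""
    vm_id: str
    ip_address: str


class RemoteHypervisor(ABC):
    """Hypothetical interface: one implementation per cloud or hypervisor API."""

    @abstractmethod
    def create_vm(self, pod_name: str) -> PeerVM: ...

    @abstractmethod
    def delete_vm(self, vm: PeerVM) -> None: ...


class FakeCloud(RemoteHypervisor):
    """In-memory stand-in for a real provider such as AWS or vSphere."""

    def __init__(self) -> None:
        self.running = {}  # pod name -> PeerVM
        self._ids = itertools.count(1)

    def create_vm(self, pod_name: str) -> PeerVM:
        n = next(self._ids)
        vm = PeerVM(vm_id=f"vm-{n}", ip_address=f"10.0.0.{n}")
        self.running[pod_name] = vm
        return vm

    def delete_vm(self, vm: PeerVM) -> None:
        # Drop every entry that points at this VM.
        self.running = {p: v for p, v in self.running.items() if v != vm}
```

The point of the sketch is the shape of the design: the runtime only talks to an interface with "create" and "delete" operations, so supporting another cloud or hypervisor means adding another implementation rather than changing the runtime itself.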

To help explain things, let's start by comparing a standard pod to an OpenShift sandboxed containers (Kata) pod, and add a Red Hat OpenShift Virtualization workload to the mix (to emphasize the differences) and then show how the peer-pods fit in.

Standard pod versus OpenShift sandboxed containers pod

First, we want to compare a standard pod to an OpenShift sandboxed containers pod:

The standard pod (left) runs on top of an OpenShift Container Platform worker node and within it, containers are spun up.

In the case of an OpenShift sandboxed containers pod (right), another layer of isolation for each pod is added using a VM (which for OpenShift sandboxed containers runs a CoreOS image or an isolated kernel). The VM is launched using QEMU/KVM with a 1:1 mapping between it and the isolated pod. This means that the lifecycle of the VM (QEMU/KVM based) is tightly coupled to the pod’s lifecycle. Similar to the standard pod, in an OpenShift sandboxed containers pod multiple containers are spun up.

Comparing OpenShift sandboxed containers with OpenShift Virtualization

It’s important to mention that there is another project which uses VMs in OpenShift and that is OpenShift Virtualization based on the KubeVirt project.

The OpenShift Virtualization solution (right) allows customers to bring their own VMs with their own OS. Each VM runs inside a pod on top of a worker node.

The OpenShift sandboxed containers solution (center) allows customers to bring their own container workloads (the same as the standard pod case) and use a VM layer to isolate the pod where the customers' workloads are running. So the fundamental difference is that for OpenShift sandboxed containers, the customer is not directly aware of the VM, but rather only sees the resulting additional isolation layer, while for OpenShift Virtualization the customer is fully aware of the VM.

So when we next look at peer-pods, keep in mind that we are only looking at the OpenShift sandboxed containers-based solution.

OpenShift sandboxed containers with peer-pods

So now let’s compare the existing solution to a peer-pods-based solution:

In the solution on the left, the bare metal server itself is the OpenShift worker node, with the OpenShift sandboxed containers VM running on top using QEMU/KVM.

In the solution in the middle, something interesting starts to emerge.

The OpenShift worker node is now running as a VM on top of the hypervisor (such as VMware). The OpenShift sandboxed containers VM, however, is created as yet another VM on the hypervisor (not nested), at the same level as the worker node VM. You can think of these VMs as neighbors, or peers. From the worker node’s perspective, a pod has been created on it. In practice, however, a pod has also been created on the peer VM, and both pods are seamlessly connected to resemble a single entity from the OpenShift perspective. The solution consisting of a peer VM and the two connected pods is the peer-pods solution.

For creating the peer VM and seamlessly connecting the pods, we invoke the hypervisor APIs (VMware in this case). It is important to mention that we could have invoked QEMU APIs to create the VM on the worker node VM itself, but, as we already mentioned, this can’t be supported in production.

In the solution on the right, we are extending a similar solution to the public cloud. In this case, the OpenShift worker node is running as a VM on a public cloud (AWS in this example, although it can be any public cloud). OpenShift sandboxed containers with peer-pods invokes the AWS APIs to create a peer VM, then creates a pod inside that VM and seamlessly connects the worker node pod to the pod inside the peer VM.

Assuming you have been able to follow us until this point, let’s dive deeper in the next section to explain what it actually means to create the peer VM and seamlessly connect the pods.

The peer-pods architecture

Local hypervisor versus remote hypervisor

As mentioned, OpenShift sandboxed containers is based on the Kata project.

In this section, we will use the terminology of Kata instead of OpenShift sandboxed containers and Kubernetes (k8s) instead of OpenShift to help follow the upstream terminology. For this discussion, however, we can assume that OpenShift sandboxed containers and Kata mean the same thing, as do k8s and OpenShift.

The following diagram compares the existing Kata solution (local hypervisor) and the Kata peer-pods solution (remote hypervisor):

In the current Kata solution (top), the Kata runtime will use a local hypervisor (QEMU/KVM) to create a Kata VM and pod inside on top of the k8s worker node, which for us is a bare metal server.

In the Kata peer-pods solution (bottom), the Kata runtime will use a remote hypervisor (public cloud APIs or third-party hypervisor APIs) to spin up a VM external to the k8s worker node and create the pod inside it.

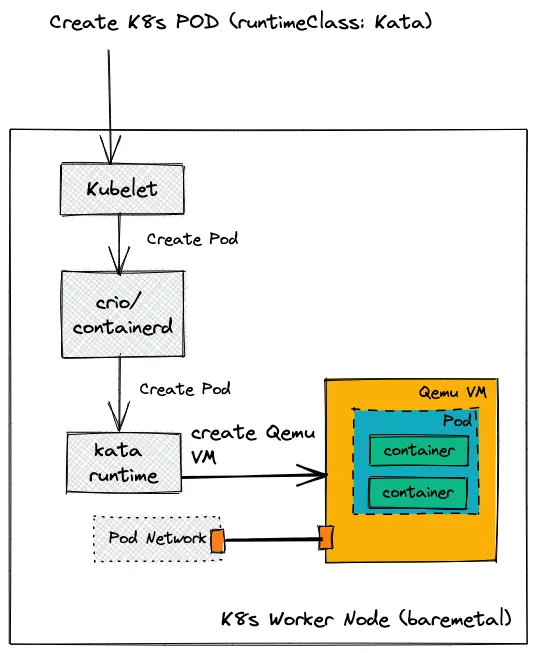

Creating a pod

The following diagrams show the flow of creating a Kata workload today versus creating a Kata peer-pods workload on top of AWS:

Let’s take a closer look at the differences between these solutions for creating a pod:

- The first steps for both solutions are the same, with Kubelet invoking CRI-O/containerd, which then calls the Kata runtime.

- In the current Kata solution (top) the Kata runtime uses QEMU/KVM to create a VM on the current server with a pod within the VM (and spins up containers inside the pod).

- In the Kata peer-pods solution (bottom) the VM is created by invoking EC2 APIs on a remote server. Inside that VM we have our pods and containers.

- The difference you immediately see between these solutions is the networking portion: in the current solution (top) we can simply connect the pod we created to the pod network. In the peer-pods solution (bottom), however, we create a tunnel from the k8s worker node to the peer-pod VM (we call this the underlay network), and over it we connect the pod inside the VM to the pod networking inside the k8s worker node (we call this the overlay network).

- In a similar manner (not shown in this image), we need to connect the external VM’s storage and logs to the k8s worker node.

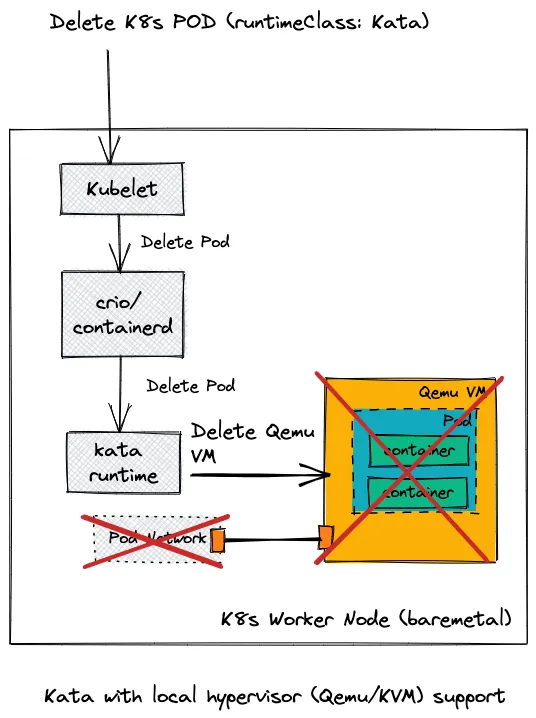
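The creation flow described above can be summarized as an ordered list of steps. The following Python sketch is only an illustration of that ordering: the function name `creation_steps` and the step descriptions are our own, not part of Kata.

```python
def creation_steps(remote: bool) -> list[str]:
    """Return the pod creation steps for a local or remote hypervisor."""
    # Both flows start the same way.
    steps = [
        "Kubelet invokes CRI-O/containerd",
        "CRI-O/containerd calls the Kata runtime",
    ]
    if remote:
        # Peer-pods: the VM lives outside the worker node, so it needs a tunnel.
        steps += [
            "create peer VM through the cloud provider API",
            "create tunnel between worker node and peer VM (underlay)",
            "connect pod in peer VM to the pod network (overlay)",
        ]
    else:
        # Local hypervisor: the VM runs on the worker node itself.
        steps += [
            "create VM with QEMU/KVM on the worker node",
            "connect pod to the pod network",
        ]
    return steps
```

Calling `creation_steps(False)` and `creation_steps(True)` side by side shows that the two flows share their first two steps and diverge only at VM creation and networking.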

Deleting a pod

The following diagram shows the flow of deleting a Kata workload today versus deleting a Kata peer-pods workload on top of AWS:

Let’s take a closer look at the differences between these solutions for deleting a pod:

- The first steps for both solutions are the same, with Kubelet invoking CRI-O/containerd, which then calls the Kata runtime.

- In the current Kata solution (top) the Kata runtime uses QEMU/KVM to delete a VM on the current server with a pod within the VM (and containers within the pod).

- In the Kata peer-pods solution (bottom) the VM is deleted by invoking EC2 APIs on a remote server. Inside that VM the pods and containers are also removed.

- For the standard Kata use case (top), the pod networking is deleted as part of the deletion of all the resources. For the Kata peer-pods case, however, we also need to remove the networking tunnel we created between the peer VM and the k8s worker node VM (deleting first the overlay network and then the underlay network).

- In a similar manner (not shown in this image), storage and logging resources connected to the peer-pods VM are also removed in the process.
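Tearing resources down in the reverse order of their creation is a common pattern, and it matches the overlay-then-underlay order described above. Here is a minimal Python sketch using the standard library's `contextlib.ExitStack`, which runs registered callbacks last-in, first-out. The function name and step strings are hypothetical, and deleting the VM last is an assumption of this sketch rather than something the peer-pods implementation guarantees.

```python
from contextlib import ExitStack


def run_peer_pod(log: list[str]) -> None:
    """Record create steps, then undo them in reverse order on exit."""
    with ExitStack() as cleanup:
        log.append("create peer VM")
        cleanup.callback(log.append, "delete peer VM")

        log.append("create underlay tunnel")
        cleanup.callback(log.append, "delete underlay tunnel")

        log.append("create overlay network")
        cleanup.callback(log.append, "delete overlay network")

        log.append("pod runs")
    # Leaving the with-block ran the callbacks in last-in, first-out order.
```

After `run_peer_pod(log)` returns, the last three entries of `log` are the overlay, underlay and VM deletions, in that order.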

Why do we need peer-pods?

Now that we have covered what peer-pods are and how the solution works, let’s look at the use cases: why do we need peer-pods?

OpenShift sandboxed containers deployed on public cloud or third-party hypervisors

As mentioned before, one important use case for OpenShift sandboxed containers peer-pods is the ability to deploy the OpenShift sandboxed containers solution on different public clouds and third-party hypervisors. One example of a third-party hypervisor deployment is an OpenShift cluster running in VMs over VMware. Another example is a cluster running in VMs over Red Hat Enterprise Linux on the host. Since peer-pods avoid the need for running nested VMs, we can now safely deploy OpenShift sandboxed containers on such deployments in production.

OpenShift sandboxed containers is a powerful solution with its additional isolation kernel layer and provides these benefits:

- It can protect the host from malicious workloads running in the pods

- It can protect a workload from potentially being attacked by another workload running on the cluster

- It provides a guardrail to cluster administrators for safely running pods requiring privileged capabilities

Customers can take advantage of these capabilities on any deployment of OpenShift Container Platform, rather than only on bare metal deployments.

Confidential containers

The CNCF confidential containers project (CoCo) was created this year to enable cloud-native confidential computing by leveraging trusted execution environments (TEEs) to protect containers and data.

For more details on what this project is about and why Red Hat cares about this technology see our blog post: What is the Confidential Containers project?

If we combine OpenShift sandboxed containers with CoCo, our previous list of benefits gains a fourth capability:

- It can protect the host from malicious workloads running in the pods

- It can protect a workload from potentially being attacked by another workload running on the cluster

- It provides a guardrail to cluster administrators for safely running pods requiring privileged capabilities

- It can protect a workload from the host trying to access its private information or its secret sauce

So how does this all connect to peer-pods?

The CoCo solution embeds a k8s pod inside a VM together with an engine called the enclave software stack. There is a one-to-one mapping between a k8s pod and a VM-based TEE (or enclave).

In other words, to take advantage of the hardware (HW) encryption capabilities we use in confidential containers, we need a VM object. This VM object can’t be nested (HW limitations), and in the majority of cases it will need to be connected to a k8s cluster already deployed on VMs (and not on bare metal servers).

Peer-pods are the way to spin up these additional VMs as peers instead of nested to leverage the HW encryption capabilities. For that reason, peer-pods are a fundamental part of the CoCo project and a lot of the peer-pods code is developed under the confidential containers repository.

Demo: Running privileged pipelines using OpenShift sandboxed containers on AWS

Let’s dive into an actual demo showing how OpenShift sandboxed containers peer-pods can be used to solve a real customer problem for an OpenShift cluster running on AWS:

We have two developers, and only one of them is allowed to use elevated privileges (containers running as root).

- Our goal is to run an OpenShift pipeline in an OpenShift cluster running on AWS.

- In our case, the OpenShift pipeline job requires elevated privileges (happens in a number of cases).

- The developer without the elevated privileges will fail to run the pipeline due to insufficient privileges.

- The developer with elevated privileges will succeed in running the pipeline. However, the elevated privileges granted to this developer by the cluster administrator could potentially harm the cluster, and thus are typically disabled in a production environment. At the same time, there are scenarios where elevated privileges are required, and disabling them limits developer productivity and flexibility.

- The demo shows how we can force all the workloads created by the developer with elevated privileges to run using OpenShift sandboxed containers (Kata runtime).

- By doing this, we are protecting the OpenShift cluster from potential mistakes that this developer could perform (intentionally or not) with workloads running with root access.

- This enables running OpenShift pipelines with elevated privileges in production environments.
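In Kubernetes, a pod selects its runtime through the `runtimeClassName` field of the pod spec. As a rough illustration of what "forcing" a workload onto the Kata runtime means, the Python sketch below patches a pod manifest (represented as a plain dict) to set that field. The helper name `force_sandboxed_runtime` is hypothetical, the default value `"kata"` is an assumption (check the runtime class names installed on your cluster), and this is not how the demo itself is implemented.

```python
import copy


def force_sandboxed_runtime(pod: dict, runtime_class: str = "kata") -> dict:
    """Return a copy of the pod manifest that requests the given runtime class.

    "kata" is an assumed default; use the runtime class name that exists
    on your cluster.
    """
    patched = copy.deepcopy(pod)  # leave the caller's manifest untouched
    patched.setdefault("spec", {})["runtimeClassName"] = runtime_class
    return patched
```

In a real cluster this kind of rewrite would typically be applied automatically at admission time, so that the developer does not have to remember to set the field.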

You can watch the video here:

Summary

In this blog post, we provided an introduction to the OpenShift sandboxed containers peer-pods feature, the reasoning behind it and how it differs from the current OpenShift sandboxed containers solution.

We also provided a short overview of the OpenShift sandboxed containers peer-pods architecture and provided some insight into the planned use cases.

In the next post, we will dive into the technical details of this solution.

About the authors

Pradipta is working in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF confidential containers project.
