In a 2019 report, 451 Research called the service mesh concept “a Swiss Army Knife of modern-day software, solving for the most vexing challenges of distributed microservices-based applications.” In fact, service mesh is becoming increasingly important as companies expand their use of microservices.

In a recent O’Reilly survey, 28% of respondents said their organizations have been using microservices for at least three years, while 61% said their organizations have been using microservices for a year or more. In the survey, conducted among about 1,500 subscribers to O’Reilly publications, respondents noted that the biggest benefits of microservices include feature flexibility, the ability to respond quickly to changing technology and business requirements, better overall scalability, and more frequent code refreshes.

In today’s constantly changing business and global environment, these benefits aren’t just nice-to-haves; they are must-haves to attain and maintain a competitive advantage.

But, of course, nothing comes without tradeoffs, and the biggest tradeoff to the benefits of microservices is complexity. According to respondents to the O’Reilly survey, that complexity comes from both the act of breaking down legacy monolithic applications into microservices and managing microservices in general.

This is where service mesh comes in.

What is a service mesh?

To carry out a single function, one service may need to request data from dozens of other services, a complex and often error-prone process, especially as microservices scale. A service mesh helps head off problems by automatically routing requests from one service to the next while optimizing how all these moving parts work together.

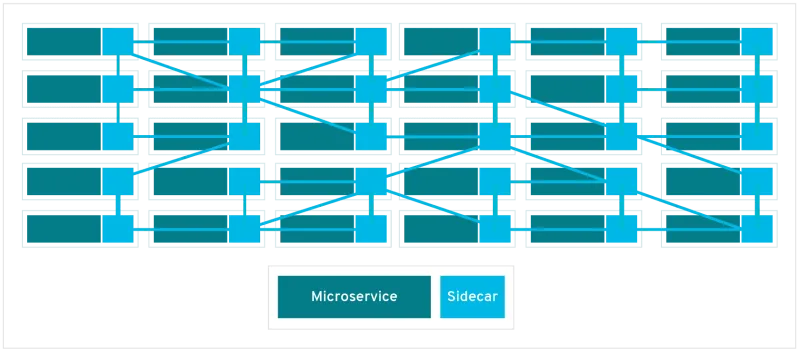

The service mesh is a dedicated, configurable infrastructure layer built into an app that can document how the different parts of the app’s microservices interact. There are a couple of ways to implement a service mesh, but most often a sidecar proxy is attached to each microservice as a contact point. (The interactions among the sidecars are what put the “mesh” in “service mesh.”) Sidecars enable service requests to flow through the application, helping to smooth the data path among an application’s microservices.
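To make the sidecar idea concrete, here is a minimal in-process sketch. It is only an illustration: the `Mesh` and `Sidecar` classes and the `orders` and `inventory` services are hypothetical names, and a real mesh runs its proxies as separate processes alongside each service rather than as Python objects.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


class Mesh:
    """Toy registry that maps service names to their sidecars (hypothetical)."""

    def __init__(self) -> None:
        self.sidecars: Dict[str, "Sidecar"] = {}

    def register(self, name: str, handler: Handler) -> "Sidecar":
        sidecar = Sidecar(name, handler, self)
        self.sidecars[name] = sidecar
        return sidecar


class Sidecar:
    """In-process stand-in for a sidecar proxy attached to one service."""

    def __init__(self, name: str, handler: Handler, mesh: Mesh) -> None:
        self.name = name
        self.handler = handler
        self.mesh = mesh

    def call(self, target: str, payload: str) -> str:
        # Outbound: the service hands the request to its own sidecar,
        # which looks up the target's sidecar instead of the target itself.
        peer = self.mesh.sidecars[target]
        return peer.receive(self.name, payload)

    def receive(self, source: str, payload: str) -> str:
        # Inbound: the receiving sidecar passes the request to its local
        # service. `source` is where a policy check could be applied.
        return self.handler(payload)


mesh = Mesh()
orders = mesh.register("orders", lambda p: f"order:{p}")
inventory = mesh.register("inventory", lambda sku: f"{sku} in stock")

# The orders service reaches inventory only through the two sidecars.
result = orders.call("inventory", "sku-42")
```

Because every request passes through a sidecar on both ends, routing rules can change without touching the service code itself.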

Of course, sometimes that path is still a little (or a lot) bumpy, and in a complex microservices architecture, it can be challenging to determine where problems originate. The service mesh captures all elements of service-to-service communication as performance metrics; the resulting data on what works most effectively can be applied to the rules for communication, resulting in more efficient and reliable requests.
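As a rough sketch of how communication can be captured as metrics, the snippet below wraps each call and records its latency and outcome per source and destination pair. The `observed_call` and `error_rate` helpers are assumptions made for illustration, not the API of any particular mesh.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# (source, destination) -> list of (latency_seconds, succeeded)
metrics: Dict[Tuple[str, str], List[Tuple[float, bool]]] = defaultdict(list)


def observed_call(source: str, destination: str, fn: Callable[[], str]) -> str:
    """Run fn and record its latency and outcome, as a proxy might."""
    start = time.perf_counter()
    ok = False
    try:
        result = fn()
        ok = True
        return result
    finally:
        # Recorded whether the call returned or raised.
        metrics[(source, destination)].append((time.perf_counter() - start, ok))


def error_rate(source: str, destination: str) -> float:
    """Fraction of recorded calls between the two services that failed."""
    samples = metrics[(source, destination)]
    if not samples:
        return 0.0
    failures = sum(1 for _, ok in samples if not ok)
    return failures / len(samples)


def flaky() -> str:
    raise RuntimeError("upstream timed out")


observed_call("orders", "inventory", lambda: "ok")
try:
    observed_call("orders", "inventory", flaky)
except RuntimeError:
    pass

rate = error_rate("orders", "inventory")
```

A figure like `rate` is the kind of data that could then feed back into the rules for communication, for example by steering traffic away from a destination with a high error rate.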

The use of a service mesh can also help to harden applications and make them more resilient. For example, with the Istio service mesh, organizations can consistently enforce authentication, encryption, and other policy across diverse protocols or runtimes. In addition, service mesh provides the ability to reroute requests from failed services, increasing resiliency.
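Rerouting away from a failed service can be pictured with a small failover loop. This is a sketch under simple assumptions: the `call_with_failover` helper and the two replicas are hypothetical, and a real mesh such as Istio handles this in its proxies through configuration rather than in application code.

```python
from typing import Callable, List, Optional

Endpoint = Callable[[str], str]


def call_with_failover(endpoints: List[Endpoint], payload: str) -> str:
    """Try each replica in order and return the first successful response."""
    last_error: Optional[Exception] = None
    for endpoint in endpoints:
        try:
            return endpoint(payload)
        except ConnectionError as exc:
            # This replica is unavailable, so move on to the next one.
            last_error = exc
    raise RuntimeError("all endpoints failed") from last_error


def replica_a(payload: str) -> str:
    raise ConnectionError("replica a is down")


def replica_b(payload: str) -> str:
    return f"b handled {payload}"


# The request is rerouted to replica_b after replica_a fails.
result = call_with_failover([replica_a, replica_b], "req-1")
```

The calling service sees a single successful response and never needs to know that the first replica was down.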

Service mesh options

There are a number of service mesh options available for specific cloud instances or through upstream communities like Istio. The differences among these options are based on the capabilities they offer, above and beyond basic service discovery, observability, security, and monitoring. A table comparing the major service mesh offerings, as well as best practices for evaluating service meshes, can be found here.

How does Kubernetes fit in?

Many companies deploying microservices are doing so via containers. Kubernetes has become the de facto standard for container orchestration, and service mesh works with Kubernetes (or, more specifically, on top of Kubernetes). While Kubernetes focuses on managing the application, the service mesh focuses on making communications among services safe and reliable at the infrastructure level.

The Istio service mesh, for example, works on top of Kubernetes. Earlier this year, Red Hat, which has been a major contributor to the open source Istio platform, released a productized version of Istio for its OpenShift distribution of Kubernetes.

Bottom line

As seen in the productization of service mesh mentioned above, the technology is maturing and increasingly aligning with enterprises’ microservices goals and requirements. Working with the organization’s stakeholders, Enterprise Architects should look for the tipping point when libraries built within microservices will no longer handle service-to-service communication without disruption. Having a service mesh in place before the tipping point will go a long way toward ensuring that organizations can make the most of their microservices implementations—now and in the future.

About the author

Deb Donston-Miller is a veteran journalist, specializing in IT, business, career and education content. Deb was editor of eWEEK magazine, content director of eWEEK Labs, and director of audience recruitment and development at Ziff-Davis Enterprise. Deb currently develops content and content strategy for a variety of organizations. Find her on LinkedIn and Instagram. (Please excuse all the running selfies.)
