In the previous articles, we discussed how integrating AI into business-critical systems exposes enterprises to new AI security and AI safety risks [link], and explored the evolving AI security and safety threat landscape, drawing on leading frameworks such as MITRE ATLAS, NIST, and OWASP [link]. In this article, we examine the architectural considerations for deploying AI systems that are both secure and safe.

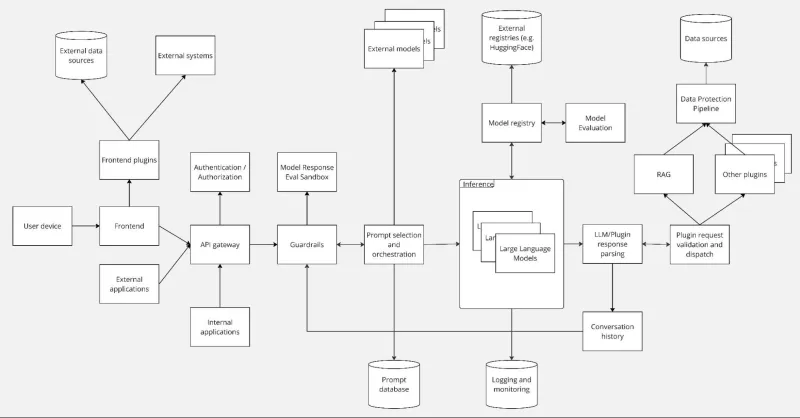

A resilient AI architecture must be designed with a defense-in-depth philosophy, integrating controls that address both traditional cybersecurity threats and unique AI safety risks. The following components are essential pillars of an enterprise-grade AI system implementation that puts security front-and-center. These components are layered on top of each other to create a comprehensive security posture.

1. Edge and ingress

Purpose: Control entry points by gating all external inputs, protecting APIs, and performing early sanitization.

Key component

API gateway: As the entry point for all requests, the API gateway is the first line of defense. Its primary security function in a large language model (LLM) architecture is rate limiting: regulating how many requests a user can make in a given period. This helps mitigate attacks that rely on sending many queries to extract model weights or training data, such as model extraction and model inversion.
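To make the rate-limiting idea concrete, here is a minimal sketch of a per-client token bucket, one common way to cap request rates. The class names, the `client_id` key, and the capacity and refill values are assumptions for illustration. In practice, this logic usually lives in the gateway product's own configuration rather than in application code.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float,
                 now: Optional[float] = None) -> None:
        self.capacity = float(capacity)
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RateLimiter:
    """Keeps one bucket per client so one caller cannot starve the others."""

    def __init__(self, capacity: int = 5, refill_per_second: float = 1.0) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, now)
            self.buckets[client_id] = bucket
        return bucket.allow(now)
```

A gateway would call `allow()` for each incoming request and return an HTTP 429 response when it yields `False`. The optional `now` parameter exists only so the behavior can be tested deterministically.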

2. Identity and access

Purpose: Verify that only authorized actors access models, data, and operator tooling.

Key components

Authentication and authorization: This component confirms that every principal, whether human or system, is identified and authorized to perform a requested action. All communications between components (e.g., application to the AI model) must be authenticated and protected in transit. Instead of relying on long-lived static secrets like API keys, the architecture should use short-lived tokens for programmatic access and scoped service tokens for platform components. Furthermore, the principle of least privilege must be enforced throughout, so that plugins, users, and services have only the access they absolutely need.
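The following sketch shows what "short-lived" and "scoped" mean in practice: a token carries an expiry and a list of scopes, and both are checked on every call. It uses an HMAC signature from the Python standard library purely for illustration. The function names, scope strings, and token layout are assumptions. A production system would more likely rely on an identity provider issuing standard tokens (for example, OAuth 2.0 access tokens).

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional, Sequence


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def _sign(key: bytes, payload: str) -> str:
    return _b64(hmac.new(key, payload.encode("utf-8"), hashlib.sha256).digest())


def issue_token(key: bytes, subject: str, scopes: Sequence[str],
                ttl_seconds: int, now: Optional[float] = None) -> str:
    """Return a signed token that expires `ttl_seconds` from now."""
    now = time.time() if now is None else now
    claims = {"sub": subject, "scopes": sorted(scopes),
              "exp": int(now) + ttl_seconds}
    payload = _b64(json.dumps(claims, sort_keys=True).encode("utf-8"))
    return payload + "." + _sign(key, payload)


def authorize(key: bytes, token: str, required_scope: str,
              now: Optional[float] = None) -> bool:
    """Accept only untampered, unexpired tokens that carry the required scope."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.split(".")
        expected = _sign(key, payload)
        # Constant-time comparison; bytes avoid errors on non-ASCII input.
        if not hmac.compare_digest(signature.encode("utf-8"),
                                   expected.encode("utf-8")):
            return False
        claims = json.loads(base64.urlsafe_b64decode(payload))
    except ValueError:
        return False
    return now < claims["exp"] and required_scope in claims["scopes"]
```

For example, a token issued to a hypothetical `rag-service` with the scope `model:infer` and a five-minute lifetime would be rejected for any other scope, and rejected for every scope once the five minutes have passed.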

3. Model, compute, and tools (runtime)

Purpose: Host and run models with a stronger security footprint and with greater reliability; prevent exploitation of runtime components.

Key components

Inference engine: This is the software that loads and serves the LLM. It's a critical point of interaction with untrusted user input. For security, it should run in isolation, at minimum within a container, to limit the impact of a potential compromise, whether from a vulnerability in the engine itself or from loading a malicious model.

The model: This is the LLM itself. Its integrity must be protected to keep it free from tampering, including being replaced by a malicious version.

Tools: Tools, such as those exposed through the Model Context Protocol (MCP), can extend an AI's functionality but also introduce risks, as they may see all data sent to and from the model and can sometimes elevate privilege. They must be thoroughly vetted, trusted, and restricted in what they are able to do.
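One way to restrict what tools can do is to route every model-requested tool call through a deny-by-default gate. The sketch below is an assumption-laden illustration, not part of MCP or any specific framework: `ToolPolicy`, `ToolGate`, the role names, and the `lookup_order` tool are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet


@dataclass(frozen=True)
class ToolPolicy:
    handler: Callable[..., Any]     # the vetted implementation
    allowed_args: FrozenSet[str]    # argument names the tool may receive
    required_role: str              # role the caller must hold


class ToolGate:
    """Deny-by-default dispatcher for model-requested tool calls."""

    def __init__(self) -> None:
        self._policies: Dict[str, ToolPolicy] = {}

    def register(self, name: str, policy: ToolPolicy) -> None:
        self._policies[name] = policy

    def call(self, name: str, args: Dict[str, Any],
             caller_roles: FrozenSet[str]) -> Any:
        policy = self._policies.get(name)
        if policy is None:
            raise PermissionError(f"tool {name!r} is not on the allowlist")
        if policy.required_role not in caller_roles:
            raise PermissionError(f"caller lacks role {policy.required_role!r}")
        unexpected = set(args) - policy.allowed_args
        if unexpected:
            raise PermissionError(f"unexpected arguments: {sorted(unexpected)}")
        return policy.handler(**args)


def lookup_order(order_id: str) -> str:
    # Hypothetical read-only tool used for illustration.
    return f"order {order_id}: shipped"
```

With `lookup_order` registered for a `support` role, a request for an unregistered tool, a caller without the role, or a call carrying an extra argument all raise `PermissionError` before any tool code runs.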

4. Model and data protection

Purpose: Protect training, fine-tuning, and inference data; confirm model provenance.

Key components

Model registry: This is where models are stored and versioned. Models should be managed in a secure model registry where their supply chain can be vetted. It is essential to confirm that you are using the correct, official model and not a malicious copy from a public hub.
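A basic building block for confirming that you have the correct model is to pin a cryptographic digest for each approved artifact and refuse to load anything that does not match. The sketch below assumes the expected SHA-256 digest comes from a trusted source such as the registry's metadata. The function names are illustrative, and a real registry would typically add signature verification on top of this check.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_sha256: str) -> None:
    """Raise ValueError unless the artifact matches the pinned digest."""
    actual = sha256_of_file(path)
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"digest mismatch for {path.name}: refusing to load")
```

The serving code would call `verify_model_artifact()` before handing the file to the inference engine, so a swapped or corrupted model fails closed.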

External data sources: This includes both the training data for the model and the data used by techniques like retrieval-augmented generation (RAG). It is critically important to protect the integrity of these data sources, as any unauthorized modification can directly affect the LLM's responses or be used to poison the model.

Data protection pipeline: The architecture must account for the security of data used in RAG. Because vector databases are so effective at finding information, a major risk is leaking poorly-permissioned data. Therefore, the architecture must include processes for data discovery, classification, and protection before it is made available to the AI system. The application must access this data strictly within the security context of the user making the request.
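Accessing data "within the security context of the user" can be sketched as a permission filter applied to retrieval results before they reach the prompt. The example below assumes each indexed chunk was labeled with the groups allowed to read it during the classification step. The `Chunk` type, the group names, and the `filter_for_user` function are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence


@dataclass(frozen=True)
class Chunk:
    text: str
    score: float                    # similarity score from the vector search
    allowed_groups: FrozenSet[str]  # set during data classification


def filter_for_user(candidates: Sequence[Chunk], user_groups: FrozenSet[str],
                    top_k: int = 3) -> List[Chunk]:
    """Keep only chunks the requesting user may read, best matches first.

    A chunk with no allowed groups is never returned (deny by default).
    """
    visible = [c for c in candidates if c.allowed_groups & user_groups]
    return sorted(visible, key=lambda c: c.score, reverse=True)[:top_k]
```

Many vector databases can apply an equivalent metadata filter inside the query itself, which is preferable where it is available because restricted content never leaves the store.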

5. Safety and guardrails

Purpose: Keep model behavior aligned with policies, prevent harmful outputs, and mitigate hallucination and bias.

Key components

Runtime guardrails and content filters: This is a critical AI-specific component that acts as a specialized firewall, inspecting both incoming prompts and outgoing responses.

Input filtering: These controls are the primary defense against prompt injection and jailbreaking attacks, where an adversary tries to trick the model into bypassing its safety rules. Input filters can also block attempts to exfiltrate sensitive information or manipulate the model's behavior.

Output filtering: Output filters monitor the model's responses to block harmful content (hate speech, violence, self-harm instructions) and prevent the leakage of protected material like copyrighted text or proprietary source code from public repositories.
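As a rough illustration of where input and output filters sit in the request path, the sketch below uses simple regular expressions. This is a deliberate simplification: production guardrails generally rely on trained classifiers and policy engines, and pattern matching alone is easy to evade. The patterns, the `sk-` key format, and the function names are assumptions.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real deployments need far broader coverage.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]

REDACTION_PATTERNS = [
    ("email", re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")),
    ("api_key", re.compile(r"\bsk-[A-Za-z0-9]{16,}\b")),  # hypothetical key format
]


def check_input(prompt: str) -> Tuple[bool, str]:
    """Return (allowed, matched_rule); block prompts matching a known pattern."""
    for pattern in INJECTION_PATTERNS:
        if pattern.search(prompt):
            return False, pattern.pattern
    return True, ""


def filter_output(response: str) -> Tuple[str, List[str]]:
    """Redact sensitive matches and report which rules were triggered."""
    triggered: List[str] = []
    for label, pattern in REDACTION_PATTERNS:
        response, count = pattern.subn(f"[REDACTED {label}]", response)
        if count:
            triggered.append(label)
    return response, triggered
```

The application would call `check_input()` before sending a prompt to the model and `filter_output()` before returning the response. Both return enough detail to feed the safety metrics discussed under observability.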

Output validators: Fact checking, citation requirements, and secondary verification models help validate the accuracy and reliability of model outputs, reducing the risk of hallucinations that could potentially lead to business harm.

Safety fine-tuning: Techniques like reinforcement learning from human feedback (RLHF) and alignment training, combined with adversarial testing harnesses, help models behave as intended even under adversarial conditions.

6. Observability

Purpose: Provide evidence for investigations, enforce governance and compliance, and drive monitoring and MLOps.

Key component

Logging and monitoring: This is a crucial cross-cutting component. Because LLMs are non-deterministic, comprehensive logging is essential to debug issues, investigate user complaints of harmful responses, and provide an audit trail for all actions within the system. Enterprises should integrate AI system logs with existing SIEM (security information and event management) and SOAR (security orchestration, automation, and response) tools to enable unified threat detection and incident response. Monitoring should track not only system performance but also safety metrics such as guardrail triggers, rejected prompts, and filtered outputs.
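Safety events are easiest to forward to a SIEM when they are emitted as structured records. The sketch below writes one JSON line per guardrail decision and keeps a simple in-process counter for metrics. The field names, the `ai.audit` logger name, and the `ai.guardrail` event type are assumptions to adapt to your own schema. The sketch deliberately logs metadata rather than raw prompt text, which may itself be sensitive.

```python
import json
import logging
from collections import Counter
from datetime import datetime, timezone
from typing import Dict

audit_log = logging.getLogger("ai.audit")  # hypothetical logger name
safety_metrics: Counter = Counter()        # counts keyed by (stage, action)


def record_guardrail_event(request_id: str, user_id: str, stage: str,
                           action: str, rule: str) -> str:
    """Log one guardrail decision as a JSON line and update the counters."""
    event: Dict[str, str] = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": "ai.guardrail",
        "request_id": request_id,
        "user_id": user_id,
        "stage": stage,    # "input" or "output"
        "action": action,  # e.g., "allowed", "blocked", "redacted"
        "rule": rule,
    }
    safety_metrics[(stage, action)] += 1
    line = json.dumps(event, sort_keys=True)
    audit_log.info(line)
    return line
```

The `request_id` field lets an investigator correlate a guardrail event with gateway and inference logs for the same request.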

7. Governance and lifecycle controls

Purpose: Confirm that AI usage aligns with enterprise risk appetite, regulatory obligations, and ethical guidelines.

Key components

Governance framework: Enterprises should integrate AI risk into existing governance frameworks, such as an information security management system (ISMS), rather than creating standalone AI programs. This includes establishing clear policies for AI use, defining roles and responsibilities, and implementing processes for risk assessment and mitigation.

Lifecycle management: AI systems require continuous evaluation throughout their lifecycle. This includes pre-deployment testing, ongoing monitoring of model performance and safety metrics, and regular updates to address emerging threats. Organizations should implement processes for model retraining, version control, and retirement of outdated or compromised models.

Compliance and audit: The architecture must support compliance with relevant regulations and standards, including data protection laws, industry-specific requirements, and emerging AI-specific regulations. Regular audits should verify that controls are functioning as intended and that the system maintains alignment with organizational policies and values.

Conclusion

Building an AI system that prioritizes safety and security requires a holistic, defense-in-depth approach that extends traditional cybersecurity practices while addressing the unique challenges of AI technology. By implementing these seven architectural pillars, enterprises can create AI systems that are more resilient to both traditional cyber threats and AI-specific attacks, while helping to align outputs with organizational values and regulatory requirements.

The key is recognizing that AI security and AI safety are not isolated concerns but must be integrated throughout the entire system architecture. From the edge to the core, from development to deployment, security and safety considerations must be embedded at every layer. Organizations that take this comprehensive approach will be well positioned to unlock AI's business value while maintaining the trust of customers, employees, and regulators.

Navigate your AI journey with Red Hat. Contact Red Hat AI Consulting Services for AI security and safety discussions for your business.

Please reach out to us with any questions or comments.

About the authors

Ishu Verma is a Technical Evangelist at Red Hat focused on emerging technologies like edge computing, IoT, and AI/ML. He and fellow open source hackers work on building solutions with next-gen open source technologies. Before joining Red Hat in 2015, Verma worked at Intel on IoT gateways and building end-to-end IoT solutions with partners. He has been a speaker and panelist at IoT World Congress, DevConf, Embedded Linux Forum, Red Hat Summit, and other on-site and virtual forums. He lives in the Valley of the Sun, Arizona.

Florencio has had cybersecurity in his veins since he was a kid. He started in cybersecurity around 1998 (time flies!), first as a hobby and then professionally. His first job required him to develop a host-based intrusion detection system in Python for Linux for a research group at his university. Between 2008 and 2015 he had his own startup, which offered cybersecurity consulting services. He was CISO and head of security of a big retail company in Spain (more than 100k RHEL devices, including POS systems). Since 2020, he has worked at Red Hat as a Product Security Engineer and Architect.
