Red Hat OpenShift Container Platform 4 enables the mass production of container hosts at scale across cloud providers, virtualization platforms, and bare metal. To create a true cloud platform, we had to tightly control the supply chain to increase the reliability of the sophisticated automation. Standardizing on Red Hat Enterprise Linux CoreOS (a variant of Red Hat Enterprise Linux) and CRI-O was an obvious choice.

Since sailing is such an apt analogy for Kubernetes and containers, let’s use Brunel’s sailing blocks as an example to demonstrate the business problem that CoreOS and CRI-O solve. In 1803, Marc Brunel needed to produce 100,000 sailing blocks to meet the needs of the expanding British naval fleet. Sailing blocks are a type of pulley used in a ship's rigging to raise and control the sails. Up until the very early 1800s, they were all built by hand, but Brunel changed that by investing in automation, building the pulleys in a standardized way with machines. This level of automation meant that the pulleys were nearly identical, could be replaced when broken, and could be manufactured at scale.

Now imagine Brunel needed to do this over 20 major revisions of ship chassis designs (Kubernetes versions) across five planets with completely different water and wind chemistry (cloud providers). Oh, and he wants all of the ships (OpenShift clusters), no matter which planet they sail on, to behave the same for captains (cluster operators). Extending the analogy, ship captains don’t care which pulley (CRI-O) is used; they just want it to be strong and reliable.

OpenShift 4, as a cloud platform, faces a similar business challenge. Nodes need to be manufactured at cluster build time, when nodes break, and when the cluster scales up. When a new node is provisioned, the critical components within the host, including CRI-O, must also be configured. Like any manufacturing process, this requires raw materials as inputs. In the case of ships, these are metal and wood. In the case of manufacturing a container host in an OpenShift 4 cluster, the inputs are configuration files and API-provisioned servers. OpenShift then automates the node across its lifecycle and provides product support to end users, giving them a return on their investment in the platform.

OpenShift 4 is designed to handle seamless in-place upgrades within the 4.X lifecycle across all major cloud providers, virtualization platforms, and even bare metal. To do this, nodes have to be manufactured with interchangeable parts. When a cluster requires a new version of Kubernetes, it also gets a matching version of CRI-O on CoreOS. Because the CRI-O version maps directly to the Kubernetes version, the permutations for testing, troubleshooting, and support are vastly simplified. This lowers costs for end users as well as for Red Hat.
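To make the lockstep idea concrete, here is a minimal Python sketch. It assumes CRI-O's versioning convention, in which a CRI-O release shares its major.minor version with the Kubernetes release it supports (for example, CRI-O 1.12.x with Kubernetes 1.12.x). The helper names and version lists are illustrative only, not part of OpenShift.

```python
# Minimal sketch, assuming CRI-O's major.minor version tracks the Kubernetes
# major.minor it supports. Function names and version lists are hypothetical.

def major_minor(version):
    """Return (major, minor) from strings like 'v1.12.4+509916ce1' or '1.13'."""
    core = version.lstrip("v").split("+")[0]   # drop the 'v' prefix and build metadata
    major, minor = core.split(".")[:2]
    return int(major), int(minor)

def runtime_matches(kubelet_version, crio_version):
    """True when the kubelet and CRI-O share the same major.minor version."""
    return major_minor(kubelet_version) == major_minor(crio_version)

kube_versions = ["1.12", "1.13", "1.14"]
crio_versions = ["1.12", "1.13", "1.14"]

# If any runtime could be paired with any Kubernetes release, every pair needs testing.
independent = len(kube_versions) * len(crio_versions)
# In lockstep, only the matching pairs ever ship together.
lockstep = sum(runtime_matches(k, c) for k in kube_versions for c in crio_versions)

print(independent, lockstep)                            # 9 3
print(runtime_matches("v1.12.4+509916ce1", "1.12.0"))   # True
```

With three releases the support matrix shrinks from nine combinations to three, and the gap widens as releases accumulate over the 4.X lifecycle.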

This is a completely new way of thinking about Kubernetes clusters, and it lays the groundwork for a roadmap of exciting new features. CRI-O (Container Runtime Interface - Open Container Initiative, aka CRI-OCI) was simply the best choice for manufacturing nodes at the scale that OpenShift needs. CRI-O will replace the previously provided Docker engine, giving OpenShift users a lean, stable, simple, and boring (yes, boring) container engine designed specifically for Kubernetes.

The World of Open Containers

The world has been moving toward open containers for quite some time. Whether in the Kubernetes world, or in the lower layers, container standards have bred an ecosystem of innovation at every level.

It all began with the formation of the Open Container Initiative (OCI) in June 2015. This early work standardized the container image and runtime specifications, which guaranteed that tools could rely on a single format for container images and a single way to run them. Later, a distribution specification was added, allowing users everywhere to freely share container images.

Next, the Kubernetes community standardized on a pluggable interface called the Container Runtime Interface (CRI). This allowed Kubernetes users to plug in container engines other than Docker.

Engineers from Red Hat and Google recognized the market need for a container engine that accepted requests from the Kubelet over the CRI protocol and started containers compliant with the OCI specifications mentioned above. And so, OCID was born. But wait, wasn't this article about CRI-O? Nothing too crazy: OCID simply got a name change before its 1.0 release. And so, CRI-O was born.

Innovation with CRI-O and CoreOS

With OpenShift 4, the default container engine is moving from Docker to CRI-O, providing a lean, stable, simple, and boring container runtime that moves in lockstep with Kubernetes. This vastly simplifies support and configuration of the cluster. All configuration and management of the container engine and host becomes automated as part of OpenShift 4.

Wait, what?

That’s right: with OpenShift 4, there’s no need to log in to individual container hosts to install the container engine, configure storage, configure which registry servers to search, or configure networking. OpenShift 4 has been redesigned to use the Operator Framework not just for end user applications, but also for core platform operations like installation, configuration, and upgrades.

Kubernetes has always enabled users to manage applications by defining the desired state and employing Controllers to ensure that the actual state matches the defined state as closely as possible. This defined state, actual state methodology is powerful for both developers and operations. Developers can define the state that they want, hand it off to an operator as a YAML or JSON file, and the operator can then instantiate the application in production with the exact same definition.
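The defined state, actual state loop can be illustrated with a toy Python sketch. It is a hypothetical simplification: real controllers watch the Kubernetes API and act on live objects, whereas here both states are plain dicts and "applying" a change just updates the dict. The key property it shows is that running the loop again after convergence does nothing.

```python
# Hypothetical toy model of a Kubernetes-style reconcile loop.
# "desired" is what the user declared; "actual" is what currently exists.

def diff(desired, actual):
    """Return the settings whose actual value differs from the desired value."""
    return {key: value for key, value in desired.items() if actual.get(key) != value}

def reconcile(desired, actual):
    """Converge `actual` toward `desired` in place and return the actions taken."""
    actions = []
    for key, value in diff(desired, actual).items():
        actual[key] = value                 # stand-in for "apply the change to the cluster"
        actions.append(f"set {key}={value}")
    return actions

desired = {"replicas": 3, "image": "registry.example.com/app:1.2"}  # hypothetical spec
actual = {"replicas": 1, "image": "registry.example.com/app:1.2"}

print(reconcile(desired, actual))  # ['set replicas=3']
print(reconcile(desired, actual))  # [] (already converged, nothing to do)
```

Because the loop only acts on differences, the same definition can be handed from development to production and applied repeatedly without side effects.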

By using Operators in the platform, OpenShift 4 brings the defined state, actual state paradigm to managing RHEL CoreOS and CRI-O. Configuring and versioning the operating system and container engine is automated by what’s called the Machine Config Operator (MCO). The MCO vastly simplifies the cluster administrator’s job by handling the last mile of installation as well as day two operations in a completely automated fashion. This makes OpenShift 4 a true cloud platform. More on this later.

Running Containers

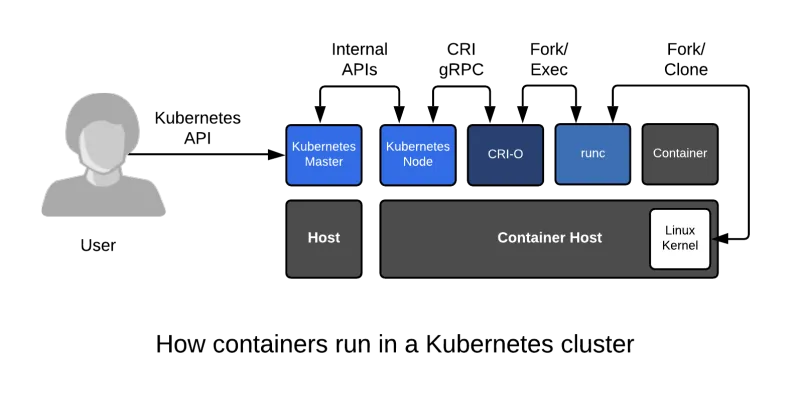

Users have had the option to use CRI-O in OpenShift since version 3.7 as Tech Preview and since 3.9 as Generally Available (supported). Red Hat has also been running CRI-O for production workloads at scale in OpenShift Online since version 3.10. This has given the CRI-O team a lot of experience with running containers at scale in large Kubernetes clusters. To get a basic idea of how Kubernetes uses CRI-O, take a look at the architectural drawing above.

CRI-O simplifies the manufacturing of new container hosts by keeping the entire top layer in lockstep, both as new nodes are provisioned and when new versions of OpenShift are released. Revisioning the entire platform together allows for transactional updates and rollbacks, and it also prevents dependency deadlocks between the container host kernel, container engine, Kubelet, and Kubernetes master. There is always a clear path from point A to point B when all of the platform's components are version controlled together. This leads to easier updates, better security, better performance accountability, and lower costs for upgrades and new releases.

Demonstrating the Power of Replaceable Parts

As mentioned before, leveraging the Machine Config Operator to manage the container host and container engine in OpenShift 4 allows for a new level of automation that has not been seen before with Kubernetes. To demonstrate, let’s show how you would deploy a change to the crio.conf file. Don’t get lost in the terminology, just focus on the outcome.

First, let’s create what’s called a ContainerRuntimeConfig. Think of this as a Kubernetes resource that represents a configuration for CRI-O. In reality, it’s a specialized version of what’s called a MachineConfig, which represents any configuration deployed to a RHEL CoreOS machine within an OpenShift cluster.

This custom resource, ContainerRuntimeConfig, was implemented to make it easy for cluster administrators to tune CRI-O. It can even be restricted to certain nodes by selecting what’s called a MachineConfigPool. Think of a MachineConfigPool as a group of machines that all serve the same purpose.

Create the following file. Notice that its last two lines are the settings we are going to change in /etc/crio/crio.conf; they look strikingly similar to lines in crio.conf because they map directly onto them:

vi ContainerRuntimeConfig.yaml

File contents:

apiVersion: machineconfiguration.openshift.io/v1
kind: ContainerRuntimeConfig
metadata:
  name: set-log-and-pid
spec:
  machineConfigPoolSelector:
    matchLabels:
      debug-crio: config-log-and-pid
  containerRuntimeConfig:
    pidsLimit: 2048
    logLevel: debug
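The correspondence between the two `containerRuntimeConfig` fields and crio.conf can be illustrated with a hypothetical Python sketch. The field-to-key table below covers only `pidsLimit` and `logLevel` from the resource above and is an assumption made for illustration; it is not the Machine Config Operator's actual rendering code.

```python
# Hypothetical sketch: map ContainerRuntimeConfig fields to crio.conf (TOML) keys.
# Only the two fields used in this example are covered.

FIELD_TO_CRIO_KEY = {
    "pidsLimit": "pids_limit",
    "logLevel": "log_level",
}

def to_crio_lines(container_runtime_config):
    """Render the supported fields as TOML-style `key = value` lines."""
    lines = []
    for field, value in container_runtime_config.items():
        key = FIELD_TO_CRIO_KEY.get(field)
        if key is None:
            continue  # field not covered by this sketch
        # TOML strings are quoted; integers are written bare.
        rendered = f'"{value}"' if isinstance(value, str) else str(value)
        lines.append(f"{key} = {rendered}")
    return lines

spec = {"pidsLimit": 2048, "logLevel": "debug"}
for line in to_crio_lines(spec):
    print(line)
# pids_limit = 2048
# log_level = "debug"
```

These are the same two lines that show up later in the rendered and deployed crio.conf.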

Now, submit it to the Kubernetes cluster and verify that it has been created. Notice, it looks just like any other Kubernetes resource:

oc create -f ContainerRuntimeConfig.yaml
oc get ContainerRuntimeConfig

Output:

NAME              AGE
set-log-and-pid   22h

After creating the ContainerRuntimeConfig, we have to modify one of the MachineConfigPools to tell Kubernetes which group of machines in the cluster should receive this configuration. We do this by adding the debug-crio: config-log-and-pid label, which matches the machineConfigPoolSelector in the ContainerRuntimeConfig. In this case, we will modify the MachineConfigPool for the master nodes:

oc edit MachineConfigPool/master

Output (trimmed for readability):

...
metadata:
  creationTimestamp: 2019-04-10T23:42:28Z
  generation: 1
  labels:
    debug-crio: config-log-and-pid
    operator.machineconfiguration.openshift.io/required-for-upgrade: ""
...
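The reason the new label matters is Kubernetes `matchLabels` semantics: a selector matches an object only if every key/value pair in the selector is present in the object's labels. A minimal Python sketch of that rule follows. The master labels are taken from the trimmed output above; the worker pool's empty label set is hypothetical (real pools carry other labels, just not this one).

```python
# Minimal sketch of Kubernetes matchLabels semantics.

def matches(match_labels, labels):
    """True if every key/value pair in match_labels is present in labels."""
    return all(key in labels and labels[key] == value
               for key, value in match_labels.items())

# machineConfigPoolSelector.matchLabels from the ContainerRuntimeConfig
selector = {"debug-crio": "config-log-and-pid"}

pools = {
    "master": {
        "debug-crio": "config-log-and-pid",
        "operator.machineconfiguration.openshift.io/required-for-upgrade": "",
    },
    "worker": {},  # hypothetical: the debug-crio label was not added here
}

selected = [name for name, labels in pools.items() if matches(selector, labels)]
print(selected)  # ['master']
```

Extra labels on the pool do not prevent a match; only missing or different selector keys do.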

At this point, the MCO goes to work manufacturing a new crio.conf file for the cluster. In a beautiful stroke of elegance, the fully rendered config file can be inspected through the Kubernetes API. Remember, a ContainerRuntimeConfig is just a specialized version of a MachineConfig, so we can see the output by looking at the rendered MachineConfigs:

oc get MachineConfigs | grep rendered

Output:

rendered-master-c923f24f01a0e38c77a05acfd631910b   4.0.22-201904011459-dirty   2.2.0   16h
rendered-master-f722b027a98ac5b8e0b41d71e992f626   4.0.22-201904011459-dirty   2.2.0   4m
rendered-worker-9777325797fe7e74c3f2dd11d359bc62   4.0.22-201904011459-dirty   2.2.0   16h

Notice that there is now a rendered config for the masters that is newer than the originally manufactured configurations. To inspect it, run the following command. Fair warning, this might be the greatest one-liner ever invented in the history of Kubernetes:

python3 -c "import sys, urllib.parse; print(urllib.parse.unquote(sys.argv[1]))" $(oc get MachineConfig/rendered-master-f722b027a98ac5b8e0b41d71e992f626 -o yaml | grep -B4 crio.conf | grep source | tail -n 1 | cut -d, -f2) | grep pid

Output:

pids_limit = 2048
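The one-liner works by pulling the URL-encoded data URL for crio.conf out of the MachineConfig and decoding it. The same idea can be written as a small Python sketch that parses JSON instead of grepping YAML. It assumes the rendered MachineConfig stores files Ignition-style under `spec.config.storage.files`, each with a `path` and a `contents.source` data URL, and that `oc` is on the PATH and logged in. The base64 branch is an assumption for configs that encode file contents that way; the helper names are hypothetical.

```python
import base64
import json
import subprocess
import urllib.parse

def decode_data_url(source):
    """Decode a data URL such as 'data:,pids_limit%20%3D%202048'."""
    header, _, payload = source.partition(",")
    if header.endswith(";base64"):
        return base64.b64decode(payload).decode("utf-8")
    return urllib.parse.unquote(payload)  # plain data URLs are percent-encoded

def file_from_machineconfig(mc, path):
    """Return the decoded contents of `path` from a MachineConfig's Ignition files."""
    for entry in mc["spec"]["config"]["storage"]["files"]:
        if entry["path"] == path:
            return decode_data_url(entry["contents"]["source"])
    raise KeyError(path)

def rendered_crio_conf(name):
    """Fetch a rendered MachineConfig with `oc` and return its crio.conf."""
    out = subprocess.run(["oc", "get", f"MachineConfig/{name}", "-o", "json"],
                         check=True, capture_output=True, text=True).stdout
    return file_from_machineconfig(json.loads(out), "/etc/crio/crio.conf")

# Offline demo with a hypothetical, trimmed MachineConfig.
sample_mc = {"spec": {"config": {"storage": {"files": [
    {"path": "/etc/crio/crio.conf",
     "contents": {"source": "data:,pids_limit%20%3D%202048%0A"}},
]}}}}
print(file_from_machineconfig(sample_mc, "/etc/crio/crio.conf"), end="")  # pids_limit = 2048
```

On a live cluster, `rendered_crio_conf("rendered-master-f722b027a98ac5b8e0b41d71e992f626")` would return the whole rendered file, which can then be filtered for `pid` just like the one-liner.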

Now, verify that it has been deployed out to the master nodes. First, get a list of the nodes in the cluster:

oc get node | grep master

Output:

ip-10-0-135-153.us-east-2.compute.internal   Ready   master   23h   v1.12.4+509916ce1
ip-10-0-154-0.us-east-2.compute.internal     Ready   master   23h   v1.12.4+509916ce1
ip-10-0-166-79.us-east-2.compute.internal    Ready   master   23h   v1.12.4+509916ce1

Inspect the file deployed. You will notice it’s been updated with the new pid and debug directives we specified in the ContainerRuntimeConfig resource. Pure elegance:

oc debug node/ip-10-0-135-153.us-east-2.compute.internal -- cat /host/etc/crio/crio.conf | grep -E 'debug|pid'

Output:

...
pids_limit = 2048
...
log_level = "debug"
...

All of these changes were made to the cluster without ever running SSH. Everything was done by communicating with the Kubernetes master. Only the master nodes were configured with these new parameters; the worker nodes were not changed. This demonstrates the value of Kubernetes' defined state, actual state methodology applied to container hosts and container engines with interchangeable parts.

The above demo highlights the ability to make changes to a small OpenShift Container Platform 4 cluster with three worker nodes, or a huge production instance with 3000. It’s the same amount of work either way - not much - one ContainerRuntimeConfig file, and one label change to a MachineConfigPool. And, you can do this with any version of Kubernetes underlying OpenShift Container Platform 4.X during its entire life cycle. That is powerful.

Technology companies often move so fast that we don’t do a good job of explaining the choices we make for underlying components. Container engines have historically been a component that users interact with directly. Because the popularity of containers genuinely started with the container engine, users often have a personal interest in them. That made it worth explaining why Red Hat chose CRI-O. Containers have evolved, the focus is now squarely on orchestration, and we have found that CRI-O delivers the best experience within OpenShift 4.

About the author

At Red Hat, Scott McCarty is Senior Principal Product Manager for RHEL Server, arguably the largest open source software business in the world. Focus areas include cloud, containers, workload expansion, and automation. Working closely with customers, partners, engineering teams, sales, marketing, other product teams, and even in the community, he combines personal experience with customer and partner feedback to enhance and tailor strategic capabilities in Red Hat Enterprise Linux.

McCarty is a social media start-up veteran, an e-commerce old timer, and a weathered government research technologist, with experience across a variety of companies and organizations, from seven-person startups to 20,000-employee technology companies. This has culminated in a unique perspective on open source software development, delivery, and maintenance.
