When using the public cloud there are always challenges which need to be overcome. Organizations lose some of the control over how security is handled and who can access the elements which, in most cases, are the core of the company's business. Additionally, some of those elements are controlled by local laws and regulations.

This is especially true in the financial services and insurance (FSI) industry, where regulations are gradually increasing in scope. For example, in the EU the emerging Digital Operational Resilience Act (DORA) now includes the protection and handling of data while it is being processed (data in use). Additional laws throughout the EU are expected to extend these requirements in the future.

The primary way to address this challenge is through confidential computing. But, as this is a new technology, there are areas which have not been addressed yet or require an increased focus on real-world business needs.

For confidential computing, one of the key use cases is secure cloud bursting. The goal here is to extend the trust organizations have in their on-premise environment to the public cloud while adhering to regulatory requirements.

In cloud bursting we extend on-premise environments to the cloud by launching workloads in the cloud based on different criteria. This could include things like reaching a resource limitation in the on-prem environment or needing dedicated hardware resources available only in the cloud (such as specific GPUs or NPUs). Secure cloud bursting extends this idea and focuses on running the cloud workloads in a secure manner.

We will start with a basic explanation of what confidential containers are and some of the necessary terminology in this context. We will also cover the different deployments available (public cloud and on-prem). Lastly, we will focus on secure cloud bursting.

What are OpenShift confidential containers?

Red Hat OpenShift sandboxed containers, built on Kata Containers, now provide the additional capability to run confidential containers (CoCo). Confidential containers are containers deployed within an isolated hardware enclave that help protect data and code from privileged users such as cloud or cluster administrators. The CNCF Confidential Containers project is the foundation for the OpenShift CoCo solution.

Confidential computing helps protect your data in use by leveraging dedicated hardware-based solutions. Using hardware, you can create isolated environments which are owned by you and help protect against unauthorized access or changes to your workload's data while it’s being executed (data in use).

Confidential containers enable cloud-native confidential computing using a number of hardware platforms and supporting technologies. CoCo aims to standardize confidential computing at the pod level and simplify its consumption in Kubernetes environments. By doing so, Kubernetes users can deploy CoCo workloads using their familiar workflows and tools without needing a deep understanding of the underlying confidential computing technologies.

TEEs, attestation and secret management

CoCo integrates Trusted Execution Environment (TEE) infrastructure with the cloud-native world. A TEE is at the heart of a confidential computing solution. TEEs are isolated environments with enhanced security (e.g. runtime memory encryption, integrity protection), provided by confidential computing-capable hardware. A special virtual machine (VM) called a confidential virtual machine (CVM), which executes inside the TEE, is the foundation for the OpenShift CoCo solution.

When you create a CoCo workload, a CVM is created and the workload is deployed inside the CVM. The CVM prevents anyone who isn’t the workload's rightful owner from accessing or even viewing what happens inside it.

Attestation is the process used to verify that a TEE—where the workload will run or where you want to send confidential information—is indeed trusted. The combination of TEEs and attestation capability enables the CoCo solution to provide a trusted environment to run workloads and enforce the protection of code and data from unauthorized access by privileged entities.

In the CoCo solution, the Trustee project provides the capability of attestation. It’s responsible for performing the attestation operations and delivering secrets (such as a decryption key) after successful attestation.

Deploying confidential containers through operators

The Red Hat confidential containers solution is based on 2 key operators:

- OpenShift confidential containers - a feature added to the OpenShift sandboxed containers operator that is responsible for deploying the building blocks connecting workloads (pods) to CVMs, which run inside the TEE provided by the hardware

- Confidential compute attestation operator - responsible for deploying and managing the Trustee service in an OpenShift cluster

The following diagram shows the Red Hat confidential containers solution deployed by these 2 operators:

Note the following:

- The Trustee solution is deployed in a trusted environment (more on this later) and it includes (among other things) an attestation service and a key management tool (for managing your secrets)

- Your confidential container is the combination of the pod and the CVM it runs in, which is required to perform attestation and obtain its secrets through Trustee

- The CoCo workload can run in a public cloud or on-prem (more on this later)

For additional information on the CoCo solution and its components we recommend reading Exploring the OpenShift confidential containers solution and Use cases and ecosystem for OpenShift confidential containers.

Deployment for OpenShift confidential containers

Confidential computing can be used to extend IT services using on-demand environments such as public clouds. Confidential computing can also be used for isolating services within the company's existing on-prem infrastructure.

In this section we will present 2 types of confidential container deployments: a cloud-based deployment and an on-prem-based deployment.

Confidential containers cloud-based deployment

In a cloud-based deployment, confidential container workloads run in the public cloud. As of OpenShift sandboxed containers release 1.7.0, confidential containers are supported on Microsoft Azure cloud services (future releases will cover additional clouds).

The public cloud is considered an untrusted environment, since the cloud provider's admins or anyone with access to the cloud's hardware could obtain a workload's data in use (among other actions they can perform). To protect this data, we run our public cloud workloads in confidential containers so all data in use is encrypted.

The creation of confidential containers requires the confidential compute attestation operator, which is responsible for attesting the workload and, when attestation succeeds, providing the secret keys the workload needs. This is done by using Trustee for attestation and key management. Keys can be used by the workload itself (for decrypting a large language model (LLM), as one example), for verifying that the workload image is signed correctly before running it, for decrypting a container image before running it, and for other use cases.

For all this magic to come together, Trustee is required to run in a trusted environment.

A trusted environment is a location the owner of the workload (and its data) considers safe and secure. A typical trusted environment can be a laptop for someone testing a workload in the public cloud or, in the case of a production deployment, a physical server owned and installed by an entity that is considered safe. We can also create trusted environments in public clouds using a CVM and a kickstart attestation process which we will describe in future articles.

The following diagram shows how a confidential container is deployed in the public cloud:

Note the following:

- The confidential compute attestation operator which deploys Trustee and provides attestation and key management capabilities is deployed in a trusted environment

- The OpenShift cluster is deployed on the public cloud in an untrusted environment

- Confidential containers are created in the untrusted public cloud using the Trustee services so the workload and data are protected

Confidential containers on-prem-based deployment

In an on-prem-based deployment confidential container workloads run on bare metal servers. Tech preview OpenShift support for confidential containers on-prem deployments is planned in 2025. In this deployment, the on-prem infrastructure is considered as an untrusted environment.

There are 2 main sectors that may consider on-prem an untrusted environment, or one lacking an additional level of security:

- Private cloud services - there are private cloud offerings where the owner of the infrastructure isn’t the owner of the workload, so private cloud admins and those with access to the hardware may obtain the workload’s data. Leveraging confidential containers the private cloud service can add an additional layer of security so all data in use is encrypted

- Public sector - Public sector organizations are extremely concerned with the security of their data and protecting its privacy. Using confidential containers in their on-prem environments provides additional means to protect the data in use

In the case of an on-prem deployment we again have the trusted and untrusted parts of the deployment:

Note the following:

- The confidential compute attestation operator which deploys Trustee and provides attestation and key management capabilities is deployed in a trusted environment. It should be emphasized that it can be deployed in a location different from the bare metal servers used for the OpenShift cluster

- The OpenShift cluster is deployed on the bare metal servers which we consider as an untrusted environment

- Confidential containers are created in the untrusted on-prem servers using the Trustee services and so their workload and data are protected

Use cases for on-prem and cloud focused deployments

Through discussions with Red Hat customers, we have observed 6 primary use cases. Each leans toward either an on-prem or a cloud-based deployment, although none of them is purely one or the other.

These use cases are relevant for confidential computing in general and not only for confidential containers. That is, these use cases are also applicable for confidential clusters and CVMs in OpenShift which we'll talk about in later articles.

Let's look at the specific use cases for each of these deployment options.

On-premise-focused use cases

Let's start with the on-prem-focused deployments. The purpose here is to provide an extra level of security to either partners or (internal/external) customers within an on-prem datacenter.

IP protection / IP integrity

Let's say a supplier is interested in providing a service to a customer. This service includes running workloads in the customer’s environment, and these workloads include proprietary business logic the supplier owns (its secret sauce).

By using confidential containers, the supplier can run its workloads in the customer’s on-prem environment and still protect its business logic from the customer even though the customer has full control of the on-prem environment.

Total tenant isolation / Service provider

From talking to several public organizations, we've found that consolidating OpenShift services on a central infrastructure helps solve several problems. This includes reducing the number of administrators and providing dynamic load balancing between the tenants with higher utilization. This does, however, reduce the isolation between the different tenants, which many organizations are not comfortable with. Such organizations require a strict separation between the OpenShift tenants to better prevent data leaks.

With confidential computing we are able to deploy all OpenShift services shared between tenants as confidential objects, helping prevent unauthorized tenants or users from obtaining the confidential data in use. This makes it possible for additional organizations to move to these consolidated services.

Aside from public organizations, specialized service providers (focusing on specific industries such as FSI) are also interested in a similar solution. We have seen use cases where a specialized service provider acts like a public cloud provider for their clients. In order to do this, they need to provide an environment to their customers while maintaining the isolation of each customer and thus adhering to regulations and laws.

Cloud-based use cases

The goal of the cloud-based use cases is to extend the on-prem datacenter and its security into the cloud thereby providing access to public cloud resources. The advantages of the public cloud, such as flexibility and consumption-based pricing, can be combined with the protections provided by an on-prem datacenter.

Digital sovereignty

In digital sovereignty the goal is to be able to provide a service that is totally independent of the surrounding infrastructure, services and operational model. It provides the means to move a workload from one cloud environment to another or even to an on-prem environment with ease and minimal downtime.

Digital sovereignty requires the decoupling of the service/workload from the cloud provider’s infrastructure and the use of APIs to enable seamless migration between clouds.

There are several areas where this decoupling is required, including when addressing security constraints. With confidential computing it is possible to set security constraints on data in use so it is decoupled from the cloud provider’s infrastructure. This enables the migration of confidential workloads between clouds while protecting the data in use.

Partner interaction / Data clean room

This use case involves 2 or more partners who interact with each other by sharing information. Such interactions are built on shared data or business models to which each partner contributes a part.

The issue arises when this data is at the core of each partner’s business, so providing this data in an uncontrolled way might work against their business goals. There is a need to protect that data from the other partner while still being able to interact with the other partner's content. One such example is federated learning where a workload needs to learn from both partners' data while preventing each of those partners from accessing the other's data.

Confidential computing helps address this problem by running the federated learning workload in an isolated confidential container which assumes its environment is untrusted. It is able to obtain the data from both partners without sharing the data between them. Once the learning process has completed, the model is saved and the workload is deleted thus preventing any data in use leaks.

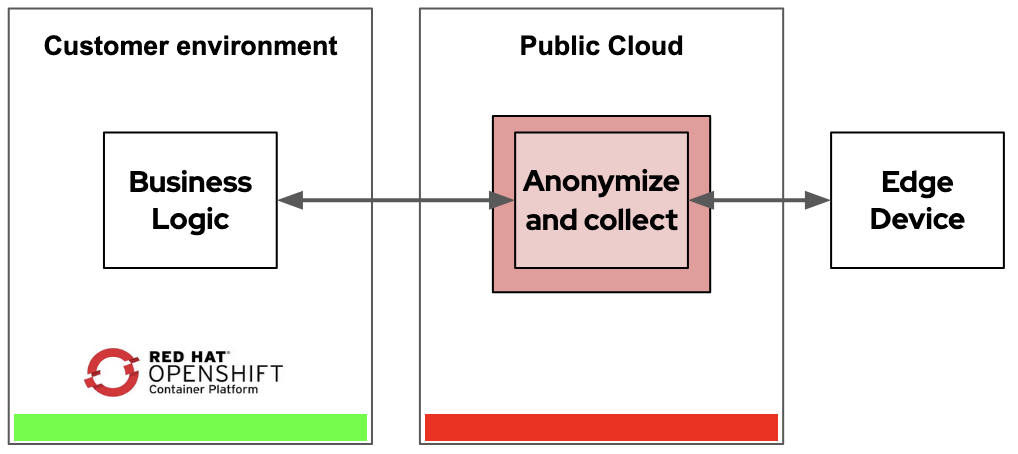

Edge

Edge use cases are particularly prevalent in the financial service area. Customers often have edge devices such as smart watches or phones. These devices collect data from the user by monitoring health related information, shopping information or geographical information. This is highly private data which needs to be protected.

Using confidential containers, this data can be sent from the device through secured channels to a public cloud-based workload inside a confidential container which aggregates and anonymizes the data. It can then send that anonymized data to a central component either in the cloud or in an on-prem datacenter to calculate a tariff or a benefit.

This way the company can take advantage of the public cloud, for example, to host compute power of unknown size for a new, seasonal campaign. The company can then forward the result to an on-prem datacenter to keep its business logic and data in its own environment.

Secure cloud burst

This use case focuses on using the cloud for its flexibility and consumption-based pricing.

There are many companies which want to use the cloud to either fully or partly host the applications which make up their business model. Most of the time these applications need private data, however, and in many of these cases regulators require that data be protected. When moving to the cloud from an on-prem environment, you lose a great deal of control over the data and the hardware which hosts your applications. With the move to a cloud-based infrastructure you need to strengthen your systems to provide the level of privacy needed.

In industries such as FSI or the public sector, regulators specifically focus on the security aspects of deploying components in the cloud. This is also being legislated in many parts of the world, such as in the EU with DORA, in which you'll find areas where strengthened security is needed, including the need to secure data in use.

Using confidential computing, we can now enforce security of data in use when cloud bursting to a public cloud. Let's take a closer look at this use case in the context of confidential containers.

Cloud bursting with confidential containers

Secure cloud bursting serves a number of goals and requirements, including:

- Extending the security constraints into the public cloud

- Providing secure access to consumption based resources (such as CPUs, NPUs and GPUs)

- Providing the flexibility to match available resources to unforeseen needs (for example event-based requirements)

Here we'll focus on the details of implementing a secure cloud bursting use case.

Architecture and usage

The confidential containers cloud bursting solution builds on components and assumptions we previously described in the cloud-based deployment and the on-prem deployment.

Conceptually, we are combining both approaches where we are required to deploy workloads on untrusted environments, be it bare metal servers or public cloud. Both deployments will now share the Trustee solution which is deployed in a trusted environment to create confidential containers.

There is an important difference in this case, however—in cloud bursting your OpenShift cluster (and the operator used for confidential containers) is deployed on the bare metal servers, but there are no OpenShift nodes deployed on the public cloud, only workloads (using cloud bursting tools).

The following diagram shows a typical cloud bursting deployment:

Note the following:

- Cloud bursting as described in this blog is the operation of an OpenShift cluster running on-prem (right side) creating CoCo workloads on the public cloud (left side). Trustee is shown in between (running in a trusted environment) to emphasize that it's used both for the CoCo workloads running on-prem and for those running in the public cloud.

- The OpenShift cluster and the operator which enables confidential containers are deployed in the on-prem part (right)

- OpenShift confidential container workloads are created on-prem in the same way as previously described

- The Trustee solution (attestation and key management) is used for creating confidential containers both on the on-prem and public cloud deployment

- Trustee runs in a trusted environment (middle)

- There are no OpenShift nodes deployed in the public cloud (left)

- We are able to create OpenShift confidential container workloads in the public cloud using the peer-pods approach. For those interested in how this is done, we recommend reading Learn about peer-pods for Red Hat OpenShift sandboxed containers

- The final outcome is confidential containers running both on-prem and, through cloud bursting, on the public cloud
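To make the peer-pods point concrete, here is a minimal sketch of what the cloud-side workload definition could look like. It assumes the peer-pods runtime class is exposed under the name `kata-remote`, as OpenShift sandboxed containers does for peer pods. The image, labels and namespace are hypothetical placeholders. The Deployment name `cloud-deployment` is chosen to match the scale target used in the scale policy later in this article.

```yaml
# Sketch only: image, labels and namespace are hypothetical placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cloud-deployment            # same name as the scaleTargetRef in the scale policy
  namespace: default
spec:
  replicas: 0                       # nothing runs in the public cloud until a burst is triggered
  selector:
    matchLabels:
      app: cloud-workload
  template:
    metadata:
      labels:
        app: cloud-workload
    spec:
      # Assumed runtime class name for peer pods; the pod is scheduled by the
      # on-prem cluster, but its containers run in a VM created in the public cloud.
      runtimeClassName: kata-remote
      containers:
      - name: workload
        image: registry.example.com/demo/workload:latest   # hypothetical image
```

The only workload-level difference from a regular Deployment is the `runtimeClassName` field, which is what lets the on-prem cluster place the pod in the public cloud without any OpenShift nodes running there.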

Dynamic cloud bursting

We’ve covered the building blocks required for supporting cloud bursting with confidential containers.

The next step is to talk about dynamic cloud bursting where policies are added for deciding when and under what conditions workloads will be created on-prem or in the public cloud.

We use Kubernetes Event-driven Autoscaling (KEDA), which provides event-driven scaling capabilities, to scale the confidential container workloads by bursting to the public cloud. With KEDA you can scale pods based on CPU, memory, other metrics, storage solutions, etc.

Using such policies, you could, for example, decide that you would spin up workloads requiring GPU resources on a public cloud using confidential containers (and confidential GPUs).

Here's an example of scaling workloads to a public cloud based on the Pending status of an on-prem pod. If there are insufficient resources on-prem to start a new pod, that pod will remain in Pending status. In this situation we can scale pods to the cloud.

Below is a scale policy file. It scales pods based on Prometheus metrics, using OpenShift Container Platform monitoring as the metrics source.

apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: pod-status-scaledobject
spec:
  scaleTargetRef:
    name: cloud-deployment
  minReplicaCount: 0
  maxReplicaCount: 4
  advanced:
    restoreToOriginalReplicaCount: true
  triggers:
  - type: prometheus
    metadata:
      serverAddress: https://thanos-querier.openshift-monitoring.svc.cluster.local:9092
      namespace: default
      metricName: kube_pod_status_phase
      threshold: '1'
      query: sum(kube_pod_status_phase{phase="Pending", namespace="default",pod=~"on-prem-deployment.*"})
      authModes: bearer
    authenticationRef:
      name: keda-trigger-auth-prometheus

Demo: Confidential containers dynamic cloud bursting

In this demo we will simplify things so it’s easier to follow through the steps:

- We will assume the OpenShift cluster is deployed on-prem in a trusted environment

- The Trustee is deployed on the same on-prem trusted environment

- We will cloud burst to Azure cloud which is considered as an untrusted environment

- This means that when we create workloads on the bare metal server, we can avoid using confidential containers

- We will be using confidential containers for deploying workloads on Azure cloud using Intel TDX

- We will use a KEDA policy based on memory which uses the memory allocation of the pod to decide when to burst to Azure cloud with a confidential container
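The memory-based policy used in the demo is not reproduced in this article. As a rough sketch of what such a policy might look like (not the demo's actual file), the example below reuses the Prometheus trigger pattern from the scale policy above and swaps in a memory query. It also shows a `TriggerAuthentication` object of the kind that the `authenticationRef` points to. The query metric `container_memory_working_set_bytes`, the 1 GiB threshold, the `memory-burst-scaledobject` name and the `thanos-token` Secret are all assumptions made for illustration.

```yaml
# Sketch only: the Secret name, ScaledObject name, query and threshold are assumptions.
apiVersion: keda.sh/v1alpha1
kind: TriggerAuthentication
metadata:
  name: keda-trigger-auth-prometheus
  namespace: default
spec:
  secretTargetRef:
  - parameter: bearerToken          # token used to authenticate against the metrics endpoint
    name: thanos-token              # hypothetical Secret holding a service account token
    key: token
  - parameter: ca
    name: thanos-token
    key: ca.crt
---
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: memory-burst-scaledobject   # hypothetical name
  namespace: default
spec:
  scaleTargetRef:
    name: cloud-deployment          # the workload that runs as confidential containers on Azure
  minReplicaCount: 0
  maxReplicaCount: 4
  triggers:
  - type: prometheus
    metadata:
      serverAddress: https://thanos-querier.openshift-monitoring.svc.cluster.local:9092
      namespace: default
      # Assumed threshold: roughly one cloud replica per 1 GiB used by the on-prem pods.
      threshold: '1073741824'
      query: sum(container_memory_working_set_bytes{namespace="default", pod=~"on-prem-deployment.*", container!=""})
      authModes: bearer
    authenticationRef:
      name: keda-trigger-auth-prometheus
```

With a Prometheus trigger, KEDA scales the target in proportion to the query result divided by the threshold, so the threshold here acts as a per-replica memory budget rather than a simple on/off switch. A real policy would need values tuned to the workload.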

Demo link:

About the authors

Master of Business Administration at Christian-Albrechts university, started at insurance IT, then IBM as Technical Sales and IT Architect. Moved to Red Hat 7 years ago into a Chief Architect role. Now working as Chief Architect in the CTO Organization focusing on FSI, regulatory requirements and Confidential Computing.

Pei Zhang has been a quality engineer at Red Hat since 2015. She has made testing contributions to NFV Virt, Virtual Network, SR-IOV and KVM-RT features. She is working on the Red Hat OpenShift sandboxed containers project.

Emanuele Giuseppe Esposito is a Software Engineer at Red Hat, with a focus on Confidential Computing, QEMU and KVM. He joined Red Hat in 2021, right after getting a Master's degree in CS at ETH Zürich. Emanuele is passionate about the whole virtualization stack, ranging from OpenShift sandboxed containers to low-level features in QEMU and KVM.

Pradipta is working in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF confidential containers project.
