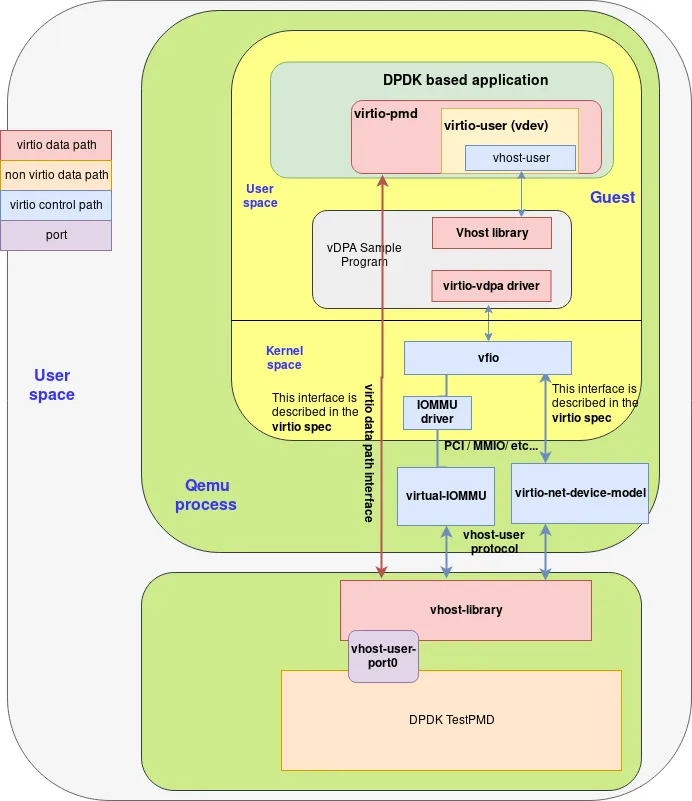

In this post, we will set up vDPA using its DPDK framework. Since vDPA-compatible HW cards are not yet commonly available on the market, we will work around this HW constraint by using a paravirtualized Virtio-net device in a guest as if it were a full Virtio HW offload NIC.

Setting up

For readers interested in playing with DPDK but not in configuring and installing the required setup by hand, we have Ansible playbooks in a GitHub repository that can be used to automate everything. Let’s start with the basic setup.

Requirements:

- A computer running a Linux distribution. This guide uses CentOS 7; however, the commands should not change significantly for other Linux distros, in particular for Red Hat Enterprise Linux 7.
- A user with sudo permissions
- ~25 GB of free space in your home directory
- At least 8 GB of RAM
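If you want to check these requirements before starting, a small shell sketch like the one below can help. It is not part of the original setup and the function names are hypothetical. Two assumptions to keep in mind: the RAM check compares against MemTotal from /proc/meminfo, which is usually a little lower than the installed RAM (so an 8 GB machine may need a threshold slightly under 8192 MiB), and since the disk images in this guide live under /var/lib/libvirt/images, that directory may be a better target for the disk check than your home directory.

```shell
# Hypothetical pre-flight helpers for the requirements listed above (a sketch).

# ram_at_least MIN_MIB [MEMINFO_FILE]: succeed if MemTotal >= MIN_MIB MiB.
ram_at_least() {
    local min_mib=$1
    local meminfo=${2:-/proc/meminfo}
    local total_kib
    # MemTotal is reported in kB (1024-byte units); convert to MiB below.
    total_kib=$(awk '$1 == "MemTotal:" { print $2 }' "$meminfo")
    [ -n "$total_kib" ] && [ $((total_kib / 1024)) -ge "$min_mib" ]
}

# disk_at_least MIN_GIB [DIR]: succeed if the filesystem holding DIR has
# at least MIN_GIB GiB available.
disk_at_least() {
    local min_gib=$1
    local dir=${2:-$HOME}
    local avail_kib
    # -P forces one line per filesystem, -k reports 1024-byte blocks;
    # field 4 of the data line is the available space.
    avail_kib=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
    [ -n "$avail_kib" ] && [ $((avail_kib / 1024 / 1024)) -ge "$min_gib" ]
}

# Example: ram_at_least 7500 && disk_at_least 25 /var/lib/libvirt/images && echo "OK"
```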

To start, we install the packages we are going to need:

sudo yum install qemu-kvm libvirt-daemon-qemu libvirt-daemon-kvm libvirt virt-install libguestfs-tools-c kernel-tools dpdk dpdk-tools

Creating a VM

First, download the latest CentOS Cloud Base image with the following command:

user@host $ sudo wget -O /var/lib/libvirt/images/CentOS-7-x86_64-GenericCloud.qcow2 http://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud.qcow2

(Note the URL above might change, update it to the latest qcow2 image from https://wiki.centos.org/Download.)

This downloads a preinstalled version of CentOS 7, ready to run in an OpenStack environment. Since we’re not running OpenStack, we have to clean the image. Before doing that, we create a copy-on-write overlay backed by the downloaded image, so that the original stays untouched and can be reused in the future:

user@host $ sudo qemu-img create -f qcow2 -b /var/lib/libvirt/images/CentOS-7-x86_64-GenericCloud.qcow2 /var/lib/libvirt/images/vdpa-test1.qcow2 20G

The libvirt commands below can be run as an unprivileged user (recommended) if we export the following variable:

user@host $ export LIBVIRT_DEFAULT_URI="qemu:///system"

Now, the cleaning command (change the password to your own):

user@host $ sudo virt-sysprep --root-password password:changeme --uninstall cloud-init --selinux-relabel -a /var/lib/libvirt/images/vdpa-test1.qcow2 --network --install "dpdk,dpdk-tools,pciutils"

This command mounts the filesystem and applies some basic configuration automatically so that the image is ready to boot afresh.

We also need a network to connect our VM to. Libvirt handles networks in a similar way to VMs: you can define a network using an XML file and start or stop it through the command line.

For this example, we will use a network called ‘default’ whose definition is shipped inside libvirt for convenience. The following commands define the ‘default’ network, start it and check that it’s running.

user@host $ virsh net-define /usr/share/libvirt/networks/default.xml
Network default defined from /usr/share/libvirt/networks/default.xml
user@host $ virsh net-start default
Network default started
user@host $ virsh net-list
 Name      State    Autostart   Persistent
--------------------------------------------
 default   active   no          yes
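To check the network state from a script rather than by eye, the net-list output can be filtered. The sketch below is illustrative only: net_active is a hypothetical helper, and it assumes the column layout shown above (Name, State, Autostart, Persistent).

```shell
# net_active NAME: read `virsh net-list` output on stdin and succeed if the
# network NAME is listed with State "active" (hypothetical helper).
net_active() {
    # Exit status 0 only if a line has NAME in column 1 and "active" in column 2.
    awk -v name="$1" '$1 == name && $2 == "active" { found = 1 } END { exit !found }'
}

# On the host: virsh net-list | net_active default && echo "default is up"
```

Note that the output above shows Autostart set to no, so the network will not come back on its own after the host reboot later in this guide; `virsh net-autostart default` enables that, or you can simply run `virsh net-start default` again.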

Finally, we can use virt-install to create the VM. This command-line utility creates the needed definitions for a set of well-known operating systems. This will give us the base definitions that we can then customize:

user@host $ virt-install --import --name vdpa-test1 --ram=5120 --vcpus=3 \
    --nographics --accelerate --machine q35 \
    --network network:default,model=virtio --mac 02:ca:fe:fa:ce:aa \
    --debug --wait 0 --console pty \
    --disk /var/lib/libvirt/images/vdpa-test1.qcow2,bus=virtio --os-variant centos7.0

These options specify the number of vCPUs and the amount of RAM of our VM, as well as the disk path and the network we want the VM to be connected to.

As you may have noticed, there is one difference compared to the Vhost-user hands-on: we specify the machine type as “q35” instead of the default “pc” type. This is required to enable support for the virtual IOMMU.

Apart from defining the VM according to the options that we specified, the virt-install command should also have started the VM for us, so we should be able to list it:

user@host $ virsh list
 Id    Name         State
------------------------------
 1     vdpa-test1   running

Voilà! Our VM is running. We will soon need to make some changes to its definition, so let's shut it down now:

user@host $ virsh shutdown vdpa-test1

Preparing the host

DPDK helps with optimally allocating and managing memory buffers. On Linux, this requires hugepage support, which must be enabled in the running kernel. Using pages larger than the usual 4 KiB improves performance: fewer pages are needed to cover the same amount of memory, so the TLB (Translation Lookaside Buffer), which caches virtual-to-physical address translations, can cover more memory and misses less often. To allocate hugepages during boot, we add the following to the kernel parameters in the bootloader configuration:

user@host $ sudo grubby --args="default_hugepagesz=1G hugepagesz=1G hugepages=6" --update-kernel /boot/<your kernel image file>

What does this do?

- default_hugepagesz=1G: make 1G the default size of the created hugepages
- hugepagesz=1G: set the size of the hugepages created during startup to 1G as well
- hugepages=6: create 6 hugepages (of size 1G) from the start. These should show up in /proc/meminfo after booting.
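The arithmetic behind these settings can be made concrete with a few lines of shell. This is a sketch: pages_needed is a hypothetical helper, and reading hugepages=6 as 5 GiB for the guest (virt-install --ram=5120, later backed by 1G hugepages) plus 1 GiB for the host testpmd (--socket-mem=1024) is our assumption; the post does not spell out where 6 comes from.

```shell
# pages_needed SIZE_MIB PAGE_KIB: number of PAGE_KIB-sized pages needed to
# cover SIZE_MIB of memory, rounded up (hypothetical helper).
pages_needed() {
    local size_kib=$(( $1 * 1024 ))
    echo $(( (size_kib + $2 - 1) / $2 ))
}

guest_mib=5120      # --ram=5120 given to virt-install
testpmd_mib=1024    # --socket-mem=1024 for the host testpmd

# With 4 KiB pages the guest RAM needs over a million page mappings;
# with 1 GiB pages it needs only a handful.
echo "4K pages to map guest RAM: $(pages_needed $guest_mib 4)"
echo "1G pages to map guest RAM: $(pages_needed $guest_mib 1048576)"
echo "1G pages for guest + testpmd: $(( $(pages_needed $guest_mib 1048576) + $(pages_needed $testpmd_mib 1048576) ))"
```

This prints 1310720, 5 and 6 respectively, which shows both why hugepages reduce TLB pressure and how a budget of 6 x 1G pages fits this setup.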

Now the host can be rebooted. After it comes up we can check that our changes to the kernel parameters were effective by running this:

user@host $ cat /proc/cmdline
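Rather than eyeballing the output, the check can be scripted. The sketch below uses two hypothetical helpers: missing_params reports any expected parameter absent from a command line (whole-word match), and hugepages_total reads HugePages_Total from /proc/meminfo, where the allocated pages should appear after booting.

```shell
# missing_params CMDLINE PARAM...: print each PARAM not present as a whole
# word in CMDLINE; return non-zero if any is missing (hypothetical helper).
missing_params() {
    local cmdline=" $1 "
    local rc=0 p
    shift
    for p in "$@"; do
        case "$cmdline" in
            *" $p "*) ;;                          # parameter present
            *) echo "missing: $p"; rc=1 ;;
        esac
    done
    return $rc
}

# hugepages_total [MEMINFO_FILE]: print HugePages_Total (0 if absent).
hugepages_total() {
    awk '$1 == "HugePages_Total:" { n = $2 } END { print n + 0 }' "${1:-/proc/meminfo}"
}

# On the host, after the reboot:
#   missing_params "$(cat /proc/cmdline)" default_hugepagesz=1G hugepagesz=1G hugepages=6
#   hugepages_total    # expected to print 6
```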

Prepare the guest

The virt-install command created and started a VM using libvirt. To connect our DPDK-based vswitch (testpmd) to QEMU, we need to add the definition of the vhost-user interface (backed by UNIX sockets) and set up the virtual IOMMU in the device section of the XML:

user@host $ virsh edit vdpa-test1

Let’s start by adding all the devices needed for the Virtio-net device to work with IOMMU support.

First, we need to create a PCIe root port dedicated to the Virtio-net device we will use as the vDPA device, so that it has its own IOMMU group. Otherwise, the group would be shared with other devices on the same bus, preventing the device from being used from user space:

<controller type='pci' index='8' model='pcie-root-port'>
  <model name='pcie-root-port'/>
  <target chassis='8' port='0xe'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
</controller>

Then we can add the Virtio-net device using the Vhost-user backend, and plug it into the PCIe root port we just created (bus 8). We also need to enable IOMMU and ATS (Address Translation Service) support:

<interface type='vhostuser'>
  <mac address='52:54:00:58:d7:01'/>
  <source type='unix' path='/tmp/vhost-user1' mode='client'/>
  <model type='virtio'/>
  <driver name='vhost' rx_queue_size='256' iommu='on' ats='on'/>
  <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
</interface>

Finally, we add the IOMMU device, enabling both interrupt remapping and IOTLB support:

<iommu model='intel'>
  <driver intremap='on' iotlb='on'/>
</iommu>

A few other XML tweaks are necessary for Vhost-user to work with the IOMMU enabled.

First, as in the Vhost-user hands-on, we need to make the guest use hugepages. This is done by adding the following to the guest definition:

<memoryBacking>
  <hugepages>
    <page size='1048576' unit='KiB' nodeset='0'/>
  </hugepages>
  <locked/>
</memoryBacking>
<numatune>
  <memory mode='strict' nodeset='0'/>
</numatune>

For the guest memory to be accessible to the vhost-user backend, we need an additional setting in the guest configuration: memAccess='shared' on the NUMA cell. This setting is important; without it, we won’t see any packets being transmitted:

<cpu mode='host-passthrough' check='none'>
  <topology sockets='1' cores='3' threads='1'/>
  <numa>
    <cell id='0' cpus='0-2' memory='5242880' unit='KiB' memAccess='shared'/>
  </numa>
</cpu>
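The numbers in these XML snippets follow from the virt-install options: libvirt expresses them in KiB here (unit='KiB'), so the 5120 MiB of guest RAM becomes 5242880 KiB and a 1 GiB page is 1048576 KiB. The short sketch below only redoes that conversion; the variable names are illustrative.

```shell
# Relate the guest XML values to the virt-install options (illustrative sketch).
ram_mib=5120                       # virt-install --ram=5120
cell_kib=$(( ram_mib * 1024 ))     # value used in <cell memory=... unit='KiB'>
page_kib=$(( 1024 * 1024 ))        # 1 GiB page, value used in <page size=... unit='KiB'>

echo "cell memory: ${cell_kib} KiB"
echo "page size:   ${page_kib} KiB"
# Check that the guest RAM is a whole number of 1 GiB pages.
if [ $(( cell_kib % page_kib )) -eq 0 ]; then
    echo "guest RAM = $(( cell_kib / page_kib )) x 1 GiB pages"
else
    echo "guest RAM is not a multiple of the hugepage size" >&2
fi
```

Likewise, cpus='0-2' and cores='3' match --vcpus=3.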

Finally, the I/O APIC must be managed by QEMU for interrupt remapping to work:

<features>
  <acpi/>
  <apic/>
  <ioapic driver='qemu'/>
</features>
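Since several separate XML tweaks are involved, it can be worth checking the saved definition before starting the guest. The sketch below is a hypothetical helper doing plain text matching on the output of virsh dumpxml; it assumes libvirt's single-quoted attribute style and only looks for the settings discussed in this section.

```shell
# check_vdpa_xml: read a domain definition on stdin and print each expected
# setting that is missing; return non-zero if any is missing (hypothetical).
check_vdpa_xml() {
    local xml rc=0 pattern
    xml=$(cat)
    for pattern in \
        "<iommu model='intel'>" \
        "intremap='on'" \
        "iotlb='on'" \
        "iommu='on'" \
        "ats='on'" \
        "memAccess='shared'" \
        "<ioapic driver='qemu'/>" \
        "<locked/>"; do
        case "$xml" in
            *"$pattern"*) ;;                            # setting found
            *) echo "missing: $pattern"; rc=1 ;;
        esac
    done
    return $rc
}

# On the host: virsh dumpxml vdpa-test1 | check_vdpa_xml
```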

Now we need to start our guest. Because we configured it to connect to the vhost-user UNIX sockets, we need to make sure they are available when the guest is started. This is achieved by starting testpmd, which will open the sockets for us:

user@host $ sudo testpmd -l 0,2 --socket-mem=1024 -n 4 \
    --vdev 'net_vhost0,iface=/tmp/vhost-user1,iommu-support=1' -- \
    --portmask=1 -i --rxq=1 --txq=1 \
    --nb-cores=1 --forward-mode=io
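Because the guest expects the socket to exist when it starts, a small wait loop can avoid starting the VM too early. This is a sketch with a hypothetical helper name; it simply polls until the path exists and is a UNIX socket (test -S), giving up after a timeout.

```shell
# wait_for_socket PATH [TIMEOUT_S]: poll once per second until PATH exists and
# is a UNIX socket; fail after TIMEOUT_S seconds (default 10). Hypothetical.
wait_for_socket() {
    local path=$1
    local timeout=${2:-10}
    local elapsed=0
    while [ ! -S "$path" ]; do
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "timed out waiting for $path" >&2
            return 1
        fi
        sleep 1
        elapsed=$(( elapsed + 1 ))
    done
}

# From a second host terminal, before `virsh start vdpa-test1`:
#   wait_for_socket /tmp/vhost-user1 && echo "testpmd socket is ready"
```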

One last thing: because QEMU connects to the vhost-user UNIX sockets created by testpmd (which runs as root), we need to make QEMU run as root for this experiment. To do so, set user = "root" in /etc/libvirt/qemu.conf and restart libvirtd (sudo systemctl restart libvirtd) so that the change is taken into account. This is required for our special use case but not recommended in general. Readers should revert this setting after following this hands-on article by commenting out the user = "root" line again.

Now we can start the VM with virsh start:

user@host $ virsh start vdpa-test1

Log in as root. The first thing we do in the guest is add some parameters to the kernel command line to reserve hugepages at boot time and to enable kernel support for the IOMMU in pass-through mode:

root@guest $ grubby --args="default_hugepagesz=1G hugepagesz=1G hugepages=2 intel_iommu=on iommu=pt" --update-kernel /boot/<your kernel image file>

To apply the new kernel parameters, let’s reboot the guest.

Once the guest is back up, we can bind the Virtio device to the vfio-pci driver. To be able to do this, we first need to load the required kernel module:

root@guest $ modprobe vfio-pci

Let’s find out the PCI addresses of our virtio-net devices first.

root@guest $ dpdk-devbind --status net
…
Network devices using kernel driver
===================================
0000:01:00.0 'Virtio network device 1041' if=eth0 drv=virtio-pci unused=virtio_pci,vfio-pci *Active*
0000:08:00.0 'Virtio network device 1041' if=eth1 drv=virtio-pci unused=virtio_pci,vfio-pci

In the output of dpdk-devbind, look for the Virtio device that is not marked *Active*. We can use this one for our experiment:

root@guest $ dpdk-devbind.py -b vfio-pci 0000:08:00.0
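The selection rule (a Virtio network device not marked *Active*) is easy to script. The helper below is hypothetical and assumes the line format shown in the dpdk-devbind output above.

```shell
# inactive_virtio: read `dpdk-devbind --status net` output on stdin and print
# the PCI address of every Virtio network device not marked *Active*.
# Hypothetical helper; relies on the output format shown above.
inactive_virtio() {
    awk '/Virtio network device/ && !/\*Active\*/ { print $1 }'
}

# In the guest:
#   dpdk-devbind --status net | inactive_virtio
```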

Now the guest is ready to run our DPDK-based vDPA application. But before experimenting with vDPA, let’s first make sure testpmd can drive the Virtio device with the Virtio PMD while the IOMMU is enabled:

root@guest $ testpmd -l 0,1 --socket-mem 1024 -n 4 -- --portmask=1 -i --auto-start

Then we can start packet forwarding in the host testpmd instance, injecting 512 initial bursts of packets into the IO forwarding loop:

HOST testpmd> start tx_first 512

To check that everything is working properly, we can look at the port statistics in either testpmd instance by repeatedly running the show port stats all command:

HOST testpmd> show port stats all
  ######################## NIC statistics for port 0 ########################
  RX-packets: 60808544   RX-missed: 0          RX-bytes:  3891746816
  RX-errors: 0
  RX-nombuf:  0
  TX-packets: 60824928   TX-errors: 0          TX-bytes:  3892795392
  Throughput (since last show)
  Rx-pps:     12027830
  Tx-pps:     12027830
  ############################################################################
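The counters above can be turned into a couple of more familiar figures. The sketch below only uses the numbers printed by testpmd: dividing RX-bytes by RX-packets gives the average packet size, and multiplying the packet rate by the packet size in bits gives an approximate bit rate (as counted by testpmd, without any extra per-frame overhead).

```shell
# Worked numbers from the host testpmd statistics above (illustrative sketch).
rx_packets=60808544
rx_bytes=3891746816
rx_pps=12027830

frame=$(( rx_bytes / rx_packets ))     # average bytes per packet
echo "average packet size: ${frame} bytes"
# bits per second = packets/s * bytes/packet * 8, shown in Mbit/s
echo "approx. throughput: $(( rx_pps * frame * 8 / 1000000 )) Mbit/s"
```

With 64-byte packets and about 12 Mpps, this comes to roughly 6158 Mbit/s: at such small packet sizes, the packet rate rather than the bit rate is the meaningful metric.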

Creating the accelerated data path

Building the vDPA application

Having ensured both the host and the guest are properly configured, let the fun begin!

As mentioned in the previous post, the Virtio-vDPA DPDK driver, which enables the use of a paravirtualized Virtio-net device or a full Virtio offload HW NIC, is being reviewed on the upstream DPDK mailing list and so is not yet available in an official upstream release (it is planned for the v19.11 release).

This means we have to build DPDK from a tree that includes the series introducing this driver, instead of using the downstream DPDK package.

First let’s install all dependencies to build DPDK:

root@guest $ yum install git gcc numactl-devel kernel-devel

Then we build the DPDK libraries:

root@guest $ git clone https://gitlab.com/mcoquelin/dpdk-next-virtio.git dpdk
root@guest $ cd dpdk
root@guest $ git checkout remotes/origin/virtio_vdpa_v1
root@guest $ export RTE_SDK=$(pwd)
root@guest $ export RTE_TARGET=x86_64-native-linuxapp-gcc
root@guest $ make -j2 install T=$RTE_TARGET DESTDIR=install

Finally, we build the vDPA example application available in the DPDK tree. This application probes the vDPA driver and uses the vDPA framework to bind the vDPA device to a vhost-user socket:

root@guest $ cd examples/vdpa
root@guest $ make

Starting the vDPA application

If not done already, we need to bind the Virtio-net device to the vfio-pci driver:

root@guest $ dpdk-devbind.py -b vfio-pci 0000:08:00.0

Then we can start the vdpa application, requesting it to bind available vDPA devices to Vhost-user sockets prefixed with /tmp/vdpa.

For the Virtio-net device to be probed by its Virtio-vDPA driver rather than by the Virtio PMD, we have to pass the “vdpa=1” device argument to the whitelisted device on the EAL command line.

As we have only one vDPA device in this example, the application will create a single vhost-user socket in /tmp/vdpa0:

root@guest $ ./build/vdpa -l 0,2 --socket-mem 1024 -w 0000:08:00.0,vdpa=1 -- --iface /tmp/vdpa
EAL: Detected 3 lcore(s)
EAL: Detected 1 NUMA nodes
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'VA'
EAL: Probing VFIO support...
EAL: VFIO support initialized
EAL: WARNING: cpu flags constant_tsc=yes nonstop_tsc=no -> using unreliable clock cycles !
EAL: PCI device 0000:08:00.0 on NUMA socket -1
EAL:   Invalid NUMA socket, default to 0
EAL:   probe driver: 1af4:1041 net_virtio
EAL: PCI device 0000:08:00.0 on NUMA socket 0
EAL:   probe driver: 1af4:1041 net_virtio_vdpa
EAL:   using IOMMU type 1 (Type 1)
iface /tmp/vdpa
VHOST_CONFIG: vhost-user server: socket created, fd: 27
VHOST_CONFIG: bind to /tmp/vdpa0
enter 'q' to quit

Starting the user application

Now that the Vhost-user socket bound to the vDPA device is ready to be consumed, we can start the application that will use the accelerated Virtio datapath.

In a real-life scenario, it could be passed to a VM so that the VM’s Virtio datapath bypasses the host, but since we are already running inside a guest, this would mean setting up nested virtualization.

Another possible scenario would be to pass the Vhost-user socket to a container, so that it can benefit from a high-speed interface, but we will cover this in future posts.

For the sake of simplicity, we will just start a testpmd application, which will connect to the vhost-user socket using the Virtio-user PMD. The Virtio-user PMD reuses the datapath of the Virtio PMD, but instead of managing the control path through PCI, it implements the master side of the Vhost-user protocol.

As another DPDK application is already running, we have to give the hugepage files allocated by this new instance a different prefix, using the --file-prefix EAL option:

root@guest $ ./install/bin/testpmd -l 0,2 --socket-mem 1024 --file-prefix=virtio-user --no-pci --vdev=net_virtio_user0,path=/tmp/vdpa0
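To see the effect of --file-prefix, you can count the hugepage files each application created. This is a sketch under assumptions: it assumes DPDK names these files "<prefix>map_<n>" inside the hugetlbfs mount (for example rtemap_0 for the default "rte" prefix, which the vdpa application uses here) and that the mount point is /dev/hugepages; check both against your DPDK version and system. The helper name is hypothetical.

```shell
# count_hugefiles DIR PREFIX: count hugepage files named "<PREFIX>map_<n>"
# in DIR (hypothetical helper; the naming scheme is an assumption).
count_hugefiles() {
    local n=0 f
    for f in "$1/$2"map_*; do
        # If nothing matches, the glob stays literal and -e fails.
        [ -e "$f" ] && n=$(( n + 1 ))
    done
    echo "$n"
}

# In the guest, with both applications running:
#   count_hugefiles /dev/hugepages rte            # vdpa application
#   count_hugefiles /dev/hugepages virtio-user    # testpmd started above
```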

That’s it, our accelerated data path is set up! To confirm that everything works, let’s inject some packets again and check the statistics:

HOST testpmd> start tx_first 512
HOST testpmd> show port stats all
  ######################## NIC statistics for port 0 ########################
  RX-packets: 3565442688 RX-missed: 0          RX-bytes:  228188332032
  RX-errors: 0
  RX-nombuf:  0
  TX-packets: 3565459040 TX-errors: 0          TX-bytes:  228189378560
  Throughput (since last show)
  Rx-pps:     11997065
  Tx-pps:     11997065
  ############################################################################

Conclusion

In this post, we created a standard accelerated data path using DPDK’s vDPA framework. Comparing the initial testpmd run, which used the Virtio PMD directly, with the run once vDPA was set up shows that the vDPA layer provides additional flexibility without impacting performance (about 12.03 Mpps versus 12.00 Mpps on the host testpmd).
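The size of that difference can be computed directly from the two host-side Rx-pps readings shown earlier. The sketch below is illustrative: pct_drop is a hypothetical helper, and each input is a single "since last show" reading rather than an averaged benchmark, so the result is indicative only.

```shell
# pct_drop A B: percentage by which B is lower than A, two decimals
# (hypothetical helper; awk is used for the floating-point division).
pct_drop() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", (a - b) * 100 / a }'
}

direct_pps=12027830   # host Rx-pps with the guest testpmd on the Virtio PMD
vdpa_pps=11997065     # host Rx-pps with Virtio-user over the vDPA application
echo "vDPA run: $(pct_drop $direct_pps $vdpa_pps)% lower Rx-pps than the direct run"
```

This prints a difference of about 0.26%, consistent with the claim that the vDPA layer does not impact performance.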

The value of this framework is that you can now experiment with the vDPA control plane without requiring vendor NICs to support the vDPA data plane (ring layout support in HW) which is currently being developed by the industry.

We will now move from the realm of VMs to the realm of containers. The next hands-on post will build on this framework to experiment with vDPA-accelerated containers in on-premises and hybrid cloud deployments.
