By using hardware offloading and dedicated hardware, businesses can free up their CPU resources and handle network traffic more efficiently. In this post, we'll look at using NVIDIA BlueField-2 data processing units (DPUs) with Red Hat Enterprise Linux (RHEL) and Red Hat OpenShift to boost performance and reduce CPU load on commodity x64 servers.

Modern networks are expected to move large numbers of data packets quickly and securely. Processing that data on both the sending and the receiving ends is expensive for the servers that handle the network traffic. While a server performs network operations, its CPUs spend valuable cycles on networking tasks and, as a result, have fewer cycles available to run the actual applications or process the data. A practical solution to this problem is to use hardware offloading to transfer resource-intensive computational tasks from the server’s CPU to a separate piece of hardware.

Not all network protocols are created equal, as some are relatively more expensive to implement than others. Two protocols that come to mind are Internet Protocol Security (IPsec), used for authenticating and encrypting packets, and Generic Network Virtualization Encapsulation (Geneve), used for managing overlay networks. These protocols can be completely moved from the server’s CPU to a specialized piece of hardware called a DPU.

DPUs are a new class of devices designed to accelerate software-defined datacenter services. The NVIDIA BlueField-2 DPU combines multiple hardware accelerators with an on-board general-purpose processor, making it possible to offload critical networking tasks from the server’s CPU.

We will first look at how CPU resources can be freed by employing the BlueField-2 to offload IPsec and Geneve using Red Hat Enterprise Linux (RHEL). Next, we’ll move part of the setup to Red Hat OpenShift Container Platform and measure Geneve performance. Read on to see what we’ve discovered through these experiments.

Test results with RHEL

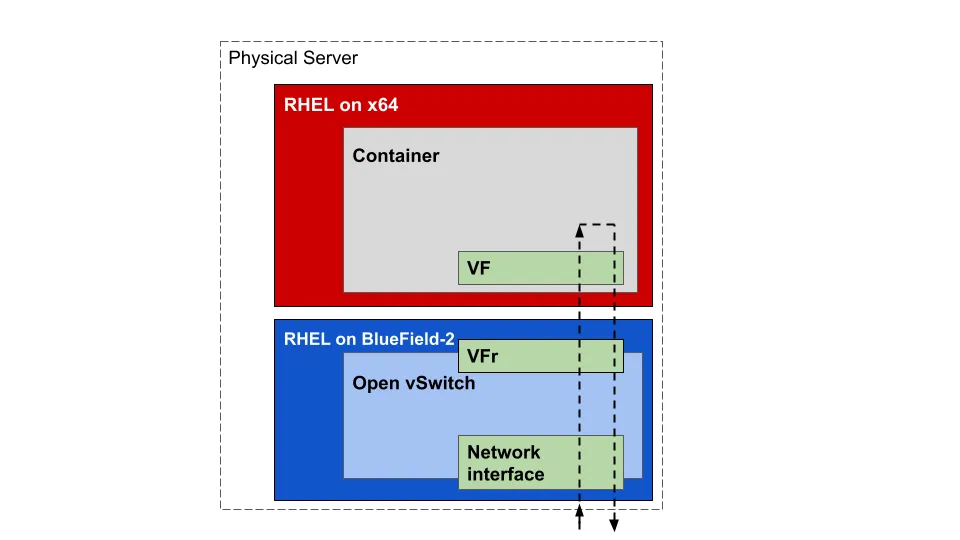

We began by installing RHEL 8 on both the server and the BlueField-2 DPU. On the server we’ve put our benchmark application, iperf3, inside a container along with a virtual function (VF). On the BlueField-2 DPU we ran Open vSwitch (OVS) software with the goal of reducing the x64 host’s computational load required for processing network traffic.

Figure 1: Virtual Function (VF) is placed inside the container and is associated with a Virtual Function Representor (VFr) on the BlueField-2.

- OVS, IPsec, Geneve, and other network functions are offloaded to the BlueField-2’s Arm processor cores.
- OVS flows are offloaded to the NVIDIA BlueField-2’s network hardware accelerators.

The BlueField-2 does the heavy lifting in the following areas: Geneve encapsulation, IPsec encapsulation/decapsulation and encryption/decryption, routing, and network address translation. The x64 host and container see only simple unencapsulated, unencrypted packets.
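To give a sense of what Geneve encapsulation adds to every packet, here is a minimal Python sketch that builds the 8-byte Geneve base header as laid out in RFC 8926 and adds up the outer headers for the option-free IPv4 case. The function names are hypothetical and chosen for illustration. This is not code from our test setup, and IPsec overhead is not modeled.

```python
import struct

GENEVE_UDP_PORT = 6081   # IANA-assigned UDP destination port for Geneve
ETH_BRIDGING = 0x6558    # protocol type for an encapsulated Ethernet frame


def geneve_base_header(vni, options=b"", critical=False, oam=False):
    """Build a Geneve header (version 0) followed by pre-encoded options."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    if len(options) % 4 or len(options) > 252:
        raise ValueError("options must be a multiple of 4 bytes, at most 252")
    # Byte 0: 2-bit version (0) and 6-bit option length in 4-byte words.
    first = (0 << 6) | (len(options) // 4)
    # Byte 1: O (control packet) bit, C (critical options) bit, 6 reserved bits.
    second = (int(oam) << 7) | (int(critical) << 6)
    # Last 4 bytes: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!BBHI", first, second, ETH_BRIDGING, vni << 8) + options


def geneve_overhead_ipv4(options_len=0):
    """Bytes added per packet: outer Ethernet + IPv4 + UDP + Geneve headers."""
    return 14 + 20 + 8 + 8 + options_len
```

With no options, `geneve_overhead_ipv4()` comes to 50 bytes per packet, all of which has to be added on transmit and stripped on receive by whichever processor handles the tunnel.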

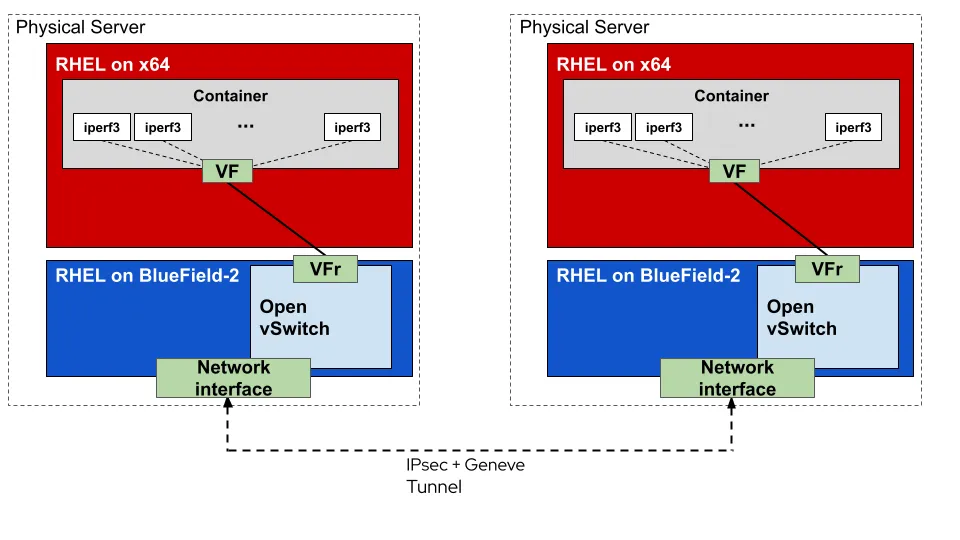

In our benchmark testing we compare the CPU utilization of the server with the network functions running on the x64 host versus running on the BlueField-2. This test simulates east-to-west traffic inside a data center and demonstrates server CPU resource savings resulting from offloading. Naturally, once CPU resources are freed, they can be used to run additional applications and workloads on the host.

The setup for this test is similar to the one described previously. To capture results with the network function offloaded to the BlueField-2, we used two servers, each with a 100 Gbps BlueField-2 DPU, directly connected back-to-back without a switch.

Figure 2: Test topology for the network function offloaded to BlueField-2

For the non-offload test (network function running on x64 host) our methodology is identical with two exceptions: OVS runs on the x64 CPU and BlueField-2 is replaced by a generic, non-intelligent network interface card (NIC) without hardware offload capabilities.

Traffic generation in all cases was done with multiple iperf3 (TCP) instances running inside the container. Dedicated x64 CPU cores were allocated to iperf3. The CPU utilization we report, however, covers only the network function: utilization due to traffic generation is excluded by considering only cores other than those that run iperf3.
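The idea of counting only the cores that do not run iperf3 can be sketched in Python as follows. This is an illustrative sketch, not the tooling used in our tests: it assumes utilization is derived from two Linux `/proc/stat` snapshots, and the function names and the `iperf_cores` parameter are hypothetical.

```python
def parse_proc_stat(text):
    """Return {core_id: (busy_ticks, total_ticks)} from /proc/stat-style text."""
    cores = {}
    for line in text.splitlines():
        fields = line.split()
        # Keep per-core lines ("cpu0", "cpu1", ...); skip the aggregate "cpu" line.
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        core_id = int(fields[0][3:])
        # Columns: user nice system idle iowait irq softirq steal
        ticks = [int(value) for value in fields[1:9]]
        idle = ticks[3] + ticks[4]  # idle + iowait count as not busy
        total = sum(ticks)
        cores[core_id] = (total - idle, total)
    return cores


def network_cpu_utilization(before, after, iperf_cores):
    """Sum of per-core busy fractions, skipping the cores that run iperf3.

    The result is in units of fully busy cores: 1.5 means one and a half cores.
    """
    start, end = parse_proc_stat(before), parse_proc_stat(after)
    used = 0.0
    for core_id, (busy_end, total_end) in end.items():
        if core_id in iperf_cores:
            continue  # traffic generation is excluded from the measurement
        busy_start, total_start = start[core_id]
        delta_total = total_end - total_start
        if delta_total > 0:
            used += (busy_end - busy_start) / delta_total
    return used
```

On a Linux host, the two snapshots could be read from `/proc/stat` a few seconds apart while the benchmark is running, with `iperf_cores` set to the cores the iperf3 instances are pinned to.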

We found that without BlueField-2 offload capabilities, when the IPsec network function ran on the x64 CPUs, we were unable to reach the 100 Gbps throughput (also called line rate) that the NIC is rated for. This was not a surprise, as the IPsec protocol is computationally intensive and saturated the host's x64 CPUs. Adding Geneve tunneling only further increased the computational demand on the x64 servers.

For the offload test, BlueField-2 had no problem achieving 100 Gbps line rate while encrypting and tunneling the network traffic.

Figure 3: CPU utilization includes both the receive (RX) and transmit (TX) servers taken as an aggregate.

* - CPU utilization measured during testing reflects only the number of cores required for processing network traffic

** - During testing x64 server CPUs were not able to process the encrypted network traffic at 100 Gbps

Test results with OpenShift

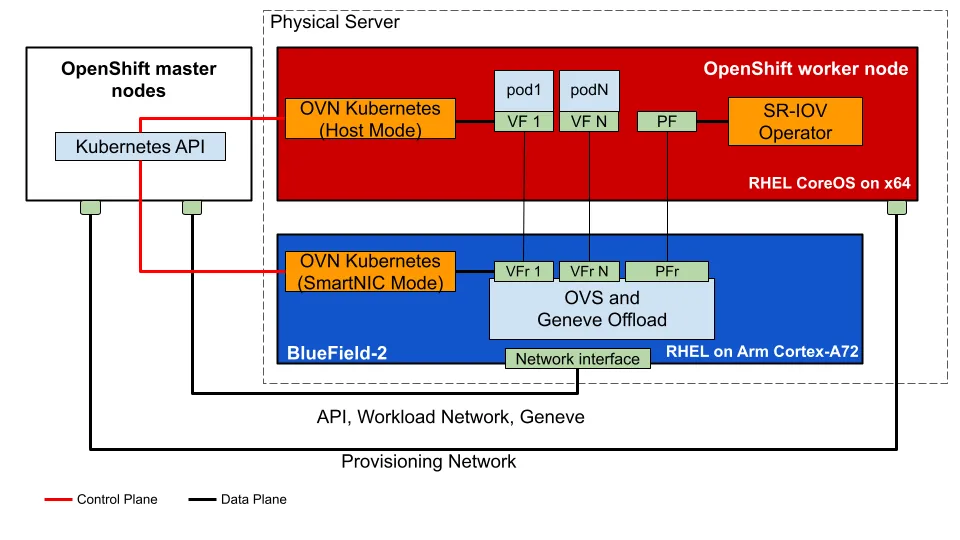

To further build on our RHEL testing, we decided to integrate BlueField-2 into an OpenShift cluster. To accomplish this, a few changes to configuration were required. The primary change was to run the OVN-Kubernetes pod, a large piece of the OpenShift network function, on the BlueField-2 DPU. Coordination with existing Kubernetes Operators to attach virtual functions (VFs) to the workload pods running on the x64 worker node was also required. These VFs have an associated virtual function representor (VFr) on the BlueField-2. This pairing of VFs and VFrs allows the BlueField-2 to pass simple unencapsulated packets directly to the workload pod running on the x64 host.

Figure 4: Test topology for OpenShift cluster with network function offloaded to NVIDIA BlueField-2

We used iperf3 (TCP) as a network traffic generator running inside OpenShift-instantiated pods on the x64 host. The BlueField-2 DPUs used in this test are rated at 25 Gbps per network interface. OpenShift worker pods communicate through Geneve tunnels. As before, we’re comparing CPU utilization when the network function runs on the x64 host versus running on the BlueField-2.

We found that our x64 server running Geneve is able to saturate the 25 Gbps link when the network function is deployed completely on the host.

After moving the network function to BlueField-2, we saw the server’s CPU utilization drop by 70% without affecting the network throughput.
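A percentage drop and an "N times lower" factor describe the same change in different ways. The short Python sketch below converts between them. The helper names are hypothetical, and the 10-core baseline in the usage note is a made-up figure for illustration, not a measured result.

```python
def reduction_factor(percent_drop):
    """Convert a percentage drop in CPU utilization into an 'N times lower' factor."""
    remaining = 1.0 - percent_drop / 100.0
    if remaining <= 0:
        raise ValueError("percent_drop must be below 100")
    return 1.0 / remaining


def cores_freed(cores_before, percent_drop):
    """Cores made available to applications after the drop."""
    return cores_before * percent_drop / 100.0
```

For example, `reduction_factor(70)` is about 3.3, so a 70% drop means the network function needs roughly a third of the CPU it needed before. If the network function had been keeping a hypothetical 10 cores busy, `cores_freed(10, 70)` gives 7 cores returned to application workloads.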

Figure 5: CPU utilization includes both the receive (RX) and transmit (TX) servers taken as an aggregate.

* - CPU utilization measured during testing reflects only the number of cores required for processing network traffic

Additional integration work on OpenShift

In this round of testing, we’ve only considered the improvements in x64 server utilization from offloading Geneve to the BlueField-2 DPU on OpenShift. BlueField-2 has many other capabilities that provide further opportunities to offload the x64 host CPU on the OpenShift worker nodes. As an example, there's IPsec, which is supported in OpenShift version 4.7 and later.

In the future, we would like to test other OpenShift integration improvements, including:

- BlueField hardware being recognized directly by the OpenShift cluster
- IPsec (encrypted east-to-west traffic)
- Shared gateway mode
- DNS Proxy pods moved to BlueField-2 DPU

Benefits of using DPUs for network function offloading

Unlike traditional single-purpose accelerators, the BlueField-2 DPU provides security isolation and architectural compartmentalization while managing multiple software-defined, hardware-accelerated functions. An important aspect of this flexibility is the capability to run a general-purpose operating system, such as RHEL, that manages software-defined functions on Arm processor cores to help address the exploding complexity of running layered software stacks on the host. Among other benefits, running enterprise-grade RHEL on BlueField-2 provides additional protection against common vulnerabilities and exposures (CVE) threats while reducing the attack surface.

All of those benefits, combined with the fact that a single BlueField-2 card can reduce CPU utilization in an x64 server by 3x while maintaining the same network throughput, make BlueField-2 DPUs very attractive for optimizing network functions in heavily loaded datacenters.

Additionally, the combination of OpenShift and RHEL running on BlueField-2 provides a fertile ground for designing solutions that are focused on stability and reliability while providing performance and security advantages backed by enterprise-grade support.

These solutions extend across many applications and use cases, helping customers to manage their applications and datacenters. At Red Hat, we see this as a key area of innovation and a valuable opportunity to collaborate with partners like NVIDIA on the upstream open source projects that support these new technologies and use cases.

Learn more about Red Hat OpenShift and NVIDIA BlueField-2 at NVIDIA’s GTC, April 12th-16th. Registration is free.

About the authors

Eric has been at Red Hat since 2016. In his tenure he has contributed to many open source projects: Linux, Open vSwitch, nftables and firewalld. He has been the upstream maintainer of firewalld since 2017.

Rashid Khan is a seasoned technology leader with over 25 years of engineering expertise, currently serving as the Senior Director of Core Platforms at Red Hat. In this role, he oversees the development and strategic direction of networking technologies, focusing on innovation in cloud and bare metal networking. His work extends to significant contributions in hardware enablement for the edge, cloud and AI. Rashid is also actively involved in the open source communities, holding esteemed positions such as Chairman of the Board of Governors at DPDK (Data Plane Development Kit) under the Linux Foundation. Furthermore, he is a member of the Board of Governors at OPI (Open Programmable Infrastructure) within the Linux Foundation, where he plays a pivotal role in shaping the future of open source technologies and their applications across various industries. Rashid's commitment to advancing technology and fostering a collaborative ecosystem reflects his deep dedication to both innovation and community building.

Yan Fisher is a Global evangelist at Red Hat where he extends his expertise in enterprise computing to emerging areas that Red Hat is exploring.

Fisher has a deep background in systems design and architecture. He has spent the past 20 years of his career working in the computer and telecommunication industries, where he has tackled areas ranging from sales and operations to systems performance and benchmarking.

Having an eye for innovative approaches, Fisher closely tracks partners' emerging technology strategies as well as customer perspectives on several nascent topics such as performance-sensitive workloads and accelerators, hardware innovation and alternative architectures, and exascale and edge computing.