The Red Hat OpenShift networking architecture provides a robust and scalable front end for a myriad of containerized applications. Services provide simple load balancing based on pod labels, and routes expose those services to the external network. These concepts work very well for microservices, but they can prove challenging for applications running in virtual machines on OpenShift Virtualization, where existing server management infrastructure assumes full, continuous access to the virtual machines.

In a previous article, I demonstrated how to install and configure OpenShift Virtualization and how to run a basic virtual machine. In this article, I discuss the different options for configuring your OpenShift Virtualization cluster so that virtual machines can access the external network in much the same way as with other popular hypervisors.

Connecting OpenShift to external networks

OpenShift can be configured to access external networks in addition to the internal pod network. This is achieved with the NMState operator, which you can install from the OpenShift Operator Hub.

The NMState operator works with Multus, a CNI plugin for OpenShift that allows pods to communicate with multiple networks. Because we already have at least one great article on Multus, I've omitted the detailed explanation here and focus instead on how to use NMState and Multus to connect virtual machines to multiple networks.

Overview of NMState components

After you install the NMState operator, three CustomResourceDefinitions (CRDs) are added to the cluster. You interact with these objects when you configure network interfaces on the OpenShift nodes.

NodeNetworkState (nodenetworkstates.nmstate.io) establishes one NodeNetworkState (NNS) object for each cluster node. The contents of the object detail the current network state of that node.

NodeNetworkConfigurationPolicies (nodenetworkconfigurationpolicies.nmstate.io) is a policy that tells the NMState operator how to configure different network interfaces on groups of nodes. In short, these policies represent the configuration changes to the OpenShift nodes.

NodeNetworkConfigurationEnactments (nodenetworkconfigurationenactments.nmstate.io) stores the results of each applied NodeNetworkConfigurationPolicy (NNCP) in NodeNetworkConfigurationEnactment (NNCE) objects. There is one NNCE for each node, for each NNCP.

With those definitions out of the way, you can move on to configuring the network interfaces on the OpenShift nodes. The hardware in the lab used for this article includes three network interfaces. The first is enp1s0, which was already configured during cluster installation as part of the br-ex bridge. This bridge is the interface I use in Option #1. The second interface, enp2s0, is on the same network as enp1s0, and I use it to configure the OVS bridge br0 in Option #2. Finally, the interface enp8s0 is connected to a separate network with no internet access and no DHCP server. I use this interface to configure the Linux bridge br1 in Option #3.

Option #1: Using an external network with a single NIC

If your OpenShift nodes have only a single NIC for networking, the only option for connecting virtual machines to the external network is to reuse the br-ex bridge, which is present by default on all nodes in an OVN-Kubernetes cluster. This means that this option may not be available to clusters using the older OpenShift-SDN.

Because it's not possible to completely reconfigure the br-ex bridge without adversely affecting the basic operation of the cluster, you must add a local network to that bridge instead. You can do this with the following NodeNetworkConfigurationPolicy:

    $ cat br-ex-nncp.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br-ex-network
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ''
      desiredState:
        ovn:
          bridge-mappings:
          - localnet: br-ex-network
            bridge: br-ex
            state: present

For the most part, the example above is the same in every environment that adds a local network to the br-ex bridge. The only parts that commonly change are the name of the NNCP (.metadata.name) and the name of the localnet (under .spec.desiredState.ovn.bridge-mappings). In this example they are both br-ex-network, but the names are arbitrary and need not match. Whatever value you use for localnet is needed when you configure the NetworkAttachmentDefinition, so make a note of it.
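Because only the NNCP name and the localnet name normally differ between environments, the manifest can be generated from two shell variables. This is a minimal sketch, not part of the original procedure: the variable names NNCP_NAME and LOCALNET_NAME are my own, and the file it writes is meant to be equivalent to br-ex-nncp.yaml.

```shell
# Assumed variable names; only these two values normally differ per environment.
NNCP_NAME="br-ex-network"
LOCALNET_NAME="br-ex-network"

# The heredoc delimiter is unquoted, so the variables are expanded into the file.
cat > br-ex-nncp.yaml <<EOF
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: ${NNCP_NAME}
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ''
  desiredState:
    ovn:
      bridge-mappings:
      - localnet: ${LOCALNET_NAME}
        bridge: br-ex
        state: present
EOF
```

Keeping the localnet name in a variable also makes it easier to reuse the same value later in the NetworkAttachmentDefinition.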

Apply the NNCP configuration to the cluster nodes:

    $ oc apply -f br-ex-nncp.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br-ex-network

Check the progress of the NNCP and NNCE with these commands:

    $ oc get nncp
    NAME            STATUS        REASON
    br-ex-network   Progressing   ConfigurationProgressing

    $ oc get nnce
    NAME                               STATUS        STATUS AGE   REASON
    lt01ocp10.matt.lab.br-ex-network   Progressing   1s           ConfigurationProgressing
    lt01ocp11.matt.lab.br-ex-network   Progressing   3s           ConfigurationProgressing
    lt01ocp12.matt.lab.br-ex-network   Progressing   4s           ConfigurationProgressing

In this case, the single NNCP called br-ex-network has spawned an NNCE for each node. After a few seconds, the process is complete:

    $ oc get nncp
    NAME            STATUS      REASON
    br-ex-network   Available   SuccessfullyConfigured

    $ oc get nnce
    NAME                               STATUS      STATUS AGE   REASON
    lt01ocp10.matt.lab.br-ex-network   Available   83s          SuccessfullyConfigured
    lt01ocp11.matt.lab.br-ex-network   Available   108s         SuccessfullyConfigured
    lt01ocp12.matt.lab.br-ex-network   Available   109s         SuccessfullyConfigured

You can now move on to the NetworkAttachmentDefinition, which defines how your virtual machines attach to the new network you've just created.
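If you script these steps, you may prefer to block until the policy reports the Available status instead of re-running oc get nncp by hand. The following is a sketch under assumptions: the helper name wait_for_nncp and the 120-second default timeout are my own choices, and it requires a logged-in oc client.

```shell
# Hypothetical helper: block until the named NNCP reports the Available condition.
# Usage: wait_for_nncp <nncp-name> [timeout]
wait_for_nncp() {
    # The timeout defaults to 120s, an arbitrary value chosen for this sketch.
    oc wait "nncp/$1" --for=condition=Available --timeout="${2:-120s}"
}
```

For the policy in this example, the call would be wait_for_nncp br-ex-network.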

NetworkAttachmentDefinition configuration

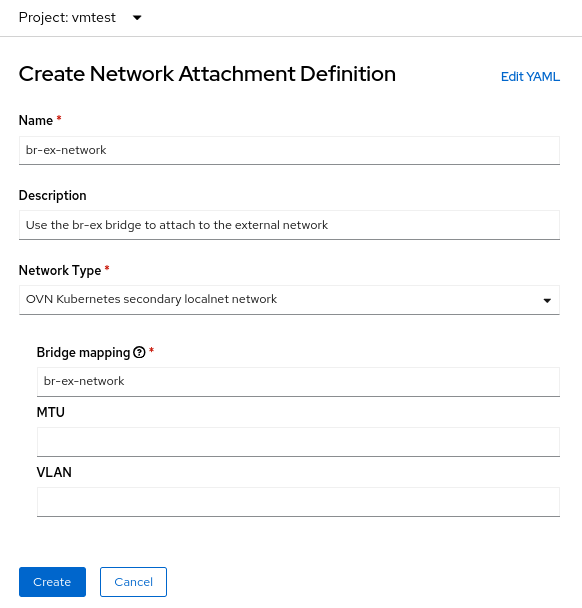

To create a NetworkAttachmentDefinition in the OpenShift console, select the project where you create your virtual machines (vmtest in this example) and navigate to Networking > NetworkAttachmentDefinitions. Then click the blue button labeled Create network attachment definition.

The console presents a form you can use to create the NetworkAttachmentDefinition.

The Name field is arbitrary, but in this example I use the same name I used for the NNCP (br-ex-network). For Network Type, choose OVN Kubernetes secondary localnet network.

In the Bridge mapping field, enter the name of the localnet you configured earlier (br-ex-network in this example). Because the field asks for a "bridge mapping", you may be tempted to enter "br-ex", but you must in fact use the localnet you created, which is already connected to br-ex.

Alternatively, you can create the NetworkAttachmentDefinition using a YAML file instead of the console:

    $ cat br-ex-network-nad.yaml
    apiVersion: k8s.cni.cncf.io/v1
    kind: NetworkAttachmentDefinition
    metadata:
      name: br-ex-network
      namespace: vmtest
    spec:
      config: '{
        "name":"br-ex-network",
        "type":"ovn-k8s-cni-overlay",
        "cniVersion":"0.4.0",
        "topology":"localnet",
        "netAttachDefName":"vmtest/br-ex-network"
      }'

    $ oc apply -f br-ex-network-nad.yaml
    networkattachmentdefinition.k8s.cni.cncf.io/br-ex-network created

In the NetworkAttachmentDefinition YAML above, the name field in .spec.config is the name of the localnet from the NNCP, and netAttachDefName is the namespace/name pair, which must match the namespace and name fields in the .metadata section (in this case, vmtest/br-ex-network).
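Since netAttachDefName has to repeat the namespace and name from .metadata, one way to avoid a mismatch is to derive all three from the same variables. This is a sketch, assuming variable names of my own (NAMESPACE, NAD_NAME, LOCALNET_NAME); it writes the JSON as a YAML block scalar rather than a quoted string, which is a formatting choice and not a change in content.

```shell
# Assumed variable names for the values that must stay consistent.
NAMESPACE="vmtest"
NAD_NAME="br-ex-network"
LOCALNET_NAME="br-ex-network"   # must equal the localnet name used in the NNCP

# netAttachDefName is built from NAMESPACE and NAD_NAME, so it cannot drift
# from the metadata fields.
cat > br-ex-network-nad.yaml <<EOF
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: ${NAD_NAME}
  namespace: ${NAMESPACE}
spec:
  config: |
    {
      "name": "${LOCALNET_NAME}",
      "type": "ovn-k8s-cni-overlay",
      "cniVersion": "0.4.0",
      "topology": "localnet",
      "netAttachDefName": "${NAMESPACE}/${NAD_NAME}"
    }
EOF
```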

Virtual machines use static IPs or DHCP for IP addressing, so IP address management (IPAM) is not configured in the NetworkAttachmentDefinition.

Virtual machine NIC configuration

To use the new external network with a virtual machine, modify the Network interfaces section of the virtual machine and select the new vmtest/br-ex-network as the Network. You can also customize the MAC address in this form.

Continue creating the virtual machine as you normally would. After the virtual machine boots, its virtual NIC is connected to the external network. In this example, the external network has a DHCP server, so an IP address is assigned automatically and network access works.
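If you define virtual machines in YAML rather than through the console form, the same attachment is typically expressed as a bridge-bound interface paired with a Multus network entry. The following sketch writes only that fragment of a VirtualMachine manifest to a file for reference; the interface name nic-external and the file name are hypothetical.

```shell
# Write a fragment (not a complete VirtualMachine object) showing where the
# secondary network is referenced. The quoted delimiter keeps the text literal.
cat > vm-nic-fragment.yaml <<'EOF'
spec:
  template:
    spec:
      domain:
        devices:
          interfaces:
          - name: nic-external      # hypothetical interface name
            bridge: {}
      networks:
      - name: nic-external          # must match the interface name above
        multus:
          networkName: vmtest/br-ex-network
EOF
```

The networkName value is the namespace/name of the NetworkAttachmentDefinition, the same pair used for netAttachDefName.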

Delete the NetworkAttachmentDefinition and the localnet on br-ex

If you want to undo the steps above, first make sure that no virtual machines are using the NetworkAttachmentDefinition, and then delete it using the console. Alternatively, use the command:

    $ oc delete network-attachment-definition/br-ex-network -n vmtest
    networkattachmentdefinition.k8s.cni.cncf.io "br-ex-network" deleted

Next, delete the NodeNetworkConfigurationPolicy. Deleting the policy does not undo the changes on the OpenShift nodes.

    $ oc delete nncp/br-ex-network
    nodenetworkconfigurationpolicy.nmstate.io "br-ex-network" deleted

Deleting the NNCP also deletes all associated NNCEs:

    $ oc get nnce
    No resources found

Finally, modify the NNCP YAML file used earlier, changing the bridge mapping state from present to absent:

    $ cat br-ex-nncp.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br-ex-network
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ''
      desiredState:
        ovn:
          bridge-mappings:
          - localnet: br-ex-network
            bridge: br-ex
            state: absent  # Changed from present

Reapply the updated NNCP:

    $ oc apply -f br-ex-nncp.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br-ex-network

    $ oc get nncp
    NAME            STATUS      REASON
    br-ex-network   Available   SuccessfullyConfigured

    $ oc get nnce
    NAME                               STATUS      STATUS AGE   REASON
    lt01ocp10.matt.lab.br-ex-network   Available   2s           SuccessfullyConfigured
    lt01ocp11.matt.lab.br-ex-network   Available   29s          SuccessfullyConfigured
    lt01ocp12.matt.lab.br-ex-network   Available   30s          SuccessfullyConfigured

The localnet configuration has been removed. You can now safely delete the NNCP.

Option #2: Using an external network with an OVS bridge on a dedicated NIC

OpenShift nodes can be connected to multiple networks using different physical NICs. Although many configuration options exist (such as bonding and VLANs), in this example I use a dedicated NIC to configure an OVS bridge. For more information on advanced configuration options, such as creating bonds or using a VLAN, refer to our documentation.

You can inspect the interfaces on a node through its NodeNetworkState. This command shows one of them (the output is truncated here for convenience):

    $ oc get nns/lt01ocp10.matt.lab -o jsonpath='{.status.currentState.interfaces[3]}' | jq
    {
      …
      "mac-address": "52:54:00:92:BB:00",
      "max-mtu": 65535,
      "min-mtu": 68,
      "mtu": 1500,
      "name": "enp2s0",
      "permanent-mac-address": "52:54:00:92:BB:00",
      "profile-name": "Wired connection 1",
      "state": "up",
      "type": "ethernet"
    }

In this example, the unused network adapter on all nodes is enp2s0.
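The position of an interface in the NodeNetworkState list can differ from node to node, so selecting it by name may be more robust than using a fixed index. The following sketch relies on a JSONPath filter expression; the helper name show_node_interface is hypothetical, and it requires a logged-in oc client.

```shell
# Hypothetical helper: print one interface from a node's NodeNetworkState,
# selected by interface name instead of list position.
# Usage: show_node_interface <node-name> <interface-name>
show_node_interface() {
    oc get "nns/$1" -o jsonpath="{.status.currentState.interfaces[?(@.name==\"$2\")]}"
}
```

For the node shown above, show_node_interface lt01ocp10.matt.lab enp2s0 | jq would select the same interface by name.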

As in the previous example, start with an NNCP that creates a new OVS bridge called br0 on the nodes, using an unused NIC (enp2s0, in this example). The NNCP looks like this:

    $ cat br0-worker-ovs-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br0-ovs
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br0
          description: |-
            A dedicated OVS bridge with enp2s0 as a port
            allowing all VLANs and untagged traffic
          type: ovs-bridge
          state: up
          bridge:
            options:
              stp: true
            port:
            - name: enp2s0
        ovn:
          bridge-mappings:
          - localnet: br0-network
            bridge: br0
            state: present

The first thing to note about the example above is the .metadata.name field, which is arbitrary and identifies the name of the NNCP. Near the end of the file, you can also see that you're adding a localnet bridge mapping to the new br0 bridge, just as you did with br-ex in the single NIC example.

In the previous example using br-ex as the bridge, it was safe to assume that every OpenShift node has a bridge with that name, so you applied the NNCP to all worker nodes. In the current scenario, however, it's possible to have heterogeneous nodes with different NIC names for the same networks. In that case, you must add a label to each node type to identify which configuration it has. Then, using .spec.nodeSelector from the example above, you can apply the configuration only to the nodes it works on. For the other node types, you can modify the NNCP and nodeSelector to create the same bridge on those nodes, even when the underlying NIC name is different.
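To make the heterogeneous case concrete, here is a sketch of a second NNCP for a node type whose spare NIC has a different name. Everything specific to it is hypothetical: the label key example.com/nic-layout, the value type-b, the NIC name ens3, and the policy and file names. The bridge and localnet names stay the same so that one NetworkAttachmentDefinition works on both node types.

```shell
# Hypothetical label and NIC name for a second hardware type.
NODE_LABEL="example.com/nic-layout"
NODE_LABEL_VALUE="type-b"
NIC_NAME="ens3"

# The matching nodes need the label first (requires a logged-in oc client):
#   oc label node <node-name> example.com/nic-layout=type-b

# Same bridge and localnet as before; only the selector and the port differ.
cat > br0-worker-ovs-bridge-type-b.yaml <<EOF
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: br0-ovs-type-b
spec:
  nodeSelector:
    ${NODE_LABEL}: "${NODE_LABEL_VALUE}"
  desiredState:
    interfaces:
    - name: br0
      type: ovs-bridge
      state: up
      bridge:
        options:
          stp: true
        port:
        - name: ${NIC_NAME}
    ovn:
      bridge-mappings:
      - localnet: br0-network
        bridge: br0
        state: present
EOF
```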

In this example, the NIC names are all the same, so you can use the same NNCP for all nodes.

Now apply the NNCP, just as you did for the single NIC example:

    $ oc apply -f br0-worker-ovs-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br0-ovs created

As before, the NNCP and NNCE take a few seconds to be created and applied. You can see the new bridge in the NNS:

    $ oc get nns/lt01ocp10.matt.lab -o jsonpath='{.status.currentState.interfaces[3]}' | jq
    {
      …
        "port": [
          {
            "name": "enp2s0"
          }
        ]
      },
      "description": "A dedicated OVS bridge with enp2s0 as a port allowing all VLANs and untagged traffic",
      …
      "name": "br0",
      "ovs-db": {
        "external_ids": {},
        "other_config": {}
      },
      "profile-name": "br0-br",
      "state": "up",
      "type": "ovs-bridge",
      "wait-ip": "any"
    }

NetworkAttachmentDefinition configuration

The process of creating a NetworkAttachmentDefinition for an OVS bridge is identical to the earlier single NIC example, because in both cases you're creating an OVN bridge mapping. In the current example, the bridge mapping name is br0-network, so that's what you use in the NetworkAttachmentDefinition creation form.

Alternatively, you can create the NetworkAttachmentDefinition using a YAML file:

    $ cat br0-network-nad.yaml
    apiVersion: k8s.cni.cncf.io/v1
    kind: NetworkAttachmentDefinition
    metadata:
      name: br0-network
      namespace: vmtest
    spec:
      config: '{
        "name":"br0-network",
        "type":"ovn-k8s-cni-overlay",
        "cniVersion":"0.4.0",
        "topology":"localnet",
        "netAttachDefName":"vmtest/br0-network"
      }'

    $ oc apply -f br0-network-nad.yaml
    networkattachmentdefinition.k8s.cni.cncf.io/br0-network created

Virtual machine NIC configuration

As before, create a virtual machine as usual, using the new NetworkAttachmentDefinition called vmtest/br0-network for the NIC network.

When the virtual machine boots, its NIC uses the br0 bridge on the node. In this example, the dedicated NIC for br0 is on the same network as the br-ex bridge, so the virtual machine gets an IP address from the same subnet as before, and network access works.

Delete the NetworkAttachmentDefinition and the OVS bridge

The process for deleting the NetworkAttachmentDefinition and the OVS bridge is mostly the same as in the previous example. Make sure that no virtual machines are using the NetworkAttachmentDefinition, and then delete it from the console or the command line:

    $ oc delete network-attachment-definition/br0-network -n vmtest
    networkattachmentdefinition.k8s.cni.cncf.io "br0-network" deleted

Next, delete the NodeNetworkConfigurationPolicy (remember that deleting the policy does not undo the changes on the OpenShift nodes):

    $ oc delete nncp/br0-ovs
    nodenetworkconfigurationpolicy.nmstate.io "br0-ovs" deleted

Deleting the NNCP also deletes the associated NNCEs:

    $ oc get nnce
    No resources found

Finally, modify the NNCP YAML file used earlier, changing the interface state from "up" to "absent" and the bridge mapping state from "present" to "absent":

    $ cat br0-worker-ovs-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br0-ovs
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br0
          description: |-
            A dedicated OVS bridge with enp2s0 as a port
            allowing all VLANs and untagged traffic
          type: ovs-bridge
          state: absent  # Changed from up
          bridge:
            options:
              stp: true
            port:
            - name: enp2s0
        ovn:
          bridge-mappings:
          - localnet: br0-network
            bridge: br0
            state: absent  # Changed from present

Reapply the updated NNCP. After the NNCP has finished processing, you can delete it.

    $ oc apply -f br0-worker-ovs-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br0-ovs created

Option #3: Using an external network with a Linux bridge on a dedicated NIC

In Linux networking, OVS bridges and Linux bridges serve the same purpose. The decision to use one over the other ultimately comes down to the needs of the environment. There are a myriad of articles on the internet that discuss the advantages and disadvantages of both bridge types. In short, Linux bridges are more mature and simpler than OVS bridges, but they are not as feature rich. OVS bridges offer more tunnel types and other modern features than Linux bridges, but they are a bit harder to troubleshoot. For the purposes of OpenShift Virtualization, you should probably default to OVS bridges over Linux bridges because of features such as MultiNetworkPolicy, but deployments can be successful with either option.

When a virtual machine interface is connected to an OVS bridge, the default MTU is 1400. When a virtual machine interface is connected to a Linux bridge, the default MTU is 1500. More information about the cluster MTU size can be found in the official documentation.

Node configuration

As in the previous example, I'm using a dedicated NIC to create a new Linux bridge. To keep things interesting, I'm connecting the Linux bridge to a network that has no DHCP server, to show how this affects virtual machines connected to that network.

In this example, I'm creating a Linux bridge called br1 on the interface enp8s0, which is attached to the 172.16.0.0/24 network and has no internet access or DHCP server. The NNCP looks like this:

    $ cat br1-worker-linux-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br1-linux-bridge
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br1
          description: Linux bridge with enp8s0 as a port
          type: linux-bridge
          state: up
          bridge:
            options:
              stp:
                enabled: false
            port:
            - name: enp8s0

    $ oc apply -f br1-worker-linux-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br1-linux-bridge created

As before, the NNCP and NNCE take a few seconds to be created and applied.

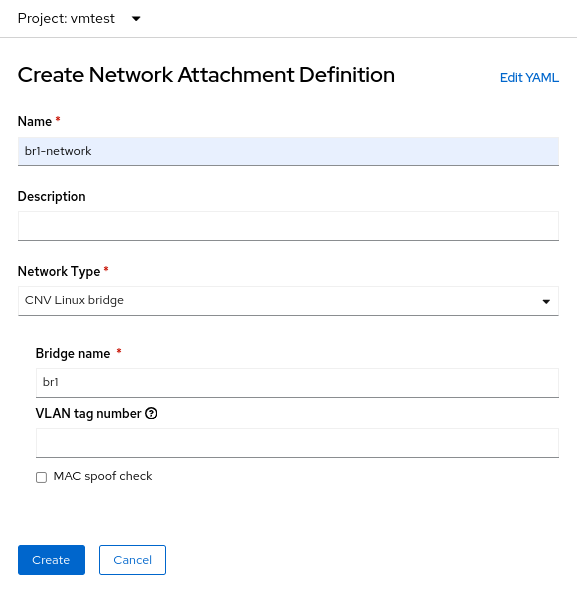

NetworkAttachmentDefinition configuration

The process of creating a NetworkAttachmentDefinition for a Linux bridge is slightly different from both of the earlier examples, because this time you aren't connecting to an OVN localnet. For a Linux bridge connection, you connect directly to the new bridge in the NetworkAttachmentDefinition creation form.

In this case, select the Network Type CNV Linux bridge and enter the name of the actual bridge in the Bridge name field, which is br1 in this example.
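The article only shows the console form for this step, so the following YAML equivalent is a sketch under assumptions rather than the author's own manifest. The CNI type cnv-bridge is assumed from the "CNV Linux bridge" network type named in the form (some OpenShift Virtualization releases document the type as bridge instead), and the cniVersion value and the file name are likewise assumptions. Check the documentation for your release before applying it.

```shell
# Assumptions: "cnv-bridge" as the CNI type and "0.3.1" as the cniVersion.
# The NetworkAttachmentDefinition name matches vmtest/br1-network, which the
# article uses when attaching the virtual machine NIC.
cat > br1-network-nad.yaml <<'EOF'
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: br1-network
  namespace: vmtest
spec:
  config: |
    {
      "name": "br1-network",
      "type": "cnv-bridge",
      "cniVersion": "0.3.1",
      "bridge": "br1"
    }
EOF
```

Unlike the localnet examples, the config refers to the bridge itself (br1) and contains no topology or netAttachDefName field.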

Virtual machine NIC configuration

Now create another virtual machine, but use the new NetworkAttachmentDefinition called vmtest/br1-network for the NIC network. This attaches the NIC to the new Linux bridge.

When the virtual machine boots, its NIC uses the br1 bridge on the node. In this example, the dedicated NIC is on a network with no DHCP server and no internet access, so assign the NIC an IP address manually using nmcli and validate connectivity on the local network only.
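The manual addressing step might look like the following inside the guest. This is a sketch: the helper name set_static_ip is hypothetical, and the connection name and address you pass to it depend on your virtual machine and on which addresses are free in 172.16.0.0/24.

```shell
# Hypothetical helper, run inside the guest: switch a NetworkManager
# connection to a static IPv4 address and activate it.
# Usage: set_static_ip <connection-name> <address/prefix>
set_static_ip() {
    nmcli connection modify "$1" ipv4.method manual ipv4.addresses "$2"
    nmcli connection up "$1"
}
```

For instance, set_static_ip "Wired connection 1" 172.16.0.10/24 would assign an example address from the lab network, after which you can ping another host on the same subnet to confirm local connectivity.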

Delete the NetworkAttachmentDefinition and the Linux bridge

As in the previous examples, to delete the NetworkAttachmentDefinition and the Linux bridge, first make sure that no virtual machines are using the NetworkAttachmentDefinition. Delete it from the console or the command line:

    $ oc delete network-attachment-definition/br1-network -n vmtest
    networkattachmentdefinition.k8s.cni.cncf.io "br1-network" deleted

Next, delete the NodeNetworkConfigurationPolicy:

    $ oc delete nncp/br1-linux-bridge
    nodenetworkconfigurationpolicy.nmstate.io "br1-linux-bridge" deleted

Deleting the NNCP also deletes all associated NNCEs (remember that deleting the policy does not undo the changes on the OpenShift nodes):

    $ oc get nnce
    No resources found

Finally, modify the NNCP YAML file, changing the interface state from up to absent:

    $ cat br1-worker-linux-bridge.yaml
    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: br1-linux-bridge
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ""
      desiredState:
        interfaces:
        - name: br1
          description: Linux bridge with enp8s0 as a port
          type: linux-bridge
          state: absent  # Changed from up
          bridge:
            options:
              stp:
                enabled: false
            port:
            - name: enp8s0

Reapply the updated NNCP. After it has finished processing, you can delete the NNCP.

    $ oc apply -f br1-worker-linux-bridge.yaml
    nodenetworkconfigurationpolicy.nmstate.io/br1-linux-bridge created

Advanced networking in OpenShift Virtualization

Many OpenShift Virtualization users can benefit from the variety of advanced networking features already built into Red Hat OpenShift. However, as more workloads are migrated from traditional hypervisors, you might want to leverage existing infrastructure. This may require connecting OpenShift Virtualization workloads directly to external networks. The NMState operator, in conjunction with Multus networking, provides a flexible way to achieve performance and connectivity, easing the transition from a traditional hypervisor to Red Hat OpenShift Virtualization.

To learn more about OpenShift Virtualization, you can read another blog post of mine on the topic, or take a look at the product on our website. For more information about the networking topics covered in this article, the Red Hat OpenShift documentation has all the details you need. Finally, if you want to see a demo or try OpenShift Virtualization yourself, contact your Account Executive.

About the author

Matthew Secaur is a Red Hat Principal Technical Account Manager (TAM) for Canada and the Northeast United States. He has expertise in Red Hat OpenShift Platform, Red Hat OpenShift Virtualization, Red Hat OpenStack Platform, and Red Hat Ceph Storage.
