Machine learning has become crucial across many sectors as more organizations use it to make data-driven decisions. However, training models on large datasets can be time-consuming and computationally expensive. This is where distributed training and Open Data Hub come into play.

Open Data Hub is an open source platform for running data science workloads on OpenShift. It includes a suite of tools and services for managing and scaling machine learning workloads. Open Data Hub can be deployed on-premises or in the cloud, and it is compatible with a wide range of data science tools and frameworks.

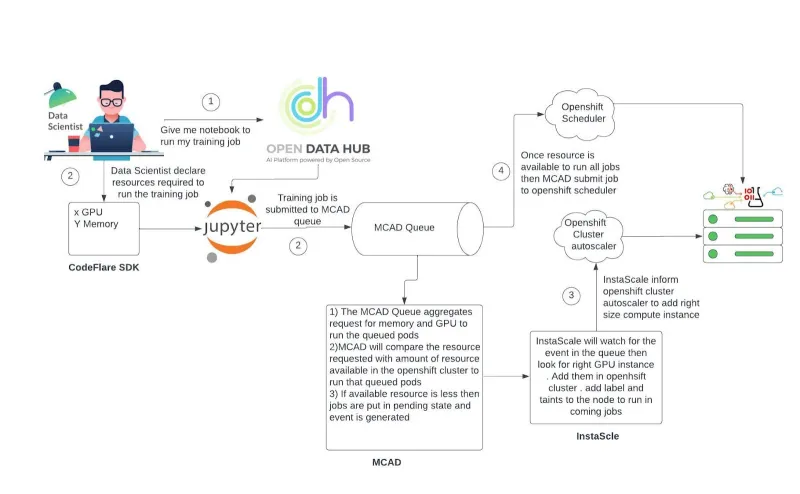

Distributed training trains a machine learning model on multiple nodes simultaneously, which speeds up training and makes it practical to use larger datasets and more complex models. With its new Distributed Workloads component, Open Data Hub provides a scalable and flexible way to run AI/ML model batch training jobs on large datasets. In this article, we explore the capabilities that make up the Distributed Workloads component, namely: Multi-Cluster Application Dispatcher (MCAD) queueing, job management, and dynamic provisioning of GPU infrastructure with InstaScale. We’ll also show how you can use these technologies for your own AI/ML model batch training.
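To make the idea of training on multiple nodes concrete, here is a minimal, self-contained simulation of synchronous data-parallel training, one common way to distribute training. Each worker computes a gradient on its own shard of the data, the gradients are averaged (the role a collective all-reduce plays in frameworks such as PyTorch), and every worker applies the same update. The linear model and the function names are illustrative assumptions; this is a sketch of the general technique, not how Open Data Hub itself trains models.

```python
# Toy simulation of synchronous data-parallel training (not Open Data Hub code).
from typing import List, Tuple

Sample = Tuple[float, float]  # one (x, y) training example


def shard(data: List[Sample], num_workers: int) -> List[List[Sample]]:
    # Round-robin split: worker i gets examples i, i + n, i + 2n, ...
    return [data[i::num_workers] for i in range(num_workers)]


def local_gradient(w: float, samples: List[Sample]) -> float:
    # Gradient of the mean squared error of the model y_hat = w * x on one shard.
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def all_reduce_mean(grads: List[float]) -> float:
    # Stand-in for a collective all-reduce: every worker receives the average gradient.
    return sum(grads) / len(grads)


def train(data: List[Sample], num_workers: int, steps: int, lr: float) -> float:
    shards = shard(data, num_workers)
    w = 0.0
    for _ in range(steps):
        # On a real cluster each local gradient is computed in parallel on its own node.
        grads = [local_gradient(w, s) for s in shards]
        # Every worker applies the same averaged update, so model replicas stay identical.
        w -= lr * all_reduce_mean(grads)
    return w


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 9)]  # generated with a true weight of 3
    print(round(train(data, num_workers=4, steps=200, lr=0.01), 4))  # prints 3.0
```

Because the four shards are the same size, the averaged shard gradients equal the full-batch gradient, so the distributed run converges to the same weight a single machine would; with uneven shards, a weighted average would be needed.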

Batch training is a popular technique for training machine learning models on large datasets. However, batch training workflows tend to be “all or nothing”: when a batch training job is submitted, all of its worker processes need to be scheduled and executed concurrently. Sufficient compute resources must be available when the job starts; otherwise, the training job fails. Furthermore, different data science groups often share the same compute infrastructure, and certain workloads may need to preempt others for that valuable but limited compute time. The new Distributed Workloads component of Open Data Hub addresses all of these problems. It provides a queue to which jobs can be submitted, and a queued job is not scheduled until the resources it needs are available. Open Data Hub can dynamically scale the OpenShift cluster to meet the resource requirements of these jobs, all within the confines of administrator-managed resource quotas, job groups, and priorities.
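The queueing behavior described above can be sketched as a small admission loop. The example below is a toy model under simplifying assumptions: GPUs are the only resource, each team has a fixed GPU quota, and a job is admitted only if every one of its workers can start at once. The `Job` and `Dispatcher` classes and their fields are hypothetical and do not reflect MCAD's actual algorithm or API.

```python
# Toy model of queued, all-or-nothing job admission with team quotas and priorities.
# The class and field names are hypothetical; this is not MCAD's actual algorithm or API.
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Job:
    name: str
    team: str
    priority: int         # a higher value is considered first
    workers: int          # worker processes that must all start together
    gpus_per_worker: int

    @property
    def total_gpus(self) -> int:
        return self.workers * self.gpus_per_worker


@dataclass
class Dispatcher:
    free_gpus: int
    quota: Dict[str, int]  # maximum GPUs each team may hold at once
    used: Dict[str, int] = field(default_factory=dict)
    queue: List[Job] = field(default_factory=list)

    def submit(self, job: Job) -> None:
        # Submission never fails for lack of capacity; the job simply waits in the queue.
        self.queue.append(job)

    def dispatch(self) -> List[str]:
        started = []
        # Highest priority first; sorted() is stable, so equal priorities keep FIFO order.
        for job in sorted(self.queue, key=lambda j: -j.priority):
            need = job.total_gpus
            within_quota = self.used.get(job.team, 0) + need <= self.quota.get(job.team, 0)
            # All-or-nothing: start every worker of the job at once, or leave it queued.
            if within_quota and need <= self.free_gpus:
                self.free_gpus -= need
                self.used[job.team] = self.used.get(job.team, 0) + need
                self.queue.remove(job)
                started.append(job.name)
        return started

    def finish(self, job: Job) -> None:
        # Return a completed job's GPUs to the shared pool and to its team's quota.
        self.free_gpus += job.total_gpus
        self.used[job.team] -= job.total_gpus
```

For example, with `Dispatcher(free_gpus=8, quota={"nlp": 8, "vision": 4})`, an 8-GPU, priority-5 job from the `nlp` team takes the whole pool on the first `dispatch()`, while a 6-GPU `vision` job that exceeds its team's 4-GPU quota stays queued instead of starting only some of its workers. This simplified loop also lets a smaller, lower-priority job start while a larger one is blocked; a production scheduler may apply a different policy.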

Open Data Hub’s new Distributed Workloads stack comprises the following features, which make AI/ML model batch training at scale easy and efficient:

Ease of use with CodeFlare SDK: The CodeFlare SDK is integrated into the out-of-the-box Open Data Hub (ODH) notebook images and provides an interactive client that data scientists use to define resource requirements (GPU, CPU, and memory) and to submit and manage training jobs.

Batch management with MCAD: MCAD manages the queue of training jobs that data scientists submit. It ensures that a job does not start until all of its required compute resources are available on the cluster, that a team does not request more aggregate resources than its quota allows, and that the highest-priority jobs run first. Finally, MCAD ensures that all processes needed for a distributed run are scheduled concurrently, so compute cycles aren’t wasted while some processes wait for others to be scheduled.

Dynamic scaling with InstaScale: InstaScale works alongside MCAD to ensure that the OpenShift cluster contains sufficient compute resources to execute a job. InstaScale optimizes compute costs by launching right-sized compute instances for a given job and releasing those instances when they are no longer needed.

Flexibility: Open Data Hub supports a wide range of machine learning frameworks, including TensorFlow, PyTorch, and Ray (KubeRay).
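The right-sizing idea behind InstaScale can be illustrated with a small cost calculation. This article does not describe InstaScale's actual selection logic or configuration, so the sketch below is only an assumption-laden illustration: given a hypothetical catalogue of machine types with made-up prices, it picks the machine type and node count that can host every worker of a job at the lowest hourly cost. Releasing nodes after the job finishes is the reverse step and is not modeled here.

```python
# Toy illustration of right-sizing nodes for a queued job. The machine types, prices,
# and function names are hypothetical; this is not InstaScale's actual logic.
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class MachineType:
    name: str
    cpus: int
    memory_gib: int
    gpus: int
    hourly_cost: float


@dataclass(frozen=True)
class WorkerRequest:
    cpus: int        # assumed > 0
    memory_gib: int  # assumed > 0
    gpus: int        # may be 0 for CPU-only workers


# Hypothetical catalogue; real instance types and prices depend on the cloud provider.
CATALOG = [
    MachineType("cpu-large", cpus=32, memory_gib=128, gpus=0, hourly_cost=1.50),
    MachineType("gpu-1x", cpus=8, memory_gib=64, gpus=1, hourly_cost=3.00),
    MachineType("gpu-4x", cpus=48, memory_gib=192, gpus=4, hourly_cost=10.00),
]


def workers_per_node(m: MachineType, r: WorkerRequest) -> int:
    # How many identical workers fit on one node of this type (0 means none fit).
    limits = [m.cpus // r.cpus, m.memory_gib // r.memory_gib]
    if r.gpus:
        limits.append(m.gpus // r.gpus)
    return min(limits)


def plan(catalog: List[MachineType], r: WorkerRequest,
         workers: int) -> Optional[Tuple[str, int, float]]:
    # Pick the machine type and node count that host every worker at the lowest hourly cost.
    best: Optional[Tuple[str, int, float]] = None
    for m in catalog:
        per_node = workers_per_node(m, r)
        if per_node == 0:
            continue  # a single worker does not fit on this machine type
        nodes = math.ceil(workers / per_node)
        cost = nodes * m.hourly_cost
        if best is None or cost < best[2]:
            best = (m.name, nodes, cost)
    return best  # None means no machine type can host even one worker
```

With this catalogue, `plan(CATALOG, WorkerRequest(cpus=6, memory_gib=32, gpus=1), workers=4)` returns `("gpu-4x", 1, 10.0)`, because one 4-GPU node is cheaper than four 1-GPU nodes, while a single worker requesting 4 CPUs, 16 GiB, and 1 GPU is placed on one `gpu-1x` node instead.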

Conclusion

The Distributed Workloads component in the newly released Open Data Hub 1.6 adds the ability to run and manage distributed batch training of AI/ML models. This is made possible by features such as MCAD queueing, job management, and InstaScale. With these capabilities, data scientists can make their machine learning workloads considerably more efficient, leading to better results and faster innovation. To get started with the new stack, check out the Open Data Hub Quick Start guide.
