Deploying critical PostgreSQL applications in the cloud requires both consistent performance and the resilience to protect data that is essential for business continuity. Together, technologies from Crunchy Data and Red Hat can help organizations deliver data resilience for critical PostgreSQL applications.

Red Hat OpenShift Container Storage plays an increasingly important role, letting organizations deploy reliable, scalable, and highly available persistent storage for their most important PostgreSQL applications. This single software-defined storage solution can be launched on premises, in the public cloud, or in hybrid cloud deployments, increasing agility and resilience even as it simplifies operations.

- The Crunchy PostgreSQL Operator for Red Hat OpenShift provides a way to create several PostgreSQL pods (multi-instance) with a single primary read-write PostgreSQL instance and multiple read-only replica PostgreSQL instances. The Operator offers database resilience and workload scalability through multiple secondary read-only instances. When a failure occurs, the Crunchy PostgreSQL Operator can promote a secondary instance to primary and continue operations.

- OpenShift Container Storage is based on container-native and Ceph storage, the Rook Kubernetes operator, and NooBaa multicloud object gateway technology. OpenShift Container Storage can offer storage redundancy to a PostgreSQL pod (single instance). The same storage layer deployment can also provide resilient and scalable storage to other databases and applications that need to store and access block, file, or object data.

The two approaches can be used individually, or combined for maximum resiliency, as befits the needs of the application.
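The operator-level approach, promoting a read-only replica when the primary fails, can be pictured with a small model. This is only an illustrative sketch: the class, the pod names, and the rule of promoting the first replica in the list are assumptions made for the example, not the Crunchy PostgreSQL Operator's actual election logic.

```python
from dataclasses import dataclass, field


@dataclass
class PostgresCluster:
    """Toy model: one read-write primary plus read-only replicas."""
    primary: str
    replicas: list[str] = field(default_factory=list)

    def fail_primary(self) -> str:
        """Drop the current primary and promote a replica in its place.

        Assumption: the first replica in the list is promoted. A real
        operator would apply its own selection criteria.
        """
        if not self.replicas:
            raise RuntimeError("no replica available to promote")
        failed = self.primary
        self.primary = self.replicas.pop(0)
        return failed


# Hypothetical pod names, used only for illustration.
cluster = PostgresCluster(primary="pg-0", replicas=["pg-1", "pg-2"])
failed = cluster.fail_primary()
print(f"{failed} failed; {cluster.primary} promoted; replicas left: {cluster.replicas}")
```

The storage-level approach differs in that the database pod itself stays a single instance and the redundancy lives in the volume underneath it.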

Recent Red Hat testing evaluated both the Crunchy PostgreSQL Operator for Red Hat OpenShift and OpenShift Container Storage. Testing demonstrated deterministic, scalable performance while providing resiliency for the database and continuity across three Amazon Web Services (AWS) Availability Zones.

Testing simulated hardware failures such as network events, operating system failures, database corruption, and outages, as well as events due to human error, such as storage pod deletion, system reboots, and node failures.

Storage-based resilience, performance, and cost

OpenShift Container Storage is container-native storage that lets application and development teams dynamically provision persistent volumes (PVs), quickly scaling or deprovisioning storage on demand. In addition, OpenShift Container Storage can provide data resilience both within a cloud provider availability zone and across multiple availability zones. The platform offers:

- Agility to streamline app and dev workflows.

- Scalability to support emerging data-intensive workloads.

- Consistency to provide a unified end-user experience for data services across Red Hat platforms in the hybrid cloud.

Application resilience, performance, and cost always represent trade-offs, and must be considered carefully. The Crunchy PostgreSQL Operator provides resiliency with read replicas and failover. Providing additional resilience at the storage layer with Red Hat OpenShift Container Storage offers additional flexibility and applicability across multiple applications and services.

Modern cloud environments offer considerable choice in terms of storage services. Public cloud customers can choose between general-purpose storage classes or higher performance direct-attached storage volumes for their PVs. These choices can have ramifications for performance and cost as well as functional considerations such as failover capabilities and whether those capabilities are manual or automatic.

Adding OpenShift Container Storage to Amazon Web Services (AWS) storage volumes can help provide automated data failover protection both within a single AWS Availability Zone and across multiple Availability Zones—independent of the storage class selected. Red Hat testing with Crunchy Data PostgreSQL showed that this additional resilience can be accomplished across multiple AWS-provided storage volume classes while adding functionality.

- Amazon Elastic Block Store (EBS) general-purpose (gp2) storage volumes offer failover within a single AWS Availability Zone. OpenShift Container Storage adds automatic failover for EBS gp2 volumes across multiple Availability Zones.

- For AWS instances with direct-attached storage, such as Amazon Elastic Compute Cloud (EC2) I3en instances, OpenShift Container Storage both automates failover within a single Availability Zone and adds automatic failover across multiple Availability Zones.

Performance summary

Previous Red Hat testing showed that Red Hat OpenShift Container Storage running on AWS EBS gp2 volumes delivered consistent performance when tested using Crunchy Data PostgreSQL pods, overcoming limitations encountered when using EBS gp2 volumes directly. Moreover, this approach added the ability to support high-performance, direct-attached storage (AWS EC2 I3en instances) while providing replication across multiple AWS Availability Zones.

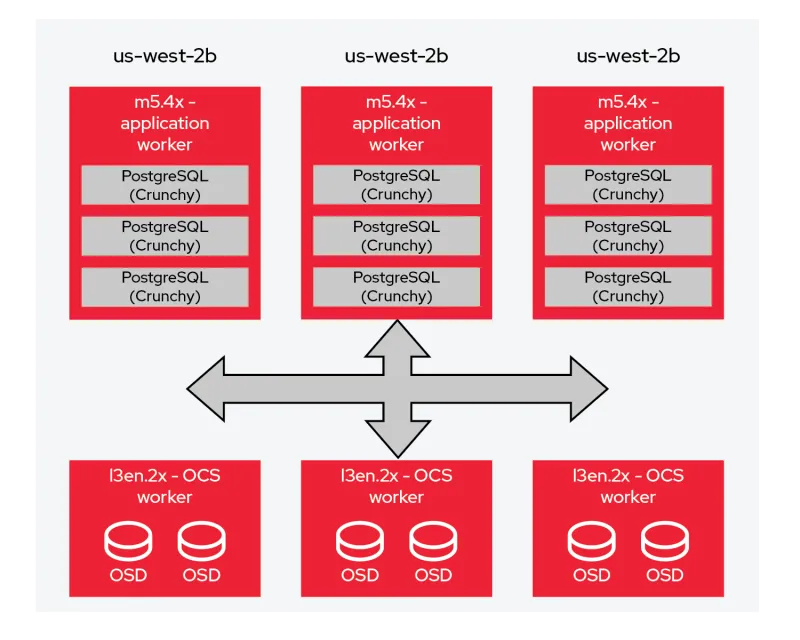

To evaluate performance further, Red Hat engineers compared two Crunchy Data PostgreSQL clusters running on OpenShift Container Storage. Each application worker node contained three Crunchy Data PostgreSQL databases for a total of nine. As shown, each OpenShift Container Storage worker node contained two Ceph object storage daemon (OSD) pods. Each of those pods used a single NVMe device, whether in a single or multiple availability zone environment.

One cluster was created within a single AWS Availability Zone (us-west-2b).

The second cluster was spread across three AWS Availability Zones (us-west-2a, us-west-2b, and us-west-2c).

Testing showed that the OSDs on each node had a stable and consistent spread of input/output (I/O) operations between the databases. This behavior demonstrated that Red Hat Ceph Storage handles the RADOS Block Device (RBD) volumes equally among the databases. Testing produced roughly 50 transactions per second (TPS) per database and 450 TPS for the entire cluster (all nine databases), which was within expectations.
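The aggregate figure follows directly from the per-database rate. As a quick check of the arithmetic, using only the numbers quoted above (the function and constant names are illustrative):

```python
def aggregate_tps(databases: int, tps_per_database: float) -> float:
    """Cluster-wide TPS if every database sustains the same rate."""
    return databases * tps_per_database


DATABASES_PER_WORKER = 3   # three PostgreSQL databases per application worker node
TOTAL_DATABASES = 9        # nine databases in total
TPS_PER_DATABASE = 50      # approximate per-database rate reported by testing

worker_nodes = TOTAL_DATABASES // DATABASES_PER_WORKER
cluster_tps = aggregate_tps(TOTAL_DATABASES, TPS_PER_DATABASE)
print(f"{worker_nodes} worker nodes, about {cluster_tps} TPS in aggregate")
```

The multiplication only holds because I/O was spread evenly across the databases; an uneven spread would make the per-database average a poor summary.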

Importantly, OpenShift Container Storage kept the same performance capabilities when moving from a single availability zone to running across multiple availability zones. This finding is remarkable because the devices used by Red Hat Ceph Storage were spread across three instances, with each instance located in a different availability zone. Though latency is typically higher across multiple availability zones, OpenShift Container Storage made the differences in latency essentially moot, providing substantially better resiliency with essentially no tradeoff in performance.

Resilience and business continuity

Resilience is one of the greatest challenges for any enterprise application, and PostgreSQL databases are no exception. Performance during failover is another critical consideration as services must continue to operate with sufficient performance, even while underlying infrastructure services are down or are recovering from failure. Engineers simulated a number of failure scenarios across availability zones, with OpenShift Container Storage running on direct-attached AWS i3en.2xlarge instances.

Failover scenarios included:

- Simulating human error. To simulate human error, engineers ran the workload 10 times within the single availability zone cluster, failing one of the Ceph OSDs in the cluster by deleting one of the OSD pods. On each run, a different OSD pod was chosen at random and deleted at a different point in the run timeline. Because Kubernetes can quickly bring up another OSD to support the same NVMe device that the OSD pod had used before, the impact on performance was minimal. In fact, Kubernetes reacted so quickly to recreate the deleted pod that performance was nearly identical with and without OSD pod deletion.

- Simulating maintenance operations. Planned downtime for maintenance operations like security patching can likewise impact operations. To simulate maintenance operations, engineers rebooted one AWS instance in the storage cluster, which consisted of three AWS i3en.2xlarge instances with two OSDs each. This intervention had the effect of taking two Ceph OSDs down for 1-2 minutes. Kubernetes recognizes when the AWS instance reboots, notices when it is back up, and restarts the pods automatically. Again, actual TPS performance of the database varied only a little, similar to when one of the OSD pods was deleted.

- Simulating maintenance operations with more OSDs. Because Ceph can handle significantly more OSDs than were deployed in the test cluster, engineers wanted to explore the impact on performance with more OSDs. An additional six AWS EC2 i3en.2xlarge instances were added to the OpenShift Container Storage cluster while keeping the number of instances for PostgreSQL the same. This testing showed even better TPS performance per database, lower I/O pause, and faster full recovery with a larger number of OSDs. These results demonstrate the power of decoupled software-defined storage. Storage resources can be scaled independently from computational (database) resources, potentially realizing dramatic performance gains.

- Simulating a node failure. Simulating a node failure was important because the AWS EC2 instances have storage devices that are directly attached for much better performance. However, if the AWS instance is shut down and restarted, it will get new storage devices attached to it (unlike a reboot of an AWS instance with direct-attached storage, which retains the same storage devices). This scenario tested the inherent Ceph data replication of OpenShift Container Storage. Because other copies of the data are distributed on other OSDs, OpenShift Container Storage can automatically incorporate the new direct-attached storage devices into the cluster. Upon restarting an AWS instance, resiliency was restored to 100% (three copies of data). There was no impact on the performance of the running workloads.
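The node-failure scenario can be pictured as a replica count that drops and then recovers. The sketch below is a simplified model, not a description of Ceph internals: it assumes one copy of the data per Availability Zone and defines "resiliency" as healthy copies divided by the target of three, which is one reasonable reading of the 100% figure above.

```python
REPLICA_TARGET = 3  # full resiliency in the test meant three copies of the data


def resiliency_percent(healthy_copies: int, target: int = REPLICA_TARGET) -> float:
    """Share of the target replica count that is currently healthy."""
    return 100.0 * min(healthy_copies, target) / target


# Assumption: one copy per Availability Zone of the multi-zone test cluster.
copies = {"us-west-2a": True, "us-west-2b": True, "us-west-2c": True}

# The instance in one zone is shut down; its direct-attached devices come back empty.
copies["us-west-2b"] = False
degraded = resiliency_percent(sum(copies.values()))

# Recovery: the surviving copies are used to repopulate the new devices.
copies["us-west-2b"] = True
restored = resiliency_percent(sum(copies.values()))

print(f"degraded: {degraded:.0f}%, restored: {restored:.0f}%")
```

In this model the workload keeps running on the two surviving copies while the third is rebuilt, which is consistent with the observation that running workloads saw no performance impact.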

For full details on the performance testing with Crunchy Data PostgreSQL and OpenShift Container Storage, see the paper "Performance and resilience for PostgreSQL: Crunchy Data and Red Hat OpenShift Container Storage."

Conclusion

Coupled with cloud provider storage options, Red Hat OpenShift Container Storage provides a flexible storage platform for PostgreSQL databases. The platform demonstrates consistent performance, improving over AWS EBS gp2 volumes even when OpenShift Container Storage uses underlying EBS gp2 volumes for storage.

The platform provides the ability to utilize higher-performance EC2 i3en direct-attached volumes for PostgreSQL databases, and to employ them across availability zones. For small and large databases alike, the platform provides automatic failover across multiple availability zones, offering comparable performance to non-replicated cloud provider storage options, even under failure conditions.

What to read next:

- Solution overview: "Data resiliency for PostgreSQL: Crunchy Data PostgreSQL on Red Hat OpenShift Container Storage."

- Learn more about Red Hat OpenShift Container Storage 4 on OpenShift.com.

- Catch the replay of "All Things Data: Optimizing Crunchy PostgreSQL with OCS" on the OpenShift Channel on YouTube.

- Follow the Red Hat Cloud Storage and Data Services channel on the Red Hat Blog.

About the author

Sagy Volkov is a former performance engineer at ScaleIO, where he initiated the performance engineering group and the ScaleIO enterprise advocates group and architected the ScaleIO storage appliance, reporting to the CTO/founder of ScaleIO. He is now with Red Hat as a storage performance instigator, concentrating on application performance (mainly database and CI/CD pipelines) and application resiliency on Rook/Ceph.

He has previously spoken at Cloud Native Storage Day (CNS), DevConf 2020, EMC World, and the Red Hat booth at KubeCon.
