There's a lot to manage when deploying and maintaining software. This includes virtual machine (VM) provisioning, patching, authentication and authorisation, web load-balancing, storage integration and redundancy, networking, security, backup and recovery, and auditing. On top of all that, you also need to manage compliance and governance across all those activities. Ideally, a modern architecture can make this simple for you.

It can be difficult to integrate all of the tools needed to provide these functions, and to ensure that they all seamlessly and efficiently interoperate with each other. The reality is that you often run into problems with integrating different systems:

- Scalability: How easily can you scale components of your architecture vertically or horizontally as load on the system increases?

- Decoupling: Can you replace one of your system components with a similar product with minimal change or outage? Ideally, each of your systems should not care what other systems are implementing elsewhere in the architecture.

- Lifecycling: When you need to update one of your systems, can that be done with zero or minimal impact to the rest of the architecture? Redundancy, high availability and disaster recovery are vital components of a resilient architecture design.

- Architectural flexibility: Expanding on the idea of a decoupled system, the components of your architecture should not care what the other components are. They should also not care where those components are. Of course, network latency, performance and regional availability are factors, but whether a given component is running in a public cloud, a private cloud on-premises, a co-location facility or at the edge, should not affect the behaviour of other system components.

GAMES Architectural Patterns

Every organisation is different. Each one has its own requirements, but there is typically a set of common considerations when supporting a specific business function:

- Location: Can it be on-premises? Can it be in the cloud? If so, which type of cloud: private or public?

- Software: On a physical server, in a VM, in a container or consumed as Software-as-a-Service (SaaS)?

- Integration: Direct connection, API calls and other forms of interaction with the wider organisation

Based on interactions with customers and companies over the years, we have found that the following five components and principles provide a simple, scalable and flexible architecture for deploying and managing software:

- GitOps (config-as-code)

- API

- Microservices

- Event-driven functions through a broker

- Shared Services Platform

GitOps and config-as-code

GitOps, or config-as-code, is a way of managing code (application functionality and configuration) and infrastructure using Git repositories as the single source of truth. The key points of such an approach are:

- Infrastructure and application configuration versioned in Git

- Automatic synchronization of configuration from Git to endpoints

- Drift detection, visualization and correction

- Precise control over synchronization order for complex rollouts

- Rollback and rollforward to any Git commit

- Manifest templating support

- Insight into synchronization status and history

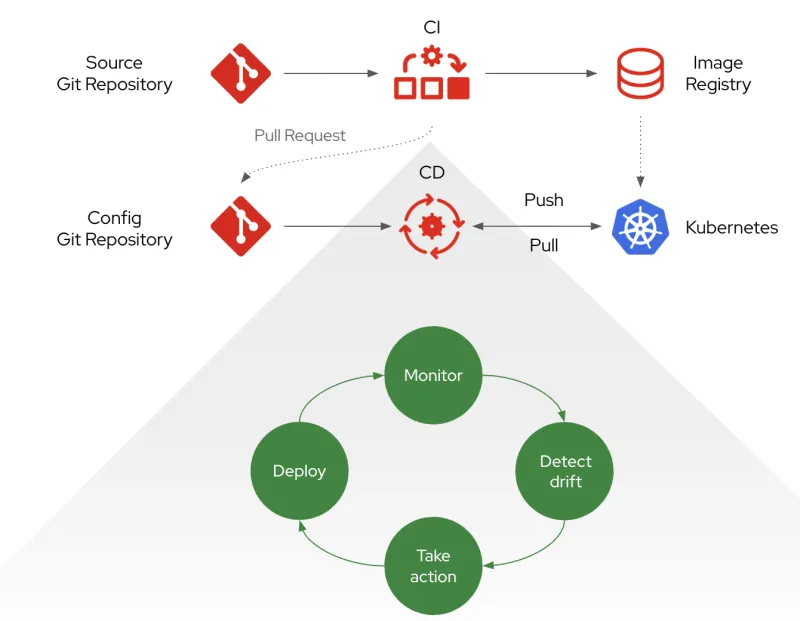

A common tool for implementing GitOps on Kubernetes is ArgoCD. A high level diagram describing the GitOps application delivery model is presented below.

The benefits of this approach include the following:

- GitOps allows you to combat configuration drift. The application configuration and the actual platform configuration are kept aligned with Git repositories containing strict definitions. In case of discrepancy, GitOps realigns the live state with what is configured in Git

- The build toolchains (which produce the actual software) can be decoupled from the deployment environments. Different development teams can use different tools to build their artifacts (one team using Jenkins, for example, while another uses Azure DevOps), but the deployment of those artifacts into the various environments is handled with a single tool (ArgoCD)

- There is no longer any need for a “God Mode” toolchain with access to all software artifacts. This makes security teams much happier!
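The drift detection and correction described above can be sketched in a few lines. This is only an illustration of the idea, not how ArgoCD works internally: the flat dictionaries stand in for manifests declared in Git and for the live platform state, and the names `detect_drift` and `reconcile` are hypothetical.

```python
from typing import Any

# Hypothetical flat configuration: setting name -> value.
# Real GitOps tools compare full manifests, not flat dictionaries.
Config = dict[str, Any]

_MISSING = object()  # marks a key that is absent on one side


def detect_drift(desired: Config, live: Config) -> dict[str, tuple[Any, Any]]:
    """Return {key: (desired_value, live_value)} for every key that differs."""
    drift = {}
    for key in sorted(desired.keys() | live.keys()):
        want = desired.get(key, _MISSING)
        have = live.get(key, _MISSING)
        if want != have:
            drift[key] = (want, have)
    return drift


def reconcile(desired: Config, live: Config) -> Config:
    """Return a corrected copy of the live state; the Git definition wins."""
    corrected = dict(live)
    for key, (want, _have) in detect_drift(desired, live).items():
        if want is _MISSING:
            del corrected[key]      # not declared in Git, so prune it
        else:
            corrected[key] = want   # realign to the declared value
    return corrected
```

For example, with `desired = {"replicas": 3}` and `live = {"replicas": 5, "debug": True}`, `detect_drift` reports both `replicas` and `debug`, and `reconcile` returns a state equal to `desired`.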

API

An application programming interface (API) is a published definition of methods for interacting with a software component. This allows a system to expose only a select set of features. By adhering to the contract of a programmable interface, a consumer (sometimes a human, and sometimes another application or program) of those features can access the underlying service without being coupled to a particular implementation of that service.

The decoupling of components (that is, the API contract as opposed to the actual implementation of this contract) is key to the use of an API. The underlying services can be changed, upgraded or migrated to new providers without impacting the higher-level functions that consume them.
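A minimal sketch of this separation between contract and implementation follows. Every name in it (`InventoryAPI`, `DictInventory`, `LedgerInventory`, `can_fulfil`) is hypothetical and chosen only for illustration; the point is that the consumer depends on the contract alone.

```python
from typing import Protocol


class InventoryAPI(Protocol):
    """The published contract: the only thing a consumer depends on."""

    def stock_level(self, sku: str) -> int: ...


class DictInventory:
    """One implementation: stock levels held in a lookup table."""

    def __init__(self, levels: dict[str, int]) -> None:
        self._levels = levels

    def stock_level(self, sku: str) -> int:
        return self._levels.get(sku, 0)


class LedgerInventory:
    """Another implementation: stock derived from (sku, delta) movements."""

    def __init__(self, movements: list[tuple[str, int]]) -> None:
        self._movements = movements

    def stock_level(self, sku: str) -> int:
        return sum(delta for item, delta in self._movements if item == sku)


def can_fulfil(api: InventoryAPI, sku: str, quantity: int) -> bool:
    """Consumer code: unaware of which implementation sits behind the API."""
    return api.stock_level(sku) >= quantity
```

Swapping `DictInventory` for `LedgerInventory` requires no change to `can_fulfil`, which is the property that lets the underlying service be replaced or migrated independently.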

There are, broadly speaking, two classes of API:

- External API: The goal is to increase Net Promoter Score (NPS), reduce churn, and drive revenue through increased service consumption

- Internal API: The goal is to reduce cost and support innovation

Organizations enforce the concept of internal and external by defining policy and security around the publishing of an API. One way to enforce this policy is with an API gateway. An API gateway provides a consistent set of security features, such as authentication, authorization, audit logs and rate limiting. It also provides analytics on API calls made through the gateway, which provides insight into how value is distributed across the systems.

These capabilities ensure that only authorized people or systems can access an API, and that they do so both securely and according to any commercial contracts that an organization might have in place.
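To make the gateway's role more concrete, here is a small in-process sketch covering three of the features mentioned above: authentication (by API key), rate limiting and audit logging. Authorization and analytics are left out for brevity. The `Gateway` class, its fields and the HTTP-like status codes are assumptions made for this example and do not reflect any particular gateway product.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Gateway:
    backend: Callable[[str], str]            # the protected API
    api_keys: dict[str, str]                 # API key -> client name
    limit: int = 3                           # max calls per window, per client
    window_seconds: float = 60.0
    clock: Callable[[], float] = time.monotonic
    audit_log: list[tuple[str, str, int]] = field(default_factory=list)
    _calls: dict[str, list[float]] = field(default_factory=dict)

    def handle(self, api_key: str, path: str) -> tuple[int, str]:
        # Authentication: unknown keys never reach the backend.
        client = self.api_keys.get(api_key)
        if client is None:
            return self._respond("anonymous", path, 401, "unauthorized")

        # Rate limiting: keep only the calls made inside the current window.
        now = self.clock()
        recent = [t for t in self._calls.get(client, [])
                  if now - t < self.window_seconds]
        if len(recent) >= self.limit:
            self._calls[client] = recent
            return self._respond(client, path, 429, "rate limit exceeded")

        recent.append(now)
        self._calls[client] = recent
        return self._respond(client, path, 200, self.backend(path))

    def _respond(self, client: str, path: str, status: int,
                 body: str) -> tuple[int, str]:
        # Audit logging: every request is recorded, whether allowed or not.
        self.audit_log.append((client, path, status))
        return status, body
```

The `clock` parameter is injectable so the rate limiter can be exercised without waiting for real time to pass.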

There are several benefits to using an API:

- One of the key drivers is the decoupling of the implementation of the API from the actual definition of the service itself

- The API system itself can be hosted on any type of infrastructure, and easily migrated or spread between environments. This helps provide independence and portability

- Because it offers a contract between endpoints, an API allows each component to be lifecycled individually (as opposed to having to lifecycle all endpoints at the same time)

Microservices

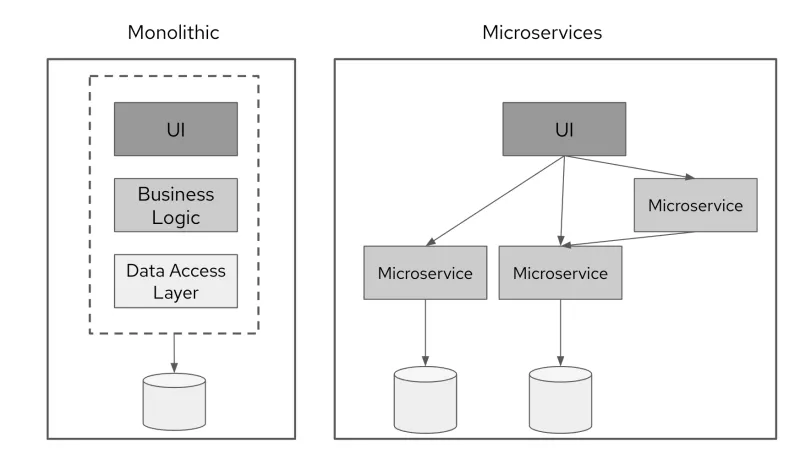

Microservices are a type of architecture for software development, usually contrasted with monolithic architecture. They are also strongly associated with best practices for cloud-native technologies (developing, deploying or consuming software in the cloud).

Instead of having all functions and services within a single application, locked together and operating as a single unit (a monolith), a microservice-based application consists of a collection of independent services communicating through lightweight APIs. The functions of the application can then be deployed, managed and run independently.

When elements of an application are segregated in this way, it allows for development and operations teams to work concurrently, without getting in the way of one another.
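As a rough sketch of two independent services communicating through a lightweight API, the example below runs a tiny "pricing" service over HTTP and an "ordering" function that knows only its address and JSON shape. The service names, the `/price/<sku>` route and the price data are all hypothetical, and only the Python standard library is used.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class PriceHandler(BaseHTTPRequestHandler):
    """A tiny 'pricing' service exposing GET /price/<sku> as JSON."""

    PRICES = {"widget": 250}  # hypothetical data, in cents

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        body = json.dumps({"sku": sku, "cents": self.PRICES.get(sku)}).encode()
        self.send_response(200 if sku in self.PRICES else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # keep the example quiet
        pass


def start_pricing_service() -> ThreadingHTTPServer:
    """Start the pricing service on a free local port, in the background."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def order_total(host: str, port: int, sku: str, quantity: int) -> int:
    """The 'ordering' side: depends only on the pricing service's API."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/price/{sku}")
        response = conn.getresponse()
        if response.status != 200:
            raise LookupError(f"no price for {sku}")
        payload = json.load(response)
    finally:
        conn.close()
    return payload["cents"] * quantity
```

After `server = start_pricing_service()`, the port is available as `server.server_address[1]` and can be passed to `order_total`. Because the two sides share nothing but the HTTP contract, either one could be redeployed or rewritten without touching the other.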

The accompanying diagram shows the difference between a monolithic application and a microservices application.

One of the key technologies underpinning microservices is containerization. Containers make it easy to move an application between environments (dev, test, production and so on). Containerization is a key trend in the independent software vendor (ISV) market, with more and more software being delivered as containerized applications by default.

There are several benefits to using microservices and containers for an application:

- Better resource usage: Containers only package the libraries and configuration specifically needed for the application being run, which helps (among other things) with scalability

- Shorter development lifecycles: Development teams can rebuild their development and testing environments rapidly, without waiting for lengthy infrastructure provisioning

- Portability: A container image packages the application together with its user-space dependencies, so it can run unchanged on any host with a compatible container runtime, without being recompiled or reconfigured

- Improved security: The scope of tools and configuration provided in a container can be reduced to only include those necessary for the application, reducing the potential attack surface

Event-driven functions through a broker

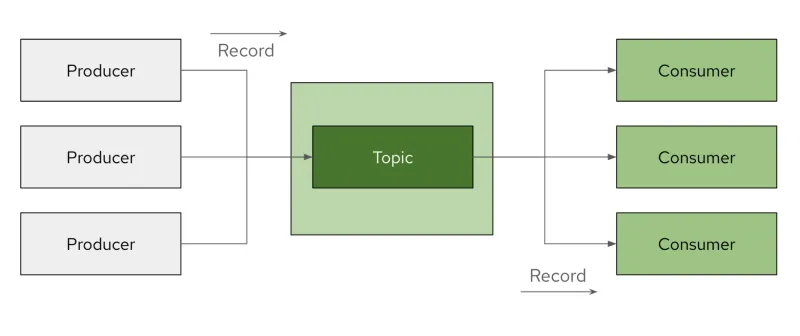

An event broker is responsible for brokering large amounts of data, quickly. It allows data to be classified by topic so that applications interested in a specific topic can retrieve only relevant information.

Traditionally, each data type is mapped directly to a specific application (logs in security, performance metrics in assurance and so on). Any other application requiring access to this information must then integrate with the application that holds it. This can become cumbersome and costly. With a broker in place, a set of publishers and consumers publish or consume data from specific topics, simplifying the overall access to data.

One example of brokering technology is Kafka. A simplified view of the Kafka system components is shown in the accompanying diagram.

Similar to the use of an API, the use of a broker allows for decoupling between event endpoints (the producers and consumers).

The idea is to allow the component responsible for processing incoming information to do so at its own pace, while also providing flexibility for systems pushing information on a specific topic. For example, this could be used during a transition between two systems, or during migration when an existing system publishing on a topic is moved to a different infrastructure (during a cloud migration, perhaps).

There are several benefits to such an approach:

- Resiliency: A component can resume from a known good point after an outage

- Decoupling: The notification of a specific event is separate from the consumption of it by one or more systems. This is called multiplexing

- Scalability: You can scale endpoints (consumers and producers) independently of one another. The broker acts as a buffer between them
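The three benefits above can be illustrated with a toy in-memory broker. This is a sketch of the general topic-and-offset idea only; it is not Kafka's API, and the `Broker` class with its `publish`, `poll` and `commit` methods is hypothetical.

```python
from collections import defaultdict


class Broker:
    """In-memory stand-in for a topic-based event broker."""

    def __init__(self) -> None:
        # topic -> append-only list of events
        self._topics: dict[str, list[str]] = defaultdict(list)
        # (consumer, topic) -> index of the next unprocessed event
        self._offsets: dict[tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, event: str) -> None:
        """Producers append events without knowing who will read them."""
        self._topics[topic].append(event)

    def poll(self, consumer: str, topic: str, max_events: int = 10) -> list[str]:
        """Return unprocessed events for this consumer, at its own pace.

        The offset is not advanced here, so a consumer that crashes before
        committing sees the same events again after it restarts.
        """
        start = self._offsets[(consumer, topic)]
        return self._topics[topic][start:start + max_events]

    def commit(self, consumer: str, topic: str, count: int) -> None:
        """Record that `count` more events were processed successfully."""
        key = (consumer, topic)
        self._offsets[key] = min(self._offsets[key] + count,
                                 len(self._topics[topic]))
```

Each consumer keeps its own offset per topic, so several systems can read the same events independently, and a consumer that stops after committing two events resumes from the third.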

Shared Services Platform

Containers are a key technology underpinning modern architectures. Interestingly, all the platform functions discussed earlier can be deployed as containerized applications: GitOps, API gateways, event brokers and the microservices themselves.

That means you don't have to deploy a group of business applications or functions on different types of infrastructure (dedicated servers or virtual machines), nor manage them independently with their own tools and processes. It's now possible to provide a common platform to manage all these applications and functions in a single comprehensive environment.

This approach simplifies the management of applications by offloading services to a single platform. Ideally, this platform is also decoupled from the infrastructure, providing a complete set of repeatable patterns and deployment.

Conclusion

The use of microservices allows for smaller, modular components, which in turn require a rapid, easy-to-integrate method of communication. A GAMES event-driven architecture that incorporates GitOps, API, microservices and event brokers can provide a full shared services platform. This supports:

- Transparent migration of individual components in the platform

- Multiple sources and consumers of events

- Integration of components running on completely separated infrastructure

In modern software deployments, providing a platform that allows for simpler, more efficient management is imperative. In our next article, we'll examine two demonstrations that incorporate virtual machines, Red Hat Ansible Automation Platform, and more.

About the author

Simon Delord is a Solution Architect at Red Hat. He works with enterprises on their container/Kubernetes practices and driving business value from open source technology. The majority of his time is spent on introducing OpenShift Container Platform (OCP) to teams and helping break down silos to create cultures of collaboration. Prior to Red Hat, Simon worked with many Telco carriers and vendors in Europe and APAC specializing in networking, data-centres and hybrid cloud architectures. Simon is also a regular speaker at public conferences and has co-authored multiple RFCs in the IETF and other standards bodies.
