The Red Hat Enterprise Linux (RHEL) kernel provides hundreds of settings that can be customized. Administrators frequently customize these settings to improve performance or as part of security hardening.

Implementing consistent kernel settings across a large RHEL environment can be challenging without automation. Red Hat introduced the kernel_settings RHEL System Role to automate the implementation of settings under /proc/sys, /sys, and other settings such as CPU affinity. The role uses tuned to implement these settings on hosts.

RHEL System Roles are a collection of Ansible roles and modules that are included in RHEL to help provide consistent workflows and streamline the execution of manual tasks. For more information on kernel settings in RHEL, refer to the RHEL documentation on Managing, monitoring, and updating the kernel.

Environment overview

In my example environment, I have a control node system named controlnode running RHEL 8 and two managed nodes, rhel8-server1 and rhel8-server2, both of which are also running RHEL 8.

In this scenario, these systems have the following workloads:

- rhel8-server1 is running workload1 and workload2
- rhel8-server2 is running workload1 and workload3

Each of these three workloads, workload1, workload2, and workload3, has associated kernel settings that I would like to set. Note that these kernel settings are for example purposes only.

- workload1 systems in my environment should have the following sysctl settings:
  - net.core.busy_read = 50
  - net.core.busy_poll = 50
  - net.ipv4.tcp_fastopen = 3
- workload2 systems in my environment should have the following sysctl settings:
  - vm.swappiness = 1
- workload3 systems in my environment should have the following sysctl settings:
  - vm.overcommit_memory = 2
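Each sysctl name listed above corresponds to a file under /proc/sys, with the dots in the name becoming directory separators. As a minimal sketch of that mapping (the `sysctl_to_path` helper is a hypothetical name used only for illustration, and it assumes the name contains no component with an embedded dot, such as a VLAN interface name):

```shell
#!/bin/sh
# Hypothetical helper: convert a sysctl name such as vm.swappiness
# into the /proc/sys path that backs it.
sysctl_to_path() {
    # Replace every dot in the name with a slash and prefix /proc/sys/.
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

sysctl_to_path net.core.busy_read
# prints: /proc/sys/net/core/busy_read
```

On a running system, reading that file should show the same value that `sysctl net.core.busy_read` reports.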

I’ve already set up an Ansible service account on the three servers, named ansible, and have SSH key authentication set up so that the ansible account on controlnode can log in to each of the systems. In addition, the ansible service account has been configured with access to the root account via sudo on each host.

I’ve also installed the rhel-system-roles and ansible packages on controlnode. For more information on these tasks, refer to the Introduction to RHEL System Roles post.

In this environment, I’m using a RHEL 8 control node, but you can also use Ansible automation controller or Red Hat Satellite as your RHEL system roles control node. Ansible automation controller provides many advanced automation features and capabilities that are not available when using a RHEL control node.

For more information on Ansible automation controller and its functionality, refer to this overview page. For more information on using Satellite as your RHEL system roles control node, refer to Automating host configuration with Red Hat Satellite and RHEL System Roles.

Defining the inventory file, playbook and role variables

From the controlnode system, the first step is to create a new directory structure to hold the playbook, inventory file and variable files.

[ansible@controlnode ~]$ mkdir -p kernel_settings

I need to define an Ansible inventory file to list and group the hosts that I want the kernel_settings System Role to configure. I’ll create the main inventory file at kernel_settings/inventory.yml with the following content:

all:
  children:
    workload1:
      hosts:
        rhel8-server1:
        rhel8-server2:
    workload2:
      hosts:
        rhel8-server1:
    workload3:
      hosts:
        rhel8-server2:

If you are using Ansible automation controller as your control node, this inventory can be imported into Red Hat Ansible Automation Platform via an SCM project (for example, GitHub or GitLab) or by using the awx-manage utility as specified in the documentation.

This inventory defines three groups that describe the workloads, and assigns the hosts to the appropriate groups:

- workload1 group contains the rhel8-server1 and rhel8-server2 hosts
- workload2 group contains the rhel8-server1 host
- workload3 group contains the rhel8-server2 host

Or from the host perspective:

- rhel8-server1 is a member of the workload1 and workload2 inventory groups
- rhel8-server2 is a member of the workload1 and workload3 inventory groups
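To make the host perspective concrete, the following sketch derives the settings each host should end up with from its group memberships. It is only a model built from the example data in this post: the `membership` and `settings` tables and the `expected_for_host` function are hypothetical names, not part of Ansible or the role.

```shell
#!/bin/sh
# Hypothetical tables mirroring the inventory groups and the
# per-workload sysctl settings from the environment overview.
membership='workload1 rhel8-server1
workload1 rhel8-server2
workload2 rhel8-server1
workload3 rhel8-server2'

settings='workload1 net.core.busy_read 50
workload1 net.core.busy_poll 50
workload1 net.ipv4.tcp_fastopen 3
workload2 vm.swappiness 1
workload3 vm.overcommit_memory 2'

expected_for_host() {
    # $1 = host name. Prints "name = value" for every setting of every
    # group the host belongs to, sorted by setting name.
    printf '%s\n---\n%s\n' "$membership" "$settings" |
    awk -v h="$1" '
        $0 == "---"    { part = 2; next }                   # switch tables
        part != 2      { if ($2 == h) member[$1] = 1; next } # membership rows
        ($1 in member) { printf "%s = %s\n", $2, $3 }        # settings rows
    ' | sort
}

expected_for_host rhel8-server1
```

For rhel8-server1 this lists the three workload1 settings plus vm.swappiness, and for rhel8-server2 it lists the workload1 settings plus vm.overcommit_memory.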

The kernel_settings role, by default, will implement sysctl (settings under /proc/sys) and sysfs (settings under /sys) kernel settings in an additive method. This means the role can be called to set some kernel settings, and then can be called again to set additional settings, while retaining the settings from the first time it was run.

If both runs of the role specified the same kernel setting, the last run of the role would replace the value of the setting.
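The additive behavior can be modeled in a few lines of shell. This is a sketch of the semantics described above, not the role's actual implementation; `merge_settings` is a hypothetical helper, and the first vm.swappiness value is made up to show an override.

```shell
#!/bin/sh
# Hypothetical model of additive runs: each run contributes
# "name = value" lines, and a later line replaces an earlier one
# that has the same name.
merge_settings() {
    # Reads "name = value" lines on stdin; prints the final value per name.
    awk -F ' *= *' '
        NF == 2 { v[$1] = $2 }
        END     { for (k in v) printf "%s = %s\n", k, v[k] }
    ' | sort
}

# The first two lines stand for a first run; the last two for a second
# run that adds one setting and replaces vm.swappiness.
merge_settings <<'EOF'
vm.swappiness = 10
net.core.busy_read = 50
vm.overcommit_memory = 2
vm.swappiness = 1
EOF
# prints:
# net.core.busy_read = 50
# vm.overcommit_memory = 2
# vm.swappiness = 1
```

The setting from the first run that was not repeated (net.core.busy_read) survives, while the repeated one takes the value from the last run.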

Because the kernel settings are additive, I will structure the playbook with a play for each of the workloads. For each of these plays, I’ll use the hosts line to limit the play to hosts in the corresponding workload inventory group, and will use the vars_files line to load the variable file that defines that workload's kernel settings.

I’ll create the playbook at kernel_settings/kernel_settings.yml with the following content:

- name: Configure workload1 tunables
  hosts: workload1
  vars_files: workload1.yml
  roles:
    - rhel-system-roles.kernel_settings

- name: Configure workload2 tunables
  hosts: workload2
  vars_files: workload2.yml
  roles:
    - rhel-system-roles.kernel_settings

- name: Configure workload3 tunables
  hosts: workload3
  vars_files: workload3.yml
  roles:
    - rhel-system-roles.kernel_settings

If you are using Ansible automation controller as your control node, you can import this Ansible playbook into Red Hat Ansible Automation Platform by creating a Project, following the documentation provided here. It is very common to use Git repos to store Ansible playbooks. Ansible Automation Platform stores automation in units called Jobs which contain the playbook, credentials and inventory. Create a Job Template following the documentation here.

Next, I’ll define the variable files for each of the workloads in files named workload1.yml, workload2.yml, and workload3.yml. These variable files will define each workload's desired kernel settings.

The README.md file for the kernel_settings role, available at /usr/share/doc/rhel-system-roles/kernel_settings/README.md, contains important information about the role, including a list of available role variables and how to use them.

In this example, each workload will use the kernel_settings_sysctl variable to define the sysctl related settings for the workload.

The kernel_settings/workload1.yml variable file will contain:

kernel_settings_sysctl:
  - name: net.core.busy_read
    value: 50
  - name: net.core.busy_poll
    value: 50
  - name: net.ipv4.tcp_fastopen
    value: 3

The kernel_settings/workload2.yml variable file will contain:

kernel_settings_sysctl:
  - name: vm.swappiness
    value: 1

And the kernel_settings/workload3.yml variable file will contain:

kernel_settings_sysctl:
  - name: vm.overcommit_memory
    value: 2
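If the desired settings already exist as `name = value` lines (for example, in a sysctl configuration file), a small filter can produce a variable file in the same name/value list format used above. This is a sketch under that assumption; `sysctl_to_vars` is a hypothetical helper, and it does no quoting or validation of values.

```shell
#!/bin/sh
# Hypothetical converter: turn "name = value" lines into a
# kernel_settings_sysctl list in the format shown in this post.
sysctl_to_vars() {
    awk -F ' *= *' '
        BEGIN { print "kernel_settings_sysctl:" }
        /^[ \t]*[#;]/ || /^[ \t]*$/ { next }   # skip comments and blank lines
        NF >= 2 { printf "  - name: %s\n    value: %s\n", $1, $2 }
    '
}

sysctl_to_vars <<'EOF'
# workload2 settings
vm.swappiness = 1
EOF
# prints:
# kernel_settings_sysctl:
#   - name: vm.swappiness
#     value: 1
```

Redirecting the output to a file such as workload2.yml would give a starting point that should still be reviewed by hand.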

Running the playbook

At this point, everything is in place, and I’m ready to run the playbook. For this demonstration, I’m using a RHEL control node and will run the playbook from the command line. I’ll use the cd command to move into the kernel_settings directory, and then use the ansible-playbook command to run the playbook.

[ansible@controlnode ~]$ cd kernel_settings
[ansible@controlnode kernel_settings]$ ansible-playbook kernel_settings.yml -b -i inventory.yml

I specify that the kernel_settings.yml playbook should be run, that it should escalate to root (the -b flag), and that the inventory.yml file should be used as my Ansible inventory (the -i flag).

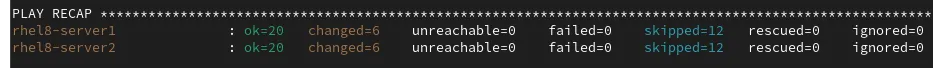

After the playbook completes, I verified that there were no failed tasks:

If you are using Ansible automation controller as your control node, you can launch the job from the automation controller web interface.

Validating the configuration

I’ll start by checking the settings on rhel8-server1:

[ansible@controlnode kernel_settings]$ ssh rhel8-server1 sudo sysctl -a | egrep "net.core.busy_read|net.core.busy_poll|net.ipv4.tcp_fastopen |swappiness|vm.overcommit_memory"
net.core.busy_poll = 50
net.core.busy_read = 50
net.ipv4.tcp_fastopen = 3
vm.overcommit_memory = 0
vm.swappiness = 1

The desired configuration was properly set, and vm.overcommit_memory (which should only apply to servers in the workload3 inventory group, which rhel8-server1 is not a member of) is still at its default value (zero).

I’ll also check the settings on rhel8-server2:

$ ssh rhel8-server2 sudo sysctl -a | egrep "net.core.busy_read|net.core.busy_poll|net.ipv4.tcp_fastopen |swappiness|vm.overcommit_memory"
net.core.busy_poll = 50
net.core.busy_read = 50
net.ipv4.tcp_fastopen = 3
vm.overcommit_memory = 2
vm.swappiness = 30

Again, the desired configuration was properly set, and vm.swappiness (which should only apply to servers in the workload2 inventory group, which rhel8-server2 is not a member of) is still at its default value (30).
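Rather than comparing the output by eye, the check can be scripted. The sketch below compares a file of expected `name = value` lines against captured `sysctl` output; `check_settings` and the temporary file names are hypothetical, and the sample data is copied from the rhel8-server1 output above.

```shell
#!/bin/sh
# Hypothetical checker: report expected settings that are missing from,
# or differ in, captured sysctl output. Returns non-zero on any problem.
check_settings() {
    # $1 = file with expected settings, $2 = file with actual sysctl output
    awk -F ' *= *' '
        NR == FNR        { actual[$1] = $2; next }   # first file: actual values
        !($1 in actual)  { printf "MISSING %s\n", $1; bad = 1; next }
        actual[$1] != $2 { printf "MISMATCH %s: want %s, got %s\n", $1, $2, actual[$1]; bad = 1 }
        END              { exit bad + 0 }
    ' "$2" "$1"
}

tmp=$(mktemp -d)
cat > "$tmp/expected" <<'EOF'
net.core.busy_read = 50
net.core.busy_poll = 50
net.ipv4.tcp_fastopen = 3
vm.swappiness = 1
EOF
cat > "$tmp/actual" <<'EOF'
net.core.busy_poll = 50
net.core.busy_read = 50
net.ipv4.tcp_fastopen = 3
vm.overcommit_memory = 0
vm.swappiness = 1
EOF

check_settings "$tmp/expected" "$tmp/actual" && echo "rhel8-server1: OK"
# prints: rhel8-server1: OK
rm -rf "$tmp"
```

In practice, the actual file could be produced with the same `ssh rhel8-server1 sudo sysctl -a` command shown earlier, redirected to a file.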

Conclusion

The kernel_settings System Role can help you quickly and easily implement custom kernel settings across your RHEL environment. These custom kernel settings can be used to improve performance or security on RHEL systems.

We offer many RHEL System Roles that can help automate other important aspects of your RHEL environment. To explore additional roles, review the list of available RHEL System Roles and start managing your RHEL servers in a more efficient, consistent and automated manner today.

You can try out the kernel_settings system role in the building a standard operating environment with RHEL System Roles interactive lab environment.

About the author

Brian Smith is a product manager at Red Hat focused on RHEL automation and management. He has been at Red Hat since 2018, previously working with public sector customers as a technical account manager (TAM).
