Introduction

In part one of this series, we saw three approaches to fully automate the provisioning of certificates and create end-to-end encryption. Based on feedback from the community suggesting the post was a bit too theoretical and not immediately actionable, this article will illustrate a practical example. You can see a recording of the demo here.

The Scenario

To demonstrate this approach, we are going to use the Customer -> Preference -> Recommendation microservices application that is being used in the Red Hat Istio tutorial. Within the tutorial, encryption is handled by Istio. In our case, encryption will be configured and handled by the application pods.

We assume that in our environment implements two pieces of Public Key Infrastructure (PKI):

- External PKI

- Internal PKI

For external PKI, certificates must be created to facilitate trust from all browsers and operating system to the external facing services. Companies usually use Certificate Authorities (CA’s) such as Digicert, Verisign etc. for this type of certificates. These companies bill by the number of certificates requested, so we need to keep the external certificate number limited. In our example we are going to use Let’s Encrypt as the external PKI. Let’s Encrypt is a trusted CA maintained by a consortium of companies that provides certificates free of charge with the goal of creating a secure Internet; the catch is that the certificates provisioned via Let’s Encrypt are very short lived, so the only way to use this service effectively is if you automate your certificate provisioning process (this is not an issue as it is the primary goal of this scenario).

The internal PKI is used to establish a trust between internal services (services that do not need to be visible for external consumers and or systems). There are many products that can be installed on premise to act as CAs. One solution that we encounter frequently is Venafi. Red Hat also offers its own CAs product called FreeIPA. Unlike Let’s Encrypt, we can request as many certificates as we want to with this CA because the cost here is not tied to the number of certificate requests, so it is ideal for development and other fast-changing environments. In our solution, we are going to use a RootCA owned by our certificate operator for the internal PKI.

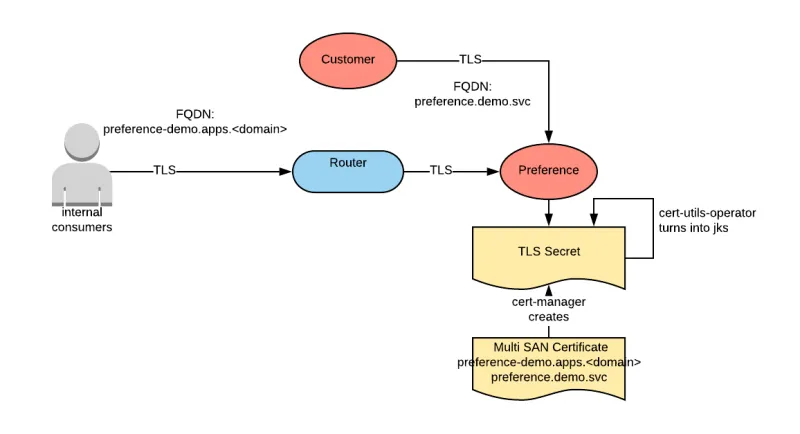

The target architecture is as follows:

The Customer service is exposed to the external clients and it calls the Preference service. The Preference service is also exposed outside of the cluster, but only to internal systems. It calls the Recommendation service. The recommendation service is an internal to the cluster service and not exposed externally.

For automating the certificate provisioning process, we are going to use three operators:

- Cert-manager: this operator is responsible for provisioning certificates. It interfaces with the various CAs and brokers certificate provisioning or renewal requests. Provisioned certificates are placed into Kubernetes secrets.

- Cert-util-operator: this operator provides utility functions around certificates. In particular, it will be used to inject certificates into OpenShift routes and turn PEM-formatted certificates into java-readable keystores.

- Reloader: this operator is used to trigger a deployment when a configmap or secret changes. We are going to use it to restart our applications when certificates get renewed.

Step by Step Instructions

The following describe the steps necessary for deploying the solution.. These steps align to the assets found within this repository.

These steps are presented in a sequential way to allow for better understanding of all the components needed for a successful implementation. In a real world scenario, a developer would configure their CI/CD pipeline to deploy the resources that have been properly set up with the correct annotations/configurations.

This example has been tested on OpenShift Container Platform (OCP) 4.1.x running on Amazon Web Services (AWS). You will need to make some adjustments to the Let’s Encrypt certificate issuer if you change environment (more specifically, if you change the target DNS provider).

To begin, clone the project repository from a directory of your choosing and then enter the project directory:

git clone https://github.com/raffaelespazzoli/end-to-end-encryption-democd end-to-end encryption demo

Installing the operators

These steps may vary as more and more operators are added to the OperatorHub. To support OCP 3.x in which OperatorHub is not available, Helm-based steps are being used.

Helm is being used in a tiller-less fashion, so you will need only the helm CLI.

Cert-manager

oc new-project cert-manager

oc label namespace cert-manager certmanager.k8s.io/disable-validation=true

oc apply --validate=false -f https://github.com/jetstack/cert-manager/releases/download/v0.9.0/cert-manager-openshift.yaml

oc patch deployment cert-manager -n cert-manager -p '{"spec":{"template":{"spec":{"containers":[{"name":"cert-manager","args":["--v=2","--cluster-resource-namespace=$(POD_NAMESPACE)","--leader-election-namespace=$(POD_NAMESPACE)","--dns01-recursive-nameservers=8.8.8.8:53"]}]}}}}'

Cert-utils operator

oc new-project cert-utils-operator

helm repo add cert-utils-operator https://redhat-cop.github.io/cert-utils-operator

helm repo update

export cert_utils_chart_version=$(helm search cert-utils-operator/cert-utils-operator | grep cert-utils-operator/cert-utils-operator | awk '{print $2}')

helm fetch cert-utils-operator/cert-utils-operator --version ${cert_utils_chart_version}

helm template cert-utils-operator-${cert_utils_chart_version}.tgz --namespace cert-utils-operator | oc apply -f - -n cert-utils-operator

rm cert-utils-operator-${cert_utils_chart_version}.tgz

Reloader

oc new-project reloader

helm repo add stakater https://stakater.github.io/stakater-charts

helm repo update

export reloader_chart_version=$(helm search stakater/reloader | grep stakater/reloader | awk '{print $2}')

helm fetch stakater/reloader --version ${reloader_chart_version}

helm template reloader-${reloader_chart_version}.tgz --namespace reloader --set isOpenshift=true | oc apply -f - -n reloader

rm reloader-${reloader_chart_version}.tgz

Configuring the certificate issuers

Next, configure the two issuers for cert-manager; one for Let’s Encrypt and the other for the internal PKI:

Let’s Encrypt issuer

export EMAIL=

oc apply -f issuers/aws-credentials.yaml

sleep 5

export AWS_ACCESS_KEY_ID=$(oc get secret cert-manager-dns-credentials -n cert-manager -o jsonpath='{.data.aws_access_key_id}' | base64 -d)

export REGION=$(oc get nodes --template='{{ with $i := index .items 0 }}{{ index $i.metadata.labels "failure-domain.beta.kubernetes.io/region" }}{{ end }}')

export zoneid=$(oc get dns cluster -o jsonpath='{.spec.publicZone.id}')

envsubst < issuers/lets-encrypt-issuer.yaml | oc apply -f - -n cert-manager

Internal PKI issuer

oc apply -f issuers/internal-issuer.yaml -n cert-manager

Deploying the application

oc new-project demooc apply -f customer/kubernetes/Deployment.yml -n demo

oc apply -f customer/kubernetes/Service.yml -n demooc apply -f preference/kubernetes/Deployment.yml -n demo

oc apply -f preference/kubernetes/Service.yml -n demo

oc apply -f recommendation/kubernetes/Deployment.yml -n demo

oc apply -f recommendation/kubernetes/Service.yml -n demo

If you check the status of the application at this point (for example with oc get pods), you will notice that all the pods are failing. This is due to the fact that these java microservices expect to be able to open a keystore configured in the application properties, but this keystore is not available yet. It will be injected as a secret in the following steps.

Securing the Customer Service

We are going to secure the customer service with a re-encrypt route. The certificate exposed by the route is going to be signed by the Let’s Encrypt CA, the certificate used by the pod is going to be signed by the internal CA.

## create the route

oc create route reencrypt customer --service=customer --port=https -n demo

## create the external certificate

namespace=demo route=customer host=$(oc get route $route -n $namespace -o jsonpath='{.spec.host}') envsubst < certificates/ACME-certificate.yaml | oc apply -f - -n demo

## create internal certificate

service=customer namespace=demo envsubst < certificates/internal-certificate.yaml | oc apply -f - -n demo;

## annotate the secret to create keystores

oc annotate secret service-customer -n demo cert-utils-operator.redhat-cop.io/generate-java-keystores=true;

## mount the secret to the pod

oc set volume deployment customer -n demo --add=true --type=secret --secret-name=service-customer --name=keystores --mount-path=/keystores --read-only=true

## annotate the route to use the certificate

oc annotate route customer -n demo cert-utils-operator.redhat-cop.io/certs-from-secret=route-customer

## make the route trust the internal certificate

oc annotate route customer -n demo cert-utils-operator.redhat-cop.io/destinationCA-from-secret=service-customer

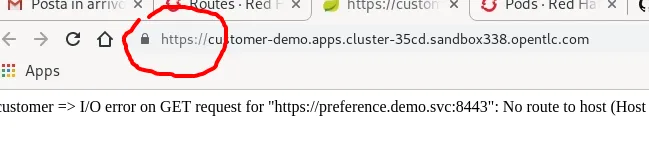

At this point, if you open a browser and navigate to the route, you should see an error (because the preference service is still not working). However, you should observe that the certificate is trusted by the browser.

To find the customer route hostname type the following:

oc get route customer -n demo -o jsonpath='{.spec.host}'

Securing the Preference Service

The preference service needs to be accessible from outside of the cluster by internal systems and not by external consumers. Due to this, we can use a certificate signed by the internal PKI. This certificate will need to have two Subject Alternate Names (SAN’s): one for the route and one for the internal service name (the latter is used by the customer service). We can mount this certificate on the pod and create a passthrough route to access the pod.

## create the passthrough route

oc create route passthrough preference --service=preference --port=https -n demo

## create the two-SANs certificate

namespace=demo route=preference host=$(oc get route $route -n $namespace -o jsonpath='{.spec.host}') service=preference envsubst < certificates/multiSAN-internal-certificate.yaml | oc apply -f - -n demo

## annotate the secret to create keystores

oc annotate secret route-service-preference -n demo cert-utils-operator.redhat-cop.io/generate-java-keystores=true;

## mount the secret to the pod

oc set volume deployment preference-v1 -n demo --add=true --type=secret --secret-name=route-service-preference --name=keystores --mount-path=/keystores --read-only=true

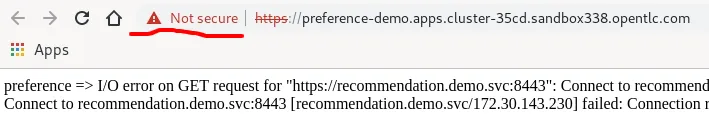

If you navigate using your browser to the preference route, you should see an untrusted certificate.

To find the preference route hostname type the following:

oc get route preference -n demo -o jsonpath='{.spec.host}'

Notice that the customer service trusts calling the preference service because we have configured it’s truststore based on a certificate created with the internal PKI. Since the preference service certificate has also been created with the internal PKI, then trust can be established. The same is true with the Preference to Recommendation connection.

Securing the Recommendation service

In our example, the Recommendation service is an internal service and not exposed outside of the cluster. Given that fact, we can secure it with a certificate signed by the internal PKI.

service=recommendation namespace=demo envsubst < certificates/internal-certificate.yaml | oc apply -f - -n demo;## annotate the secret to create keystores

oc annotate secret service-recommendation -n demo cert-utils-operator.redhat-cop.io/generate-java-keystores=true;

## mount the secret to the pod

oc set volume deployment recommendation-v1 -n demo --add=true --type=secret --secret-name=service-recommendation --name=keystores --mount-path=/keystores --read-only=true

Now, if we navigate our browser to the customer service, everything should be working correctly.

To find the customer route hostname type the following:

oc get route customer -n demo -o jsonpath='{.spec.host}'

Certificate Renewal

So far so good. Consider what happens when a certificate needs to be renewed. Cert-manager monitors the certificates it has issued and will renew them when it’s they are about to expire (this functionality can be customized to trigger the certificate renewal by changing the renewBefore field). At renewal time, the following occurs:

- Cert-manager contacts the configured CA to get a new certificate.

- Cert-manager replaces the secret content with the new certificate files.

- If a route is configured to use the specified certificate, the cert-utils operator updates the route TLS configuration.

- If a certificate secret is annotated to expose the certificate in keystore format, the cert-utils operator refreshes the keystore and truststore fields.

- If a certificate secret is mounted by a pod, then Kubernetes updates the content of the secret in the pod’s file system.

At this point, if an application is able to reconfigure itself based on the secret content being refreshed, then the certificate is successfully renewed. However, many applications still read their configuration at start-up and don’t monitor for subsequent changes. For those situations we can use the Reloader operator to have an application redeployed when the certificate secret is changed.

Let’s apply this configuration to enable this functionality to all of our deployments:

oc annotate deployment customer -n demo secret.reloader.stakater.com/reload=service-customer;oc annotate deployment preference-v1 -n demo secret.reloader.stakater.com/reload=route-service-preference;

oc annotate deployment recommendation-v1 -n demo secret.reloader.stakater.com/reload=service-recommendation;

To demonstrate that this is working as anticipated, we can configure cert-manager to renew the preference certificate every minute:

oc patch certificate preference -n demo -p '{"spec":{"duration":"1h1m","renewBefore":"1h"}}' --type=mergeoc delete secret route-service-preference -n demo

sleep 5

oc annotate secret route-service-preference -n demo cert-utils-operator.redhat-cop.io/generate-java-keystores=true;

At this point, one should be able to observe that the preference pods are redeployed every minute or so.

Conclusions

In this article, we explored an example of how one can automate the entire certificate lifecycle (provisioning, renewal, retirement) for applications while at the same time integrating with existing CAs (external and or on premise). By being able to combine features from several operators, we were able to complete this task in a reliable and repeatable fashion.

Sull'autore

Raffaele is a full-stack enterprise architect with 20+ years of experience. Raffaele started his career in Italy as a Java Architect then gradually moved to Integration Architect and then Enterprise Architect. Later he moved to the United States to eventually become an OpenShift Architect for Red Hat consulting services, acquiring, in the process, knowledge of the infrastructure side of IT.

Currently Raffaele covers a consulting position of cross-portfolio application architect with a focus on OpenShift. Most of his career Raffaele worked with large financial institutions allowing him to acquire an understanding of enterprise processes and security and compliance requirements of large enterprise customers.

Raffaele has become part of the CNCF TAG Storage and contributed to the Cloud Native Disaster Recovery whitepaper.

Recently Raffaele has been focusing on how to improve the developer experience by implementing internal development platforms (IDP).

Altri risultati simili a questo

Deploy Confidential Computing on AWS Nitro Enclaves with Red Hat Enterprise Linux

Red Hat OpenShift sandboxed containers 1.11 and Red Hat build of Trustee 1.0 accelerate confidential computing across the hybrid cloud

What Is Product Security? | Compiler

Technically Speaking | Security for the AI supply chain

Ricerca per canale

Automazione

Novità sull'automazione IT di tecnologie, team e ambienti

Intelligenza artificiale

Aggiornamenti sulle piattaforme che consentono alle aziende di eseguire carichi di lavoro IA ovunque

Hybrid cloud open source

Scopri come affrontare il futuro in modo più agile grazie al cloud ibrido

Sicurezza

Le ultime novità sulle nostre soluzioni per ridurre i rischi nelle tecnologie e negli ambienti

Edge computing

Aggiornamenti sulle piattaforme che semplificano l'operatività edge

Infrastruttura

Le ultime novità sulla piattaforma Linux aziendale leader a livello mondiale

Applicazioni

Approfondimenti sulle nostre soluzioni alle sfide applicative più difficili

Virtualizzazione

Il futuro della virtualizzazione negli ambienti aziendali per i carichi di lavoro on premise o nel cloud