This is the third post on OpenShift on OpenStack and availability zones. In the first part we introduced OpenStack AZs and presented different OpenShift deployment options regarding AZs. In the second part we explained the ‘best case scenario’, using OpenShift on OpenStack with multiple Nova AZs and multiple Cinder AZs where the AZ names match.

In this last post in the series we will explain how to configure OpenShift on OpenStack with multiple Nova AZs and a single Cinder AZ, and present our final conclusions.

PART III - Scenario Two: Multiple Nova AZs with a Single Cinder AZ

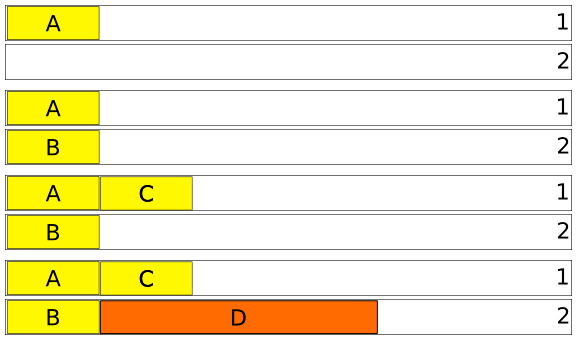

In this part of the blog series, the scenario consists of:

- Three Nova AZs (AZ1, AZ2, AZ3)
- One Cinder AZ (nova)

This is a typical situation for OpenStack and OpenShift administrators: the Nova and Cinder AZ names do not match, PVCs cannot be created because of that difference, and a workaround is needed.

NOTE: Ensure that cross_az_attach = True is set in the [cinder] section of the nova.conf file, so that Cinder volumes can be attached to instances in a different availability zone.
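If you want to confirm the setting programmatically, a minimal Python sketch could parse the nova.conf contents with the standard configparser module. The function name is illustrative, and treating a missing option as enabled assumes Nova's upstream default for [cinder] cross_az_attach (True):

```python
import configparser


def cross_az_attach_enabled(nova_conf_text: str) -> bool:
    """Return True if [cinder] cross_az_attach is set to a true value."""
    # interpolation=None: nova.conf values (e.g. URLs) may contain '%'.
    # strict=False: tolerate repeated keys, keeping the last value.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(nova_conf_text)
    # Assumption: a missing section or option means Nova's default of True.
    return parser.getboolean("cinder", "cross_az_attach", fallback=True)


if __name__ == "__main__":
    sample = "[DEFAULT]\ndebug = false\n\n[cinder]\ncross_az_attach = True\n"
    print(cross_az_attach_enabled(sample))
```

On a real host you would pass it the text of the nova.conf file; its location depends on how the OpenStack cloud was deployed.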

As in our previous scenario, we will demonstrate our use case with the asb-etcd pod. The StorageClass is created at installation time as in scenario one.

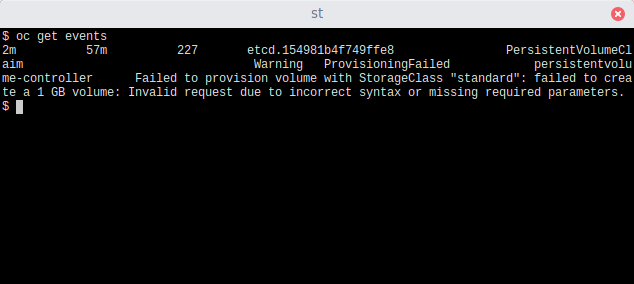

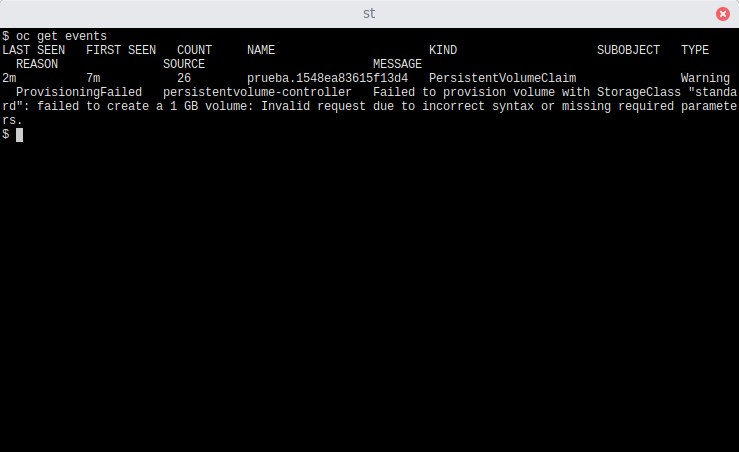

After a successful installation, the pod is in an error state. Looking at the events, we see the error “Invalid request due to incorrect syntax or missing required parameters”.

This means OpenShift tried to create a Cinder volume, but the request was rejected. Let’s take a look at the OpenShift controller logs.

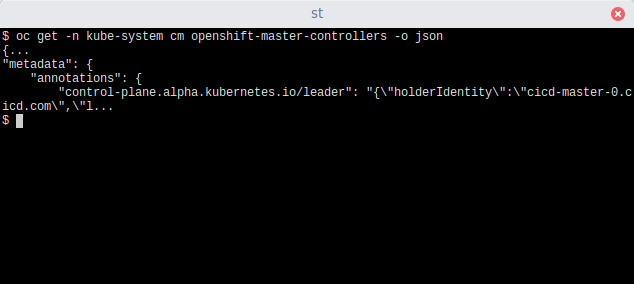

First, check which master host is currently the active OpenShift controller:

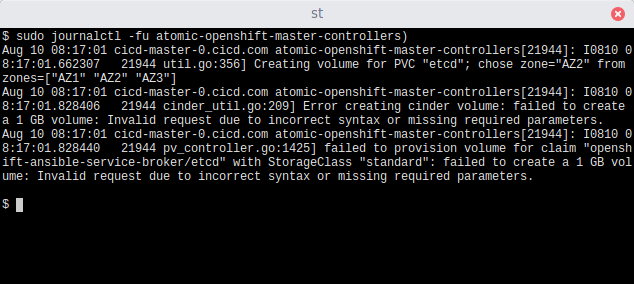

Next, on the cicd-master-0.cicd.com host, take a look at the OpenShift controller logs:

The logs report that volume creation failed with “Invalid request due to incorrect syntax or missing required parameters”, but the first line shows that OpenShift is trying to create the volume in Cinder AZ ‘AZ2’, which doesn’t exist. OpenShift expects Cinder AZs that match the Nova AZs (AZ1, AZ2, AZ3), as in our previous scenario.

To solve this, we tried a few approaches:

- Modifying the StorageClass to specify the Cinder AZ.
- Modifying the scheduler to ignore the Cinder AZ.
- Modifying the OpenStack cloud provider configuration in OpenShift to ignore the Cinder AZ (available since OCP 3.10).

For those in a hurry, the correct answer is Scenario 2C: modifying the OpenStack cloud provider configuration in OpenShift to ignore the Cinder AZ. For those interested in how we reached this conclusion, read on through the other scenarios.

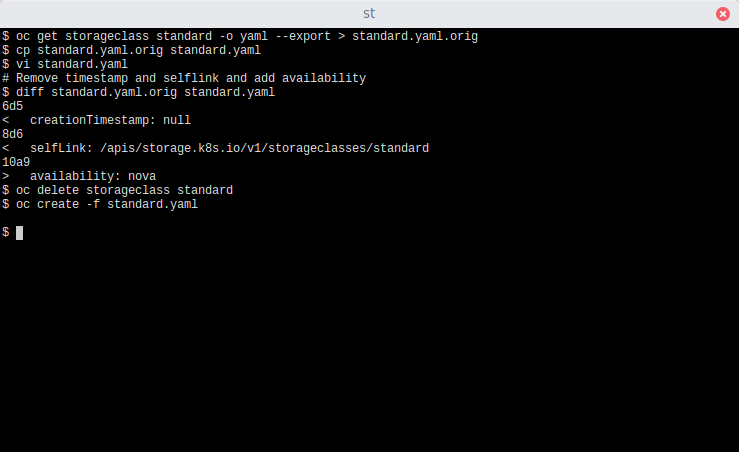

Scenario 2A: Modifying the StorageClass to specify the Cinder AZ

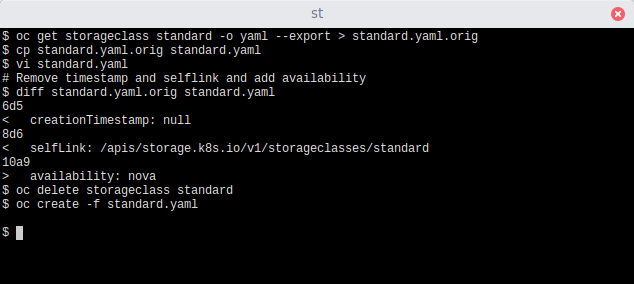

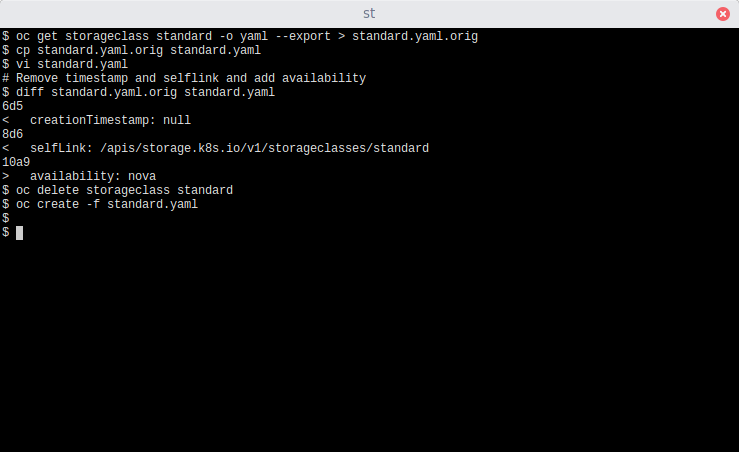

Modifying the parameters of an existing StorageClass is not permitted. Instead, delete the existing StorageClass and create a new one with the ‘availability: nova’ parameter:

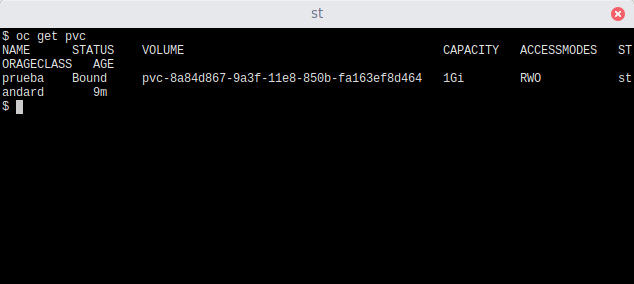

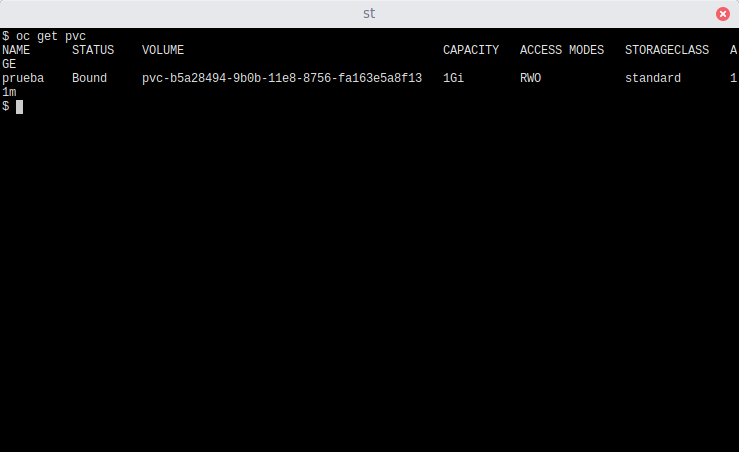
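For reference, the replacement StorageClass can be expressed as a manifest. A minimal Python sketch that builds it as a dict and prints JSON; it assumes the in-tree kubernetes.io/cinder provisioner, reuses the ‘standard’ class name that appears in the events later in this post, and marks the class as the cluster default, which is an assumption about how the installer-created class was configured:

```python
import json


def cinder_storage_class(name: str, availability: str, fs_type: str = "xfs") -> dict:
    """Build a StorageClass manifest for the in-tree Cinder provisioner."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {
            "name": name,
            # Assumption: the original class was the default one.
            "annotations": {"storageclass.kubernetes.io/is-default-class": "true"},
        },
        "provisioner": "kubernetes.io/cinder",
        "parameters": {
            "fsType": fs_type,
            # Cinder AZ in which new volumes are created.
            "availability": availability,
        },
    }


if __name__ == "__main__":
    print(json.dumps(cinder_storage_class("standard", "nova"), indent=2))
```

The printed JSON could be piped to `oc create -f -` once the old class has been removed with `oc delete storageclass standard`.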

Once the new StorageClass is in place, a new volume is provisioned for the pending PVC, as the oc get pvc command shows.

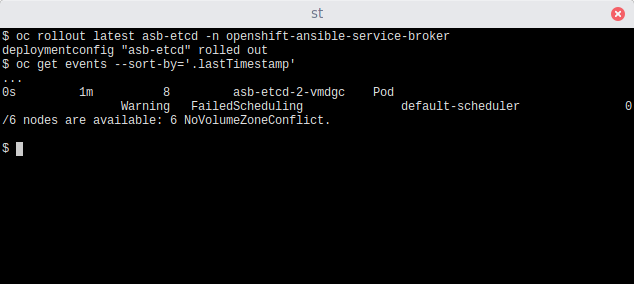

With the new StorageClass in place, let us re-attempt the failed deployment of the asb-etcd pod using the oc rollout command.
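The `oc rollout latest` subcommand triggers a new deployment of a DeploymentConfig. A small Python sketch that only builds the command (it does not execute it); the asb-etcd DeploymentConfig name is inferred from the asb-etcd-2-vmdgc pod name in the log that follows, which is an assumption:

```python
import shlex
from typing import Iterable, List, Tuple


def rollout_commands(deployment_configs: Iterable[Tuple[str, str]]) -> List[List[str]]:
    """Build `oc rollout latest` commands for (namespace, name) pairs."""
    return [
        ["oc", "rollout", "latest", f"dc/{name}", "-n", namespace]
        for namespace, name in deployment_configs
    ]


if __name__ == "__main__":
    for argv in rollout_commands([("openshift-ansible-service-broker", "asb-etcd")]):
        print(shlex.join(argv))
```

Each argument list could be passed to `subprocess.run(argv, check=True)` on a host where `oc` is logged in to the cluster.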

The OpenShift controller logs show:

... Aug 07 08:54:27 cicd-master-0.cicd.com atomic-openshift-master-controllers[19164]: I0807 08:54:27.441451 19164 event.go:218] Event(v1.ObjectReference{Kind:"Pod", Namespace:"openshift-ansible-service-broker", Name:"asb-etcd-2-vmdgc", UID:"04871049-9a41-11e8-99b9-fa163efacf4c", APIVersion:"v1", ResourceVersion:"19197", FieldPath:""}): type: 'Warning' reason: 'FailedScheduling' 0/6 nodes are available: 6 NoVolumeZoneConflict. ...

Unfortunately, the new attempt fails: the scheduler cannot find a suitable node because no node is labeled with the ‘nova’ availability zone (Cinder AZ != Nova AZ).
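Why 0/6? The NoVolumeZoneConflict predicate compares the zone label on the PV with the zone label on each node. A simplified Python model, assuming the beta zone label key used by Kubernetes releases of that era and ignoring the region label and multi-zone PV labels:

```python
from typing import Dict, List

# Assumption: zone label key used by the Kubernetes version behind OCP 3.x.
ZONE_LABEL = "failure-domain.beta.kubernetes.io/zone"


def nodes_without_zone_conflict(
    pv_labels: Dict[str, str], nodes: Dict[str, Dict[str, str]]
) -> List[str]:
    """Return the names of nodes that pass a simplified NoVolumeZoneConflict check."""
    pv_zone = pv_labels.get(ZONE_LABEL)
    if pv_zone is None:
        # A PV without a zone label does not restrict scheduling.
        return sorted(nodes)
    fitting = []
    for name, labels in nodes.items():
        node_zone = labels.get(ZONE_LABEL)
        # Nodes without a zone label are not filtered by this predicate.
        if node_zone is None or node_zone == pv_zone:
            fitting.append(name)
    return sorted(fitting)


if __name__ == "__main__":
    # Six hypothetical nodes spread across the three Nova AZs.
    nodes = {f"node-{i}": {ZONE_LABEL: f"AZ{i % 3 + 1}"} for i in range(6)}
    print(nodes_without_zone_conflict({ZONE_LABEL: "nova"}, nodes))
```

With six nodes spread over AZ1, AZ2 and AZ3 and a PV labeled ‘nova’, the function returns an empty list, mirroring the “0/6 nodes are available” message above.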

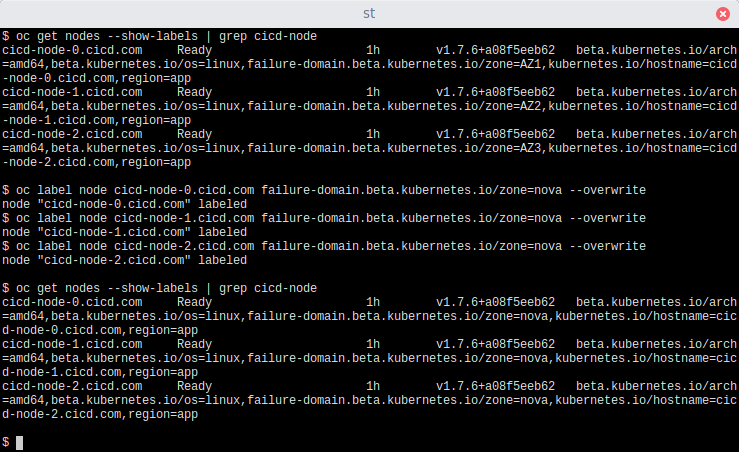

What if we relabel the nodes to pretend they belong to the ‘nova’ AZ instead of their real one? (This is not recommended.)

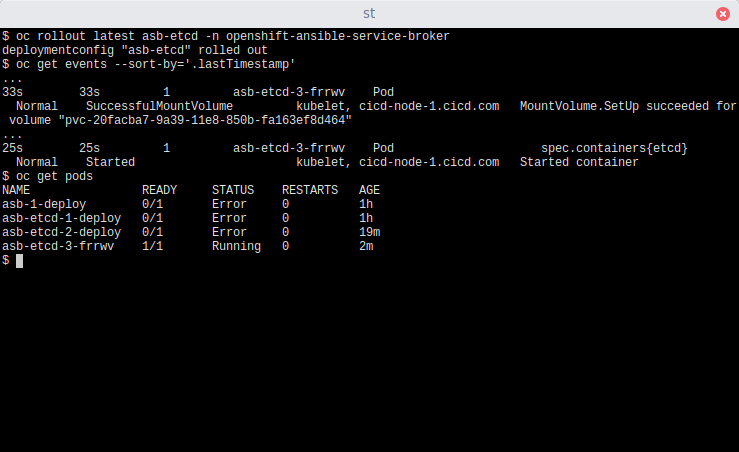
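Relabeling is done with `oc label` and `--overwrite`. A small Python sketch that only builds the commands (it does not run them); the node names are hypothetical apart from following the cicd.com naming seen in the logs, and the label key is the beta zone label assumed for this Kubernetes version:

```python
import shlex
from typing import List

# Assumption: zone label key used by the Kubernetes version behind OCP 3.x.
ZONE_LABEL = "failure-domain.beta.kubernetes.io/zone"


def label_commands(kind: str, names: List[str], zone: str) -> List[List[str]]:
    """Build `oc label` commands that overwrite the zone label on each object."""
    return [
        ["oc", "label", kind, name, f"{ZONE_LABEL}={zone}", "--overwrite"]
        for name in names
    ]


if __name__ == "__main__":
    # Hypothetical node names; list the real ones with `oc get nodes`.
    nodes = ["cicd-node-0.cicd.com", "cicd-node-1.cicd.com", "cicd-node-2.cicd.com"]
    for argv in label_commands("node", nodes, "nova"):
        print(shlex.join(argv))
```

The same helper with kind "pv" produces the command for relabeling a persistent volume instead of a node.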

Let’s re-attempt the failed deployment of the asb-etcd pod using the oc rollout command once more.

It is working!

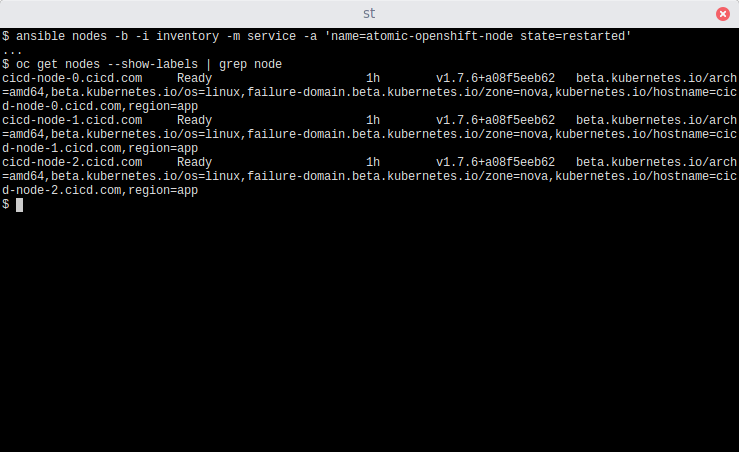

Let’s restart the node processes to see if they are relabeled automatically:

Nothing seems to happen. Would a reboot relabel the nodes?

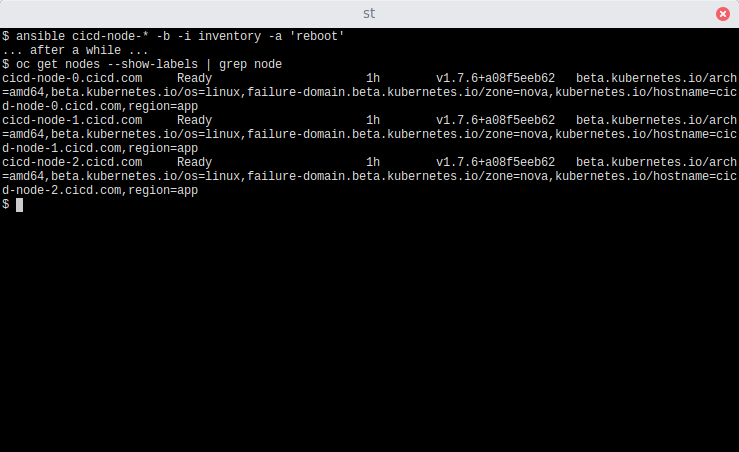

Still working… let’s remove the node objects and restart the nodes themselves:

After the node objects are removed, the nodes register themselves again automatically and, because they query the OpenStack API, they are labeled with the Nova AZ they are running in, overwriting the fake ‘nova’ AZ name we used before. Relabeling the nodes is therefore not a proper solution.

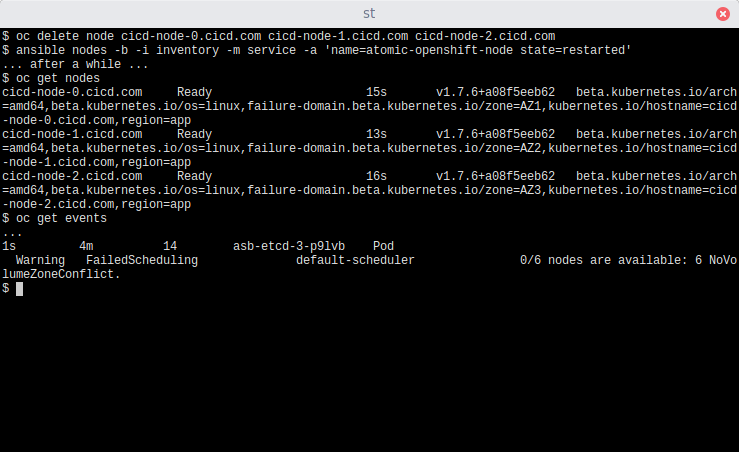

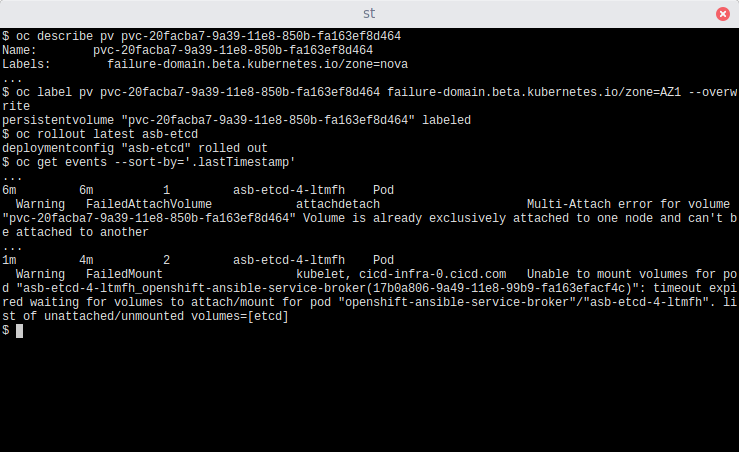

What about relabeling the volume instead?

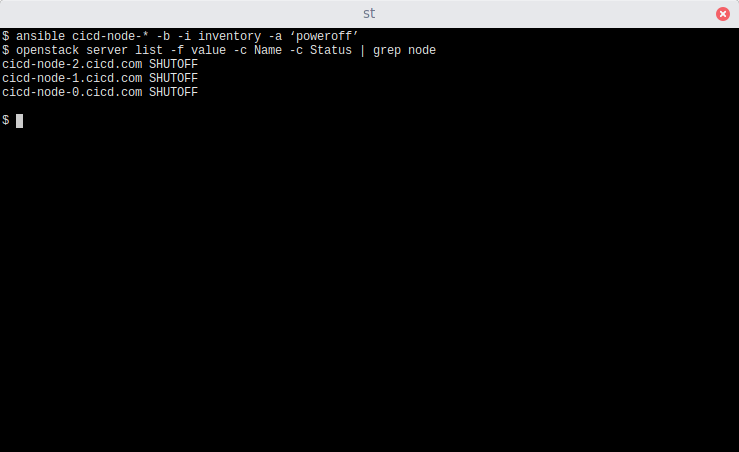

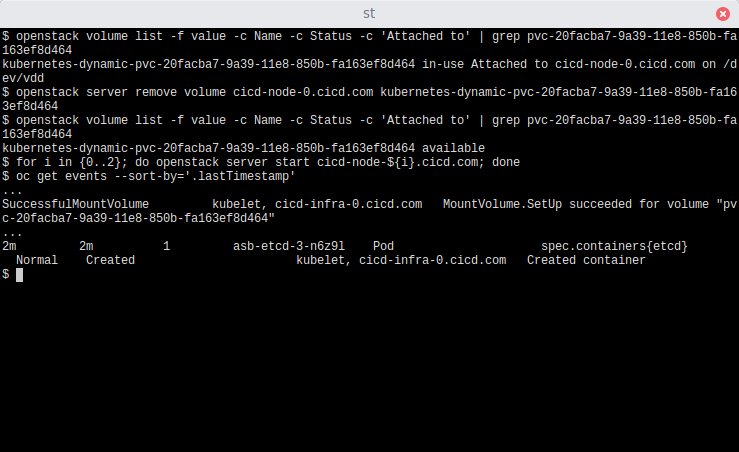

In this case, it fails because of timeouts while trying to unmount the volumes. For testing purposes, we will shut down the nodes, detach the volume manually with the OpenStack CLI, and restart the instances:
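A minimal sketch of that detach procedure, building (not running) the OpenStack CLI commands. It relies on the kubernetes-dynamic-&lt;PV name&gt; volume naming described in the next paragraph; the server name is hypothetical and the helper names are illustrative:

```python
import shlex
from typing import List


def cinder_volume_name(pv_name: str) -> str:
    """Volume name used for a dynamically provisioned PV: kubernetes-dynamic-<PV name>."""
    return f"kubernetes-dynamic-{pv_name}"


def detach_commands(server: str, pv_name: str) -> List[List[str]]:
    """Stop the instance, detach the PV's Cinder volume, then start the instance again."""
    volume = cinder_volume_name(pv_name)
    return [
        ["openstack", "server", "stop", server],
        ["openstack", "server", "remove", "volume", server, volume],
        ["openstack", "server", "start", server],
    ]


if __name__ == "__main__":
    # The PV name comes from this example; the server name is hypothetical.
    for argv in detach_commands("cicd-node-0", "pvc-20facba7-9a39-11e8-850b-fa163ef8d464"):
        print(shlex.join(argv))
```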

Our PV ID was ‘pvc-20facba7-9a39-11e8-850b-fa163ef8d464’, so the OpenStack volume should be named kubernetes-dynamic-${PVID}, as shown in the next figure:

Relabeling works here only because the PV had already been created. At installation time it is not a valid solution, because the PV, and therefore its label, does not exist yet, so we cannot recommend this approach.

Scenario 2A Conclusion

While relabeling the nodes and volumes can technically work, it requires multiple workarounds that are not acceptable in production. In this example, the PV was given the fake label AZ1 and the pod started on a node within AZ1. However, the PV had to be relabeled after it was created, and creating the PV in the first place required relabeling the nodes themselves, so this solution is not recommended. Let’s take a look at the next method.

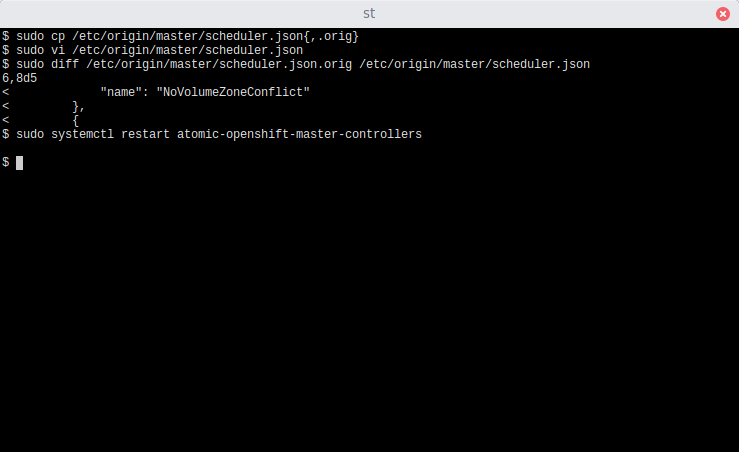

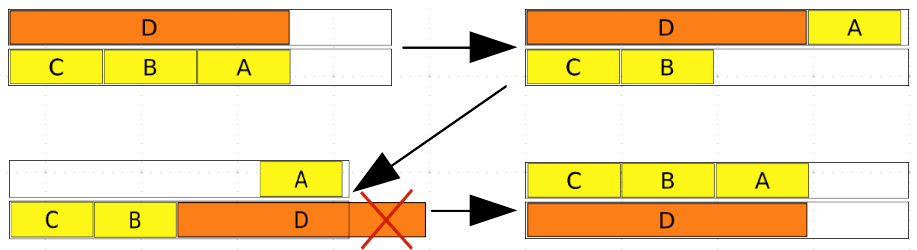

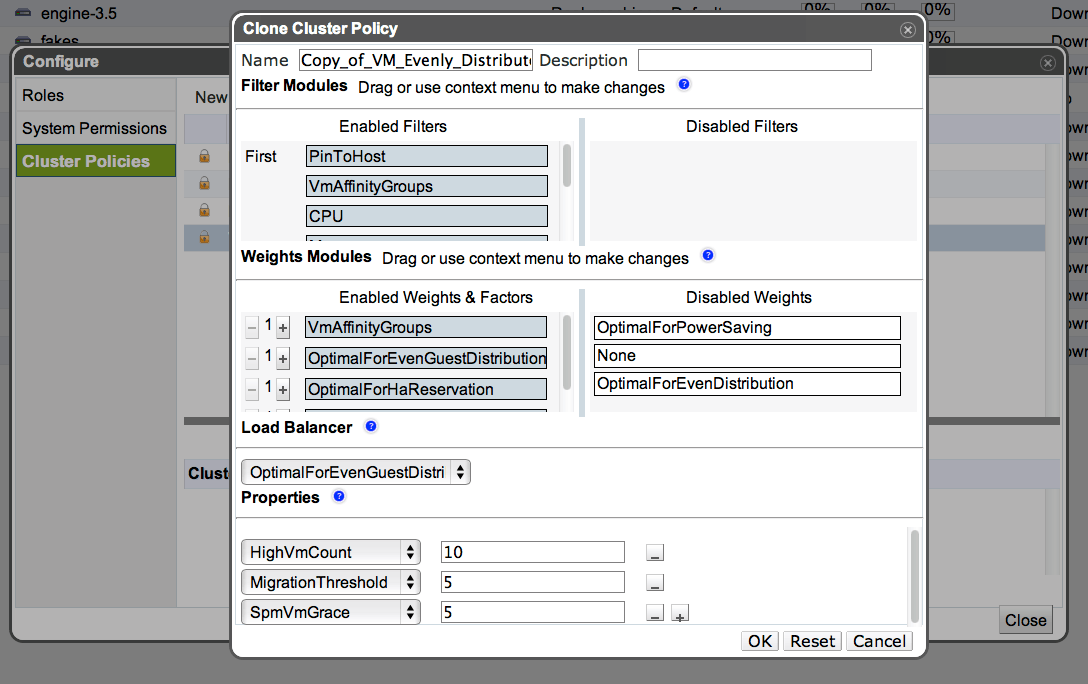

Scenario 2B: Modifying the Scheduler to ignore the AZ

Modifying the scheduler is a risky process and should be avoided unless no other options are available. The following step is shown for completeness but is not recommended, as there are better options than modifying the scheduler (a key component of OpenShift), which we will see later. See the "Default Scheduling" section of the Red Hat OpenShift Container Platform docs for more information.

For this use case, let’s modify the default scheduler to ignore the volume AZ, starting from a fresh environment as before.

On every OpenShift master host:
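The scheduler change consists of removing the NoVolumeZoneConflict predicate from the scheduler policy. A minimal Python sketch, assuming the policy is a JSON document with a top-level "predicates" list (the OCP 3.x scheduler.json format) stored at the default path shown, which you should verify against your master configuration; the helper names are illustrative:

```python
import json
import shutil


def drop_predicate(policy: dict, predicate: str = "NoVolumeZoneConflict") -> dict:
    """Return a copy of a scheduler Policy without the named predicate."""
    updated = dict(policy)  # shallow copy; the input policy is left untouched
    updated["predicates"] = [
        p for p in policy.get("predicates", []) if p.get("name") != predicate
    ]
    return updated


def patch_policy_file(path: str = "/etc/origin/master/scheduler.json") -> None:
    """Back up the policy file, then rewrite it without NoVolumeZoneConflict.

    Assumption: the default path is where the master keeps its scheduler policy.
    """
    shutil.copy2(path, path + ".bak")
    with open(path) as fh:
        policy = json.load(fh)
    with open(path, "w") as fh:
        json.dump(drop_predicate(policy), fh, indent=4)
```

After patching every master, restart the master services so the scheduler reloads its policy.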

The Controller log files will show event errors similar to this output:

1s 1m 8 etcd PersistentVolumeClaim Warning ProvisioningFailed persistentvolume-controller Failed to provision volume with StorageClass "standard": Invalid request due to incorrect syntax or missing required parameters.

Controller logs:

Aug 13 06:25:21 cicd-master-1.cicd.com atomic-openshift-master-controllers[13090]: I0813 06:25:21.787551 13090 util.go:360] Creating volume for PVC "etcd"; chose zone="AZ2" from zones=["AZ1" "AZ2" "AZ3"]

Aug 13 06:25:21 cicd-master-1.cicd.com atomic-openshift-master-controllers[13090]: E0813 06:25:21.960043 13090 openstack_volumes.go:325] Failed to create a 1 GB volume: Invalid request due to incorrect syntax or missing required parameters.
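To spot this mismatch quickly in long controller logs, one option is to extract the zone the provisioner chose together with the candidate list it chose from. A minimal Python sketch, assuming the exact message format shown above (it may differ between versions):

```python
import re
from typing import List, Optional, Tuple

ZONE_CHOICE = re.compile(
    r'Creating volume for PVC "(?P<pvc>[^"]+)"; '
    r'chose zone="(?P<zone>[^"]+)" from zones=\[(?P<zones>[^\]]*)\]'
)


def parse_zone_choice(line: str) -> Optional[Tuple[str, str, List[str]]]:
    """Return (pvc, chosen zone, candidate zones), or None if the line doesn't match."""
    match = ZONE_CHOICE.search(line)
    if match is None:
        return None
    # Candidate zones are printed as quoted strings separated by spaces.
    zones = re.findall(r'"([^"]+)"', match.group("zones"))
    return match.group("pvc"), match.group("zone"), zones
```

Feeding it the output of `journalctl -u atomic-openshift-master-controllers` line by line would surface every zone choice; a chosen zone that is not a Cinder AZ (here, anything other than ‘nova’) points at this problem.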

Let’s modify the StorageClass to include the AZ information to avoid the missing parameter (even if the scheduler will ignore it later):

Verify the successful creation of the PVC:

Reviewing the Controller logs shows the volume was created successfully:

Aug 13 06:26:38 cicd-master-1.cicd.com atomic-openshift-master-controllers[13090]: I0813 06:26:38.310962 13090 openstack_volumes.go:329] Created volume 9301e353-f1a3-4254-95f4-80648007842a in Availability Zone: nova

...

Aug 13 06:27:51 cicd-master-1.cicd.com atomic-openshift-master-controllers[13090]: I0813 06:27:51.820119 13090 reconciler.go:287] attacherDetacher.AttachVolume started for volume "pvc-e669dc86-9ee2-11e8-841f-fa163e08f44d" (UniqueName: "kubernetes.io/cinder/9301e353-f1a3-4254-95f4-80648007842a") from node "cicd-node-0.cicd.com"

Scenario 2B Conclusion

Removing the NoVolumeZoneConflict predicate from the scheduler appears to be a workable solution; however, this procedure is not recommended because there is a better way to mitigate the problem.

Scenario 2C: Modifying the OpenStack Cloud Provider Configuration in OpenShift to ignore the Cinder AZ.

There is a parameter in the openstack.conf file named ‘ignore-volume-az’ that is used to influence availability zone use when attaching Cinder volumes. It ensures the scheduler will ignore the volume AZ and attempt to attach the volume to the proper host even if the Nova AZ name and Cinder AZ name are different.

NOTE: Currently, the OCP installer cannot add the ignore-volume-az parameter, so it has to be added after installation (see this Bugzilla entry for more information).

To test the ignore-volume-az parameter, we follow this workflow:

- Create an openstack.conf file with our environment details and the ignore-volume-az parameter set to true in the [BlockStorage] section of that ini file.
- Copy the updated openstack.conf file to all the masters and nodes.
- Restart the OpenShift master and node services (or, to simplify, just reboot them all).
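The first step of that workflow can be scripted. A minimal Python sketch, using the standard configparser module, that adds ignore-volume-az = true to the [BlockStorage] section of existing openstack.conf contents; the function name is illustrative, and /etc/origin/cloudprovider/openstack.conf as the file location on each host is an assumption about a default OCP 3.x installation:

```python
import configparser
import io


def enable_ignore_volume_az(conf_text: str) -> str:
    """Return openstack.conf content with [BlockStorage] ignore-volume-az = true."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(conf_text)
    if not parser.has_section("BlockStorage"):
        parser.add_section("BlockStorage")
    parser.set("BlockStorage", "ignore-volume-az", "true")
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    # Hypothetical Keystone endpoint, for illustration only.
    print(enable_ignore_volume_az("[Global]\nauth-url = https://keystone.example.com:5000/v3\n"))
```

configparser does not preserve comments when writing, so keep a copy of the original file before replacing it.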

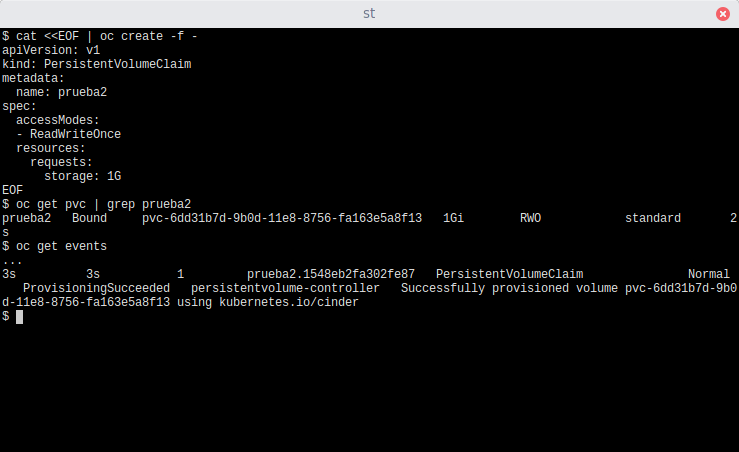

Let’s create a new PVC:

And then use `oc get events` to check:

Let’s modify the StorageClass to add the Cinder AZ name (‘nova’) in the ‘parameters’ section as before:

Verify the successful creation of the PVC:

Notice that if a separate PVC is created, it is provisioned automatically:

Controller logs:

Aug 10 08:50:13 cicd-master-0.cicd.com atomic-openshift-master-controllers[2022]: I0810 08:50:13.936575 2022 openstack_volumes.go:454] Created volume b5a28494-9b0b-11e8-8756-fa163e5a8f13 in Availability Zone: nova Ignore volume AZ: true

Success! The controller log shows that the volume was created and that the volume AZ was ignored.

After those modifications, some Deployments or DeploymentConfigs requiring storage need to be rolled out again, as they will be in a failed state after timing out while waiting for storage.

NOTE: To set the StorageClass parameters at installation time, the availability zone can be configured with the openshift_storageclass_parameters variable: openshift_storageclass_parameters={'fstype': 'xfs', 'availability': 'nova'}

Scenario 2C Conclusions (Recommended)

To successfully run an environment with three Nova AZs (AZ1, AZ2 & AZ3) and a single Cinder AZ (‘nova’), it is required to:

- Define the Cinder AZ in the StorageClass
  - At installation time with the ‘openshift_storageclass_parameters={'fstype': 'xfs', 'availability': 'nova'}’ ansible variable
- Add the ignore-volume-az parameter to the openstack.conf configuration file.
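The two requirements above can be checked together. A minimal Python sketch, assuming you already have the StorageClass as a dict (for example from `oc get storageclass standard -o json`) and the openstack.conf contents as text; the function name is illustrative:

```python
import configparser
from typing import List


def scenario_2c_problems(storage_class: dict, openstack_conf_text: str, cinder_az: str = "nova") -> List[str]:
    """Return a list of missing settings; an empty list means both are in place."""
    problems = []
    if storage_class.get("parameters", {}).get("availability") != cinder_az:
        problems.append(f"StorageClass 'availability' is not {cinder_az!r}")
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(openstack_conf_text)
    # A missing section or option counts as not set.
    if not parser.getboolean("BlockStorage", "ignore-volume-az", fallback=False):
        problems.append("[BlockStorage] ignore-volume-az is not true")
    return problems
```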

Final Conclusions

Looking at the different approaches to the availability zone issue, we can see that certain parameters used during the installation of OpenShift on OpenStack vary depending on the specific architecture. These include:

- ignore-volume-az: if Nova and Cinder use different AZ names
- openshift_storageclass_parameters: to define certain parameters in the default StorageClass (such as ‘availability’: ‘nova’)
- openshift_cloudprovider_openstack_blockstorage_version: if there are issues with Cinder API version auto-detection

Overall, an OpenStack environment that provides the same AZ names for both the Nova and Cinder services gives the best results for a highly available OpenShift environment.

When multiple Cinder AZs are not an option, the next best option for multiple Nova AZs and a single Cinder AZ is to update the openstack.conf file with the environment details and set the ignore-volume-az parameter to true in the [BlockStorage] section of that ini file.
