In the previous blog post in this series we looked at what single root I/O virtualization (SR-IOV) networking is all about and we discussed why it is an important addition to Red Hat Enterprise Linux OpenStack Platform. In this second post we would like to provide a more detailed overview of the implementation, some thoughts on the current limitations, as well as what enhancements are being worked on in the OpenStack community.

Note: this post is not intended to be a full end-to-end configuration guide. Customers with an active subscription are welcome to visit the official article covering SR-IOV Networking in Red Hat Enterprise Linux OpenStack Platform 6 for a complete procedure.

Setting up the Environment

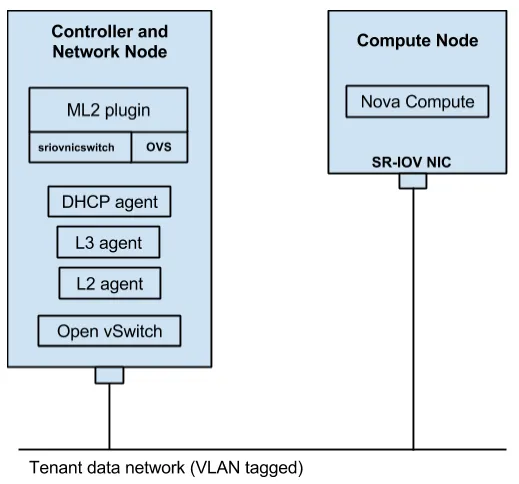

In our small test environment we used two physical nodes: one serves as a Compute node for hosting virtual machine (VM) instances, and the other serves as both the OpenStack Controller and Network node. Both nodes are running Red Hat Enterprise Linux 7.

Compute Node

This is a standard Red Hat Enterprise Linux OpenStack Platform Compute node, running KVM with the Libvirt Nova driver. As the ultimate goal is to provide OpenStack VMs running on this node with access to SR-IOV virtual functions (VFs), SR-IOV support is required on several layers on the Compute node, namely the BIOS, the base operating system, and the physical network adapter. Since SR-IOV completely bypasses the hypervisor layer, there is no need to deploy Open vSwitch or the ovs-agent on this node.

Controller/Network Node

The other node which serves as the OpenStack Controller/Network node includes the various OpenStack API and control services (e.g., Keystone, Neutron, Glance) as well as the Neutron agents required to provide network services for VM instances. Unlike the Compute node, this node still uses Open vSwitch for connectivity into the tenant data networks. This is required in order to serve SR-IOV enabled VM instances with network services such as DHCP, L3 routing and network address translation (NAT). This is also the node in which the Neutron server and the Neutron plugin are deployed.

Topology Layout

For this test we are using a VLAN tagged network connected to both nodes as the tenant data network. Currently there is no support for SR-IOV networking on the Network node, so this node still uses a normal network adapter without SR-IOV capabilities. The Compute node on the other hand uses an SR-IOV enabled network adapter (from the Intel 82576 family in our case).

Configuration Overview

Preparing the Compute node

- The first thing we need to do is to make sure that Intel VT-d is enabled in the BIOS and activated in the kernel. The Intel VT-d specifications provide hardware support for directly assigning a physical device to a virtual machine.

- Recall that the Compute node is equipped with an Intel 82576 based SR-IOV network adapter. For proper SR-IOV operation, we need to load the network adapter driver (igb) with the right parameters to set the maximum number of Virtual Functions (VFs) we want to expose. Different network cards support different values here, so you should consult the proper documentation from the card vendor. In our lab we chose to set this number to seven. This configuration effectively enables SR-IOV on the card itself, which otherwise defaults to regular (non SR-IOV) mode.

- After a reboot, the node should come up ready for SR-IOV. You can verify this by utilizing the lspci utility that lists detailed information about all PCI devices in the system.
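
The preparation steps above can be sketched roughly as follows. This is a minimal sketch rather than the official procedure: it assumes the igb driver's max_vfs module parameter, the intel_iommu=on kernel argument, the grubby tool and a file named /etc/modprobe.d/igb.conf, and the helper function names are made up for illustration. The commands that change the system are left commented out.

```shell
#!/bin/sh
# Sketch under assumptions: igb driver, 7 VFs per port (the value used in the lab above).
# The helper names below are hypothetical and exist only for this example.

# Print the modprobe options line that asks igb to create N VFs per PF.
igb_options_line() {
    printf 'options igb max_vfs=%s\n' "$1"
}

# Return success if a kernel command line string contains intel_iommu=on.
cmdline_has_iommu() {
    case " $1 " in
        *" intel_iommu=on "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Read-only check of the running kernel:
#   cmdline_has_iommu "$(cat /proc/cmdline)" && echo "intel_iommu=on is set"
#
# Applying the settings (as root; commented out so the sketch changes nothing):
#   igb_options_line 7 > /etc/modprobe.d/igb.conf
#   grubby --update-kernel=ALL --args="intel_iommu=on"
#   reboot
```

The number passed to igb_options_line should not exceed what your card supports, so check the vendor documentation before picking a value.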

Verifying the Compute configuration

Using lspci we can see the Physical Functions (PFs) and the Virtual Functions (VFs) available to the Compute node. Our network adapter is a dual port card, so we get a total of two PFs (one PF per physical port), and seven VFs for each PF:

# lspci | grep -i 82576

05:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

05:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

05:10.0 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:10.1 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:10.2 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:10.3 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:10.4 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:10.5 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:10.6 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:10.7 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:11.0 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:11.1 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:11.2 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:11.3 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:11.4 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

05:11.5 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

You can also get all the VFs assigned to a specific PF:

# ls -l /sys/class/net/enp5s0f1/device/virtfn*

lrwxrwxrwx. 1 root root 0 Jan 25 13:22 /sys/class/net/enp5s0f1/device/virtfn0 -> ../0000:05:10.1

lrwxrwxrwx. 1 root root 0 Jan 25 13:22 /sys/class/net/enp5s0f1/device/virtfn1 -> ../0000:05:10.3

lrwxrwxrwx. 1 root root 0 Jan 25 13:22 /sys/class/net/enp5s0f1/device/virtfn2 -> ../0000:05:10.5

lrwxrwxrwx. 1 root root 0 Jan 25 13:22 /sys/class/net/enp5s0f1/device/virtfn3 -> ../0000:05:10.7

lrwxrwxrwx. 1 root root 0 Jan 25 13:22 /sys/class/net/enp5s0f1/device/virtfn4 -> ../0000:05:11.1

lrwxrwxrwx. 1 root root 0 Jan 25 13:22 /sys/class/net/enp5s0f1/device/virtfn5 -> ../0000:05:11.3

lrwxrwxrwx. 1 root root 0 Jan 25 13:22 /sys/class/net/enp5s0f1/device/virtfn6 -> ../0000:05:11.5
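
If you want the same PF-to-VF mapping in a form that is easier to script against, a small helper along these lines may be useful. It is only a sketch: the function name list_vfs and its optional second argument (an alternative sysfs root, handy for testing) are our own invention, and it simply follows the virtfn symlinks shown above.

```shell
#!/bin/sh
# Print "virtfnN <PCI address>" for every VF of the given PF.
# $1: PF interface name, $2: sysfs net directory (defaults to /sys/class/net).
list_vfs() {
    pf="$1"
    root="${2:-/sys/class/net}"
    for link in "$root/$pf"/device/virtfn*; do
        # Skip the unexpanded pattern when the PF has no VFs.
        [ -L "$link" ] || continue
        target=$(readlink "$link")
        printf '%s %s\n' "${link##*/}" "${target##*/}"
    done
}

# Example (on the Compute node):
#   list_vfs enp5s0f1
```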

One parameter you will need to capture for future use is the PCI vendor ID (in vendor_id:product_id format) of your network adapter. This can be extracted from the output of the lspci command with the -nn flag. Here is the output from our lab; the ID appears in square brackets near the end of each line, for example 8086:10ca for the Virtual Functions:

# lspci -nn | grep -i 82576

05:00.0 Ethernet controller [0200]: Intel Corporation 82576 Gigabit Network Connection [8086:10c9] (rev 01)

05:00.1 Ethernet controller [0200]: Intel Corporation 82576 Gigabit Network Connection [8086:10c9] (rev 01)

05:10.0 Ethernet controller [0200]: Intel Corporation 82576 Virtual Function [8086:10ca] (rev 01)

Note: this parameter may be different based on your network adapter hardware.
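
To avoid copying the ID by hand, the bracketed value can be pulled out of the lspci output with standard text tools. The following is a sketch only: the function name vf_pci_ids is hypothetical, and it assumes the VF lines contain the words "Virtual Function" as they do in the output above.

```shell
#!/bin/sh
# Read `lspci -nn` output on stdin and print the unique vendor_id:product_id
# pairs found on Virtual Function lines.
vf_pci_ids() {
    grep -i 'Virtual Function' \
        | grep -o '\[[0-9a-f]\{4\}:[0-9a-f]\{4\}\]' \
        | tr -d '[]' \
        | sort -u
}

# Example (on the Compute node):
#   lspci -nn | vf_pci_ids
```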

Setting up the Controller/Network node

- In the Neutron server configuration, ML2 should be configured as the core Neutron plugin. Both the Open vSwitch (OVS) and SR-IOV (sriovnicswitch) mechanism drivers need to be loaded.

- Since our design requires a VLAN tagged tenant data network, ‘vlan’ must be listed as a type driver for ML2. An alternative would be to use a ‘flat’ networking configuration, which allows transparent forwarding with no specific VLAN tag assignment.

- The VLAN configuration itself is done through the Neutron ML2 configuration file, where you can set the appropriate VLAN range and the physical network label. This is the VLAN range that you need to make sure is properly configured for transport (i.e., trunking) across the physical network fabric. We are using ‘sriovnet’ as our network label with 80-85 as the VLAN range: network_vlan_ranges = sriovnet:80:85

- One of the great benefits of the SR-IOV ML2 driver is that it is not bound to any specific NIC vendor or card model. The ML2 driver can be used with different cards as long as they support the standard SR-IOV specification. As Red Hat Enterprise Linux OpenStack Platform is supported on top of Red Hat Enterprise Linux, we inherit RHEL’s rich support for SR-IOV enabled network adapters. In our lab we use the igb/igbvf driver, which is included in RHEL 7 and is used to interact with our Intel SR-IOV NIC. To set up the ML2 driver so that it can communicate properly with our Intel NIC, we need to configure the PCI vendor ID we captured earlier in the ML2 SR-IOV configuration file (under supported_pci_vendor_devs), then restart the Neutron server. The format of this setting is vendor_id:product_id, which is 8086:10ca in our case.

- To allow proper scheduling of SR-IOV devices, the Nova scheduler needs to use the FilterScheduler with the PciPassthroughFilter filter. This configuration should be applied on the Controller node in the nova.conf file.
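
Put together, the settings described in this list might look roughly like the fragments printed by the sketch below. Treat it as an illustration rather than a reference: the section names ([ml2], [ml2_type_vlan], [ml2_sriov], [DEFAULT]), the scheduler option names and the file names in the comments reflect our understanding of this release and should be checked against the official article, the filter list is shortened for readability, and the print_* function names are hypothetical. The script only prints text and edits nothing.

```shell
#!/bin/sh
# Sketch only: prints configuration fragments for review; no file is modified.

# Fragment for the ML2 configuration file (assumed name: ml2_conf.ini).
print_ml2_fragment() {
    cat <<'EOF'
[ml2]
type_drivers = vlan
mechanism_drivers = openvswitch,sriovnicswitch

[ml2_type_vlan]
network_vlan_ranges = sriovnet:80:85
EOF
}

# Fragment for the ML2 SR-IOV configuration file (assumed name: ml2_conf_sriov.ini).
# The value is vendor_id:product_id of the Virtual Function in our lab.
print_sriov_fragment() {
    cat <<'EOF'
[ml2_sriov]
supported_pci_vendor_devs = 8086:10ca
EOF
}

# Fragment for nova.conf on the Controller node.
# The filter list is illustrative; the relevant entry is PciPassthroughFilter.
print_nova_fragment() {
    cat <<'EOF'
[DEFAULT]
scheduler_driver = nova.scheduler.filter_scheduler.FilterScheduler
scheduler_default_filters = RetryFilter,AvailabilityZoneFilter,RamFilter,ComputeFilter,PciPassthroughFilter
EOF
}

# Example:
#   print_ml2_fragment; print_sriov_fragment; print_nova_fragment
```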

Mapping the required network

To enable scheduling of SR-IOV devices, the Nova PCI Whitelist has been enhanced to allow tags to be associated with PCI devices. PCI devices available for SR-IOV networking should be tagged with the physical network label. The network label needs to match the label we used previously when setting the VLAN configuration on the Controller/Network node (‘sriovnet’).

Using the pci_passthrough_whitelist option in the Nova configuration file, we can map the VFs to the required physical network. After configuring the whitelist, restart the nova-compute service for the changes to take effect.

In the example below, we set the Whitelist so that the Physical Function (enp5s0f1) is associated with the physical network (sriovnet). As a result, all the Virtual Functions bound to this PF can now be allocated to VMs.

pci_passthrough_whitelist={"devname": "enp5s0f1", "physical_network":"sriovnet"}

Creating the Neutron network

Next we will create the Neutron network and subnet. Make sure to use the --provider:physical_network option and specify the network label as was configured on the Controller/Network node (‘sriovnet’). Optionally, you can also set a specific VLAN ID from the range:

# neutron net-create sriov-net1 --provider:network_type=vlan --provider:physical_network=sriovnet --provider:segmentation_id=83

# neutron subnet-create sriov-net1 10.100.0.0/24
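
A segmentation ID outside the configured range, or a mismatched network label, is an easy mistake to make here. As a rough illustration, the check below compares a VLAN ID and label against a network_vlan_ranges value. The function name vlan_in_range is hypothetical, and the sketch handles a single label:min:max entry only, not a comma-separated list.

```shell
#!/bin/sh
# Usage: vlan_in_range <label:min:max> <label> <vlan-id>
# Succeeds when the label matches and the VLAN ID lies within min..max.
vlan_in_range() {
    range="$1"; label="$2"; vlan="$3"
    r_label="${range%%:*}"      # text before the first colon
    rest="${range#*:}"          # "min:max"
    min="${rest%%:*}"
    max="${rest##*:}"
    [ "$r_label" = "$label" ] && [ "$vlan" -ge "$min" ] && [ "$vlan" -le "$max" ]
}

# Example with the values used in this post:
#   vlan_in_range "sriovnet:80:85" sriovnet 83 && echo "VLAN 83 is usable"
```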

Creating an SR-IOV instance

After setting up the base configuration on the Controller/Network node and Compute node, and after creating the Neutron network, now we can go ahead and create our first SR-IOV enabled OpenStack instance.

In order to boot a Nova instance with an SR-IOV networking port, you first need to create the Neutron port and specify its vnic-type as ‘direct’. Then in the ‘nova boot’ command you will need to explicitly reference the port-id you have created using the --nic option as shown below:

# neutron port-create <sriov-net1 net-id> --binding:vnic-type direct

# nova boot --flavor m1.large --image <image> --nic port-id=<port> <vm name>
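
The two steps can also be chained so that the port ID does not have to be copied by hand. The sketch below assumes the neutron client prints its usual two-column table with rows of the form "| id | value |". The function names table_field and boot_sriov_instance are our own, and the m1.large flavor is simply carried over from the command above.

```shell
#!/bin/sh
# Read a "| Field | Value |" table on stdin and print the value of one field.
table_field() {
    awk -F'|' -v key="$1" '
        { name = $2; gsub(/^[ \t]+|[ \t]+$/, "", name) }
        name == key {
            val = $3
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            print val
            exit
        }
    '
}

# Create a direct (SR-IOV) port and boot an instance attached to it.
# Arguments: <net-id> <image> <vm-name>
boot_sriov_instance() {
    net_id="$1"; image="$2"; vm_name="$3"
    port_id=$(neutron port-create "$net_id" --binding:vnic-type direct | table_field id)
    if [ -z "$port_id" ]; then
        echo "could not determine the port ID" >&2
        return 1
    fi
    nova boot --flavor m1.large --image "$image" --nic port-id="$port_id" "$vm_name"
}

# Example:
#   boot_sriov_instance <sriov-net1 net-id> <image> <vm name>
```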

Examining the results

- On the Compute node, we can now see that one VF has been allocated:

# ip link show enp5s0f1

12: enp5s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT qlen 1000

link/ether 44:1e:a1:73:3d:ab brd ff:ff:ff:ff:ff:ff

vf 0 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

vf 1 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

vf 2 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

vf 3 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

vf 4 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

vf 5 MAC fa:16:3e:0e:3f:0d, vlan 83, spoof checking on, link-state auto

vf 6 MAC 00:00:00:00:00:00, spoof checking on, link-state auto

In the above example enp5s0f1 is the Physical Function (PF), and ‘vf 5’ is allocated to an instance. We can tell because it is the only VF that shows a non-zero MAC address, and it is configured with VLAN ID 83, which was allocated based on our configuration.
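
On a node with many VFs it can be handy to list only the allocated ones. The following sketch filters the ‘ip link show’ output for VFs with a non-zero MAC address. It assumes the line format shown above (newer iproute2 versions may print VF lines differently), and the function name allocated_vfs is hypothetical.

```shell
#!/bin/sh
# Read `ip link show <pf>` output on stdin and print "<vf> <mac> <vlan>"
# for every VF whose MAC address is not all zeros ("-" when no VLAN is set).
allocated_vfs() {
    awk '$1 == "vf" && $3 == "MAC" {
        mac = $4
        sub(/,$/, "", mac)
        if (mac == "00:00:00:00:00:00") next
        vlan = "-"
        for (i = 5; i < NF; i++) {
            if ($i == "vlan") {
                vlan = $(i + 1)
                sub(/,$/, "", vlan)
            }
        }
        print $2, mac, vlan
    }'
}

# Example (on the Compute node):
#   ip link show enp5s0f1 | allocated_vfs
```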

- On the Compute node, we can also verify the virtual interface definition on Libvirt XML:

Locate the instance_name of the VM and the hypervisor it is running on:

# nova show <vm name>

The relevant fields are OS-EXT-SRV-ATTR:host and OS-EXT-SRV-ATTR:instance_name.

On the Compute node, run:

# virsh dumpxml <instance_name>

<SNIP>

<interface type='hostdev' managed='yes'>

<mac address='fa:16:3e:0e:3f:0d'/>

<driver name='vfio'/>

<source>

<address type='pci' domain='0x0000' bus='0x05' slot='0x10' function='0x5'/>

</source>

<vlan>

<tag id='83'/>

</vlan>

<alias name='hostdev0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</interface>

- On the virtual machine instance, running the ‘ifconfig’ command shows an ‘eth0’ interface exposed to the guest operating system with an IP address assigned:

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1400

inet 10.100.0.2 netmask 255.255.255.0 broadcast 10.100.0.255

inet6 fe80::f816:3eff:feb9:d855 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:0e:3f:0d txqueuelen 1000 (Ethernet)

RX packets 182 bytes 25976 (25.3 KiB)

Using ‘lspci’ in the instance we can see that the interface is indeed a PCI device:

# lspci | grep -i 82576

00:04.0 Ethernet controller: Intel Corporation 82576 Virtual Function (rev 01)

Using ‘ethtool’ in the instance we can see that the interface driver is ‘igbvf’ which is Intel’s driver for 82576 Virtual Functions:

# ethtool -i eth0

driver: igbvf

version: 2.0.2-k

firmware-version:

bus-info: 0000:00:04.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: no

supports-register-dump: yes

supports-priv-flags: no

As you can see, the interface behaves as a regular one from the instance’s point of view, and can be used by any application running inside the guest. The interface was also assigned an IPv4 address from Neutron, which means that we have proper connectivity to the Controller/Network node where the DHCP server for this network resides.
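
The driver check inside the guest is easy to script, for example as part of a post-boot sanity test. This is a minimal sketch that assumes the ‘ethtool -i’ output format shown above. The function names parse_driver and uses_vf_driver are hypothetical, and igbvf is specific to the Intel 82576 used in our lab.

```shell
#!/bin/sh
# Read `ethtool -i <iface>` output on stdin and print the driver name.
parse_driver() {
    awk -F': *' '$1 == "driver" { print $2; exit }'
}

# Succeed if the interface is bound to the expected VF driver.
# Arguments: <iface> [expected-driver], defaulting to igbvf.
uses_vf_driver() {
    iface="$1"
    expected="${2:-igbvf}"
    [ "$(ethtool -i "$iface" | parse_driver)" = "$expected" ]
}

# Example (inside the instance):
#   uses_vf_driver eth0 && echo "eth0 is backed by an SR-IOV VF"
```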

As the interface is directly attached to the network adapter and the traffic does not flow through any virtual bridges on the Compute node, it’s important to note that Neutron security groups cannot be used with SR-IOV enabled instances.

What’s Next?

Red Hat Enterprise Linux OpenStack Platform 6 is the first version in which SR-IOV networking was introduced. While the ability to bind a VF into a Nova instance with an appropriate Neutron network is available, we are still looking to enhance the feature to address more use cases as well as to simplify the configuration and operation.

Some of the items we are currently considering include the ability to plug/unplug an SR-IOV port on the fly, which is currently not available, launching an instance with an SR-IOV port without explicitly creating the port first, and the ability to allocate an entire PF to a virtual machine instance. There is also active work to enable Horizon (Dashboard) support.

One other item is Live Migration support. An SR-IOV Neutron port may be directly connected to its VF as shown above (vnic_type ‘direct’), or it may be connected to a macvtap device that resides on the Compute node (vnic_type ‘macvtap’), which is then connected to the corresponding VF. The macvtap option provides a baseline for implementing Live Migration for SR-IOV enabled instances.

Interested in trying the latest OpenStack-based cloud platform from the world’s leading provider of open source solutions? Download a free evaluation of Red Hat Enterprise Linux OpenStack Platform 6 or learn more about it from the product page.
