Dstat is beloved by many and a staple for diagnosing system performance issues. However, the original dstat is no longer actively developed. This poses an immediate problem for distributions like Fedora that are moving to a Python 3 stack, because dstat lacks a Python 3 implementation (of both the tool itself and its many plugins). Its plugin system was also relatively simplistic, and needed a significant redesign and rewrite before new features could be added.

Performance Co-Pilot to the rescue

Performance Co-Pilot (PCP) is a lightweight performance analysis toolkit, used to collect metrics and to analyze both live and historical performance data. It's also designed to be easily extensible, offering APIs and libraries to extract and make use of performance metrics from your own application.

We've taken those features, combined them with the original dstat UI code, and produced a new dstat. From an architectural perspective, PCP separates the collection of metrics from their analysis and display through the Performance Metrics API (PMAPI). This allows developers to write metric collection/sampling functionality once (efficiently) and reuse that implementation across different requests for reporting or visualizing the metric, because tools make PMAPI calls instead of reading the metric sources directly.
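The separation described above can be pictured with a small sketch. This is only an illustration of the pattern, not PCP code: `StaticSource`, `fetch`, `report_columns`, and `report_csv` are hypothetical names invented here, and the metric names and values are made up.

```python
# Hypothetical illustration of "collect once, display many ways".
# None of these names are PCP or PMAPI functions.

class StaticSource:
    """Stand-in for a collector: hands back values for requested metric names."""

    def __init__(self, values):
        self._values = dict(values)

    def fetch(self, names):
        # A real collector would sample the system here; this one only looks
        # up canned values so the example stays self-contained.
        return {name: self._values[name] for name in names}


def report_columns(source, names):
    """One way of displaying a sample: name=value pairs on a line."""
    sample = source.fetch(names)
    return " ".join(f"{name}={sample[name]}" for name in names)


def report_csv(source, names):
    """A second display of the same data, with no new collection code."""
    sample = source.fetch(names)
    return ",".join(str(sample[name]) for name in names)


if __name__ == "__main__":
    src = StaticSource({"load": 0.5, "free": 1024})
    print(report_columns(src, ["load", "free"]))
    print(report_csv(src, ["load", "free"]))
```

Both reporters depend only on `fetch`, so a different source could be substituted without touching the display code.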

Adapting this to dstat

Similar to dstat, PCP has its own implementations of vmstat, iostat, and mpstat, each of which uses PCP's extensible collection APIs to extract the metrics in a manner similar to the original tools.

We've done this again with dstat, building on our Python libraries, which are compatible with both Python 2 and Python 3.

As with the original dstat, you can specify alternate/in-depth metric categories to display via command line:

Plugins

As we continued to develop this new dstat and strove towards feature parity, one important aspect of the dstat UI that we wanted to be sure to include was plugins. Because we're already able to abstract the metric collection details through the PMAPI, this was a natural place to evolve how we describe plugins within the dstat UI, and to combine that with a longstanding TODO: configuration file support.

Now, all PCP dstat metrics displayed are from 'plugins' defined by config files. This makes it even easier to create plugins for PCP's dstat. For example, compare the nfs3 plugin implementations:

    ### Author: Dag Wieers <dag@wieers.com>

    class dstat_plugin(dstat):
        def __init__(self):
            self.name = 'nfs3 client'
            self.nick = ('read', 'writ', 'rdir', 'inod', 'fs', 'cmmt')
            self.vars = ('read', 'write', 'readdir', 'inode', 'filesystem', 'commit')
            self.type = 'd'
            self.width = 5
            self.scale = 1000
            self.open('/proc/net/rpc/nfs')

        def check(self):
            info(1, 'Module %s is still experimental.' % self.filename)

        def extract(self):
            for l in self.splitlines():
                if not l or l[0] != 'proc3': continue
                self.set2['read'] = long(l[8])
                self.set2['write'] = long(l[9])
                self.set2['readdir'] = long(l[17]) + long(l[18])
                self.set2['inode'] = long(l[3]) + long(l[4]) + long(l[5]) + long(l[6]) + long(l[7]) + long(l[10]) + long(l[11]) + long(l[12]) + long(l[13]) + long(l[14]) + long(l[15]) + long(l[16])
                self.set2['filesystem'] = long(l[19]) + long(l[20]) + long(l[21])
                self.set2['commit'] = long(l[22])

            for name in self.vars:
                self.val[name] = (self.set2[name] - self.set1[name]) * 1.0 / elapsed

            if step == op.delay:
                self.set1.update(self.set2)

    # vim:ts=4:sw=4:et

This implementation not only has to define the metrics, including how to display them, but also has to define how to scrape them from their source (in this case, /proc/net/rpc/nfs).

In the new dstat, by contrast, we only have to list the metrics we’ll be using and how to display them, using PCP’s pmrep.conf(5) syntax.
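To make the scraping burden concrete, here is a standalone Python 3 sketch of the arithmetic the legacy plugin performs: it groups fields of a `proc3` line and turns two counter samples into per-second rates. The field indices are taken from the plugin above; the function names `parse_proc3` and `rates` and the sample lines are assumptions made for this illustration, not part of dstat or PCP.

```python
# Illustrative re-statement of the legacy plugin's extract() logic.
# Field indices come from the plugin; everything else is hypothetical.

INODE_FIELDS = (3, 4, 5, 6, 7, 10, 11, 12, 13, 14, 15, 16)


def parse_proc3(line):
    """Return the plugin's six counter groups for a 'proc3' line, else None."""
    fields = line.split()
    if not fields or fields[0] != "proc3":
        return None

    def num(i):
        return int(fields[i])

    return {
        "read": num(8),
        "write": num(9),
        "readdir": num(17) + num(18),
        "inode": sum(num(i) for i in INODE_FIELDS),
        "filesystem": num(19) + num(20) + num(21),
        "commit": num(22),
    }


def rates(previous, current, elapsed):
    """Convert two counter samples into per-second rates."""
    return {name: (current[name] - previous[name]) / elapsed for name in current}


if __name__ == "__main__":
    # Made-up sample lines: 'proc3', a field count, then 22 counters.
    first = "proc3 22 " + " ".join(str(i) for i in range(22))
    second = "proc3 22 " + " ".join(str(2 * i) for i in range(22))
    print(rates(parse_proc3(first), parse_proc3(second), elapsed=2.0))
```

Every tool that wants these values from the raw file has to repeat this kind of index bookkeeping, which is the duplication the PMAPI approach avoids.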

Adding additional (per-user) configurations follows the same procedure. For example, if we wanted a new plugin for kernel entropy, we could create a config file with just a few lines of information:

We can invoke that using “--entropy” as an option to pcp’s dstat command, like so:

While we don't have perfect plugin coverage yet, we'll be working towards this over time. Of course, contributions are welcome!

No daemon required

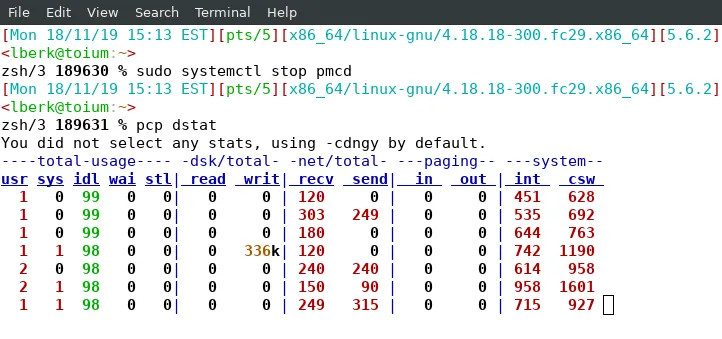

One misconception I’ve heard is that PCP requires daemons to use the new dstat, but this isn’t the case.

Instead, PCP can use 'local context mode' and pull metrics directly from their respective Performance Metrics Domain Agents (PMDAs).

Enhancing dstat with PCP

With this in mind, integrating a new dstat into PCP's framework brings several advantages and fits in nicely with TODOs that have been on the dstat page for some time. In particular, this one:

Allow to force to given magnitude (--unit=kilo)

This is done throughout the PCP toolkit, which means not only that every metric is given a default unit on collection, but also that the PMAPI knows how to translate between different units when requested.

From a dstat perspective, this is as simple as specifying the unit in the config file. Checking man 5 pcp-dstat gives us this information:

metric.unit (string)

Defines the unit/scale conversion for the metric. Needs to be dimension-compatible and is used with non-string metrics. For allowed values, see pmrep(1).
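The "dimension-compatible" requirement can be sketched in a few lines. This is not how the PMAPI implements conversion; it is a minimal illustration in which the `SCALES` table, the unit spellings, and the `convert` function are assumptions chosen for the example, with space units treated as multiples of 1024.

```python
# Hypothetical sketch of dimension-checked unit conversion.
# The table and names below are illustrative, not taken from PCP.

SCALES = {
    "space": {"byte": 1, "Kbyte": 1024, "Mbyte": 1024 ** 2, "Gbyte": 1024 ** 3},
    "time": {"msec": 0.001, "sec": 1, "min": 60, "hour": 3600},
}


def dimension_of(unit):
    """Find which dimension (space or time) a unit belongs to."""
    for dimension, table in SCALES.items():
        if unit in table:
            return dimension
    raise ValueError(f"unknown unit: {unit}")


def convert(value, from_unit, to_unit):
    """Rescale a value, refusing conversions that cross dimensions."""
    dimension = dimension_of(from_unit)
    if dimension_of(to_unit) != dimension:
        raise ValueError(f"{from_unit} and {to_unit} are not dimension-compatible")
    table = SCALES[dimension]
    return value * table[from_unit] / table[to_unit]


if __name__ == "__main__":
    print(convert(2048, "byte", "Kbyte"))
    print(convert(90, "sec", "min"))
```

A request such as converting bytes to seconds is rejected, which mirrors why the man page asks for a unit that matches the metric's dimension.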

Another TODO that we’re ticking off is “allow for different types of export modules (only CSV now).”

PCP's framework already has a variety of tools that write PCP data (including the metrics collected by dstat) to alternative formats. Several are kept in the main source tree:

pcp2elasticsearch

pcp2graphite

pcp2influxdb

pcp2json

pcp2spark

pcp2xlsx

pcp2xml

pcp2zabbix

Allow to write buffered to disk

PCP has had its own archive format for the past 20 years; archives are written by the 'pmlogger' program (usually run as a service). When a PCP client tool uses the PMAPI (as this dstat implementation does), it also gains access to metrics stored in archives without having to implement that functionality itself. This means even the brand-new dstat implementation can display previously gathered values as if they were live, using standard PCP flags to specify the timeframe of metrics to display.

Look into interfacing with apps

Another TODO we've addressed is adding interfaces for apps/protocols like Amavis, Apache httpd, Bind, CIFS, dhcpd, dnsmasq, gfs, Samba, Squid and others.

While not a 1:1 match with the original dstat, we have coverage of more than 70 different applications/domains of metrics. A full list is on GitHub in the PCP repository. Several of interest for dstat users include PMDAs for Apache, Bind, CIFS, GFS2, Gluster, Libvirt, MySQL, NGINX, Postfix, Prometheus, Redis, XFS, and others.

Another TODO we address is “design proper object model and namespace for _all_ possible stats.”

This is done in PCP with the Performance Metrics Name Space (PMNS). Each metric is given a name, which is used to reference it in any given analysis tool, as well as in historical archives.
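A hierarchical namespace of dotted names can be modelled as a simple tree. The sketch below is only an illustration of that idea, not PCP's implementation; `build_namespace` and `children` are hypothetical helpers, and the metric names are used purely as sample input.

```python
# Hypothetical sketch: dotted metric names arranged as a tree of dicts.
# This is not PMNS code; it only illustrates the naming idea.

def build_namespace(names):
    """Insert each dotted name into a nested dict, one level per component."""
    root = {}
    for name in names:
        node = root
        for part in name.split("."):
            node = node.setdefault(part, {})
    return root


def children(tree, prefix=""):
    """List the names directly below a prefix ('' means the root)."""
    node = tree
    if prefix:
        for part in prefix.split("."):
            node = node[part]
    return sorted(node)


if __name__ == "__main__":
    tree = build_namespace([
        "kernel.all.load",
        "kernel.all.cpu.user",
        "mem.util.free",
    ])
    print(children(tree))
    print(children(tree, "kernel.all"))
```

Because every tool refers to a metric by the same name, a name recorded in an archive means the same thing as that name requested live.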

Finally, there’s the “create client/server monitoring tool” TODO. In the context of PCP's dstat, one of the main benefits of having the Performance Metrics Collector Daemon (PMCD) enabled on the target host is using dstat remotely. This distributed nature was built into PCP from the ground up, and using the PMAPI allows the new dstat to access the functionality remotely.

We think dstat is a fantastic tool. That's why we want to see it continue to be used, even in the Python 3 world, and why we want to help it keep evolving and expanding its features. PCP is available in most modern Linux distros. For further documentation and information, see the Performance Co-Pilot homepage. Want to contribute? Feel free to open a pull request on GitHub, or send your configurations to pcp@groups.io.
