When debugging or tracing running workloads in Red Hat OpenShift deployments, you frequently need to run those workloads with elevated privileges. In production deployments, however, this is often neither possible nor desirable because of the risks to the cluster and to other running workloads.

In this article we will demonstrate how customers can leverage an OpenShift route-based deployment strategy in combination with OpenShift sandboxed containers to run such workloads with elevated privileges while ensuring the safety of the OpenShift cluster and other running workloads.

Let’s start with a concrete example

As a developer, you would like to trace a running workload (container) and you would like to take advantage of eBPF. Using eBPF for tracing purposes requires elevated privileges. You have two options to obtain the elevated privileges:

1. Re-deploy the running workload with the required higher privileges and perform tracing

2. As an admin, access the host running the workload and perform the necessary tracing operation

Option 1 will require cluster-wide policy changes to allow the execution of privileged workloads. Additionally, allowing privileged workloads on the host increases the risk of security mishaps on the cluster.

Option 2 is feasible only in some deployments, since as a developer you typically will not have cluster-admin access to the host to perform privileged operations like tracing.

Given these practical challenges, you may be wondering whether there is a safer, acceptable mechanism. There is: you can use privileged capabilities safely even in production clusters. The following sections explain how this is done in practice.

OpenShift route-based deployment

OpenShift's route-based deployments enable external access to your applications. By creating a route, you host your application at a public URL, either secured or unsecured. An unsecured route uses plain HTTP and exposes a service on an unsecured application port, while a secured route offers several types of TLS termination to serve certificates to the client. This lets you make your application safely accessible to users outside of your network.

You can read more about OpenShift routes in Configuring Routes | Networking | OpenShift Container Platform 4.12.

OpenShift sandboxed containers

Red Hat OpenShift sandboxed containers (OSC) use lightweight virtual machines (VMs) for an OCI-compliant container runtime, providing an additional layer of isolation, resource sharing and a native Kubernetes user experience.

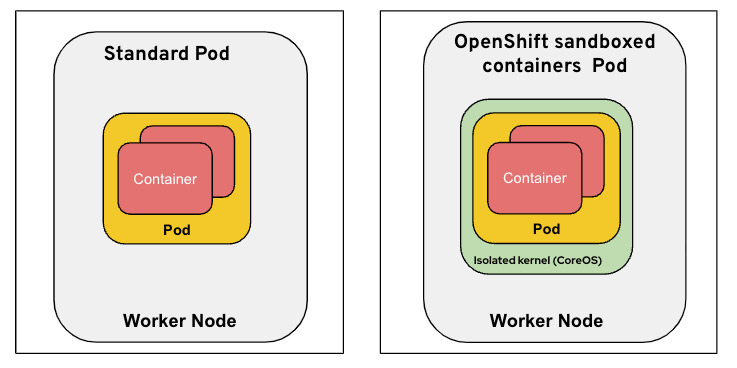

The following diagram compares a standard pod to an OSC pod (note the additional green VM layer for isolation):

For additional details on OSC vs standard pods see Standard pod versus OpenShift sandboxed containers pod.

So the question then is: How does OSC help address the issue of needing elevated privileges for many of the advanced debugging techniques (such as eBPF)?

The answer is that OSC runs the pod inside a VM, so in many cases we can safely grant elevated privileges to the workload running in the pod: those privileges only affect the sandbox VM (green box above) and not the worker node itself (gray box above). We say “in many cases” rather than “in all cases” because some workloads require elevated privileges that affect the worker node itself (the host). In our experience, however, the majority of advanced debugging capabilities can be addressed at the VM level.

So as long as we spin up our workload inside OpenShift sandboxed containers we can use debugging tools that require privileged capabilities.

The next question to ask is: How can we run the majority of workloads as standard pods and the workloads to be debugged as OSC pods?

The overall idea

OpenShift route-based deployment is typically used for A/B deployments, where both versions A and B are active simultaneously: some users use version A, and others use version B. The traffic splitting feature of the OpenShift route-based deployment allows you to define multiple backend services for a single route and distribute the traffic between them based on a percentage or weight.

Using the same route with multiple backend services and splitting the traffic between the two services simplifies the deployment and management of the workloads, as you can use a single route to manage multiple versions of the same workload.
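To make the weight-based split concrete, here is a minimal sketch of how weights translate into traffic shares, assuming each backend receives roughly its weight divided by the sum of all weights. The helper name `traffic_share` is hypothetical and is not part of the `oc` CLI; percentages are rounded down.

```shell
# Hypothetical helper (not part of oc): print each backend's approximate
# share of traffic, assuming share = weight / sum of all weights.
# Arguments are NAME=WEIGHT pairs, e.g. echo-runc=75 echo-kata=25.
traffic_share() {
  total=0
  for pair in "$@"; do
    total=$((total + ${pair#*=}))
  done
  for pair in "$@"; do
    name=${pair%%=*}
    weight=${pair#*=}
    if [ "$total" -eq 0 ]; then
      # No backend carries any weight, so no traffic is served.
      echo "$name 0%"
    else
      # Integer arithmetic: the percentage is rounded down.
      echo "$name $((weight * 100 / total))%"
    fi
  done
}
```

For example, `traffic_share echo-runc=0 echo-kata=100` reports 0% for the first service and 100% for the second, which corresponds to sending all traffic to a single backend.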

Going back to standard and OSC pods: we deploy the same workload both as standard pods (one service) and as OSC pods (a second service), with the debugging tools deployed as sidecar containers in the OSC pods. The route then steers traffic to both services.

The idea is to deploy two backend services behind a single route and use traffic splitting to distribute requests between them as needed:

- One service backed by standard pods

- Another service backed by OSC pods

Details

We'll use a very simple “http-echo” application to demonstrate the running of privileged containers safely in an OpenShift cluster.

Deploy the following YAML to create a pod which runs the http-echo application as a standard container. The application is exposed to the outside world using OpenShift Routes. Note that we use a Kubernetes Pod object to focus on the bare essentials.

    apiVersion: v1
    kind: Pod
    metadata:
      name: echo-runc
      labels:
        app: echo-runc
      namespace: sample
    spec:
      containers:
      - name: echo-runc
        image: quay.io/bpradipt/http-echo
        args: [ "-text", "Hello from runc container", "-listen", ":8080"]
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: echo-runc
      namespace: sample
    spec:
      selector:
        app: echo-runc
      ports:
      - port: 8080
    ---
    kind: Route
    apiVersion: route.openshift.io/v1
    metadata:
      name: echo-route
      namespace: sample
    spec:
      to:
        kind: Service
        name: echo-runc
        weight: 100
      tls:
        termination: edge

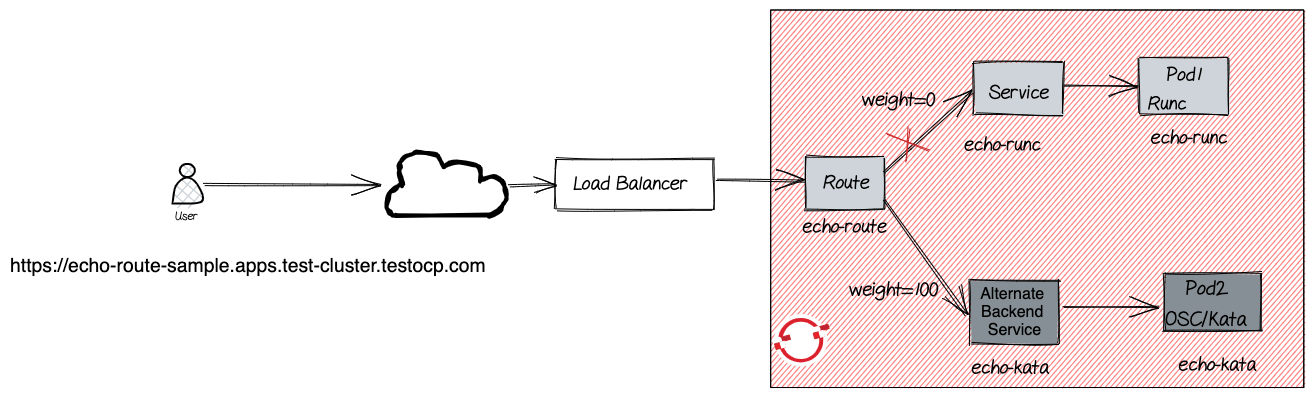

The following diagram shows the logical view of the different OpenShift objects involved when using Routes. The route URL that is shown in the diagram is of the form:

https://<route-name>-<namespace>.apps.<cluster-name>.<cluster-domain>
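As a small illustration of the URL pattern above, the following sketch composes the default route URL from its four parts. The helper name `route_url` and the sample cluster name and domain are hypothetical placeholders, not values from this article's cluster.

```shell
# Hypothetical helper: compose the default route URL from
# route name, namespace, cluster name, and cluster domain.
route_url() {
  printf 'https://%s-%s.apps.%s.%s\n' "$1" "$2" "$3" "$4"
}
```

Calling `route_url echo-route sample mycluster example.com` prints `https://echo-route-sample.apps.mycluster.example.com`. On a live cluster you would normally read the assigned host rather than compose it, for example with `oc get route echo-route -n sample -o jsonpath='{.spec.host}'`.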

Now for debugging the deployed application, we'll create another pod running the same application but with a slight twist.

We'll create a pod that runs the application as an OpenShift sandboxed container and use a privileged sidecar container to use eBPF. Note the addition of the perf-sidecar container in the manifest file shown below. The perf-sidecar container includes the required eBPF tools. Depending on your requirements, you might need a container image that has the necessary debugging or tracing tools.

Also note the use of the shareProcessNamespace attribute in the manifest file. This ensures that the processes in a container are visible to all other containers in the same pod, which is required for debugging and tracing the workload running in the primary container (echo-kata).

    apiVersion: v1
    kind: Pod
    metadata:
      name: echo-kata
      labels:
        app: echo-kata
      namespace: sample
    spec:
      containers:
      - name: echo-kata
        image: quay.io/bpradipt/http-echo
        args: [ "-text", "Hello from Kata container", "-listen", ":8080"]
      - name: perf-sidecar
        image: quay.io/bpradipt/perf-amd64:latest
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: perf-output
          mountPath: /out
        securityContext:
          privileged: true
      volumes:
      - name: perf-output
        hostPath:
          path: /run/out
          type: DirectoryOrCreate
      shareProcessNamespace: true
      runtimeClassName: kata
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: echo-kata
      namespace: sample
    spec:
      selector:
        app: echo-kata
      ports:
      - port: 8080
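Because the debug pod relies on `runtimeClassName: kata`, it can help to confirm that this runtime class exists before deploying, and afterwards that the pod really uses it. The following is a sketch under the assumption that OpenShift sandboxed containers is already installed on the cluster; the helper names `kata_available` and `pod_runtime` are hypothetical wrappers around standard `oc get` calls.

```shell
# Hypothetical helper: succeeds only if a RuntimeClass named "kata"
# is registered on the cluster.
kata_available() {
  oc get runtimeclass kata >/dev/null 2>&1
}

# Hypothetical helper: print the runtime class requested by a pod
# in the "sample" namespace (empty output means the default runtime).
pod_runtime() {
  oc get pod "$1" -n sample -o jsonpath='{.spec.runtimeClassName}'
}
```

A typical use would be `kata_available && oc apply -f echo-kata.yaml` (the file name is an assumption), followed by `pod_runtime echo-kata`, which should print `kata` for the debug pod.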

Now we need to redirect the traffic coming to the primary application (echo-runc) to our debug application (echo-kata). We can either redirect all the traffic or only a percentage of the traffic.

OpenShift Routes make it easy to implement this pattern.

Let's say we want to stop all the traffic to the primary application and redirect all traffic to the debug application seamlessly. All that we need is to modify the existing Route object as highlighted in the YAML shown below.

    kind: Route
    apiVersion: route.openshift.io/v1
    metadata:
      name: echo-route
      namespace: sample
    spec:
      to:
        kind: Service
        name: echo-runc
        weight: 0
      alternateBackends:
      - kind: Service
        name: echo-kata
        weight: 100
      tls:
        termination: edge

We patch the existing route to initiate the traffic switch.

    oc patch -n sample route/echo-route --patch '{"spec": {"to": {"kind": "Service","name": "echo-runc","weight": 0}, "alternateBackends": [{"kind": "Service","name": "echo-kata","weight": 100}]}}'

Now all traffic will be redirected to the echo-kata service. If you want to split the traffic then you'll need to modify the weights accordingly.
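For a partial split, the same patch can be generated with different weights. The following is a minimal sketch: the helper name `route_split_patch` is hypothetical, and it simply reproduces the patch body used above with the two weights filled in.

```shell
# Hypothetical helper: emit the patch body for the echo-route with
# the given weights for echo-runc ($1) and echo-kata ($2).
route_split_patch() {
  printf '{"spec": {"to": {"kind": "Service","name": "echo-runc","weight": %d}, "alternateBackends": [{"kind": "Service","name": "echo-kata","weight": %d}]}}\n' "$1" "$2"
}
```

For example, `oc patch -n sample route/echo-route --patch "$(route_split_patch 80 20)"` would send roughly 80% of requests to the standard pod and 20% to the debug pod. If your `oc` version provides it, `oc set route-backends echo-route echo-runc=80 echo-kata=20 -n sample` should achieve the same result.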

The following diagram shows the logical view of the different components. Setting weight=0 for the echo-runc service ensures that all traffic is routed to the alternate backend service, i.e. the echo-kata service.

After reconfiguring the route, you can attach to the terminal of the perf-sidecar container and perform your tracing activities.
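One way to attach to the sidecar is `oc exec`. The sketch below wraps that call in a hypothetical helper, `attach_sidecar`, and assumes that the perf-sidecar image provides `/bin/sh`, which the manifest does not state.

```shell
# Hypothetical helper: open an interactive shell in the sidecar container.
# Defaults match the manifest above; /bin/sh in the image is an assumption.
attach_sidecar() {
  oc exec -it -n sample "${1:-echo-kata}" -c "${2:-perf-sidecar}" -- /bin/sh
}
```

Running `attach_sidecar` with no arguments targets the `perf-sidecar` container of the `echo-kata` pod in the `sample` namespace. Since the pod sets shareProcessNamespace, the processes of the primary container should be visible from that shell.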

Some sample tracing commands are listed below:

    mount -t debugfs none /sys/kernel/debug
    bpftrace -l 'tracepoint:syscalls:sys_enter_*'
    bpftrace -e 'tracepoint:syscalls:sys_enter_openat { printf("%s %s\n", comm, str(args->filename)); }'
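The last command above reports `openat` calls from every process in the sandbox VM. To narrow the output to the workload being debugged, a bpftrace filter on `comm` can be added. The sketch below builds such a program; the helper name `openat_probe` is hypothetical, and the process name `http-echo` used in the example is an assumption that you should check (for instance with `ps`) inside the sidecar.

```shell
# Hypothetical helper: build a bpftrace program that reports openat()
# calls only for processes whose command name equals $1.
openat_probe() {
  printf '%s /comm == "%s"/ %s\n' \
    'tracepoint:syscalls:sys_enter_openat' "$1" \
    '{ printf("%s %s\n", comm, str(args->filename)); }'
}
```

Inside the perf-sidecar container it could be used as `bpftrace -e "$(openat_probe http-echo)"`.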


Summary

Here we have described an approach that makes creative use of OpenShift features, specifically OpenShift Routes and OpenShift sandboxed containers, to provide a secure environment for running containers with privileged capabilities and performing live debugging of applications.

You can use a similar approach to perform A/B testing and capture profiling data for tuning the application.

You can extend this approach to deploy critical business code with legacy dependencies as an interim measure to buy more time to deploy a new version with updated dependencies. OpenShift's defense-in-depth approach provides a comprehensive and flexible security solution that covers all aspects of your container environment.

Learn more about Red Hat OpenShift sandboxed containers.

About the authors

Pradipta is working in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF confidential containers project.

Jens Freimann is a Software Engineering Manager at Red Hat with a focus on OpenShift sandboxed containers and Confidential Containers. He has been with Red Hat for more than six years, during which he has made contributions to low-level virtualization features in QEMU, KVM and virtio(-net). Freimann is passionate about Confidential Computing and has a keen interest in helping organizations implement the technology. Freimann has over 15 years of experience in the tech industry and has held various technical roles throughout his career.
