In this post we will lead you through the different building blocks used for implementing the virtio full HW offloading and the vDPA solutions. This effort is still in progress, so some bits may change in the future; however, the governing building blocks are expected to stay the same.

We will be discussing VFIO, vfio-pci and vhost-vfio, all of which are intended for accessing drivers from userspace, both in the guest and on the host. We will also be discussing MDEV, vfio-mdev, vhost-mdev and the virtio-mdev transport API, which together make up the vDPA solutions.

The post is a technical deep dive and is intended for architects, developers and those who are passionate about understanding how all the pieces fall into place. If you are more interested in understanding the big picture of vDPA, the previous post “Achieving network wirespeed in an open standard manner - introducing vDPA” is strongly recommended instead (we did warn you).

In this post we will also be referring you to the previous post on vhost-user, “A journey to the vhost-users realm”, where VFIO and IOMMU are explained in detail.

By the end of this post you should have a solid understanding of the technologies used for implementing the virtio full HW offloading and vDPA approaches, which provide accelerated interfaces to VMs. These building blocks will also be used in later posts for providing accelerated interfaces to Kubernetes containers.

Also, looking forward, the virtio-mdev transport APIs described in this post are targeted to be the basis for the AF_VIRTIO framework, which provides accelerated interfaces to Kubernetes containers through sockets (instead of DPDK). This will be discussed in the final post of this series. Let’s begin.

SR-IOV PFs and VFs

The single root I/O virtualization (SR-IOV) interface is an extension to the PCI Express (PCIe) specification. SR-IOV allows a device, such as a network adapter, to separate access to its resources among various PCIe hardware functions.

The PCIe hardware functions consist of the following types:

- PCIe Physical Function (PF) - This function is the primary function of the device and advertises the device's SR-IOV capabilities.

- PCIe Virtual Functions (VFs) - One or more VFs. Each VF is associated with the device's PF. A VF shares one or more physical resources of the device, such as memory and a network port, with the PF and other VFs on the device.

Each PF and VF is assigned a unique PCI Express Requester ID (RID) that allows an I/O memory management unit (IOMMU) to differentiate between different traffic streams and apply memory and interrupt translations between the PF and VFs. This allows traffic streams to be delivered directly to the appropriate DMA domain.

As a result, nonprivileged data traffic flows from the PF to VF without affecting other VFs.
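To make the Requester ID more concrete: for a conventional (non-ARI) PCIe function, the RID is the 16-bit bus/device/function triple. The following is a minimal sketch of packing and unpacking it; the helper names (`rid_make`, `rid_bus`, `rid_dev`, `rid_fn`) are ours for illustration and are not taken from any kernel or vendor API.

```c
#include <assert.h>
#include <stdint.h>

/* Pack bus (8 bits), device (5 bits) and function (3 bits) into a 16-bit RID.
 * Assumes the conventional, non-ARI layout. */
static inline uint16_t rid_make(uint8_t bus, uint8_t dev, uint8_t fn)
{
    assert(dev < 32 && fn < 8);
    return (uint16_t)(((unsigned)bus << 8) | ((unsigned)dev << 3) | fn);
}

/* Unpack the individual fields again. */
static inline uint8_t rid_bus(uint16_t rid) { return (uint8_t)(rid >> 8); }
static inline uint8_t rid_dev(uint16_t rid) { return (uint8_t)((rid >> 3) & 0x1f); }
static inline uint8_t rid_fn(uint16_t rid)  { return (uint8_t)(rid & 0x07); }
```

For instance, `rid_make(0x3b, 0x02, 1)` yields `0x3b11`, the RID a function at `3b:02.1` would present to the IOMMU.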

VFIO and IOMMU

VFIO is a userspace driver development framework that allows userspace applications to interact directly with devices (bypassing the kernel) in a secure, IOMMU-protected environment. In other words, it enables safe, non-privileged userspace drivers. VFIO is described in detail in the “A journey to the vhost-users realm” post and you can also refer to the kernel documentation.

IOMMU is pretty much the equivalent of an MMU for I/O space where devices access memory directly using DMA. It sits between the main memory and the devices, creates a virtual I/O address space for each device, and provides a mechanism to map that virtual I/O memory dynamically to physical memory. Thus, when a driver configures a device’s DMA it configures the virtual addresses and when the device tries to access them, it gets remapped by the IOMMU.

The IOMMU is described in detail in the “A journey to the vhost-users realm” post.
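As a rough illustration of the remapping idea, here is a toy, page-granular translation table for a single device. It is only a sketch under simplifying assumptions (a flat array instead of multi-level page tables, 4 KiB pages, no permissions), and all names (`iommu_domain`, `iommu_map_page`, `iommu_translate`) are hypothetical rather than kernel APIs.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT   12
#define PAGE_SIZE    (1ULL << PAGE_SHIFT)
#define MAX_MAPPINGS 16

/* One page-sized IOVA -> physical mapping. */
struct iommu_entry {
    uint64_t iova_pfn;
    uint64_t phys_pfn;
    bool     valid;
};

/* The I/O address space seen by one device. */
struct iommu_domain {
    struct iommu_entry entries[MAX_MAPPINGS];
};

/* Install a mapping for one page; returns false if the table is full. */
static inline bool iommu_map_page(struct iommu_domain *d, uint64_t iova, uint64_t phys)
{
    assert(iova % PAGE_SIZE == 0 && phys % PAGE_SIZE == 0);
    for (size_t i = 0; i < MAX_MAPPINGS; i++) {
        if (!d->entries[i].valid) {
            d->entries[i].iova_pfn = iova >> PAGE_SHIFT;
            d->entries[i].phys_pfn = phys >> PAGE_SHIFT;
            d->entries[i].valid = true;
            return true;
        }
    }
    return false;
}

/* Translate a device-visible address; returns false on a fault (no mapping). */
static inline bool iommu_translate(const struct iommu_domain *d, uint64_t iova, uint64_t *phys)
{
    for (size_t i = 0; i < MAX_MAPPINGS; i++) {
        if (d->entries[i].valid && d->entries[i].iova_pfn == (iova >> PAGE_SHIFT)) {
            *phys = (d->entries[i].phys_pfn << PAGE_SHIFT) | (iova & (PAGE_SIZE - 1));
            return true;
        }
    }
    return false;
}
```

The driver programs the device with IOVAs only; an access to an unmapped IOVA fails in the translation step instead of reaching arbitrary memory, which is the protection property VFIO relies on.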

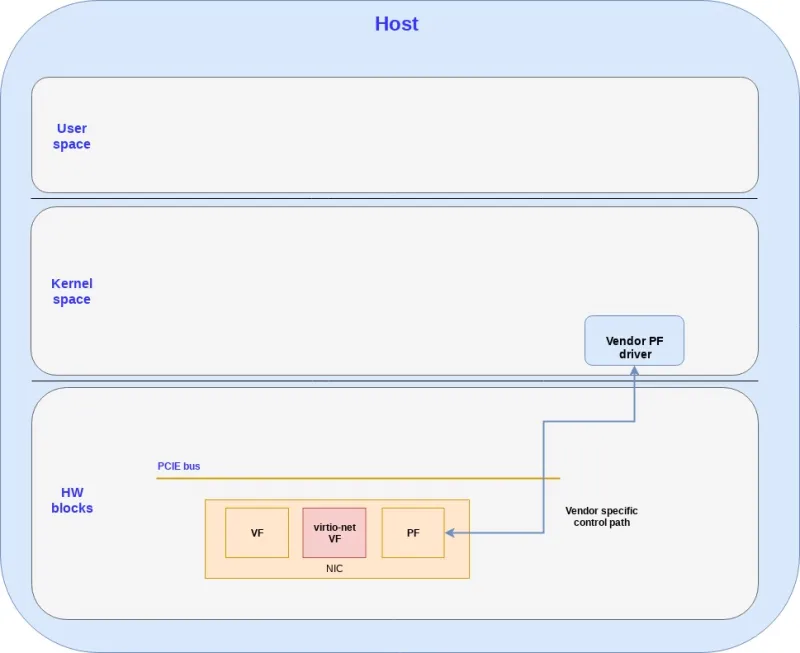

HW blocks for virtio full HW offloading

The virtio full HW offloading device is part of the vendor’s NIC and is basically a special type of VF.

Usually the PF will create a VF using the vendor’s proprietary ring layout. However, when a virtio full HW offloading device is created by the PF, then the ring layout uses the virtio ring layout. The PCIe bus is used for connecting the PF and VFs to the kernel driver.
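For readers unfamiliar with the virtio ring layout, the sketch below shows the split-virtqueue descriptor as laid out in the virtio 1.x specification, together with the sizes of the three ring areas for a given queue size. The struct names follow common usage; the size helper functions are ours for illustration. Fields are little-endian on the wire, which this sketch does not model.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Split virtqueue descriptor (virtio 1.x layout). */
struct vring_desc {
    uint64_t addr;   /* address of the buffer as seen by the device */
    uint32_t len;    /* buffer length in bytes */
    uint16_t flags;  /* chaining / write-only / indirect flags */
    uint16_t next;   /* index of the next descriptor in a chain */
};

/* One entry of the used ring. */
struct vring_used_elem {
    uint32_t id;     /* head index of the completed descriptor chain */
    uint32_t len;    /* number of bytes written by the device */
};

/* Split rings require a power-of-two queue size. */
static inline void vring_check_qsz(unsigned qsz)
{
    assert(qsz != 0 && (qsz & (qsz - 1)) == 0);
}

/* Descriptor table: one 16-byte descriptor per slot. */
static inline size_t vring_desc_bytes(unsigned qsz)
{
    vring_check_qsz(qsz);
    return qsz * sizeof(struct vring_desc);
}

/* Available ring: flags, idx, ring[qsz], used_event (all 16-bit). */
static inline size_t vring_avail_bytes(unsigned qsz)
{
    vring_check_qsz(qsz);
    return 3 * sizeof(uint16_t) + qsz * sizeof(uint16_t);
}

/* Used ring: flags, idx, ring[qsz] of used elements, avail_event. */
static inline size_t vring_used_bytes(unsigned qsz)
{
    vring_check_qsz(qsz);
    return 3 * sizeof(uint16_t) + qsz * sizeof(struct vring_used_elem);
}
```

A NIC that implements this layout in hardware can read and write these structures directly in guest memory, which is what allows an unmodified virtio driver to drive it.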

The following diagram shows the PF and VF HW blocks:

SW blocks for virtio full HW offloading

vfio-pci is a host/guest kernel driver that exposes APIs to the userspace for accessing the PCI and PCIe HW devices. In our case this is how we can access the virtio full HW offloading device. The vfio-pci is located underneath the VFIO block which exposes to the userspace ioctl commands to manipulate the device (through the vfio-pci driver). This is true both on the host and the guest.

vfio-device is located inside the qemu process and is used for exposing the virtio full HW offloading device to the guest via vfio-pci (the host kernel one). The vfio-device is responsible for presenting a virtual device to the guest, which can then use it to access the real HW NIC.

The vfio-pci running in the guest kernel uses PCIe to access the vfio-device (inside qemu), while the vfio-pci in the host kernel uses PCIe to access the virtio full HW offloading device. It’s important to clarify that in the first case this is a virtualized PCIe, while in the second case it’s a physical PCIe going to the NIC.

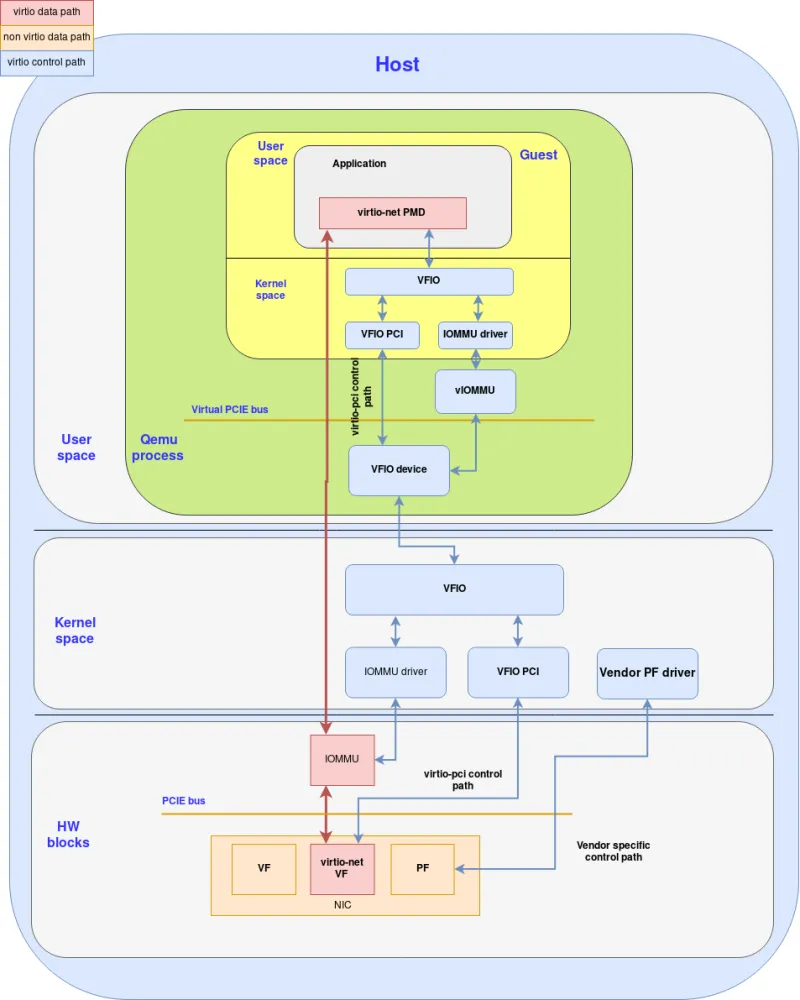

Virtio full HW offloading

Now that we have covered the building blocks, let’s see how everything fits together to provide the virtio full HW offloading solution, where the NIC implements the virtio spec.

The following diagram shows how everything comes together:

Figure: Virtio full hardware offloading

Note that in this case the NIC contains a virtio-net VF which is fully compliant with both the virtio data plane and control plane. Later on we will discuss vDPA, in which case we refer to the NIC VF as a vDPA device, since the data plane supports virtio but the control plane is vendor specific.

Let’s take you through the flow of how the NIC can access the guest application queues:

- In order to start the DMA, a mapping between the NIC and the guest should be established.

- To set up the DMA mapping in the guest, the guest DPDK PMD uses ioctl commands to configure the DMA and VFIO components. In the vIOMMU, it maps the GIOVA (guest I/O virtual address) to the GPA (guest physical address).

- From that point on, the vIOMMU will translate the GPA to the HVA (host virtual address).

- This results in the guest getting mappings from the GIOVA to the HVA.

- A similar mapping will be done by VFIO on the host side, from the GIOVA to the HPA (host physical address).

- Once the NIC starts using the GIOVA, it can reach the HPA belonging to the guest application.

- If we set up the mapping for the virtqueues in the guest application, the HW can now access them and populate the queues (since all the mapping issues are now resolved).
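The chain of translations in the steps above can be modeled as a composition of simple address ranges. This is only a toy sketch: each stage is assumed to be a single linear region, the final HVA to HPA step stands in for the host pinning and mapping the pages on behalf of VFIO, and the names (`region`, `region_xlate`, `giova_to_hpa`) and addresses are made up for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* A linear mapping of [src, src + len) onto [dst, dst + len). */
struct region {
    uint64_t src;
    uint64_t dst;
    uint64_t len;
};

/* Translate one address through one stage; false if outside the region. */
static inline bool region_xlate(const struct region *r, uint64_t in, uint64_t *out)
{
    assert(r != NULL && out != NULL);
    if (in < r->src || in - r->src >= r->len)
        return false;
    *out = r->dst + (in - r->src);
    return true;
}

/* Compose the stages: GIOVA -> GPA (vIOMMU), GPA -> HVA (guest RAM as seen
 * by qemu), HVA -> HPA (host memory). The combined GIOVA -> HPA result is
 * what ends up programmed into the host IOMMU for the NIC. */
static inline bool giova_to_hpa(const struct region *viommu,
                                const struct region *guest_ram,
                                const struct region *host_mem,
                                uint64_t giova, uint64_t *hpa)
{
    uint64_t gpa, hva;

    return region_xlate(viommu, giova, &gpa) &&
           region_xlate(guest_ram, gpa, &hva) &&
           region_xlate(host_mem, hva, hpa);
}
```

The point of the sketch is that the NIC only ever sees the first address (GIOVA); every later stage is resolved ahead of time in software so the data path itself needs no software involvement.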

The virtio control path starting at the virtio-net-pmd progresses like this:

- Virtio-net-pmd communicates with the guest kernel VFIO (ioctl commands).

- The guest VFIO communicates with the guest kernel vfio-pci.

- The guest kernel vfio-pci communicates with the QEMU vfio device via the virtualized PCIe bus.

- The QEMU vfio device accesses the host kernel VFIO (ioctl commands).

- The host kernel VFIO communicates with the host kernel vfio-pci.

- The host kernel vfio-pci communicates with the virtio full HW offloading device via the PCIe bus.

With a full virtio HW offloading device, generic drivers on both the host (VFIO) and the guest (virtio) are sufficient to drive the device, improving performance for VMs with no need for vendor specific software.

So what does that mean in practice?

In this approach both the data plane and control plane are offloaded to the HW. This requires the vendor to implement the virtio spec fully inside the NIC through a popular transport such as PCI. Note that each vendor will have its own proprietary implementation.

Live migration considerations

When using virtio full HW offloading, supporting live migration requires extending the hardware beyond what the virtio spec describes with additional capabilities such as dirty page tracking, which in turn requires new APIs between QEMU and the NIC (as of version 1.1, the virtio spec doesn’t cover these topics).
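To give a feel for what dirty page tracking involves, the sketch below marks the pages touched by a device write in a bitmap with one bit per page. It assumes 4 KiB pages and a plain byte array as the log; the function names are hypothetical and this is not the API any particular NIC or QEMU version exposes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define LOG_PAGE_SHIFT 12  /* assumed 4 KiB tracking granularity */

/* Mark every page overlapped by the write [addr, addr + len) as dirty. */
static inline void dirty_log_write(uint8_t *bitmap, size_t bitmap_bytes,
                                   uint64_t addr, uint64_t len)
{
    if (len == 0)
        return;

    uint64_t first = addr >> LOG_PAGE_SHIFT;
    uint64_t last = (addr + len - 1) >> LOG_PAGE_SHIFT;

    assert(last / 8 < bitmap_bytes);
    for (uint64_t pfn = first; pfn <= last; pfn++)
        bitmap[pfn / 8] |= (uint8_t)(1u << (pfn % 8));
}

/* Check whether a given page frame has been marked dirty. */
static inline bool dirty_log_test(const uint8_t *bitmap, uint64_t pfn)
{
    return (bitmap[pfn / 8] >> (pfn % 8)) & 1u;
}
```

During migration, the hypervisor repeatedly scans and clears such a log to find pages that must be re-sent. With full HW offloading the NIC writes guest memory on its own, so the NIC itself would have to maintain an equivalent log.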

Virtio data path HW offloading

Some vendors might choose to implement only a part of a virtio device in hardware, focusing on the parts that are involved in data path operations. The result is commonly referred to as a virtio data path accelerator, or vDPA. This also turns out to be helpful for architectures such as scalable IOV which unlike SR-IOV do not specify a hardware configuration interface (as described below).

The idea behind vDPA is to have a device with a vendor specific control path that can still be presented to guests as a standard virtio device. This requires some emulation or mediation in software between the NIC APIs and the virtio commands.

With vDPA, no vendor specific software is required for the VM guest to operate the device; however, some vendor specific software is used on the host side. The following sections will focus on describing the mdev framework and the different components for providing this functionality on the host.

Mediated devices (MDEV)

MDEV allows us to perform device emulation (virtualization done by SW) and mediation (virtualization split between HW/SW) such as exposing a single physical device as multiple virtual devices. This is useful when multiple VMs want to consume the same physical resource each being allocated a different chunk of the physical device.

The idea, thus, is to have data path operations offloaded to hardware, with control path operations emulated for VM guests and mediated using the MDEV framework.

SR-IOV provides multiple VFs on a single NIC, with a standard interface for configuring the VFs. The IOMMU limits each VF’s access to the memory space of the virtio driver (e.g. VM guest memory), so a VF cannot access the MDEV memory on the host. As a result, when using SR-IOV, control path operations that involve the MDEV either have to go through the PF or are limited to one-way accesses from the MDEV to the VF. To make things more flexible, advanced technologies such as scalable IOV can be used with MDEV (this will be elaborated later on).

Going back to the VFIO driver framework, it provides APIs for directly accessing devices from userspace. It exposes direct device access to userspace in a secure, IOMMU-protected environment. The framework is used for many kinds of devices (GPUs, different accelerators, etc.), while our focus is on network adapters. Direct device access means that VMs and userspace apps can directly access the physical devices. As will be seen, vfio-mdev builds on this framework.

MDEV can be split into the following parts:

- mediated core driver APIs

- mediated device APIs

- mdev-bus

- management interface

The mediated core driver provides a common interface for managing mediated devices by drivers. It provides a generic interface for performing the following operations:

- Create and destroy a mediated device.

- Add a mediated device to and remove it from a mediated bus driver.

- Add a mediated device to and remove it from an IOMMU group.

The mediated device API provides an interface for registering a bus driver.

The mdev-bus provides a method to enable the driver and the device to communicate (basically this is a set of agreed-upon APIs).

The management interface provides a userspace sysfs path through which files can be used to trigger the mediated core driver operations from userspace.
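As an illustration of the management interface, the kernel documentation for mediated devices describes a `create` file under the parent device's `mdev_supported_types/<type-id>/` directory (a UUID is written to it) and a `remove` file under `/sys/bus/mdev/devices/<uuid>/`. The sketch below only builds those path strings; the helper names are ours, and the parent directory and type id are inputs that depend on the actual device.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Build "<parent>/mdev_supported_types/<type-id>/create".
 * Returns 0 on success, -1 if the buffer is too small. */
static inline int mdev_create_path(char *buf, size_t n,
                                   const char *parent_sysfs_dir,
                                   const char *type_id)
{
    assert(buf && parent_sysfs_dir && type_id);
    int w = snprintf(buf, n, "%s/mdev_supported_types/%s/create",
                     parent_sysfs_dir, type_id);
    return (w < 0 || (size_t)w >= n) ? -1 : 0;
}

/* Build "/sys/bus/mdev/devices/<uuid>/remove" for an existing mdev.
 * Returns 0 on success, -1 if the buffer is too small. */
static inline int mdev_remove_path(char *buf, size_t n, const char *uuid)
{
    assert(buf && uuid && strlen(uuid) == 36); /* canonical UUID text form */
    int w = snprintf(buf, n, "/sys/bus/mdev/devices/%s/remove", uuid);
    return (w < 0 || (size_t)w >= n) ? -1 : 0;
}
```

Writing a UUID to the first path asks the mediated core driver to create a mediated device of that type, and writing `1` to the second path removes it again.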

The first implementation of MDEV is vfio-mdev. Vfio-mdev contains a driver part, which uses the mediated core driver, and a device part, which implements the mediated device API. “Implementing the mediated device API” means that the device implements a set of callback functions through which the driver communicates with it.

The reason we call this vfio-mdev is that when the driver is probed it will ask the device to be added to a vfio group in order to enable the userspace to use the vfio APIs. This implies that you can now access the mediated device as a real HW device in a secure manner (IOMMU).
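The split between the driver part and the device part can be sketched with a small callback table. This is a deliberately simplified toy model, not the kernel's mdev structures: every name prefixed with `toy_` is hypothetical, and "being in a VFIO group" is reduced to a flag.

```c
#include <assert.h>
#include <stddef.h>

/* Callbacks a (hypothetical) mediated device implements for its driver. */
struct toy_mdev_ops {
    int (*create)(void *priv);
    int (*write_reg)(void *priv, unsigned reg, unsigned val);
};

struct toy_mdev {
    const struct toy_mdev_ops *ops;
    void *priv;
    int created;        /* set by the core once create() succeeded */
    int in_vfio_group;  /* set by the driver's probe */
};

/* Core: triggered from the management interface, calls into the device. */
static inline int toy_mdev_core_create(struct toy_mdev *dev)
{
    assert(dev && dev->ops && dev->ops->create);
    int rc = dev->ops->create(dev->priv);
    if (rc == 0)
        dev->created = 1;
    return rc;
}

/* Driver: on probe, the device is added to a VFIO group. */
static inline int toy_vfio_mdev_probe(struct toy_mdev *dev)
{
    if (!dev->created)
        return -1;
    dev->in_vfio_group = 1;
    return 0;
}

/* Driver: forward a userspace access through the device callbacks. */
static inline int toy_vfio_mdev_write(struct toy_mdev *dev, unsigned reg, unsigned val)
{
    if (!dev->in_vfio_group || !dev->ops->write_reg)
        return -1;
    return dev->ops->write_reg(dev->priv, reg, val);
}

/* Example device part: emulates a single control register in software. */
struct toy_nic { unsigned ctrl; };

static inline int toy_nic_create(void *priv)
{
    ((struct toy_nic *)priv)->ctrl = 0;
    return 0;
}

static inline int toy_nic_write(void *priv, unsigned reg, unsigned val)
{
    if (reg != 0)
        return -1;      /* only register 0 exists in this toy device */
    ((struct toy_nic *)priv)->ctrl = val;
    return 0;
}

static const struct toy_mdev_ops toy_nic_ops = {
    .create = toy_nic_create,
    .write_reg = toy_nic_write,
};
```

Userspace never calls the device part directly: accesses only succeed after the probe step, and they always go through the callback table the device registered.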

The following diagram shows how the different building blocks connect together:

Figure: mdev infrastructure

Note the following points:

- As pointed out, the mdev bus provides a software bus implementation in the kernel, which allows multiple mdev devices and drivers to be attached.

- The vfio-mdev driver exposes the VFIO API to userspace while talking to the vfio-mdev device through the mdev bus API.

- The vfio-mdev device implements the actual mediated device towards the upper layer (the vfio-mdev driver). It implements the generic VFIO API towards the lower layer (e.g. a real PCI bus). In practice, it’s a driver that translates VFIO commands into device specific commands. Functions that the physical device can’t support are emulated instead.

In conclusion, the mdev framework allows the kernel to expose a device for a driver to consume. The real function could be offloaded to hardware or emulated in software.

Going back to implementing vDPA, the mdev capabilities match our goal of offloading the data plane to hardware while mediating the control plane via the kernel, so the vDPA implementation relies heavily on it.

For more information on mdev, refer to the kernel documentation.

Virtio-mdev framework

The purpose of the virtio-mdev framework is to provide vDPA NIC vendors with a standard set of APIs to implement their own control plane drivers. As pointed out, the mdev provides the framework for splitting the data plane to HW while running the control plane in SW.

The driver could be either a userspace driver (VFIO based) or a kernel virtio driver, given that the device exposes a unified set of APIs. This means that the consumer of the driver can be in userspace or in kernel space. In this series of posts our focus will be on the userspace driver; however, in a future post series we will discuss the kernel virtio driver as well (for example as part of the future AF_VIRTIO framework).

With the help of the virtio-mdev framework the control path can pass through the mdev SW block while the datapath is offloaded to HW. This could be used to simplify the implementation of the virtio mdev device since it only needs to follow and implement the virtio-mdev API for both kernel and userspace drivers.

The virtio mdev transport API is the implementation of the virtio-mdev framework. The API includes functions to:

- Set and get the device configuration space

- Set and get the virtqueue metadata: vring address, size, base

- Kick a specific virtqueue

- Register an interrupt callback for a specific vring

- Feature negotiation

- Set/get the dirty page log

- Start/reset the device

These functions provide the basic abstraction of the virtio device for a driver to consume. The driver can choose to use all or only part of these functions.

One such example is the dirty page tracking functionality: it is used for VM migration, but if Kubernetes containers are using these kernel drivers there is no need for dirty page tracking (assuming stateless containers).
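To illustrate how a driver might consume only part of such an API, here is a hypothetical callback table covering a few of the operations listed above, with the dirty log operation left optional. This is a sketch and not the actual virtio-mdev definition; the `toy_` names are assumptions, while the feature bit positions (`VIRTIO_NET_F_CSUM` = 0, `VIRTIO_NET_F_MRG_RXBUF` = 15, `VIRTIO_F_VERSION_1` = 32) come from the virtio specification.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_F_CSUM      (1ULL << 0)   /* VIRTIO_NET_F_CSUM */
#define TOY_F_MRG_RXBUF (1ULL << 15)  /* VIRTIO_NET_F_MRG_RXBUF */
#define TOY_F_VERSION_1 (1ULL << 32)  /* VIRTIO_F_VERSION_1 */

/* Hypothetical transport ops; only a subset of the operations is shown. */
struct toy_virtio_mdev_ops {
    uint64_t (*get_features)(void *dev);
    int      (*set_features)(void *dev, uint64_t features);
    void     (*kick_vq)(void *dev, unsigned vq);
    int      (*set_dirty_log)(void *dev, uint64_t base, uint64_t size); /* optional */
};

/* Feature negotiation: accept only bits both sides support. */
static inline int toy_negotiate(const struct toy_virtio_mdev_ops *ops, void *dev,
                                uint64_t driver_features, uint64_t *negotiated)
{
    assert(ops && ops->get_features && ops->set_features && negotiated);
    *negotiated = ops->get_features(dev) & driver_features;
    return ops->set_features(dev, *negotiated);
}

/* Dirty logging is simply skipped when the device does not provide it. */
static inline int toy_enable_dirty_log(const struct toy_virtio_mdev_ops *ops,
                                       void *dev, uint64_t base, uint64_t size)
{
    if (!ops->set_dirty_log)
        return 0;
    return ops->set_dirty_log(dev, base, size);
}

/* Example device that offers checksum offload and VERSION_1 only. */
struct toy_dev { uint64_t offered; uint64_t accepted; };

static inline uint64_t toy_get_features(void *dev)
{
    return ((struct toy_dev *)dev)->offered;
}

static inline int toy_set_features(void *dev, uint64_t features)
{
    struct toy_dev *d = dev;
    if (features & ~d->offered)
        return -1;      /* the driver asked for something not offered */
    d->accepted = features;
    return 0;
}

static const struct toy_virtio_mdev_ops toy_ops = {
    .get_features = toy_get_features,
    .set_features = toy_set_features,
    /* kick_vq and set_dirty_log intentionally left NULL in this sketch */
};
```

A migration-aware consumer would require `set_dirty_log` to be present, while a container-oriented consumer can ignore it, which is the flexibility the paragraph above describes.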

Vhost-mdev is a kernel module that is responsible for two functions:

- Forwards the userspace virtio control commands to the virtio mdev transport API.

- Reuses the VFIO framework for preparing DMA map and unmap requests from userspace. This is a prerequisite for the device to access the process memory.

At a high level, vhost-mdev works as follows:

- Registers itself as a new type of mdev driver.

- Exposes several vhost_net compatible ioctls for the userspace driver to pass the virtio control commands.

- It then translates the commands to the virtio mdev transport API and passes them through the mdev bus to the virtio mdev device (explained later).

- When a new mdev device is created, the kernel will attempt to probe the device.

- During the probe process, vhost-mdev will attach the virtio mdev device to the VFIO group, and then the DMA requests can go through the VFIO container FD.

The vhost-mdev is in essence a bridge between the userspace driver and virtio-mdev device. It provides two file descriptors (FDs) for the userspace driver to use:

- vhost-mdev FD - accepts vhost control commands from userspace

- VFIO container FD - used by the userspace driver to set up the DMA
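The bridging role on the control side can be sketched as a small command dispatcher. This is a toy model only: the command names are hypothetical stand-ins for the vhost_net compatible ioctls, `toy_vring_state` mirrors the index/num pair those ioctls conventionally carry, and the "device" is a plain struct instead of something reached through the mdev bus.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_NUM_VQS 2

/* Hypothetical command codes standing in for vhost-style ioctls. */
enum toy_vhost_cmd {
    TOY_SET_VRING_NUM,
    TOY_SET_VRING_BASE,
    TOY_GET_FEATURES,
};

/* Argument for the per-vring commands: which vring, and a value. */
struct toy_vring_state {
    unsigned index;
    unsigned num;
};

struct toy_vq { unsigned size; unsigned base; };

/* Stand-in for the virtio mdev device behind the transport API. */
struct toy_transport {
    struct toy_vq vqs[TOY_NUM_VQS];
    uint64_t features;
};

/* Translate one control command into the matching device operation. */
static inline long toy_vhost_mdev_ioctl(struct toy_transport *t,
                                        enum toy_vhost_cmd cmd, void *arg)
{
    assert(t != NULL && arg != NULL);

    switch (cmd) {
    case TOY_SET_VRING_NUM: {
        const struct toy_vring_state *s = arg;
        if (s->index >= TOY_NUM_VQS)
            return -1;
        t->vqs[s->index].size = s->num;
        return 0;
    }
    case TOY_SET_VRING_BASE: {
        const struct toy_vring_state *s = arg;
        if (s->index >= TOY_NUM_VQS)
            return -1;
        t->vqs[s->index].base = s->num;
        return 0;
    }
    case TOY_GET_FEATURES:
        *(uint64_t *)arg = t->features;
        return 0;
    }
    return -1;
}
```

DMA setup deliberately has no case here: in the design described above it does not travel over this FD at all, but goes through the VFIO container FD instead.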

Virtio-mdev driver is a kernel module that implements the virtio config ops through the virtio mdev transport API. The virtio config ops are the set of APIs that let the virtio device communicate with the transport implementation (such as PCI or, in this case, mdev).

The virtio-mdev driver will register itself as a new kind of mdev driver and expose an mdev based transport to the virtio driver. It’s a bridge between the kernel virtio driver and the virtio mdev device implementation. Since this is just another transport that virtio can use (in addition to PCI, for example), no other modifications are required to connect it to the virtio-net driver. This will come in handy later when talking about AF_VIRTIO.

Virtio-mdev device is the actual mdev device implementation (compared with the vfio-mdev device described in the previous section). It can be used by the virtio-mdev driver or the vhost-mdev driver. Note that the vDPA flow focuses on the vhost-mdev driver flow.

If we look at the virtio-mdev device from the perspective of the mdev-bus then this is a device since it’s implementing the mdev device APIs.

If we look at the virtio-mdev device from the perspective of the PCIe bus (the HW), then this is a driver, since it’s sending commands to the real HW device.

The following diagram shows how these building blocks come together:

Figure: virtio-mdev transport API

Note that the virtio-mdev driver and virtio-net driver are presented to demonstrate the benefits of the virtio-mdev transport unified APIs however they are not part of the vDPA kernel flow.

vDPA kernel based implementation

For understanding the vDPA kernel based implementation we need to present another building block, the vhost-vfio.

Vhost-vfio - From qemu’s point of view the vhost-vfio is a new type of network backend for qemu which is paired with the virtio-net device model. From the kernel’s point of view, vhost-vfio is the userspace driver for the vhost-mdev device. Its main tasks are:

- Setting up the vhost-mdev device, which includes:

  - Opening the vhost-mdev device file for passing vhost specific commands to vhost-mdev.

  - Obtaining the container of the vhost-mdev device and performing other VFIO setup for passing DMA setup commands to the VFIO container.

- Accepting the datapath offloading commands (set/get virtqueue state, set dirty page log, feature negotiation, etc.) from the virtio-net device model and translating them to vhost-mdev device ioctls. This happens only when the driver wants to configure the device.

- Accepting vIOMMU map and unmap requests and performing those mappings through the VFIO DMA ioctls to ensure the mdev receives the correct mapping. Depending on the type of driver, the mapping could be temporary (e.g. a kernel driver uses temporary mappings) or long-lived (a DPDK driver uses static mappings).

Now that we have the vhost-vfio as well we can present the vDPA kernel based implementation:

Figure: vhost-mdev for VM

An important point to mention is that we describe vDPA as using the NIC’s VFs, which are SR-IOV based. This, however, is not required: if the NIC vendor can provide any other means to support multiple vDPA instances on a single physical NIC, then the vDPA architecture would still work. The reason is that while the virtio layout is currently implemented on top of SR-IOV, it doesn't matter what component implements it as long as the layout (exposed to the guest or a userspace process) is kept as defined in the virtio specification.

One such example is the scalable IOV technology, where the NIC is not split into VFs but rather into queue pairs, providing a finer granularity for the NIC’s resources. If we were to use scalable IOV instead of SR-IOV then, assuming the NIC vendor implements the virtio ring layout, the data plane stays the same. The only change is in the control plane, where new blocks for the virtio-mdev device and vendor PF driver will be provided (PF meaning the physical NIC configuration).

Going back to the vDPA overall diagram (bottom up):

- For the hardware layer we have a vDPA device (red block in the NIC next to the VF and PF), which populates the virtqueue directly using its proprietary control path. This differs from the virtio-net VF described in the virtio full HW offloading case (where both the data plane and control plane are virtio).

- The virtio-mdev device implements the actual functions that drive the underlying vDPA device. This vDPA device is how we offload the virtio datapath to the device. The virtio-mdev device accepts virtio-mdev transport commands through the virtio-mdev transport API and performs command translation, emulation or mediation.

- The virtio-mdev transport API (through the mdev-bus) provides a unified API that lets both the userspace driver and the kernel driver drive the virtio-mdev device. It basically abstracts a device for the driver.

- For the userspace, the vhost-mdev driver registers itself as a new type of mdev driver and serves as a bridge between the userspace vhost driver and the virtio-mdev device. It accepts ioctls that are compatible with vhost_net through the vhost-mdev FD and translates them to the virtio-mdev transport API. Since it was attached to the VFIO group, any DMA specific setup can be performed through the VFIO API exposed by the container FD.

- The vhost-vfio is the userspace vhost driver that pairs with the virtio device model in qemu to provide a virtualized virtio device (e.g. a PCI device) for VMs. The DMA mapping requests are received from the vIOMMU and then forwarded through the VFIO container FD, while the virtio device specific setup and configuration are done through the vhost-mdev FD.

- In the guest, VFIO is used to expose the device API and DMA API to userspace drivers, such as the virtio-net PMD residing inside the DPDK application.

vDPA DPDK based implementation

DPDK has included vDPA support since v18.05. It is based on the vhost-user protocol, which provides a unified control path to the frontend. This DPDK implementation is intended as an initial step to demonstrate vDPA capabilities, leading towards a more standard, in-kernel implementation of the vDPA framework based on the MDEV framework.

In this section we build on the “A journey to the vhost-users realm” post to understand the different virtio-networking blocks used with DPDK. On top of that, we add two additional building blocks:

The first is the vDPA driver, which is the userspace driver for controlling the vDPA HW device.

The second is the vDPA framework, which provides a bridge between the vhost-user socket and the vDPA driver.

The following diagram shows how this all comes together:

The DPDK vDPA framework introduces a set of callbacks implemented by the vendor vDPA drivers, that are called by the vhost-user library to set-up the datapath:

```c
/**
 * vdpa device operations
 */
struct rte_vdpa_dev_ops {
	/** Get capabilities of this device */
	int (*get_queue_num)(int did, uint32_t *queue_num);

	/** Get supported features of this device */
	int (*get_features)(int did, uint64_t *features);

	/** Get supported protocol features of this device */
	int (*get_protocol_features)(int did, uint64_t *protocol_features);

	/** Driver configure/close the device */
	int (*dev_conf)(int vid);
	int (*dev_close)(int vid);

	/** Enable/disable this vring */
	int (*set_vring_state)(int vid, int vring, int state);

	/** Set features when changed */
	int (*set_features)(int vid);

	/** Destination operations when migration done */
	int (*migration_done)(int vid);

	/** Get the vfio group fd */
	int (*get_vfio_group_fd)(int vid);

	/** Get the vfio device fd */
	int (*get_vfio_device_fd)(int vid);

	/** Get the notify area info of the queue */
	int (*get_notify_area)(int vid, int qid,
			uint64_t *offset, uint64_t *size);

	/** Reserved for future extension */
	void *reserved[5];
};
```

Half of these callbacks have a 1:1 relationship with standard Vhost-user protocol requests, such as the ones to get and set the Virtio features, to get the supported Vhost-user protocol features, etc…

The dev_conf callback is used to set up and start the device, and is called once the Vhost-user layer has received all the information needed to set up and enable the datapath. In this callback, the vDPA device driver is expected to set up the ring addresses, set up the DMA mappings and start the device.

If the vDPA device supports the live-migration dirty page tracking feature, its driver may implement the migration_done callback to be notified about the migration completion. Otherwise, the vDPA framework offers the possibility to switch to SW processing of the rings while the live-migration operation is performed.

Finally, a set of callbacks is proposed to share the rings notification areas with the frontend for performance reasons. Its support relies on the host-notifier vhost-user protocol request specially introduced for this purpose.
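To show how a vendor driver might plug into this callback table, here is a minimal sketch of a made-up driver that fills in a few of the callbacks. So that it compiles without DPDK headers, it carries a local copy of the structure quoted above; all `toy_` functions and their behavior (one queue pair, only `VIRTIO_F_VERSION_1` offered) are assumptions for illustration, not code from any real vDPA driver.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Local copy of the callback table quoted above. */
struct rte_vdpa_dev_ops {
    int (*get_queue_num)(int did, uint32_t *queue_num);
    int (*get_features)(int did, uint64_t *features);
    int (*get_protocol_features)(int did, uint64_t *protocol_features);
    int (*dev_conf)(int vid);
    int (*dev_close)(int vid);
    int (*set_vring_state)(int vid, int vring, int state);
    int (*set_features)(int vid);
    int (*migration_done)(int vid);
    int (*get_vfio_group_fd)(int vid);
    int (*get_vfio_device_fd)(int vid);
    int (*get_notify_area)(int vid, int qid, uint64_t *offset, uint64_t *size);
    void *reserved[5];
};

static int toy_started; /* 1 while the (imaginary) datapath is running */

static int toy_get_queue_num(int did, uint32_t *queue_num)
{
    (void)did;
    assert(queue_num != NULL);
    *queue_num = 1;                 /* assumed: a single queue pair */
    return 0;
}

static int toy_get_features(int did, uint64_t *features)
{
    (void)did;
    assert(features != NULL);
    *features = 1ULL << 32;         /* VIRTIO_F_VERSION_1 only */
    return 0;
}

static int toy_dev_conf(int vid)
{
    (void)vid;
    /* A real driver would program ring addresses and DMA mappings here. */
    toy_started = 1;
    return 0;
}

static int toy_dev_close(int vid)
{
    (void)vid;
    toy_started = 0;
    return 0;
}

/* Callbacks the toy device does not support are left NULL. */
static const struct rte_vdpa_dev_ops toy_vdpa_ops = {
    .get_queue_num = toy_get_queue_num,
    .get_features = toy_get_features,
    .dev_conf = toy_dev_conf,
    .dev_close = toy_dev_close,
};
```

In this sketch `migration_done` is left unset, which corresponds to the case described above where the device does not track dirty pages itself and the framework would fall back to SW processing of the rings during migration.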

Combining vDPA and virtio full HW offloading

In previous sections, we explained that full virtio HW offloading means the HW device implements both the Virtio data and control plane. This means this device can be probed directly with the Kernel's virtio-net driver or DPDK's Virtio PMD.

One alternative to this approach would be to use devices such as vDPA devices, meaning the data path is still fully offloaded, while the control path is managed through the vDPA API.

Why is this important?

The advantages of such an architecture versus using the Virtio PMD directly to drive the device are:

- Flexibility: it makes it possible to swap to other vDPA devices or to SW ring processing in a manner that is transparent from the guest's point of view.

- Migration: it enables live-migration support by switching to SW processing of the rings to perform the dirty page tracking.

- Standardization: it provides a single interface for containers (the Vhost-user socket in the case of the DPDK vDPA framework), whether the underlying device is fully HW offloaded, vDPA only, or uses a vendor specific data path. In the latter case, the Virtio ring is processed in SW and packets are forwarded to the vendor PMD.

Let's see how it looks when using DPDK's vDPA framework:

The vDPA driver used is named Virtio-vDPA. It is a generic vDPA driver compatible with devices compliant to the Virtio specification for both the control and data paths. This driver is not available yet in upstream DPDK releases, but is under review and should land in DPDK v19.11 release.

Summary

In this post we have covered the gritty details of virtio full HW offloading and vDPA.

We are well aware that this post is complex and contains many daunting building blocks, both in the kernel and in userspace. Still, by understanding these building blocks and how they come together, you are now able to grasp the importance of the vDPA approach, which replaces existing VM acceleration techniques such as SR-IOV with an open standard framework.

Looking forward to new technologies such as scalable IOV, we believe vDPA will truly shine, given that its building blocks are flexible enough to work there as well without any changes on the VM or Kubernetes side.

In the next post we will move on to some hands-on vDPA. Given that vDPA cards are still in the process of being released to the market, we will be using a paravirtualized Virtio-net device in a guest. The device will emulate the vDPA control plane while using the existing vhost-net for the data plane.

In later posts we will show how to use vDPA for accelerating Kubernetes containers as well.

Prior posts / Resources

- Introducing virtio-networking: Combining virtualization and networking for modern IT

- Introduction to virtio-networking and vhost-net

- Deep dive into Virtio-networking and vhost-net

- Hands on vhost-net: Do. Or do not. There is no try

- How vhost-user came into being: Virtio-networking and DPDK

- A journey to the vhost-users realm

- Hands on vhost-user: A warm welcome to DPDK

- Achieving network wirespeed in an open standard manner: introducing vDPA

About the authors

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of qemu networking subsystem. Co-maintainer of Linux virtio, vhost and vdpa driver.
