This post provides a high-level overview of the vDPA (virtio data path acceleration) kernel framework, which was merged into the Linux kernel in March 2020. The effort spanned almost three years, involved many developers, and went through a number of iterations before the upstream community agreed on it. For additional information on vDPA in general, please refer to our previous post, "Achieving network wirespeed in an open standard manner: introducing vDPA."

The vDPA kernel framework is a pillar in productizing the end-to-end vDPA solution and it enables NIC vendors to integrate their vDPA NIC kernel drivers into the framework as part of their productization efforts.

This solution overview post and the technical deep dive posts to follow provide important updates to the vDPA post published in October 2019 ("How deep does the vDPA rabbit hole go?"), because a number of changes were made in the final design. In later posts we will also summarize the key architectural changes between the two approaches.

A short recap on vDPA

A “vDPA device” means a type of device whose datapath complies with the virtio specification, but whose control path is vendor specific. Refer to previous posts for more information on the basic concept of vDPA.

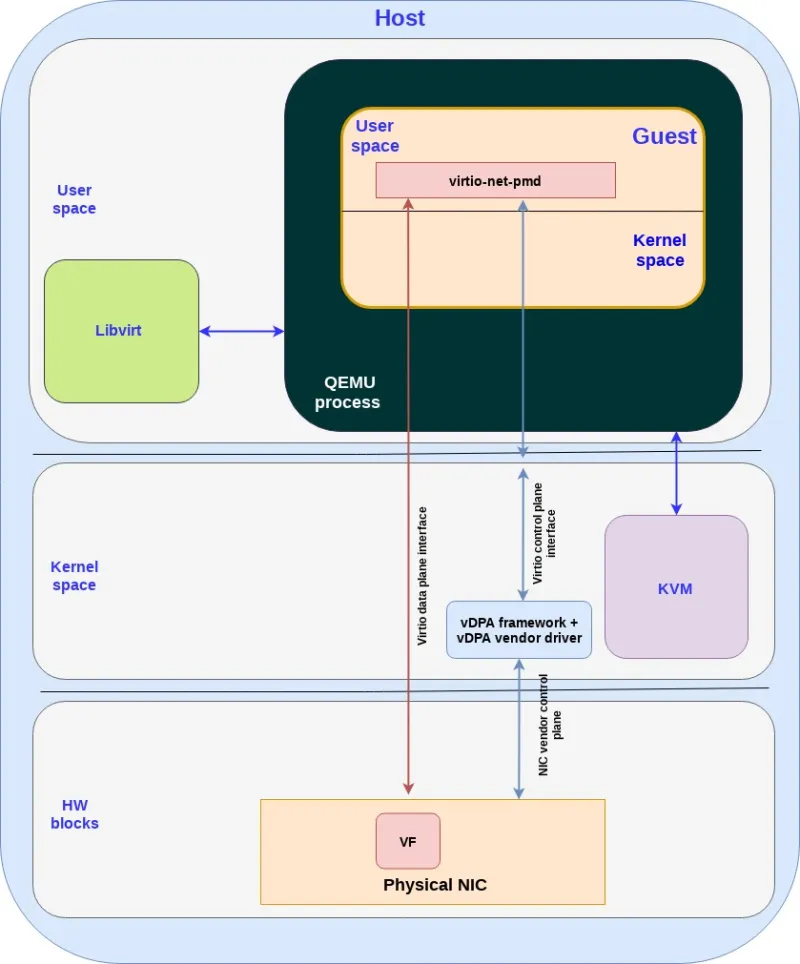

The following diagram shows how the vDPA kernel framework provides a translation between the NIC vendor control plane and the virtio control plane interface for a VM running on the host:

Figure: vDPA kernel framework translating between NIC and virtio in a virtual machine

As mentioned in previous posts, the virtio data plane is mapped directly from the guest application to the VF in the physical NIC.

The next diagram shows how the vDPA kernel framework provides a translation between the NIC vendor control plane and the virtio control plane interface for a container running on the host:

Figure: vDPA kernel framework translating between NIC and virtio in a container

Similar to the case of the VM, the virtio data plane is mapped directly from the containerized application to the VF in the physical NIC.

Comparing the control plane of the VM and container use cases, both use the same virtio-net-pmd driver, but the APIs the driver uses for control differ:

- In the VM use case, QEMU exposes a virtio PCI device to the guest, so the virtio-net-pmd uses PCI commands on the control plane and QEMU translates them to the vDPA kernel APIs (system calls).

- In the container use case, the virtio-net-pmd invokes the vDPA kernel APIs (system calls) directly.

vDPA existing frameworks and some history

vDPA has basic support in the host DPDK via the standard vhost-user protocol (see "A journey to the vhost-users realm"). Note that we distinguish between the host DPDK part (such as OVS-DPDK or other DPDK daemons running on the host) and the guest DPDK (a PMD driver running in the guest/container).

An advantage of the vDPA DPDK (host) framework is that QEMU can leverage it to connect to the vDPA hardware as if it were just another vhost-user backend. Today there are already vDPA DPDK (host) drivers implemented by major NIC vendors.

Still, although the vDPA DPDK (host) framework enables applications to leverage vDPA HW, it has a number of limitations:

- The framework requires the DPDK library on the host side, which is one more dependency to take into account.

- Since vhost-user only provides userspace APIs, it can’t be connected to kernel subsystems. This means that the consumer of the vDPA interface loses kernel functionality such as eBPF support.

- Since DPDK focuses on the datapath, it doesn’t provide tooling for provisioning and controlling the HW. This also applies to the vDPA DPDK framework.

In order to address these limitations (and more) a vDPA kernel approach is required.

With the help of kernel vDPA support, there will be no extra dependencies on libraries such as DPDK. vDPA could then be used by either userspace drivers or kernel subsystems such as networking, block etc. Standard APIs such as sysfs or netlink could then be integrated into the vDPA solution avoiding the large overhead required for doing the same with the vDPA DPDK framework.

In our previous vDPA post ("How deep does the vDPA rabbit hole go?") the mdev-based approach for the kernel vDPA framework was introduced. mdev is the VFIO mediated device framework, which is used for translation between vendor specific commands and emulated PCI devices.

Following a number of discussions upstream, the community decided to drop the mdev approach for vDPA and develop a separate subsystem independent of VFIO. A few of the reasons for this change were:

- VFIO and mdev provide a low level abstraction of devices, whereas vDPA is based on virtio and provides a high level device abstraction. Introducing high level APIs into VFIO was not a natural approach.

- A number of NIC devices developed by the industry do not fit well into the VFIO IOMMU model, so supporting them for vDPA would have been challenging.

The vDPA kernel framework was then merged into the kernel as a separate subsystem (Linux mainline 5.7). In the next sections we will touch on the main parts of the architecture.

The vDPA kernel framework architecture

The main objective of the vDPA kernel framework is to hide the complexity of the hardware vDPA implementation and provide a safe and unified interface for both kernel and userspace subsystems to consume.

From the hardware perspective, vDPA could be implemented by several different types of devices. If vDPA is implemented through PCI, it could be a physical function (PF), a virtual function (VF) or other vendor specific slices of the PF, such as sub functions (SF). vDPA could also be implemented via a non-PCI device or even through a software emulated device. Note that each device may have its own vendor specific interfaces and DMA model, adding to the complexity.

As illustrated by the following figure, the vDPA framework abstracts the common attributes of vDPA hardware and leverages the existing vhost and virtio subsystem:

Figure: vDPA framework overview

The vhost subsystem is the historical data path implementation of virtio inside the kernel. It was used for emulating the datapath of virtio devices on the host side. It exposes mature userspace APIs for setting up the kernel data path through vhost devices (which are char devices). Various backends have been developed for different types of vhost devices (e.g. networking or SCSI). The vDPA kernel framework leverages these APIs through this subsystem.

The virtio subsystem is the historical virtio kernel driver framework used for connecting guests/processes to a virtio device. It was used for controlling emulated virtio devices on the guest side. Usually we have the virtio device on the host and the virtio driver on the guest, which combine to create the virtio interface. There are, however, use cases where we want both the virtio device and the virtio driver to run in the host kernel, such as XDP (eXpress Data Path) use cases. This basically enables running applications that leverage vDPA (via the vhost subsystem) and those that do not (via the virtio subsystem) on the same physical vDPA NIC.

For more details on virtio-net see our previous post, "Introduction to virtio-networking and vhost-net."

So how does the vDPA kernel framework actually work?

For userspace drivers, the vDPA framework presents a vhost char device. This allows the userspace vhost drivers to control vDPA devices as if they were vhost devices. Various userspace applications could be connected to vhost devices in this way. A typical use case is to connect a vDPA device with QEMU and use it as a vhost backend for virtio drivers running inside the guest.
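As a rough illustration of what controlling a vDPA device through a vhost char device could look like from a userspace program, the following Python sketch builds a few vhost ioctl request numbers and issues two of them on such a device. The device path `/dev/vhost-vdpa-0`, the helper names and the choice of ioctls are assumptions made for illustration. The request numbers follow the generic Linux ioctl encoding used on x86 and Arm (a few other architectures encode the direction bits differently), and the function simply returns `None` when no such device can be opened.

```python
import fcntl
import os
import struct

# Generic Linux ioctl encoding (asm-generic/ioctl.h). Assumption: x86/Arm;
# some architectures (e.g. powerpc, mips) use different direction bits.
_IOC_NONE, _IOC_WRITE, _IOC_READ = 0, 1, 2


def _ioc(direction, type_, nr, size):
    """Pack direction, argument size, ioctl type and number into one request."""
    return (direction << 30) | (size << 16) | (type_ << 8) | nr


VHOST_VIRTIO = 0xAF  # ioctl "type" shared by all vhost requests

VHOST_GET_FEATURES = _ioc(_IOC_READ, VHOST_VIRTIO, 0x00, 8)        # __u64
VHOST_SET_OWNER = _ioc(_IOC_NONE, VHOST_VIRTIO, 0x01, 0)           # no argument
VHOST_VDPA_GET_DEVICE_ID = _ioc(_IOC_READ, VHOST_VIRTIO, 0x70, 4)  # __u32


def query_vdpa_device(path="/dev/vhost-vdpa-0"):
    """Return the virtio device ID and feature bits, or None if unavailable.

    The default path is an assumption; adjust it to the device on your host.
    """
    try:
        fd = os.open(path, os.O_RDWR)
    except OSError:
        return None
    try:
        buf = bytearray(4)
        fcntl.ioctl(fd, VHOST_VDPA_GET_DEVICE_ID, buf)  # fills buf in place
        device_id = struct.unpack("=I", buf)[0]

        buf = bytearray(8)
        fcntl.ioctl(fd, VHOST_GET_FEATURES, buf)
        features = struct.unpack("=Q", buf)[0]
        return {"device_id": device_id, "features": features}
    finally:
        os.close(fd)


if __name__ == "__main__":
    print(query_vdpa_device())
```

A real userspace driver such as QEMU goes on to negotiate features, set up memory mappings and virtqueues, and drive the device status, none of which is shown here.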

For kernel virtio drivers, the vDPA framework presents a virtio device. This allows the kernel virtio drivers to control vDPA devices as if they were standard virtio devices. Various kernel subsystems could be connected to virtio devices for userspace applications to consume.

The vDPA framework is an independent subsystem which is designed to be flexible for implementing new hardware features. In order to support live migration, the framework supports saving and restoring device state via the existing vhost API. The framework could easily be extended to support hardware-capable dirty page tracking, or software-assisted dirty page tracking could be done by the userspace drivers. Thanks to the kernel integration, the vDPA framework could also be easily extended to support technologies such as SVA (Shared Virtual Address) and PASID (Process Address Space ID) when compared with other kernel subsystems (e.g. IOMMU).

The vDPA framework will not allow direct hardware register mapping except for the doorbell registers (used for notifying the HW that there is work to do). Supporting vDPA therefore requires a device mediation layer in userspace between the host and the guest, such as QEMU. Such an approach is safer than device passthrough (used in SR-IOV) since it minimizes the attack surface from general registers. The vDPA framework also fits more naturally with the new device slicing technologies such as Scalable IOV and sub function (SF), which require software mediation layers as well.

Adding vDPA hardware drivers to the vDPA framework

The vDPA kernel framework is designed to ease the development and integration of hardware vDPA drivers. This is illustrated in the following figure:

Figure: Adding vDPA driver to the vDPA framework

The vDPA framework abstracts the common attributes and configuration operations used by vDPA devices. Following this abstraction, a typical vDPA driver is required to implement the following capabilities:

- Device probing/removing: Vendor specific device probing and removing procedure.

- Interrupt processing: Vendor or platform specific allocation and processing of the interrupt.

- vDPA device abstraction: Implement the functions that are required by the vDPA framework, most of which are the translation between virtio control commands and the vendor specific device interface, and registering the vDPA device to the framework.

- DMA operation: For a device that has its own DMA translation logic, it can require the framework to pass DMA mappings to the driver for implementing vendor specific DMA translation.
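To make the "vDPA device abstraction" and "DMA operation" items above more concrete, here is a minimal Python model of a driver-side callback table. It is only a sketch: the callback names are modelled loosely on the kernel's `struct vdpa_config_ops`, while the `ToyNicDriver` class, its register names and its translation table are hypothetical and stand in for a vendor specific device interface. Device probing and interrupt handling are not modelled.

```python
from abc import ABC, abstractmethod

VIRTIO_ID_NET = 1             # virtio device ID of a network device
VIRTIO_F_VERSION_1 = 1 << 32  # feature bit 32: device follows virtio 1.0 or later


class VdpaConfigOps(ABC):
    """Toy stand-in for the callbacks a vDPA driver hands to the framework."""

    @abstractmethod
    def get_device_id(self): ...

    @abstractmethod
    def get_features(self): ...

    @abstractmethod
    def set_features(self, features): ...

    @abstractmethod
    def set_vq_address(self, idx, desc_addr, driver_addr, device_addr): ...

    @abstractmethod
    def kick_vq(self, idx): ...

    def dma_map(self, iova, size, pa):
        """Optional: only devices with their own DMA translation override this."""
        raise NotImplementedError("device relies on the platform IOMMU")


class ToyNicDriver(VdpaConfigOps):
    """Hypothetical vendor driver translating virtio commands to 'registers'."""

    def __init__(self):
        self.regs = {}   # hypothetical vendor register file
        self.iotlb = {}  # hypothetical on-device translation table

    def get_device_id(self):
        return VIRTIO_ID_NET

    def get_features(self):
        return VIRTIO_F_VERSION_1

    def set_features(self, features):
        unsupported = features & ~self.get_features()
        if unsupported:
            raise ValueError(f"unsupported feature bits: {unsupported:#x}")
        self.regs["FEATURES"] = features

    def set_vq_address(self, idx, desc_addr, driver_addr, device_addr):
        # Translate the generic virtqueue layout into vendor registers.
        self.regs[f"VQ{idx}_DESC"] = desc_addr
        self.regs[f"VQ{idx}_DRIVER"] = driver_addr
        self.regs[f"VQ{idx}_DEVICE"] = device_addr

    def kick_vq(self, idx):
        # Ring the (hypothetical) doorbell for virtqueue idx.
        self.regs["DOORBELL"] = idx

    def dma_map(self, iova, size, pa):
        # Vendor specific DMA translation: remember the IOVA -> PA mapping.
        self.iotlb[iova] = (size, pa)
```

In this model the framework only ever talks to `VdpaConfigOps`, so everything vendor specific stays inside the driver class.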

Since the datapath is offloaded to the vDPA hardware, the hardware vDPA driver becomes thin and simple to implement. The userspace vhost drivers or kernel virtio drivers control and set up the hardware datapath via vhost ioctls or virtio bus commands (depending on the subsystem in use). The vDPA framework then forwards the virtio/vhost commands to the hardware vDPA drivers, which implement those commands in a vendor specific way.

The vDPA framework will also relay the interrupts from vDPA hardware to the userspace vhost drivers and kernel virtio drivers. Doorbell and interrupt passthrough will be supported by the framework as well, to achieve native device performance.
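The forwarding described above can be pictured with a small Python model in which two front ends, one vhost-like and one virtio-like, end up calling the same driver callbacks. This is a simplified sketch under stated assumptions: the class names are hypothetical, requests are identified by the names of the corresponding vhost ioctls rather than by real request numbers, and only two operations are modelled.

```python
VIRTIO_CONFIG_S_ACKNOWLEDGE = 1  # guest has noticed the device
VIRTIO_CONFIG_S_DRIVER = 2       # guest knows how to drive the device


class RecordingDriver:
    """Hypothetical vendor driver that just records the calls it receives."""

    def __init__(self):
        self.calls = []

    def set_status(self, status):
        self.calls.append(("set_status", status))

    def set_vq_num(self, idx, num):
        self.calls.append(("set_vq_num", idx, num))


class VhostPath:
    """Userspace path: ioctl-style requests are dispatched to driver callbacks."""

    def __init__(self, ops):
        self.ops = ops

    def ioctl(self, request, *args):
        handlers = {
            "VHOST_VDPA_SET_STATUS": self.ops.set_status,
            "VHOST_SET_VRING_NUM": self.ops.set_vq_num,
        }
        if request not in handlers:
            raise OSError(f"unsupported request: {request}")
        handlers[request](*args)


class VirtioPath:
    """Kernel path: virtio bus operations call the same driver callbacks."""

    def __init__(self, ops):
        self.ops = ops

    def set_status(self, status):
        self.ops.set_status(status)

    def setup_vq(self, idx, num):
        self.ops.set_vq_num(idx, num)


if __name__ == "__main__":
    status = VIRTIO_CONFIG_S_ACKNOWLEDGE | VIRTIO_CONFIG_S_DRIVER

    via_vhost = RecordingDriver()
    vhost = VhostPath(via_vhost)
    vhost.ioctl("VHOST_VDPA_SET_STATUS", status)
    vhost.ioctl("VHOST_SET_VRING_NUM", 0, 256)

    via_virtio = RecordingDriver()
    virtio = VirtioPath(via_virtio)
    virtio.set_status(status)
    virtio.setup_vq(0, 256)

    # Both paths reach the driver through the same callbacks.
    print(via_vhost.calls == via_virtio.calls)
```

The point of the model is that the driver does not need to know which of the two subsystems issued a command.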

Summary

The purpose of this post was to provide a high level overview of the vDPA kernel framework.

We started by providing a short recap on the vDPA solution. We then briefly discussed the shortcomings of the existing vDPA userspace framework (DPDK based) and the historical mdev based kernel vDPA framework which had been rejected by the community.

We then proceeded to provide an overview of the new independent kernel vDPA framework, which hides the complexity of and differences between vDPA hardware and provides a safe and unified way for drivers to access it. The integration of future hardware features was also described briefly.

We concluded by providing some details on what vDPA HW drivers look like and what capabilities they are expected to provide.

In the next posts we will dive into the technical details of the vDPA framework and show how things actually work under the hood. For example, we will discuss the vDPA bus, integration with QEMU/containers, supporting HW with/without IOMMU, and more.

About the authors

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of qemu networking subsystem. Co-maintainer of Linux virtio, vhost and vdpa driver.