You may have seen some posts that introduce Performance Co-Pilot (PCP). Here, we will focus on some questions we hear from customers while they are looking at PCP for the first time.

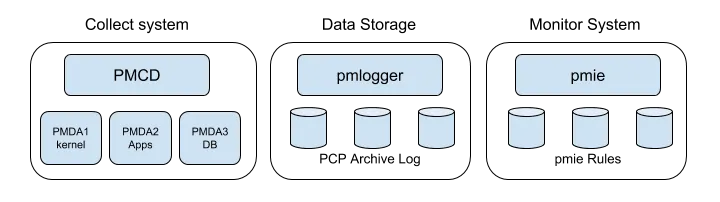

If you are familiar with other monitoring tools, PCP should not be hard to understand. First, let’s look at how PCP maps to a generic monitoring system. In general, a monitoring system has three layers. The lowest layer collects a variety of performance metrics, e.g. CPU, memory, and I/O data.

The second layer stores the data and analyzes it, either in real time or retrospectively from an archive. The uppermost layer consists of monitoring and alarms, reporting, and resource views, which react to predefined thresholds and “visualize” the results of the analysis.

Like many monitoring systems, PCP has its own daemons to perform the jobs of these three layers. Corresponding to the first layer, the Performance Metrics Collection Daemon (PMCD) coordinates the gathering and exporting of performance statistics in response to requests from the PCP monitoring tools.

After the data is collected, it can be sent to the Performance Metrics Inference Engine (PMIE) for analysis, and the data can also be archived by the Performance Metrics Logger (PMLOGGER). Furthermore, a performance dashboard of a given system can be generated with the Performance Metrics Chart (PMCHART) utility. The diagram generated by PMCHART is somewhat raw. If you need a more elegant diagram, there are other graphical options for you.

Logging Interval

Now, let’s consider some questions commonly asked by customers. The first one that caught my attention is “How can I choose the logging interval?” Customers may say, “I want to closely monitor an application. Can I have it sample every second?” The answer is yes. But is this necessary?

By default, PCP samples and archives performance metrics data every 60 seconds. In most scenarios, this is sufficient. For comparison, sar, a tool commonly used by enterprise customers, captures metrics every 10 minutes by default.
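To put these intervals in perspective, here is a minimal sketch that counts how many samples per day each interval produces. The function name `samples_per_day` is only illustrative, and the sketch assumes one sample per interval with no gaps.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def samples_per_day(interval_seconds: int) -> int:
    """Number of samples taken in one day at a fixed sampling interval."""
    return SECONDS_PER_DAY // interval_seconds


# Intervals mentioned in this article, in seconds.
intervals = [
    ("sar default (10 min)", 600),
    ("PCP default (60 s)", 60),
    ("PCP at 10 s", 10),
    ("PCP at 1 s", 1),
]

for label, interval in intervals:
    print(f"{label:22} {samples_per_day(interval):>6} samples/day")
```

Moving from the 60-second default to one-second sampling multiplies the number of samples (and, roughly, the data written) by 60, which is why it is worth asking whether that level of detail is really needed.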

However, when servers experience intermittent storage performance issues, we can reduce the PCP sampling interval to 10 seconds for the servers that need more detailed logging. This is done by editing /etc/pcp/pmlogger/control (or /etc/pcp/pmlogger/control.d/local in newer PCP versions) and appending -t 10s to the line for LOCALHOSTNAME, e.g.

    # local primary logger
    #
    # (LOCALHOSTNAME is expanded to local: in the first column,
    # and to `hostname` in the fourth (directory) column.)
    #
    LOCALHOSTNAME y n PCP_LOG_DIR/pmlogger/LOCALHOSTNAME -r -T24h10m -c config.default -t 10s
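If the same change has to be made on several servers, the edit can be scripted. The following is a minimal sketch, not a PCP tool: the helper `set_logging_interval` is hypothetical, it works on the text of a control file rather than on the file itself, and it assumes the entry has no `-t` option yet (it does not detect a joined form such as `-t10s`).

```python
def set_logging_interval(control_text: str, interval: str = "10s") -> str:
    """Append '-t <interval>' to the LOCALHOSTNAME entry of a pmlogger control file."""
    out = []
    for line in control_text.splitlines():
        fields = line.split()
        # Only touch the active LOCALHOSTNAME entry; comment lines start with '#'.
        # '-T24h10m' is a different token, so it does not count as '-t'.
        if fields and fields[0] == "LOCALHOSTNAME" and "-t" not in fields:
            line = f"{line.rstrip()} -t {interval}"
        out.append(line)
    return "\n".join(out) + "\n"


# Sample input modeled on the control file entry shown in this article.
sample = """# local primary logger
LOCALHOSTNAME y n PCP_LOG_DIR/pmlogger/LOCALHOSTNAME -r -T24h10m -c config.default
"""

print(set_logging_interval(sample), end="")
```

Running the function a second time leaves the text unchanged, so it is safe to apply repeatedly. The pmlogger service still has to be restarted before a new interval takes effect.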

That said, shorten the interval only when there is a reason to do so. Otherwise, it adds system overhead unnecessarily.

System Overhead

Speaking of system overhead, here we come to another question I hear from customers: “How does PCP affect my existing systems?”

Before we go further, let’s see which factors we should take into consideration while talking about system overhead of PCP:

- The number of managed hosts
- The logging interval (e.g. every 10 seconds)
- How long historical data is retained (especially how this affects disk usage)
- The number of performance metrics monitored

Without knowing these factors, it is impossible to accurately assess the performance impact of PCP, so they should be settled before the overhead is evaluated.

However, the PCP daemons are generally very lightweight in terms of computational demand; most of the (very small) CPU cost is associated with extracting performance metrics at the PCP collector system (PMCD and the PMDAs).

A local logger daemon (pmlogger) consumes disk bandwidth and disk space on the PCP collector system. A remote logger daemon consumes disk space on the host where it is running and network bandwidth between that host and the PCP collector host. It is also worth mentioning that the archive logs typically grow at a rate of anywhere from a few kilobytes (KB) to tens of megabytes (MB) per day, depending on how many performance metrics are logged and the choice of sampling frequencies.

The archived metrics data can be found under /var/log/pcp/pmlogger/<hostname>. The following table is an example from my test server:

| Date | Size (MB) |
| --- | --- |
| 2018-10-13 | 2.6 (compressed) |
| 2018-10-14 | 3.2 (compressed) |
| 2018-10-15 | 23.5 |
| 2018-10-16 | 23.9 |
| 2018-10-17 | 23.2 |

Each entry corresponds to three log files for one day. The log files are named <date of the day>.meta, <date of the day>.index and <date of the day>.0, for example 20181017.meta, 20181017.index and 20181017.0. From the table above, we can see that the uncompressed archive log grows by approximately 23 MB per day on average.

To conserve disk space, the log file <date of the day>.0 is compressed and renamed to <date of the day>.0.xz after three days by default. The number of days before log files are compressed can be set with the -x option of the pmlogger_daily utility in /etc/cron.d/pcp-pmlogger, as follows:
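The figures from my test server can be turned into a rough disk-space estimate. The sketch below is only an approximation under stated assumptions: the five days in the table are representative, archives are compressed once they are three days old, and the 14-day retention period is an illustrative value, not a measured one. The function name `estimate_footprint_mb` is hypothetical.

```python
# Daily archive sizes in MB, taken from the table in this article.
uncompressed_mb = [23.5, 23.9, 23.2]
compressed_mb = [2.6, 3.2]


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def estimate_footprint_mb(retention_days: int, compress_after_days: int = 3) -> float:
    """Rough disk footprint for one host: recent days uncompressed, older days compressed."""
    recent_days = min(retention_days, compress_after_days)
    older_days = retention_days - recent_days
    return recent_days * mean(uncompressed_mb) + older_days * mean(compressed_mb)


print(f"average uncompressed day: {mean(uncompressed_mb):.1f} MB")
print(f"average compressed day:   {mean(compressed_mb):.1f} MB")
print(f"estimated 14-day footprint: {estimate_footprint_mb(14):.1f} MB")
```

With these numbers, 14 days of archives for one host come to roughly 100 MB, most of it from the three most recent, still uncompressed days.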

    #
    # Performance Co-Pilot crontab entries for a monitored site
    # with one or more pmlogger instances running
    #
    # daily processing of archive logs (with compression enabled)
    10 0 * * * pcp /usr/libexec/pcp/bin/pmlogger_daily -X xz -x 3
    # every 30 minutes, check pmlogger instances are running
    25,55 * * * * pcp /usr/libexec/pcp/bin/pmlogger_check -C

This is particularly useful for large numbers of pmlogger processes under the control of pmlogger_check.
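To see how the three-day setting plays out against the archive dates in the table, here is a small sketch. It is not how pmlogger_daily is implemented. It assumes that an archive qualifies for compression once it is at least three days old, and that the listing was taken on 2018-10-17; both are assumptions made for illustration, as is the helper name `archives_to_compress`.

```python
from datetime import date


def archives_to_compress(archive_dates, today, compress_after_days=3):
    """Return the archive dates that are at least compress_after_days old."""
    return [d for d in archive_dates if (today - d).days >= compress_after_days]


# One archive per day, 2018-10-13 through 2018-10-17, as in the table.
archives = [date(2018, 10, day) for day in range(13, 18)]

due = archives_to_compress(archives, today=date(2018, 10, 17))
print([d.strftime("%Y%m%d") for d in due])
```

Under these assumptions, the archives for 2018-10-13 and 2018-10-14 are selected, which matches the two compressed entries in the table.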

Many customers also ask: "Do you have any reference guides that contain use cases or solutions for PCP?"

Since every system is different, it is hard to write a manual that lays out a step-by-step deployment. Once you have answered the questions presented here, you will be in a good position to design and deploy your own PCP monitoring solution.

About the author

Edward Jin has been working in IT for more than 12 years. He has a strong service mindset, a design-thinking approach, and insight into IT systems and processes in the pharmaceutical, manufacturing, and finance industries. Currently, he is helping customers on their digital journey using open source technology.
