Written by Jiri Benc, Senior Software Engineer, Networking Services, Linux kernel, and Open vSwitch

By introducing a connection tracking feature in Open vSwitch, thanks to the latest Linux kernel, we greatly simplified the maze of virtual network interfaces on OpenStack compute nodes and improved its networking performance. This feature will appear soon in Red Hat OpenStack Platform.

Introduction

It goes without saying that in the modern world, we need firewalling to protect machines from hostile environments. Any non-trivial firewalling requires you to keep track of the connections to and from the machine. This is called "stateful firewalling". Indeed, even such a basic rule as "don't allow machines from the Internet to connect to the machine while allowing the machine itself to connect to servers on the Internet" requires a stateful firewall. This also applies to virtual machines. And obviously, any serious cloud platform needs such protection.

Stateful Firewall in OpenStack

It's no surprise that OpenStack implements stateful firewalling for guest VMs. It's the core of its Security Groups feature. It allows the hypervisor to protect virtual machines from unwanted traffic.

As mentioned, for stateful firewalling the host (OpenStack node) needs to keep track of individual connections and be able to match packets to those connections. This is called connection tracking, or "conntrack". Note that connections are a different concept from flows: connections are bidirectional and need to be established, while flows are unidirectional and stateless.
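To make the difference between flows and connections concrete, here is a minimal sketch in Python. It is not how the kernel's conntrack is implemented; the `Packet` type, the key functions and the `StatefulFirewall` class are hypothetical names made up for illustration, and real connection tracking also follows protocol state (for example the TCP handshake) and expires idle entries.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str = "tcp"


def flow_key(pkt):
    """A flow is unidirectional: source and destination keep their order."""
    return (pkt.proto, pkt.src_ip, pkt.src_port, pkt.dst_ip, pkt.dst_port)


def conn_key(pkt):
    """A connection is bidirectional: both directions yield the same key."""
    endpoints = sorted([(pkt.src_ip, pkt.src_port), (pkt.dst_ip, pkt.dst_port)])
    return (pkt.proto,) + tuple(endpoints)


class StatefulFirewall:
    """Allow connections opened by the protected machine, plus their replies."""

    def __init__(self, local_ip):
        self.local_ip = local_ip
        self.connections = set()  # the (very simplified) conntrack table

    def allow(self, pkt):
        key = conn_key(pkt)
        if key in self.connections:
            return True  # packet belongs to a tracked connection
        if pkt.src_ip == self.local_ip:
            self.connections.add(key)  # new outbound connection: track it
            return True
        return False  # new inbound connection: reject


fw = StatefulFirewall("10.0.0.5")
request = Packet("10.0.0.5", 40000, "203.0.113.7", 443)
reply = Packet("203.0.113.7", 443, "10.0.0.5", 40000)
unsolicited = Packet("203.0.113.7", 443, "10.0.0.5", 22)

for label, pkt in [("request", request), ("reply", reply), ("unsolicited", unsolicited)]:
    print(label, "allowed" if fw.allow(pkt) else "dropped")
```

The request and its reply are two different flows but one connection, which is why a purely flow-based (stateless) rule set cannot tell the reply apart from the unsolicited packet.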

Let's add Open vSwitch to the picture. Open vSwitch is an advanced programmable software switch. Neutron uses it for OpenStack networking - to connect virtual machines together and to create an overlay network connecting the nodes. (For completeness, backends other than Open vSwitch are available; however, Open vSwitch offers the most features and performance due to its flexibility, and it's considered the "main" backend by many.)

However, packet switching in the Open vSwitch datapath is based on flows and solely on flows. Traditionally, it has been stateless. Not a good situation when we need a stateful firewall.

Bending iptables to Our Will

There's a way out of this. The Linux kernel contains a connection tracking module and it can be used to implement a stateful firewall. However, these features were available only to the Linux kernel firewall at the IP protocol layer (called "iptables"). And that's a problem: Open vSwitch does not operate at the IP protocol layer (also called L3); it operates one layer below (called L2). In other words, not all packets processed by the kernel are subject to iptables processing. In order for a packet to be processed by iptables, it needs either to be destined to an IP address local to the host or to be routed by the host. Packets which are switched (either by a Linux bridge or by Open vSwitch) are not processed by iptables.

OpenStack needs the VMs to be on the same L2 segment, i.e. packets between them are switched. In order to still make use of iptables to implement a stateful firewall, it used a trick.

The Linux bridge (the traditional software switch included in the Linux kernel) contains its own filtering mechanism called ebtables. While connection tracking cannot be used from within ebtables, by setting appropriate system config parameters it's possible to call iptables chains from ebtables. By using this technique, it's possible to make use of connection tracking even when doing L2 packet switching.
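The article doesn't name the config parameters involved. As an assumption, the sketch below checks the `net.bridge.bridge-nf-call-iptables` sysctl, which on Linux is exposed under `/proc/sys/net/bridge` when the `br_netfilter` module is loaded. The function name `bridge_calls_iptables` is made up for this example.

```python
from pathlib import Path

# Assumption: the relevant setting is the bridge netfilter sysctl. The
# directory only exists while the br_netfilter kernel module is loaded.
BRIDGE_NF_DIR = Path("/proc/sys/net/bridge")


def bridge_calls_iptables(sysctl_dir=BRIDGE_NF_DIR):
    """Return True/False for bridge-nf-call-iptables, or None if unavailable."""
    try:
        value = (Path(sysctl_dir) / "bridge-nf-call-iptables").read_text()
    except OSError:
        return None  # module not loaded, or not a Linux host
    return value.strip() == "1"


state = bridge_calls_iptables()
if state is None:
    print("bridge netfilter sysctls are not available on this host")
else:
    print("bridged packets are passed to iptables:", state)
```

Reading the value needs no special privileges; changing it does, and is left out of the sketch on purpose.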

Now, the obvious question is where to put this on the OpenStack packet traversal path.

The heart of every OpenStack node is the so-called "integration bridge", br-int. In a typical deployment, br-int is implemented using Open vSwitch. It's responsible for directing packets between VMs, tunneling them between nodes and some other tasks. Thus, every VM is connected to an integration bridge.

The stateful firewall needs to be inserted between the VM and the integration bridge. We want to make use of iptables, which means inserting a Linux bridge between the VM and the integration bridge. That bridge needs to have the correct settings applied to call iptables and iptables rules need to be populated to utilize conntrack and do the necessary firewalling.

How It Looks

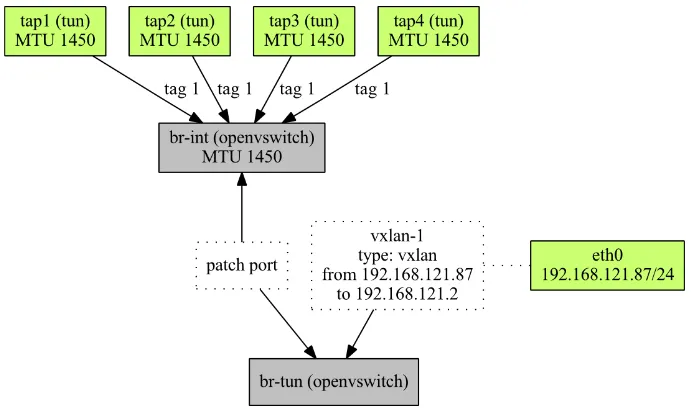

Looking at the picture above, let's examine how a packet from VM to VM traverses the network stack.

The first VM is connected to the host through the tap1 interface. A packet coming out of the VM is then directed to the Linux bridge qbr1. On that bridge, ebtables calls into iptables, where the incoming packet is matched against the configured rules. If the packet is approved, it passes the bridge and is sent out to the second interface connected to the bridge. That's qvb1, which is one side of a veth pair.

A veth pair is a pair of interfaces that are internally connected to each other. Whatever is sent to one of the interfaces is received by the other one and vice versa. Why is the veth pair needed here? Because we need something that can interconnect the Linux bridge and the Open vSwitch integration bridge.

Now the packet has reached br-int and is directed to the second VM. It goes out of br-int to qvo2, then through qvb2 it reaches the bridge qbr2. The packet goes through ebtables and iptables and finally reaches tap2, which is the target VM.

This is obviously very complex. All those bridges and interfaces add cost in extra CPU processing and extra latency. Performance suffers.

Connection Tracking in Open vSwitch to the Rescue

All of this can be dramatically simplified. If only we could include the connection tracking directly in Open vSwitch...

And that's exactly what happened. Recently, the connection tracking code in the kernel was decoupled from iptables and Open vSwitch got support for conntrack. Now it's possible to match not only on flows but also on connections. Jakub Libosvar (Red Hat) made use of this new feature in Neutron.

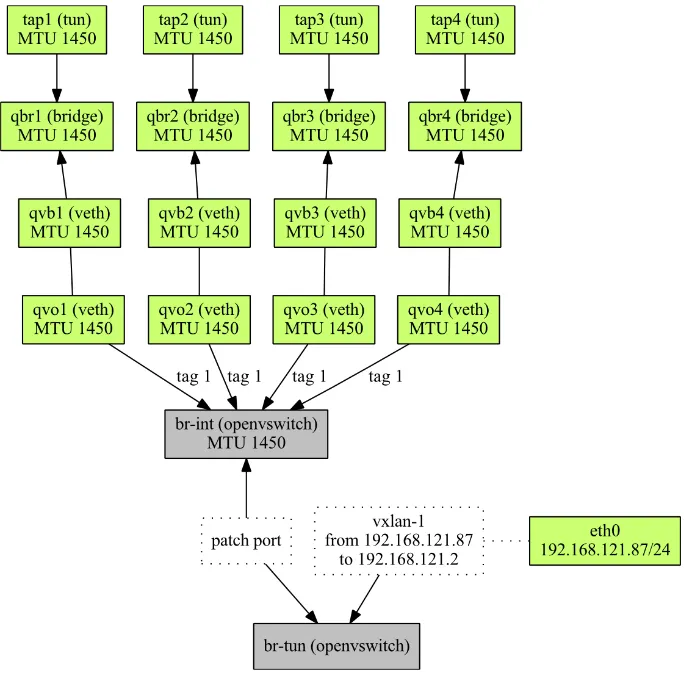

Now, VMs can connect directly to the integration bridge and the stateful firewall is implemented using Open vSwitch rules alone.

Let's examine the new, improved situation in the second picture above.

A packet coming out of the first VM (tap1) is directed to br-int. It's examined using the configured rules and either dropped or directly output to the second VM (tap2).
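The two VM-to-VM paths described in this article can be written down side by side. The snippet below is only a bookkeeping aid, not a measurement; the device names come from the text, except qvo1, which the article does not name and which is assumed here by symmetry with qvo2.

```python
# Devices a packet visits on its way from the first VM to the second one.
# qvo1 is an assumption (the peer of qvb1), mirrored from qvo2/qvb2.
PATH_WITH_IPTABLES = [
    "tap1", "qbr1", "qvb1", "qvo1", "br-int", "qvo2", "qvb2", "qbr2", "tap2",
]
PATH_WITH_OVS_CONNTRACK = ["tap1", "br-int", "tap2"]


def intermediate_hops(path):
    """Everything between the two VM tap interfaces."""
    return path[1:-1]


def removed_devices(old_path, new_path):
    """Devices present on the old path that the new path no longer needs."""
    return [dev for dev in old_path if dev not in new_path]


for name, path in [
    ("iptables via Linux bridge", PATH_WITH_IPTABLES),
    ("Open vSwitch conntrack", PATH_WITH_OVS_CONNTRACK),
]:
    hops = len(intermediate_hops(path))
    print(f"{name}: {' -> '.join(path)} ({hops} intermediate devices)")

print("no longer needed:", ", ".join(removed_devices(PATH_WITH_IPTABLES, PATH_WITH_OVS_CONNTRACK)))
```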

This substantially reduces packet processing costs and thus increases performance. The following overhead was eliminated:

- Packet enqueueing on veth pair: A packet sent to a veth endpoint is put on a queue, then dequeued and processed later.

- Bridge processing on per-VM bridge: Each packet traversing the bridge is subject to FDB (forwarding database) processing.

- ebtables overhead: We measured that just enabling ebtables, without any rules configured, reduces the bridge throughput. Generally, ebtables is considered obsolete and doesn't receive much work, especially not performance work.

- iptables overhead: There is no concept of per-interface rules in iptables; iptables rules are global. This means that for every packet, the incoming interface needs to be checked and execution branched to the set of rules appropriate for that particular interface. This means a linear search using interface name matches, which is very costly, especially with a high number of VMs.

In contrast, by using Open vSwitch conntrack, the first three items on this list are gone instantly. Open vSwitch also has only global rules, so we still need to match on the incoming interface, but unlike iptables, the lookup is done using a port number (not a textual interface name) and, more importantly, using a hash table. The per-interface lookup overhead described in the fourth item is thus completely eliminated, too.
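The difference between the two lookup strategies can be sketched in a few lines of Python. This is a toy model, not the actual iptables or Open vSwitch code: the function names, the `tapN` interface names and the `chain-vmN` labels are all made up for illustration.

```python
def iptables_style_dispatch(rules, in_ifname):
    """Walk a global rule list until an interface name matches: O(n) string compares."""
    comparisons = 0
    for ifname, chain in rules:
        comparisons += 1
        if ifname == in_ifname:
            return chain, comparisons
    return None, comparisons


def ovs_style_dispatch(table, in_port):
    """One hash lookup keyed by the numeric port: O(1) on average."""
    return table.get(in_port)


n_vms = 1000
rules = [(f"tap{i}", f"chain-vm{i}") for i in range(n_vms)]  # ordered list
table = {i: f"chain-vm{i}" for i in range(n_vms)}            # hash table

chain, comparisons = iptables_style_dispatch(rules, f"tap{n_vms - 1}")
print(f"linear search: {chain} after {comparisons} name comparisons")
print(f"hash lookup:   {ovs_style_dispatch(table, n_vms - 1)} after one lookup")
```

In the linear model, the cost of reaching a VM's rules grows with the number of VMs on the node; in the hashed model it stays roughly constant.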

The only remaining overhead is of the firewall rules themselves.

In Summary

Without Open vSwitch conntrack:

- A Linux bridge needs to be inserted between a VM and the integration bridge.

- This bridge is connected to the integration bridge by a veth pair.

- Packets traversing the bridge are processed by ebtables and iptables, implementing the stateful firewall.

- There's a substantial performance penalty caused by veth, bridge, ebtables and iptables overhead.

With Open vSwitch conntrack:

- VMs are connected directly to the integration bridge.

- The stateful firewall is implemented directly at the integration bridge using hash tables.

Images were captured on a real system using plotnetcfg and simplified to better illustrate the points of this article.
