Peer-pods extends Red Hat OpenShift sandboxed containers to run in any environment without requiring bare-metal servers or nested virtualization support. It does this by extending the Kata Containers runtime (OpenShift sandboxed containers is built on Kata Containers) to handle virtual machine (VM) lifecycle management through cloud provider APIs (AWS, Azure, and others) or a third-party hypervisor API such as VMware vSphere. The peer-pods solution is also the foundation for confidential containers on OpenShift.

Currently, OpenShift does not support Container Storage Interface (CSI) persistent volumes for the peer-pods solution. However, some alternatives are available depending on your environment and use case. For example, suppose you have configured the peer-pods solution on Azure and have a workload that needs to process data stored in Azure Blob Storage, Microsoft's object storage solution for the cloud. In that case, you can use Azure BlobFuse.

BlobFuse is a virtual file system driver for Azure Blob Storage that lets you mount blob storage as a directory inside the pod. BlobFuse2 is the latest version of BlobFuse.

This article demonstrates how to use Azure BlobFuse to access blob data in workloads deployed with OpenShift sandboxed containers. The workflow applies to all BlobFuse versions, but the sample commands have to name a specific version, and they use BlobFuse2 (the blobfuse2 command).

Topology overview

Azure Blob Storage has three types of resources:

- The storage account

- A container in the storage account

- A blob in a container

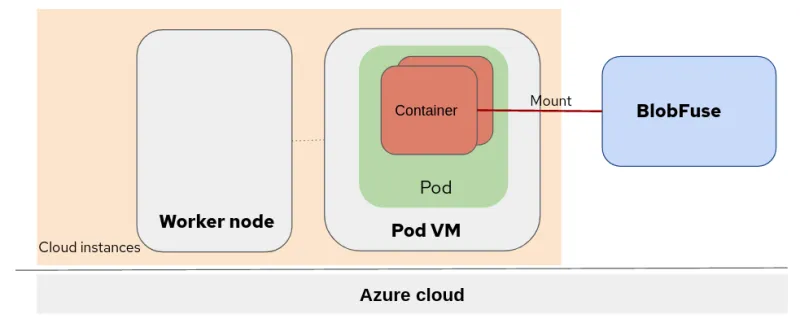

As the topology diagram shows, you must create a storage account and a container before you can use BlobFuse.
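Because each blob lives in a container, and each container lives in a storage account, every blob is addressed by a URL that nests these three levels. The sketch below composes that URL. It assumes the default public Azure endpoint suffix `blob.core.windows.net`; sovereign clouds use different suffixes. The function name `blob_url` and the blob name `data/input.csv` are hypothetical.

```shell
#!/bin/sh
# blob_url (hypothetical helper): print the URL of a blob in the default
# public Azure cloud from the three-level hierarchy
# storage account -> container -> blob.
# The blob name is not percent-encoded, so keep it to URL-safe characters.
blob_url() {
    account="$1"    # storage account name, for example peerpodsstorage
    container="$2"  # container name, for example blobfuse-container
    blob="$3"       # blob name; '/' in the name acts as a virtual directory
    printf 'https://%s.blob.core.windows.net/%s/%s\n' "$account" "$container" "$blob"
}

# Prints https://peerpodsstorage.blob.core.windows.net/blobfuse-container/data/input.csv
blob_url peerpodsstorage blobfuse-container data/input.csv
```

After the container is mounted with BlobFuse, a blob with this hypothetical name would appear as the file data/input.csv under the mount point.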

Peer-pods with Blob Storage topology

This image shows the topology of Red Hat OpenShift sandboxed containers peer-pods with Azure Blob Storage mounted using BlobFuse:

In this example, OpenShift sandboxed containers is deployed on Azure cloud instances (VMs). The OpenShift worker nodes and the pod VM run at the same virtualization level. Blob Storage is mounted into the container running inside the pod VM.

Setting up Blob Storage on Azure and OpenShift

To follow along, you must have the OpenShift sandboxed containers operator installed with peer-pods. If you don't have that yet, follow the official documentation for setup instructions.

Create a storage account in Azure

First, create an Azure storage account. The creation page has several tabs, including Basics, Advanced, Networking, Data protection, Encryption, and Tags, so you can configure the storage account to meet your requirements.

- Select Home, select Storage accounts under Azure services, and then click Create.

- In the Basics tab, under Project details, enter values for the Subscription and Resource group.

- Enter a Storage account name (for example, peerpodsstorage), and select a region.

- Keep the remaining settings at their defaults, click Review, and then click Create.
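Azure requires storage account names to be 3 to 24 characters long and to contain only lowercase letters and numbers. Names must also be globally unique, which only Azure can check. A minimal local pre-check follows; the function name `valid_storage_account_name` is hypothetical.

```shell
#!/bin/sh
# valid_storage_account_name (hypothetical helper): succeed only if $1 is
# 3-24 characters of lowercase letters and digits. Global uniqueness is
# not checked here; Azure enforces it when the account is created.
valid_storage_account_name() {
    name="$1"
    # ${#name} is the number of characters in $name
    if [ "${#name}" -lt 3 ] || [ "${#name}" -gt 24 ]; then
        return 1
    fi
    # The characters are listed explicitly instead of a-z so that the
    # check does not depend on locale collation rules.
    case "$name" in
        *[!abcdefghijklmnopqrstuvwxyz0123456789]*) return 1 ;;
    esac
    return 0
}

valid_storage_account_name peerpodsstorage && echo "peerpodsstorage is a valid name"
```

A name that passes this check is also what you would pass to `az storage account create --name` if you create the account with the Azure CLI instead of the portal.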

Create a container under your storage account

Next, create a container in the storage account. For more details about containers, see the Azure Blob Storage documentation.

- Go to the storage account you created (for example, peerpodsstorage) and select Containers under Data storage.

- Select + Container and enter a name in the Name field (for example, blobfuse-container).

- Click Create to finish creating the container.
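Container names have stricter rules than storage account names:

- They must be 3 to 63 characters long.
- They can contain only lowercase letters, numbers, and hyphens.
- They must start and end with a letter or number.
- They cannot contain consecutive hyphens.

The sketch below encodes these rules as a local pre-check. The function name `valid_container_name` is hypothetical.

```shell
#!/bin/sh
# valid_container_name (hypothetical helper): Azure Blob Storage container
# naming rules. The name must be 3-63 characters of lowercase letters,
# digits, and hyphens, and must start and end with a letter or digit.
# Every hyphen must sit between two letters or digits (no "--").
valid_container_name() {
    name="$1"
    if [ "${#name}" -lt 3 ] || [ "${#name}" -gt 63 ]; then
        return 1
    fi
    case "$name" in
        # Any character outside the allowed set ('-' last = literal hyphen)
        *[!abcdefghijklmnopqrstuvwxyz0123456789-]*) return 1 ;;
        # Leading or trailing hyphen
        -*|*-) return 1 ;;
        # Consecutive hyphens
        *--*) return 1 ;;
    esac
    return 0
}

valid_container_name blobfuse-container && echo "blobfuse-container is a valid name"
```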

Create a secret with the required authentication details

The user mounting the container must be authorized to access the storage account. BlobFuse2 can read the authorization details from a configuration file or from environment variables. This article stores the Azure storage account authorization details in a Kubernetes secret and exposes them to the pod as environment variables.

You must explicitly specify the storage account and container to use. This article uses the peerpodsstorage storage account (AZURE_STORAGE_ACCOUNT) and the blobfuse-container container (AZURE_STORAGE_ACCOUNT_CONTAINER) created in the previous steps.
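If `az storage account keys list` fails or a variable name is mistyped, the unquoted heredoc that generates storage-secret.yaml still writes the file. The affected values are simply empty. A small guard catches this before the secret is applied. Run it after the `export` lines and before generating storage-secret.yaml. The function `require_vars` is a hypothetical helper and assumes the variable names passed to it are trusted.

```shell
#!/bin/sh
# require_vars (hypothetical helper): fail if any named environment
# variable is unset or empty, reporting each problem on stderr.
require_vars() {
    missing=0
    for var in "$@"; do
        # Reject anything that is not a shell identifier, so eval stays safe
        case "$var" in
            ''|[!A-Za-z_]*|*[!A-Za-z0-9_]*)
                echo "error: '$var' is not a valid variable name" >&2
                missing=1
                continue ;;
        esac
        # Indirect lookup of the variable named in $var (POSIX sh has no ${!var})
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "error: $var is not set or empty" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example, after the export lines:
# require_vars AZURE_STORAGE_ACCOUNT AZURE_STORAGE_ACCOUNT_CONTAINER AZURE_STORAGE_ACCESS_KEY
```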

```shell
# Storage account and container created in the previous steps
export AZURE_STORAGE_ACCOUNT=peerpodsstorage
export AZURE_STORAGE_ACCOUNT_CONTAINER=blobfuse-container

# Retrieve the first access key of the storage account
export AZURE_STORAGE_ACCESS_KEY=$(az storage account keys list --account-name "$AZURE_STORAGE_ACCOUNT" --query "[0].value" -o tsv)

# Generate the secret manifest (the unquoted EOF lets the shell expand the variables)
cat > storage-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: storage-secret
type: Opaque
stringData:
  AZURE_STORAGE_ACCOUNT: "${AZURE_STORAGE_ACCOUNT}"
  AZURE_STORAGE_ACCOUNT_TYPE: block
  AZURE_STORAGE_ACCOUNT_CONTAINER: "${AZURE_STORAGE_ACCOUNT_CONTAINER}"
  AZURE_STORAGE_AUTH_TYPE: key
  AZURE_STORAGE_ACCESS_KEY: "${AZURE_STORAGE_ACCESS_KEY}"
EOF

oc apply -f storage-secret.yaml
```

Start a pod

There are two ways to use BlobFuse-mounted volumes in peer-pods containers. You can run blobfuse2 before the application workload starts, or you can run it in a container lifecycle hook.

Example 1: Use blobfuse before the application workload

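Create a pod from the following hello-openshift.yaml file. The container command runs three steps before the application workload starts:

- It creates the /dev/fuse character device (major 10, minor 229).
- It creates the /mycontainer mount point.
- It mounts the blob container there with blobfuse2.

In this example, the workload is sleep infinity, used as a placeholder. The secret you created provides the Azure storage account authentication details as environment variables, and privileged: true gives the container the permissions it needs to create the device node and perform the mount.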

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: hello-openshift
  labels:
    app: hello-openshift
spec:
  runtimeClassName: kata-remote
  containers:
    - name: hello-openshift
      image: quay.io/openshift_sandboxed_containers/blobfuse
      command: ["sh", "-c"]
      args:
        - mknod /dev/fuse -m 0666 c 10 229 && mkdir /mycontainer && blobfuse2 mount /mycontainer --tmp-path=/blobfuse2_tmp && sleep infinity
      securityContext:
        privileged: true
      env:
        - name: AZURE_STORAGE_ACCESS_KEY
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCESS_KEY
        - name: AZURE_STORAGE_ACCOUNT_CONTAINER
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCOUNT_CONTAINER
        - name: AZURE_STORAGE_AUTH_TYPE
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_AUTH_TYPE
        - name: AZURE_STORAGE_ACCOUNT
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCOUNT
        - name: AZURE_STORAGE_ACCOUNT_TYPE
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCOUNT_TYPE
```

Apply it with the oc command:

```console
# oc apply -f hello-openshift.yaml
# oc get pods
NAME              READY   STATUS    RESTARTS   AGE
hello-openshift   1/1     Running   0          88s
```

Log in to the container to verify that blobfuse is mounted:

```console
# oc rsh hello-openshift
# df -h
Filesystem      Size  Used Avail Use% Mounted on
overlay          32G  2.5G   29G   8% /
tmpfs            64M     0   64M   0% /dev
shm              16G     0   16G   0% /dev/shm
/dev/sda4        32G  2.5G   29G   8% /etc/hosts
blobfuse2        32G  2.5G   29G   8% /mycontainer
sh-5.1# mount
overlay on / type overlay (rw,relatime,seclabel,lowerdir=/run/image/layers/sha256_0bee2488e3e0209f173af99ba46b28581abddb3330e97b165bbaca270912b7b7:/run/image/layers/sha256_070c268bd77fadb1861fa52efbcf8f19dd3eda2ff49e50dc0f7e54219671402a:/run/image/layers/sha256_5843ce3a59c2b84f83d72ded700dc9cbd7f6ff5b15d35b99aa8d9e4d60d59451:/run/image/layers/sha256_cc7c08d56aada5b55c0b4a8ecc515a2fa2dc25a96479425437ff8fefa387cc20,upperdir=/run/image/overlay/1/upperdir,workdir=/run/image/overlay/1/workdir)
[...]
/dev/sda4 on /run/secrets/kubernetes.io/serviceaccount type xfs (ro,relatime,seclabel,attr2,inode64,logbufs=8,logbsize=32k,noquota)
blobfuse2 on /mycontainer type fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,max_read=1048576)
```

Example 2: Use blobfuse in a container lifecycle hook

Create a pod from the following hello-openshift2.yaml file. Here, blobfuse2 mounts the blob container in the container's postStart lifecycle hook instead of in the container command. The secret provides the Azure storage account authentication details.
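Kubernetes runs a postStart hook right after the container is created. However, it does not guarantee that the hook executes before the container's entrypoint. It also does not report the container as Running until the hook completes. A workload started alongside this hook should therefore not assume that /mycontainer is already mounted when it starts.

One option is to poll /proc/mounts until the mount point appears, then confirm that a small write and read succeed. The sketch below assumes the workload can run a shell step before the application starts. The names `wait_for_mount`, `roundtrip_check`, and `my-app` are hypothetical.

```shell
#!/bin/sh
# wait_for_mount (hypothetical helper): poll /proc/mounts until PATH is
# listed as a mount point, or give up after TIMEOUT seconds (default 30).
# Assumes Linux /proc and a path without spaces (/proc/mounts escapes
# spaces as \040).
wait_for_mount() {
    target="$1"
    timeout="${2:-30}"
    elapsed=0
    while [ "$elapsed" -lt "$timeout" ]; do
        # Field 2 of each /proc/mounts line is the mount point
        if awk -v m="$target" '$2 == m { found = 1 } END { exit !found }' /proc/mounts; then
            return 0
        fi
        sleep 1
        elapsed=$((elapsed + 1))
    done
    return 1
}

# roundtrip_check (hypothetical helper): write a small probe file under DIR,
# read it back, compare, and remove it. On a BlobFuse mount the probe is
# stored as a blob, but BlobFuse2 may serve the read from its local cache,
# so success shows the mount is usable, not that data reached Azure.
roundtrip_check() {
    dir="$1"
    probe="$dir/.roundtrip-$$"   # $$ (shell PID) keeps the name unique per run
    expected="roundtrip-$$"
    printf '%s\n' "$expected" > "$probe" || return 1
    actual=$(cat "$probe") || { rm -f "$probe"; return 1; }
    rm -f "$probe"
    [ "$actual" = "$expected" ]
}

# Example, before starting the (hypothetical) application:
# wait_for_mount /mycontainer 60 && roundtrip_check /mycontainer && exec my-app
```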

```yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: hello-openshift2
  labels:
    app: hello-openshift2
spec:
  runtimeClassName: kata-remote
  containers:
    - name: hello-openshift2
      image: quay.io/openshift_sandboxed_containers/blobfuse
      imagePullPolicy: Always
      lifecycle:
        postStart:
          exec:
            command: ["/bin/sh", "-c", "mkdir /mycontainer && mknod /dev/fuse -m 0666 c 10 229 && blobfuse2 mount /mycontainer --tmp-path=/blobfuse2_tmp"]
      securityContext:
        privileged: true
      env:
        - name: AZURE_STORAGE_ACCESS_KEY
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCESS_KEY
        - name: AZURE_STORAGE_ACCOUNT_CONTAINER
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCOUNT_CONTAINER
        - name: AZURE_STORAGE_AUTH_TYPE
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_AUTH_TYPE
        - name: AZURE_STORAGE_ACCOUNT
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCOUNT
        - name: AZURE_STORAGE_ACCOUNT_TYPE
          valueFrom:
            secretKeyRef:
              name: storage-secret
              key: AZURE_STORAGE_ACCOUNT_TYPE
```

Apply it with the oc command:

```console
# oc apply -f hello-openshift2.yaml
# oc get pods
NAME               READY   STATUS    RESTARTS   AGE
hello-openshift2   1/1     Running   0          2m10s
```

Log in to the container to verify that blobfuse2 is mounted:

```console
# oc rsh hello-openshift2
# df -h
Filesystem      Size  Used Avail Use% Mounted on
overlay          32G  2.5G   29G   8% /
tmpfs            64M     0   64M   0% /dev
shm              16G     0   16G   0% /dev/shm
/dev/sda4        32G  2.5G   29G   8% /etc/hosts
blobfuse2        32G  2.5G   29G   8% /mycontainer
```

Summary

This article showed two ways to make Azure Blob Storage data available to workloads in OpenShift sandboxed containers peer-pods by mounting it with Azure BlobFuse. With peer-pods, you can run OpenShift sandboxed containers in any environment, with or without bare-metal servers or nested virtualization support.

About the authors

Pei Zhang has been a quality engineer at Red Hat since 2015. She has made testing contributions to NFV Virt, Virtual Network, SR-IOV, and KVM-RT features, and she works on the Red Hat OpenShift sandboxed containers project.

Pradipta works in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF confidential containers project.
