This blog post showcases the performance improvements achieved in the boot-time unlocking of Clevis LUKS-bound devices. By removing a single function from the boot process, boot time was shortened by 20% to 47%, depending on the scenario.

Clevis is a software framework that allows booting encrypted LUKS devices without manual intervention. This tool is part of Network-Bound Disk Encryption (NBDE). Clevis is the “client” side, although it does not strictly need to work against a server and can be configured to read keys in different ways. Clevis has a set of “pins” that allow different mechanisms for automatic unlocking:

- tang: real NBDE based on a client-server architecture

- tpm2: secure cryptoprocessor on the machine

- sss: for composed configurations (for example, achieving high availability using two or more servers)

Basic scenarios

My initial idea was to identify any bottlenecks that could be making the boot process last longer than it needs to. After all, Clevis is a set of commands that store LUKS metadata and recover it during the boot process. Inspecting the different commands executed by Clevis, together with the time spent in each of them, would reveal whether any function accounts for most of the execution time. Then I could study whether it can be improved or removed.

For this task, I defined different scenarios:

- Virtual machine, one LUKS-encrypted device: A virtual machine with a “/” device for inspecting boot times

- Virtual machine, multiple LUKS-encrypted devices: A virtual machine with several encrypted devices to analyze a much more complex scenario similar to what is described in the Red Hat Enterprise Linux product documentation

- Real machine, one LUKS-encrypted device: A laptop machine with a “/” device for inspecting boot times on a physical machine

Basic configuration

Clevis can work with different configurations, which represent the different mechanisms to allow automatic encrypted disk unlocking. I identified the following configurations as the most representative:

- tpm2 (no pcr_id)

- tpm2 (pcr_id=7)

- tang

I did not include the sss configuration because it is composed of the basic ones, tpm2 and tang, which are already included.

Timestamp logging

I considered several mechanisms for dumping timestamps of each of the lines executing in Clevis boot:

- systemd-analyze blame: This command reports the time spent by each of the services that systemd starts at boot. Its output looks as follows:

    6.460s systemd-cryptsetup@luks\x2d18f5ee6f\x2d2ae9\x2d4807\x2da916\x2d106037930328.service
    3.616s fwupd.service
    2.632s plymouth-quit-wait.service
    1.721s systemd-udev-settle.service
    …

It gives the time spent by each service separately, but no information about the execution time of each process within a service. So it is a good helper, but it cannot be the definitive way to obtain timing information per line.
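To compare runs, the per-service times can be extracted from this output programmatically. The following is a minimal sketch in Python, not part of Clevis or of the original measurements: the helper names `parse_blame` and `cryptsetup_seconds` are hypothetical, and the parser assumes each line starts with one or more duration tokens such as `6.460s`, `123ms` or `1min` followed by the unit name.

```python
import re

# Multipliers to convert each duration token to seconds.
_UNITS = {"ms": 0.001, "s": 1.0, "min": 60.0, "h": 3600.0}
_TOKEN = re.compile(r"^(\d+(?:\.\d+)?)(ms|s|min|h)$")


def parse_blame(text):
    """Map each unit name to the seconds reported for it."""
    result = {}
    for line in text.splitlines():
        fields = line.split()
        seconds = 0.0
        consumed = 0
        # Leading fields are duration tokens; the first non-token is the unit.
        for field in fields:
            match = _TOKEN.match(field)
            if not match:
                break
            seconds += float(match.group(1)) * _UNITS[match.group(2)]
            consumed += 1
        if consumed and consumed < len(fields):
            result[fields[consumed]] = seconds
    return result


def cryptsetup_seconds(times):
    """Sum the time of all systemd-cryptsetup@ units (one per LUKS device)."""
    return sum(v for k, v in times.items()
               if k.startswith("systemd-cryptsetup@"))


# The sample output shown above, one service per line.
SAMPLE = r"""
6.460s systemd-cryptsetup@luks\x2d18f5ee6f\x2d2ae9\x2d4807\x2da916\x2d106037930328.service
3.616s fwupd.service
2.632s plymouth-quit-wait.service
1.721s systemd-udev-settle.service
"""

times = parse_blame(SAMPLE)
print(cryptsetup_seconds(times))
```

In practice the text would come from running `systemd-analyze blame` on the booted machine rather than from a pasted sample.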

- Manual dump: A logging function with timestamps (including microseconds) that records the time before and after the execution of particular functions. In the end, this is the solution I chose: although it is not the most reusable one, it is a very quick way to dump the timestamps of the main functions used at boot time. The code is available in my GitHub repository.

- This commit dumps timestamps before and after the most significant functions used in Clevis boot. It writes the logs to a file (/var/run/systemd/clevis.log) with the execution time of each of the lines.
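The idea of logging a timestamp before and after a function can be sketched as follows. This is only an illustration in Python: the actual change lives in the Clevis shell scripts in the author's repository, and the names `log_timestamp` and `timed`, as well as the log line layout, are assumptions made for this example.

```python
import os
import tempfile
import time
from contextlib import contextmanager
from datetime import datetime


def log_timestamp(log_path, message):
    """Append one line with a microsecond-resolution timestamp."""
    stamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S.%f")
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(f"{stamp} {message}\n")


@contextmanager
def timed(log_path, label):
    """Log a line before and after the wrapped block, with elapsed time."""
    log_timestamp(log_path, f"BEGIN {label}")
    start = time.monotonic()
    try:
        yield
    finally:
        elapsed = time.monotonic() - start
        log_timestamp(log_path, f"END {label} elapsed={elapsed:.6f}s")


if __name__ == "__main__":
    # Hypothetical usage: a temporary file stands in for the real log file.
    demo_log = os.path.join(tempfile.mkdtemp(), "clevis-sketch.log")
    with timed(demo_log, "some_function"):
        time.sleep(0.05)  # stands in for the function being measured
    with open(demo_log, encoding="utf-8") as log:
        print(log.read(), end="")
```

Wrapping each candidate function this way yields a pair of lines per call, which makes the slowest steps easy to spot.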

Bottleneck identification

After logging with the respective timestamps was complete, I could observe that the following functions/lines could be possible bottlenecks:

- clevis_luks_check_valid_key_or_keyfile

- sleep in clevis-luks-askpass

I identified the possible bottlenecks by analyzing the time spent in each of the most representative functions that take part in the boot process via Clevis. Further information on the dumped logs is available in this .xlsx file.

In particular, the tabs TPM2-pcr_id:, TPM2-pcr_id:7, and tang contain the timestamps of the logs dumped by the change introduced to obtain this information. In each of these cases, it can be clearly observed that the possible bottlenecks are the places where most of the execution time is spent.

Time measures (“clevis_luks_check_valid_key_or_keyfile”)

Considering the proposed scenarios, together with the different configurations that apply to each of them, I measured the time the system takes to boot and then analyzed the time saved by avoiding the function clevis_luks_check_valid_key_or_keyfile. To obtain these values, I used the systemd-analyze blame command and calculated the following time measures:

Scenario 1: Virtual machine (VM) with one LUKS device, original code

Scenario 1: VM with one LUKS device after clevis_luks_check_valid_key_or_keyfile bottleneck removal

Scenario 2: VM with several LUKS devices, original code

Scenario 2: VM with several LUKS devices, after clevis_luks_check_valid_key_or_keyfile bottleneck removal

It is apparent that the reduction in boot time is proportional to the number of devices unlocked at boot time.

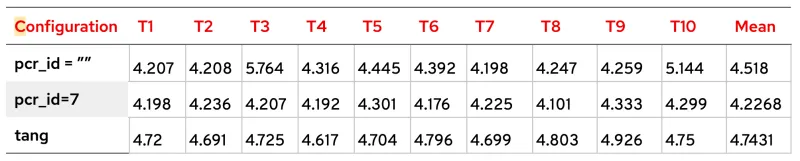

Scenario 3: Real machine with one LUKS device, original code

This table represents the time measures of the boot times used to unlock one LUKS2 device in a real machine, in particular, a Lenovo ThinkPad T14s Gen 1, model 20T1S39D5Q, with original code.

Scenario 3: Real machine with one LUKS device, after clevis_luks_check_valid_key_or_keyfile bottleneck removal

Time measures (different “sleep” values)

As demonstrated in the Bottleneck identification section above, another area of code that may consume much of the time in the boot process is the sleep in clevis-luks-askpass. The initial sleep value is 0.5 seconds, and it is set in the clevis-luks-askpass file, which is the file executed by systemd. The sleep is there to wait for the devices to be unlocked successfully. So, the question here is: Is it really necessary? Can it be changed so that the boot process is accelerated?

The short answer is that this sleep is already set to an optimal value. Changing its value did not improve the boot time, so the measured values will not be covered here. If you are interested, they are included in this .xlsx file.

Boot-time improvements

Here, we’ll show the time improvements obtained in each of the scenarios in bar graphs:

Scenario 1: VM with one LUKS device

The bar graph below represents boot time improvements in seconds for the individual keys due to the validation removal:

Improvement in boot times in seconds and as a percentage for each configuration:

Scenario 2: VM with multiple LUKS devices

This bar graph represents boot time improvements in seconds on keys due to the validation removal:

Improvement in boot times in seconds and as a percentage for each configuration:

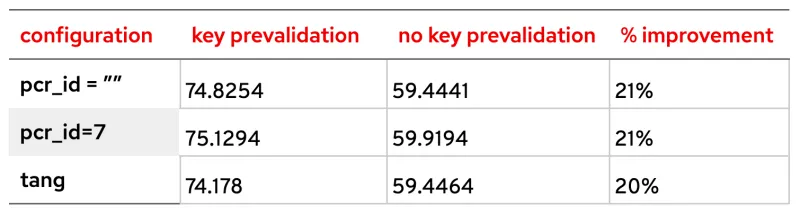

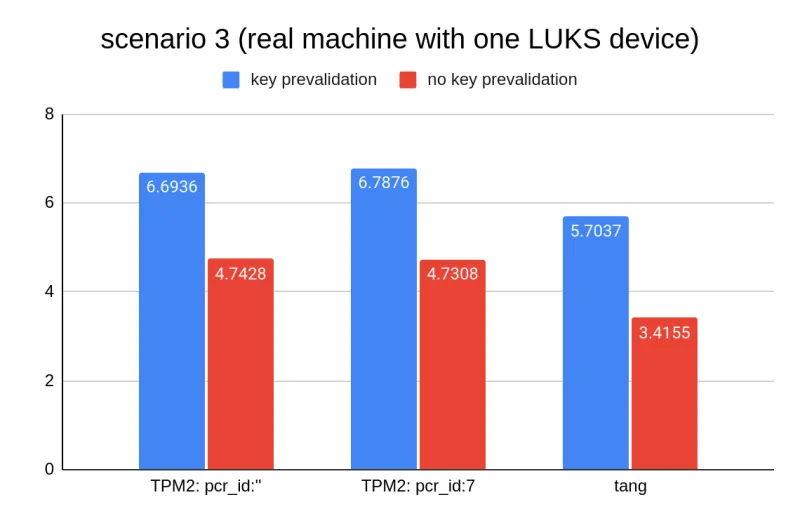

Scenario 3: Real machine with one LUKS device

This bar graph represents boot time improvements in seconds on keys due to the validation removal:

Improvement in boot times in seconds and as a percentage for each configuration:
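As a worked illustration of how such figures are derived, the sketch below computes the improvement in seconds and as a percentage from a pair of boot times. The function name `improvement` is hypothetical, and the numbers in the usage line are made-up placeholders, not the measurements from this post.

```python
def improvement(before_s, after_s):
    """Return (seconds saved, percentage saved) between two boot times."""
    if before_s <= 0:
        raise ValueError("baseline boot time must be positive")
    saved = before_s - after_s
    return saved, 100.0 * saved / before_s


# Placeholder values only: a 10.0 s boot reduced to 8.0 s.
saved_s, saved_pct = improvement(10.0, 8.0)
print(f"{saved_s:.3f} s saved ({saved_pct:.1f}%)")
```

The percentage is always relative to the boot time with the original code, so the same number of seconds saved weighs more in a scenario that boots faster.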

Actions

After analyzing the time measures (see the section Time measures (“clevis_luks_check_valid_key_or_keyfile”) above), it is apparent that the real bottleneck is clevis_luks_check_valid_key_or_keyfile. The action taken is simple: the call to this function has been removed, but only from the execution path of the boot process. The function is still called by other Clevis-related commands, so its execution has been parameterized so that it is skipped only in the boot process.
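The parameterization described here can be pictured with a small sketch. It is written in Python purely for illustration, while Clevis itself is implemented as shell scripts; every name in it (`recover_passphrase`, `skip_check`, and the fake helpers) is hypothetical and does not reproduce the actual Clevis code.

```python
def recover_passphrase(slots, decrypt, is_valid_key, skip_check=False):
    """Return the first passphrase recovered from the given key slots.

    With skip_check=False every candidate is validated before it is
    returned. With skip_check=True (the boot path in this sketch) the
    validation call is not made at all.
    """
    for slot in slots:
        passphrase = decrypt(slot)
        if passphrase is None:
            continue  # nothing could be recovered from this slot
        if skip_check or is_valid_key(passphrase):
            return passphrase
    return None


# Hypothetical stand-ins for decryption and for the costly validation.
validation_calls = []


def fake_decrypt(slot):
    return {1: "secret"}.get(slot)


def fake_is_valid_key(passphrase):
    validation_calls.append(passphrase)
    return passphrase == "secret"


# Boot path: no validation call is made.
print(recover_passphrase([0, 1], fake_decrypt, fake_is_valid_key, skip_check=True))
print(len(validation_calls))

# Other commands: the validation still runs.
print(recover_passphrase([0, 1], fake_decrypt, fake_is_valid_key))
print(len(validation_calls))
```

Defaulting the flag to the validating behavior keeps every existing caller unchanged, and only the boot path opts out explicitly.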

About the author

Sergio Arroutbi is an experienced Software Engineer skilled in Linux, C++/Golang/Python programming, Shell Scripting, Software Design and Development. He has a Master's Degree focused on FLOSS (Free/Libre/Open Source Software) from Universidad Rey Juan Carlos and a Software Craftsmanship Master's Degree from Universidad Politécnica de Madrid. He also works as an Associate Professor at Universidad Rey Juan Carlos, teaching programming-related subjects.
