While OpenShift Virtualization is a great solution for non-containerized applications, it introduces some challenges compared with legacy virtualization products and bare-metal systems, particularly when it comes to interacting with virtual machines (VMs). OpenShift is designed for containerized applications, which usually do not need incoming connections for configuration and management, or at least not the same kind of connections that VMs need for management or use.

This blog post covers several methods for accessing VMs running in an OpenShift Virtualization environment. Here is a brief summary.

OpenShift user interface (UI)

VNC connections through the UI provide direct access to a VM's console and are supplied by OpenShift Virtualization. Serial connections through the UI require no configuration when Red Hat-provided images are used. Both connection methods are useful for troubleshooting a VM.

virtctl command

The virtctl command uses WebSockets to connect to a VM. It provides VNC console, serial console, and SSH access to the VM. VNC and serial console access are provided by OpenShift Virtualization, as in the UI. VNC console access requires a VNC client on the machine running the virtctl command. Serial console access requires the same VM configuration as serial console access through the UI. SSH access requires the VM's operating system to be configured for SSH access. See the VM image's documentation for its SSH requirements.

Pod network

Exposing a port with a service allows network connections into a VM. Any port on a VM can be exposed with a service. Common ports are 22 (SSH), 5900+ (VNC), and 3389 (RDP). Three types of services are shown in this post.

ClusterIP

A ClusterIP service exposes a VM's port inside the cluster. This allows VMs to communicate with each other but does not allow connections from outside the cluster.

NodePort

A NodePort service exposes a VM's port outside the cluster through the cluster nodes. The VM's port is mapped to a port on the nodes, which is usually different from the port on the VM. To reach the VM, connect to a node's IP address and the mapped port number.

LoadBalancer (LB)

An LB service provides a pool of IP addresses for VMs to use. This service type exposes a VM's port outside the cluster. An IP address obtained from the pool, or explicitly specified, is used to connect to the VM.

Layer 2 (L2) interface

A network interface on the cluster nodes can be configured as a bridge to allow L2 connectivity to a VM. A VM's interface connects to the bridge through a NetworkAttachmentDefinition. This bypasses the cluster's network stack and exposes the VM's interface directly to the bridged network. Bypassing the cluster's network stack also bypasses the cluster's built-in security, so the VM should be secured in the same way as a physical server connected to a network.

Cluster information

The cluster used in this blog post is named wd and is in the example.org domain. It consists of three bare-metal control plane nodes (wcp-0, wcp-1, wcp-2) and three bare-metal worker nodes (wwk-0, wwk-1, wwk-2). These nodes are on the cluster's primary network, 10.19.3.0/24.

| Node | Role | IP | FQDN |
|---|---|---|---|
| wcp-0 | control plane | 10.19.3.95 | wcp-0.wd.example.org |
| wcp-1 | control plane | 10.19.3.94 | wcp-1.wd.example.org |
| wcp-2 | control plane | 10.19.3.93 | wcp-2.wd.example.org |
| wwk-0 | worker | 10.19.3.92 | wwk-0.wd.example.org |
| wwk-1 | worker | 10.19.3.91 | wwk-1.wd.example.org |
| wwk-2 | worker | 10.19.3.90 | wwk-2.wd.example.org |

MetalLB is configured to provide four IP addresses (10.19.3.112-115) to VMs from this network.
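For reference, a MetalLB address pool covering this range might look like the sketch below. The article does not show the actual configuration, so the resource names (`blog-pool`, `blog-l2`), the use of layer 2 mode, and the `metallb-system` namespace are all assumptions.

```shell
# Sketch only: resource names are hypothetical, not taken from the article.
# Assumes MetalLB runs in layer 2 mode in the metallb-system namespace.
create_metallb_pool() {
  oc apply -f - <<'EOF'
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: blog-pool            # hypothetical name
  namespace: metallb-system
spec:
  addresses:
    - 10.19.3.112-10.19.3.115
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: blog-l2              # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - blog-pool
EOF
}
# Usage: create_metallb_pool
```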

The cluster nodes have a secondary network interface on the 10.19.136.0/24 network. This secondary network has a DHCP server that provides IP addresses.

The following operators are installed on the cluster. All are provided by Red Hat, Inc.

| Operator | Why it is installed |
|---|---|
| Kubernetes NMState Operator | Used to configure the second interface on the nodes |
| OpenShift Virtualization | Provides the mechanisms to run VMs |
| Local Storage | Needed by the OpenShift Data Foundation operator when using local hard drives |
| MetalLB Operator | Provides the load balancing service used in this blog post |
| OpenShift Data Foundation | Provides storage for the cluster, created using a second hard drive on the nodes |

A few VMs are running on the cluster in the blog namespace:

- A Fedora 38 VM called fedora
- A Red Hat Enterprise Linux 9 (RHEL9) VM called rhel9
- A Windows 11 VM called win11

Connecting through the UI

Several tabs are shown when you view a VM in the UI. All of them provide ways to view or configure various aspects of the VM. The Console tab in particular offers three ways to connect to the VM: VNC console, serial console, or desktop viewer (RDP). The RDP method is shown only for VMs running Microsoft Windows.

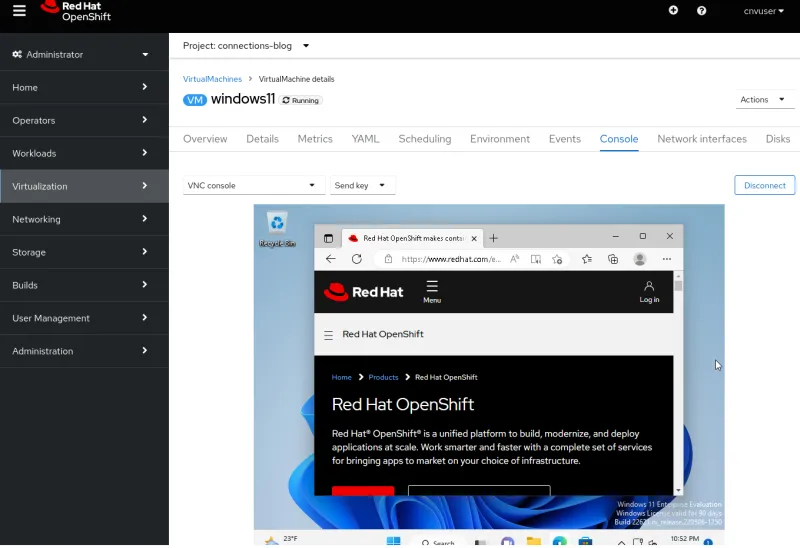

VNC console

The VNC console is always available for every VM. The VNC service is provided by OpenShift Virtualization and requires no configuration of the VM's operating system. It just works.

Serial console

The serial console requires configuration within the VM's operating system. If the operating system is not configured to output to the VM's serial port, this connection method does not work. VM images provided by Red Hat are configured to output boot information to the serial port and to present a login prompt once the VM has finished booting.

Desktop viewer

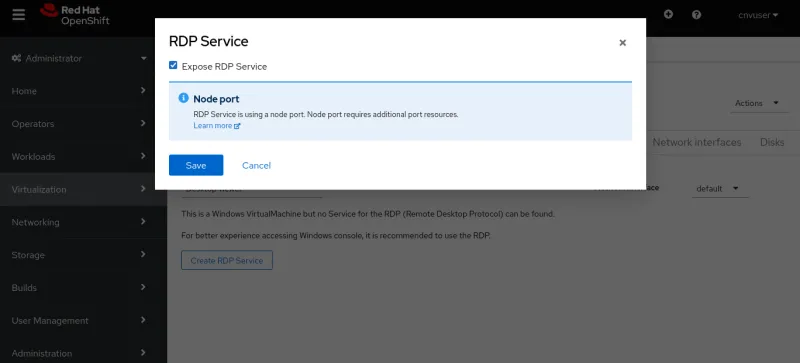

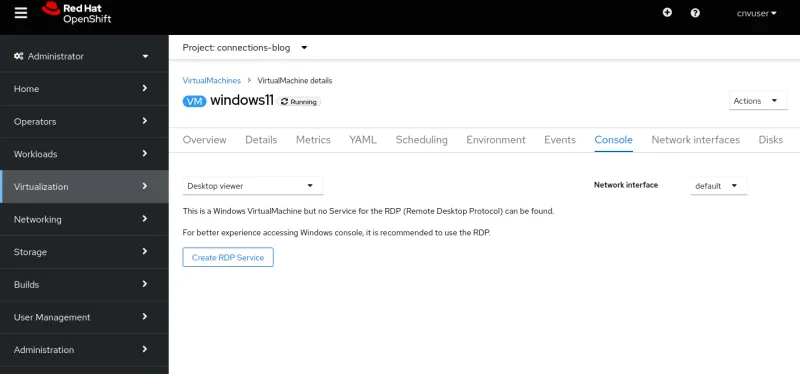

This connection requires a Remote Desktop (RDP) service to be installed and running on the VM. When you choose to connect using RDP in the Console tab, the system indicates that no RDP service exists for the VM and offers an option to create one. Selecting this option opens a pop-up window asking you to confirm that you want to expose the RDP service. Checking the box creates a service for the VM that allows RDP connections.

Once the service is created, the Console tab shows the information for the RDP connection.

A Launch Remote Desktop button is also available. Selecting it downloads a file named console.rdp. If the browser is configured to open .rdp files, it opens console.rdp in an RDP client.

Connecting with the virtctl command

The virtctl command provides VNC, serial console, and SSH access to the VM through a network tunnel over the WebSocket protocol.

- The user running the virtctl command must be logged in to the cluster from the command line.
- If the user is not in the same namespace as the VM, the --namespace option must be specified.

The correct version of the virtctl command and other clients can be downloaded from your cluster at a URL similar to https://console-openshift-console.apps.CLUSTERNAME.CLUSTERDOMAIN/command-line-tools. You can also download them by clicking the question mark icon at the top of the UI and selecting Command line tools.

VNC console

The virtctl command connects to the VNC server provided by OpenShift Virtualization. The system running virtctl needs both the virtctl command and a VNC client installed.

To open a VNC connection, run the virtctl vnc command. Connection information is displayed in the terminal and a new VNC console session opens.

Serial console

To connect to the serial console with virtctl, run the virtctl console command. As noted earlier, if the VM is configured to output to its serial port, you should see output from the boot process or a login prompt.

$ virtctl console rhel9

Successfully connected to rhel9 console. The escape sequence is ^]

[ 8.463919] cloud-init[1145]: Cloud-init v. 22.1-7.el9_1 running 'modules:config' at Wed, 05 Apr 2023 19:05:38 +0000. Up 8.41 seconds.

[ OK ] Finished Apply the settings specified in cloud-config.

Starting Execute cloud user/final scripts...

[ 8.898813] cloud-init[1228]: Cloud-init v. 22.1-7.el9_1 running 'modules:final' at Wed, 05 Apr 2023 19:05:38 +0000. Up 8.82 seconds.

[ 8.960342] cloud-init[1228]: Cloud-init v. 22.1-7.el9_1 finished at Wed, 05 Apr 2023 19:05:38 +0000. Datasource DataSourceNoCloud [seed=/dev/vdb][dsmode=net]. Up 8.95 seconds

[ OK ] Finished Execute cloud user/final scripts.

[ OK ] Reached target Cloud-init target.

[ OK ] Finished Crash recovery kernel arming.

Red Hat Enterprise Linux 9.1 (Plow)

Kernel 5.14.0-162.18.1.el9_1.x86_64 on an x86_64

Activate the web console with: systemctl enable --now cockpit.socket

rhel9 login: cloud-user

Password:

Last login: Wed Apr 5 15:05:15 on ttyS0

[cloud-user@rhel9 ~]$

SSH

To invoke the ssh client, use the virtctl ssh command. Its -i option lets you specify the private key to use.

$ virtctl ssh cloud-user@rhel9-one -i ~/.ssh/id_rsa_cloud-user

Last login: Wed May 3 16:06:41 2023

[cloud-user@rhel9-one ~]$

The virtctl scp command can also be used to transfer files to a VM. I mention it here because it works in the same way as virtctl ssh.
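As an illustration, a file copy that mirrors the ssh example might be wrapped as follows. The file names are hypothetical, and the `user@vm:path` target form is an assumption based on the ssh example above; some virtctl releases expect a `vm/` or `vmi/` prefix on the VM name, so check `virtctl scp --help` for your version.

```shell
# Copy a local file to the VM, reusing the key from the ssh example.
# Assumption: target syntax matches the virtctl ssh example in this post.
copy_to_vm() {
  local src="$1" dest="$2"
  virtctl scp -i "$HOME/.ssh/id_rsa_cloud-user" "$src" "cloud-user@rhel9-one:$dest"
}
# Usage (hypothetical file names): copy_to_vm ./notes.txt /tmp/notes.txt
```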

Port forwarding

The virtctl command can also forward traffic from a user's local ports to a port on the VM. See the OpenShift documentation for details on how this works.

One use is to route your local OpenSSH client to the VM instead of using the ssh client built into virtctl. The KubeVirt documentation has an example of this.
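A minimal sketch of that OpenSSH use case, modeled on the pattern in the KubeVirt user guide, is shown here. The VM name, namespace, user, and key path are borrowed from other examples in this post and are assumptions for this particular command.

```shell
# Use the local OpenSSH client, tunnelling through virtctl port-forward.
# --stdio=true makes virtctl relay the connection over stdin/stdout,
# which is what ssh expects from a ProxyCommand.
ssh_via_port_forward() {
  local vm="$1" ns="$2" user="$3"
  ssh -i "$HOME/.ssh/id_rsa_cloud-user" \
      -o "ProxyCommand=virtctl port-forward --stdio=true --namespace ${ns} vm/${vm} 22" \
      "${user}@${vm}"
}
# Usage: ssh_via_port_forward rhel9 blog cloud-user
```

Because the tunnel is created by the ProxyCommand, the host name given to ssh is only used for the known_hosts entry.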

Another use is to connect to a service on a VM when you do not want to create an OpenShift service to expose the port.

For example, I have a VM called fedora-proxy with the NGINX web server installed. A custom script on the VM writes statistics to a file called process-status.out. I am the only person interested in the file's contents, but I want to check it throughout the day. I can use the virtctl port-forward command to forward a local port on my laptop or desktop to port 80 on the VM, and a short script can then gather the data whenever I want it.

#! /bin/bash

# Create a tunnel

virtctl port-forward vm/fedora-proxy 22080:80 &

# Need to give a little time for the tunnel to come up

sleep 1

# Get the data

curl http://localhost:22080/process-status.out

# Stop the tunnel

pkill -P $$

Running the script gets me the data I need and cleans up after itself.

$ gather_stats.sh

{"component":"","level":"info","msg":"forwarding tcp 127.0.0.1:22080 to 80","pos":"portforwarder.go:23","timestamp":"2023-05-04T14:27:54.670662Z"}

{"component":"","level":"info","msg":"opening new tcp tunnel to 80","pos":"tcp.go:34","timestamp":"2023-05-04T14:27:55.659832Z"}

{"component":"","level":"info","msg":"handling tcp connection for 22080","pos":"tcp.go:47","timestamp":"2023-05-04T14:27:55.689706Z"}

Test Process One Status: Bad

Test Process One Misses: 125

Test Process Two Status: Good

Test Process Two Misses: 23
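The script relies on a fixed one-second sleep and on pkill to stop every child process. A slightly more defensive variant, sketched here as an assumption rather than the author's method, lets curl retry while the tunnel is still starting and stops only the tunnel it launched.

```shell
# Variant of the gather_stats script: retry instead of sleeping, and stop
# only the tunnel process that this function started.
gather_stats() {
  local vm="$1" local_port="$2" path="$3" tunnel_pid status=0
  virtctl port-forward "vm/${vm}" "${local_port}:80" &
  tunnel_pid=$!
  # --retry-connrefused makes curl retry while the local port is not yet open.
  curl --silent --show-error --retry 5 --retry-delay 1 --retry-connrefused \
       "http://localhost:${local_port}/${path}" || status=$?
  # Ignore the error if the tunnel has already exited.
  kill "$tunnel_pid" 2>/dev/null || true
  return "$status"
}
# Usage: gather_stats fedora-proxy 22080 process-status.out
```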

Connecting through an exposed port on the pod network (services)

Services

OpenShift services expose a VM's ports to incoming traffic, which can come from other VMs and pods or from a source outside the cluster.

This blog post shows how to create three types of services: ClusterIP, NodePort, and LoadBalancer. The ClusterIP service type does not allow external access to VMs. All three types allow internal access between VMs and pods, which is the preferred way for VMs within the cluster to talk to each other. The following table lists the three service types and their scope of accessibility.

| Type | Internal scope (cluster internal DNS) | External scope |
|---|---|---|
| ClusterIP | <service-name>.<namespace>.svc.cluster.local | None |
| NodePort | <service-name>.<namespace>.svc.cluster.local | IP address of a cluster node |
| LoadBalancer | <service-name>.<namespace>.svc.cluster.local | External IP address from the load balancer's IP address pools |

Services can be created with the virtctl expose command or by defining them in YAML, either from the command line or in the UI.

First, let's define a service using the virtctl command.

Creating a service with the virtctl command

To use virtctl, the user must be logged in to the cluster. If the user is not in the same namespace as the VM, the --namespace option can be used to specify the VM's namespace.

The virtctl expose vm command creates a service that exposes a port of a VM. The following options are commonly used with virtctl expose when creating a service.

| Option | Description |
|---|---|
| --name | Name of the service to create |
| --type | Type of service to create: ClusterIP, NodePort, or LoadBalancer |
| --port | Port number on which the service listens for traffic |
| --target-port | Optional. Port of the VM to expose; defaults to the value of --port |
| --protocol | Optional. Protocol the service listens for; defaults to TCP |

The following command creates a service for SSH access to a VM named rhel9.

$ virtctl expose vm rhel9 --name rhel9-ssh --type NodePort --port 22

View the service to determine which port to use to access the VM from outside the cluster.

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rhel9-ssh NodePort 172.30.244.228 <none> 22:32317/TCP 3s
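The node port can also be read programmatically instead of from the table. The helper below is a small sketch; the function name is hypothetical and it assumes the port of interest is the first one listed in the service.

```shell
# Print the node port that OpenShift assigned to a service's first port.
service_node_port() {
  local svc="$1" ns="$2"
  oc get service "$svc" --namespace "$ns" \
     -o jsonpath='{.spec.ports[0].nodePort}'
}
# Usage, assuming the rhel9-ssh service above and the worker node wwk-0:
#   ssh -p "$(service_node_port rhel9-ssh blog)" cloud-user@10.19.3.92
```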

Let's delete the service for now.

$ oc delete service rhel9-ssh

service "rhel9-ssh" deleted

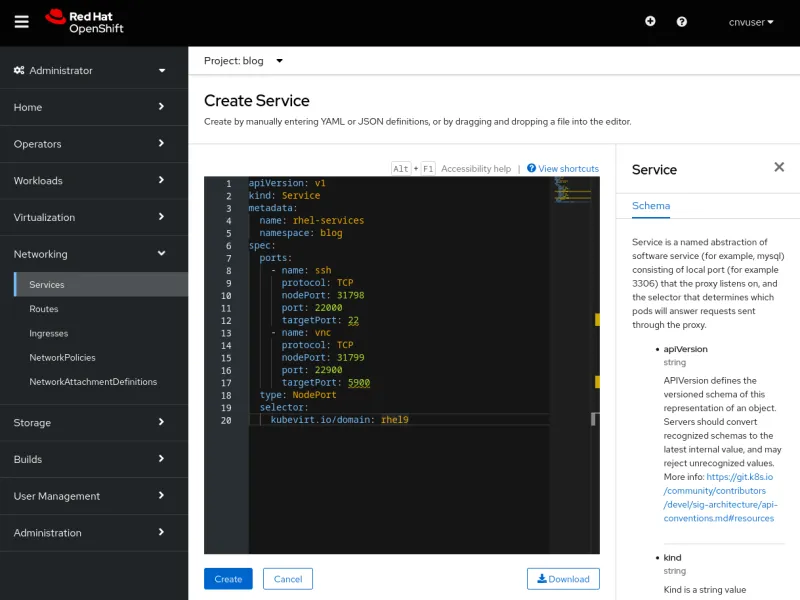

Creating a service with YAML

To create a service from YAML, run oc create -f on the command line or use the editor in the UI. Both methods work and each has its advantages: the command line is easier to script, while the UI provides help with the schema used to define a service.

Let's look at the YAML first, since it is the same for both methods.

A single service definition can expose one or more ports. The YAML below is an example service definition that exposes two ports, one for SSH traffic and one for VNC traffic. The ports are exposed as a NodePort. Explanations of the key items follow the YAML.

apiVersion: v1
kind: Service
metadata:
  name: rhel-services
  namespace: blog
spec:
  ports:
    - name: ssh
      protocol: TCP
      nodePort: 31798
      port: 22000
      targetPort: 22
    - name: vnc
      protocol: TCP
      nodePort: 31799
      port: 22900
      targetPort: 5900
  type: NodePort
  selector:
    kubevirt.io/domain: rhel9

Here are descriptions of some of the settings in the file:

| Setting | Description |
|---|---|
| metadata.name | Name of the service, unique within its namespace |
| metadata.namespace | Namespace the service is in |
| spec.ports.name | Name of the port being defined |
| spec.ports.protocol | Protocol of the network traffic, TCP or UDP |
| spec.ports.nodePort | Port exposed outside the cluster (unique within the cluster) |
| spec.ports.port | Port used internally within the cluster network |
| spec.ports.targetPort | Port exposed by the VM (multiple VMs can expose the same port) |
| spec.type | Type of service to create (NodePort here) |
| spec.selector | Selector used to bind the service to a VM (here, the VM called rhel9) |
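Since the service finds its VM only through the selector, it can help to confirm that the label really matches something. The sketch below lists the pods carrying the label; it assumes the VM's template sets `kubevirt.io/domain`, and the function name is hypothetical.

```shell
# List the pods (with their labels) that the service selector would match.
# A VM's virt-launcher pod should appear if the label is set on the VM template.
pods_for_vm() {
  local vm="$1" ns="$2"
  oc get pods --namespace "$ns" --selector "kubevirt.io/domain=${vm}" --show-labels
}
# Usage: pods_for_vm rhel9 blog
```

An empty result usually means the selector value does not match the VM's label, in which case the service has no endpoints.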

Creating a service from the command line

Let's create the service, with its two ports, from the YAML file on the command line using oc create -f.

$ oc create -f service-two.yaml

service/rhel-services created

$ oc get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rhel-services NodePort 172.30.11.97 <none> 22000:31798/TCP,22900:31799/TCP 4s

We can see that two ports are exposed by a single service. Now let's remove the service with the oc delete service command.

$ oc delete service rhel-services

service "rhel-services" deleted

Creating a service from the UI

Let's create the same service using the UI. Navigate to Networking > Services and select Create Service. An editor opens with a prepopulated service definition and a reference to the schema. Paste the YAML from above into the editor and select Create to create the service.

After you select Create, the details of the service are shown.

To see the services attached to a VM, go to the VM's Details tab or run oc get service on the command line as before. Let's remove the service as we did earlier.

$ oc get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rhel-services NodePort 172.30.11.97 <none> 22000:31798/TCP,22900:31799/TCP 4s

$ oc delete service rhel-services

service "rhel-services" deleted

Creating SSH and RDP services the easy way

The UI provides simple point-and-click methods for creating SSH and RDP services on VMs.

To enable SSH, use the SSH service type drop-down on the VM's Details tab. The drop-down can create either a NodePort or a LoadBalancer service.

Once the service type is selected, the service is created. The UI displays a command that can be used to connect to the new service.

To enable RDP, go to the VM's Console tab. If the VM is Windows-based, Desktop Viewer appears as an option in the console drop-down.

Select it to display the Create RDP Service option.

Selecting that option opens a pop-up window to confirm Expose RDP Service.

Once the service is created, the Console tab shows the connection information.

ClusterIP connection example

Services of type ClusterIP let VMs connect to each other within the cluster. This is useful when one VM provides a service to other VMs, such as a database instance. Rather than configuring a database on a VM, let's simply expose the SSH port on the Fedora VM using a ClusterIP service.

Let's create a YAML file that defines a service exposing the Fedora VM's SSH port inside the cluster.

apiVersion: v1
kind: Service
metadata:
  name: fedora-internal-ssh
  namespace: blog
spec:
  ports:
    - protocol: TCP
      port: 22
  selector:
    kubevirt.io/domain: fedora
  type: ClusterIP

Let's apply the configuration.

$ oc create -f service-fedora-ssh-clusterip.yaml

service/fedora-internal-ssh created

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

fedora-internal-ssh ClusterIP 172.30.202.64 <none> 22/TCP 7s

From the RHEL9 VM, we can verify that the Fedora VM is reachable over SSH.

$ virtctl console rhel9

Successfully connected to rhel9 console. The escape sequence is ^]

rhel9 login: cloud-user

Password:

Last login: Wed May 10 10:20:23 on ttyS0

[cloud-user@rhel9 ~]$ ssh fedora@fedora-internal-ssh.blog.svc.cluster.local

The authenticity of host 'fedora-internal-ssh.blog.svc.cluster.local (172.30.202.64)' can't be established.

ED25519 key fingerprint is SHA256:ianF/CVuQ4kxg6kYyS0ITGqGfh6Vik5ikoqhCPrIlqM.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'fedora-internal-ssh.blog.svc.cluster.local' (ED25519) to the list of known hosts.

Last login: Wed May 10 14:25:15 2023

[fedora@fedora ~]$

NodePort connection example

In this example, we expose the RDP port of the windows11 VM using a NodePort service so that we can connect to its desktop for a better experience than the Console tab provides. This connection is intended for trusted users, since they need to know the IP addresses of the cluster nodes.

A note about OVN-Kubernetes

The latest version of the OpenShift installer defaults to the OVN-Kubernetes network stack. If the cluster runs OVN-Kubernetes and a NodePort service is used, egress traffic from the VMs will not work until routingViaHost is enabled.

A simple patch to the cluster enables egress traffic when a NodePort service is used.

$ oc patch network.operator cluster -p '{"spec": {"defaultNetwork": {"ovnKubernetesConfig": {"gatewayConfig": {"routingViaHost": true}}}}}' --type merge

$ oc get network.operator cluster -o yaml

apiVersion: operator.openshift.io/v1
kind: Network
spec:
  defaultNetwork:
    ovnKubernetesConfig:
      gatewayConfig:
        routingViaHost: true
...

This patch is not needed if the cluster uses the OpenShiftSDN network stack or if a MetalLB service is used.
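To confirm the setting without reading the full YAML, the single field can be queried with a JSONPath expression. This is a small sketch; the function name is hypothetical, and the field path is the one used by the patch command above.

```shell
# Print the current value of routingViaHost (empty if the field is unset).
routing_via_host() {
  oc get network.operator cluster \
     -o jsonpath='{.spec.defaultNetwork.ovnKubernetesConfig.gatewayConfig.routingViaHost}'
}
# Usage: [ "$(routing_via_host)" = "true" ] && echo "routingViaHost is enabled"
```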

NodePort example

To create the NodePort service, first define it in a YAML file.

apiVersion: v1
kind: Service
metadata:
  name: win11-rdp-np
  namespace: blog
spec:
  ports:
    - name: rdp
      protocol: TCP
      nodePort: 32389
      port: 22389
      targetPort: 3389
  type: NodePort
  selector:
    kubevirt.io/domain: windows11

Let's create the service.

$ oc create -f service-windows11-rdp-nodeport.yaml

service/win11-rdp-np created

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

win11-rdp-np NodePort 172.30.245.211 <none> 22389:32389/TCP 5s

Because this is a NodePort service, we can connect to it using the IP address of any node. The oc get nodes command shows the nodes' IP addresses.

$ oc get nodes -o=custom-columns=Name:.metadata.name,IP:status.addresses[0].address

Name IP

wcp-0 10.19.3.95

wcp-1 10.19.3.94

wcp-2 10.19.3.93

wwk-0 10.19.3.92

wwk-1 10.19.3.91

wwk-2 10.19.3.90

We can use the xfreerdp client program for RDP connections. We tell it to connect to the wcp-0 node using the RDP port exposed on the cluster.

$ xfreerdp /v:10.19.3.95:32389 /u:cnvuser /p:hiddenpass

[14:32:43:813] [19743:19744] [WARN][com.freerdp.crypto] - Certificate verification failure 'self-signed certificate (18)' at stack position 0

[14:32:43:813] [19743:19744] [WARN][com.freerdp.crypto] - CN = DESKTOP-FCUALC4

[14:32:44:118] [19743:19744] [INFO][com.freerdp.gdi] - Local framebuffer format PIXEL_FORMAT_BGRX32

[14:32:44:118] [19743:19744] [INFO][com.freerdp.gdi] - Remote framebuffer format PIXEL_FORMAT_BGRA32

[14:32:44:130] [19743:19744] [INFO][com.freerdp.channels.rdpsnd.client] - [static] Loaded fake backend for rdpsnd

[14:32:44:130] [19743:19744] [INFO][com.freerdp.channels.drdynvc.client] - Loading Dynamic Virtual Channel rdpgfx

[14:32:45:209] [19743:19744] [WARN][com.freerdp.core.rdp] - pduType PDU_TYPE_DATA not properly parsed, 562 bytes remaining unhandled. Skipping.

The connection to the VM has been established.

LoadBalancer connection example

To create the LoadBalancer service, first define it in a YAML file. We will use the Windows VM and expose the RDP port.

apiVersion: v1
kind: Service
metadata:
  name: win11-rdp-lb
  namespace: blog
spec:
  ports:
    - name: rdp
      protocol: TCP
      port: 3389
      targetPort: 3389
  type: LoadBalancer
  selector:
    kubevirt.io/domain: windows11

Let's create the service. Note that it automatically receives an IP address.

$ oc create -f service-windows11-rdp-loadbalancer.yaml

service/win11-rdp-lb created

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

win11-rdp-lb LoadBalancer 172.30.125.205 10.19.3.112 3389:31258/TCP 3s
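The assigned address can also be retrieved directly, which is handy in scripts. The helper below is a sketch with a hypothetical function name; it assumes the load balancer reports an IP address rather than a host name.

```shell
# Print the external IP that the load balancer assigned to a service.
lb_external_ip() {
  local svc="$1" ns="$2"
  oc get service "$svc" --namespace "$ns" \
     -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
# Usage: xfreerdp "/v:$(lb_external_ip win11-rdp-lb blog)" /u:cnvuser
```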

We connect to the service's EXTERNAL-IP and the standard RDP port, 3389, that the service exposes. The xfreerdp output shows that the connection was established successfully.

$ xfreerdp /v:10.19.3.112 /u:cnvuser /p:changeme

[15:51:21:333] [25201:25202] [WARN][com.freerdp.crypto] - Certificate verification failure 'self-signed certificate (18)' at stack position 0

[15:51:21:333] [25201:25202] [WARN][com.freerdp.crypto] - CN = DESKTOP-FCUALC4

[15:51:23:739] [25201:25202] [INFO][com.freerdp.gdi] - Local framebuffer format PIXEL_FORMAT_BGRX32

[15:51:23:739] [25201:25202] [INFO][com.freerdp.gdi] - Remote framebuffer format PIXEL_FORMAT_BGRA32

[15:51:23:752] [25201:25202] [INFO][com.freerdp.channels.rdpsnd.client] - [static] Loaded fake backend for rdpsnd

[15:51:23:752] [25201:25202] [INFO][com.freerdp.channels.drdynvc.client] - Loading Dynamic Virtual Channel rdpgfx

[15:51:24:922] [25201:25202] [WARN][com.freerdp.core.rdp] - pduType PDU_TYPE_DATA not properly parsed, 562 bytes remaining unhandled. Skipping.

I have not included a screenshot here because it would be the same as the one above.

Connecting through a layer 2 interface

If the VM's interface is to be used internally and does not need public exposure, connecting with a NetworkAttachmentDefinition and a bridged interface on the nodes can be a good choice. This method bypasses the cluster's network stack, so the stack does not have to process every packet of data, which can improve network performance.

This method has drawbacks: the VMs are exposed directly to a network and are not protected by any of the cluster's security features. If a VM is compromised, an intruder could gain access to the networks that the VM is connected to. If you use this method, take care to provide appropriate security within the VM's operating system.

NMState

The NMState operator provided by Red Hat can be used to configure physical interfaces on the nodes after the cluster is deployed. Various configurations can be applied, including bridges, VLANs, and bonds. We will use it to configure a bridge on an unused interface on each node in the cluster. See the OpenShift documentation for more information on using the NMState operator.

Let's configure a simple bridge on an unused interface on the nodes. The interface is attached to a network that provides DHCP and hands out addresses on the 10.19.142.0 network. The following YAML creates a bridge named brint on the ens5f1 network interface.

---
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: brint-ens5f1
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    interfaces:
      - name: brint
        description: Internal Network Bridge
        type: linux-bridge
        state: up
        ipv4:
          enabled: false
        bridge:
          options:
            stp:
              enabled: false
          port:
            - name: ens5f1

Apply the YAML file to create the bridge on the worker nodes.

$ oc create -f brint.yaml

nodenetworkconfigurationpolicy.nmstate.io/brint-ens5f1 created

Use the oc get nncp command to view the state of the NodeNetworkConfigurationPolicy, and the oc get nnce command to view the state of each node's configuration. Once the configuration has been applied, STATUS from both commands shows Available and REASON shows SuccessfullyConfigured.

$ oc get nncp

NAME STATUS REASON

brint-ens5f1 Progressing ConfigurationProgressing

$ oc get nnce

NAME STATUS REASON

wwk-0.brint-ens5f1 Pending MaxUnavailableLimitReached

wwk-1.brint-ens5f1 Available SuccessfullyConfigured

wwk-2.brint-ens5f1 Progressing ConfigurationProgressing
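Rather than polling these commands by hand, a script can block until the policy reports the Available condition. This is a sketch; the function name and the five-minute default timeout are assumptions.

```shell
# Block until the NodeNetworkConfigurationPolicy reports condition Available,
# or fail when the timeout expires.
wait_for_nncp() {
  local policy="$1" timeout="${2:-5m}"
  oc wait nncp "$policy" --for=condition=Available --timeout="$timeout"
}
# Usage: wait_for_nncp brint-ens5f1
```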

NetworkAttachmentDefinition

VMs cannot attach directly to the bridge we created, but they can attach to a NetworkAttachmentDefinition (NAD). The following YAML creates a NAD named nad-brint that attaches to the brint bridge created on the nodes. See the OpenShift documentation for an explanation of how to create a NAD.

apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: nad-brint
  annotations:
    k8s.v1.cni.cncf.io/resourceName: bridge.network.kubevirt.io/brint
spec:
  config: '{
    "cniVersion": "0.3.1",
    "name": "nad-brint",
    "type": "cnv-bridge",
    "bridge": "brint",
    "macspoofchk": true
  }'

After applying the YAML, the NAD can be viewed with the oc get network-attachment-definition command.

$ oc create -f brint-nad.yaml

networkattachmentdefinition.k8s.cni.cncf.io/nad-brint created

$ oc get network-attachment-definition

NAME AGE

nad-brint 19s

The NAD can also be created from the UI under Networking > NetworkAttachmentDefinitions.

Layer 2 interface connection example

With the NAD created, a network interface can be added to the VM, or an existing interface can be modified to use it. Let's add a new interface by going to the VM's details and selecting the Network interfaces tab, then choosing Add network interface. An existing interface can be modified through the three-dot (kebab) menu to its right.

After the VM restarts, the Overview tab of the VM's details shows the IP address received from DHCP.
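The same address can be read from the command line through the VirtualMachineInstance status. This is a sketch with a hypothetical function name; addresses on secondary interfaces are typically reported only when the guest agent is running inside the VM, which is an assumption about this environment.

```shell
# Print the IP addresses reported for each interface of a running VM.
vm_ip_addresses() {
  local vm="$1" ns="$2"
  oc get vmi "$vm" --namespace "$ns" \
     -o jsonpath='{.status.interfaces[*].ipAddress}'
}
# Usage: vm_ip_addresses rhel9 blog
```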

We can now connect to the VM using the IP address it acquired from the DHCP server on the bridged interface.

$ ssh cloud-user@10.19.142.213 -i ~/.ssh/id_rsa_cloud-user

The authenticity of host '10.19.142.213 (10.19.142.213)' can't be established.

ECDSA key fingerprint is SHA256:0YNVhGjHmqOTL02mURjleMtk9lW5cfviJ3ubTc5j0Dg.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '10.19.142.213' (ECDSA) to the list of known hosts.

Last login: Wed May 17 11:12:37 2023

[cloud-user@rhel9 ~]$

The SSH traffic passes from the VM through the bridge and out the physical network interface. It bypasses the pod network and is on the network where the bridged interface resides. A VM connected this way is not protected by any firewall, and all of its ports are reachable, including those used for SSH and VNC.

Conclusion

We have seen several methods of connecting to VMs running in OpenShift Virtualization. These methods provide ways to troubleshoot VMs that are not working properly, as well as to connect to them for day-to-day use. VMs can interact with each other locally within the cluster, and systems external to the cluster can reach them either directly or through the cluster's pod network. This eases the transition when moving physical systems and VMs from other platforms to OpenShift Virtualization.
