What are these block devices?

Like CPUs and memory, block devices have been with us in the Linux and UNIX world for a long time, and are here to stay. In this article, I point out some recent developments, and share ideas for debugging and useful tools.

Ever wondered how to do checksumming on a block device? How to have a device appear bigger than the underlying disk? How to compress your disk? This article has something new for everybody.

For character devices, the driver sends/receives single bytes. For block devices, communication is in entire blocks. For example, hard disk or NVMe drivers can present the disk contents for read/write access via device node /dev/sda, and we can imagine the block device as representing the disk as a long lineup of bytes. A user on the Linux system can then open the device, seek to the place which should be accessed, and read or write data.

Many of the software pieces mentioned here take existing block devices, and make a new device available, for example providing transparent compression between both devices. Other layers like LVM can be used on top. This flexibility is one of Linux’s strengths!

Spawning block devices for testing

Let’s assume we want to practice how to replace failed disks in an mdadm RAID setup - for such a setup we need a few fast and disposable block devices.

Probably the simplest way to play with block devices is to create a normal file, and then have it mapped to a block device. We will use dd to create a 32MB file filled with zeros, and losetup to map it to block device /dev/loop0.

$ dd if=/dev/zero of=myfile bs=1M count=32
$ losetup --show -f myfile
/dev/loop0
$ ls -al /dev/loop0
brw-rw----. 1 root disk 7, 0 Jul 1 14:41 /dev/loop0

Loop0 can now be used as a normal block device. The mapping can be torn down later with losetup -d /dev/loop0. Our most basic access test will be to write bytes, and then read them back.

$ echo hugo >/dev/loop0
$ dd if=/dev/loop0 bs=1 count=4
hugo

This test is also interesting when you have 2 systems with Fibre Channel HBAs configured for a commonly shared LUN on a SAN storage: while the LUN is still unused, writing some bytes from one node and then reading them from all nodes, as per the above commands, is a good test.
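The write-and-read-back check can be wrapped in a small helper. This is only a minimal sketch: `roundtrip_check` is a hypothetical name, not an existing tool. It takes a block device such as /dev/loop0 (root needed), or a regular file if you just want to try it out.

```shell
# roundtrip_check TARGET [MARKER]
# Hypothetical helper: writes MARKER to the start of TARGET, reads the same
# number of bytes back, and succeeds only if both match.
# WARNING: this overwrites the first bytes of TARGET.
roundtrip_check() {
    target=$1
    marker=${2:-hugo}
    len=${#marker}
    # conv=notrunc keeps the rest of a regular file in place
    printf '%s' "$marker" | dd of="$target" bs=1 conv=notrunc 2>/dev/null || return 1
    readback=$(dd if="$target" bs=1 count="$len" 2>/dev/null)
    [ "$readback" = "$marker" ]
}

# Example (as root, on an unused device only):
#   roundtrip_check /dev/loop0 && echo "read back OK"
```

For the shared LUN case, the write is done on one node only; on the other nodes just the dd read half is needed.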

A further idea for getting block devices for tests: create an LVM volume, and then a snapshot. You can then perform reads/writes on that LVM snapshot, and destroy it when it is no longer needed.

For some years now, it has also been possible to merge the snapshot back into the original volume. This can also be used for RHEL upgrades: take a snapshot, then upgrade to a new minor release. If your application stops working, you merge the snapshot back, which returns the volume to its state from before the upgrade. If your application is happy with the upgrade, you simply remove the snapshot. The one downside of this procedure is that it involves several steps and is not simple, as /boot does not live on LVM and needs extra consideration. This kbase article has details.
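The snapshot workflow could be sketched as follows. The volume group, volume and snapshot names, the 1G snapshot size, and the helper function names are all placeholders chosen for illustration. By default the script only prints the commands; set DRY_RUN=0 and run it as root to execute them.

```shell
# Dry-run sketch of the LVM snapshot workflow.
# VG, LV and SNAP are placeholder names (assumptions, adapt to your system).
DRY_RUN=${DRY_RUN:-1}
VG=${VG:-vg0}
LV=${LV:-data}
SNAP=${SNAP:-data_snap}

# Print the command in dry-run mode, execute it otherwise
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Take a snapshot of the origin volume
snapshot_create()   { run lvcreate --snapshot --name "$SNAP" --size 1G "$VG/$LV"; }
# Keep the changes made since the snapshot: drop the snapshot
snapshot_discard()  { run lvremove "$VG/$SNAP"; }
# Roll the origin back to the state captured in the snapshot
snapshot_rollback() { run lvconvert --merge "$VG/$SNAP"; }
```

Call snapshot_create before the change, then either snapshot_discard or snapshot_rollback afterwards. Note that a merge into an origin volume which is in use gets deferred until the volume is activated the next time.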

When you are using dd to get an idea about the access speed of a block device, you can use status=progress in newer versions of dd:

$ dd if=/dev/nvme0n1 of=/dev/null status=progress
2518680064 bytes (2.5 GB, 2.3 GiB) copied, 3 s, 840 MB/s^C
[..]

The commands hdparm -t <device> and hdparm -T <device> can also be used to show baseline access speeds of disk devices.

A further idea for test block devices: iSCSI. RHEL comes with iSCSI target software, which makes block devices available, as well as an initiator, the software that consumes them. A single iSCSI target can also be used by multiple initiators. As the access goes over the network, you can also sniff the traffic and inspect it with a tool such as Wireshark, which shows the operations at the SCSI level. If you want to simulate slow block devices, you can set up iSCSI and then apply traffic shaping to the iSCSI network packets.

An extra serving of disks, please!

Suppose you want to investigate how host bus adapters and block devices will get represented under the /sys filesystem, or if a high number of devices is required, then the scsi_debug kernel module can help.

The module emulates a SCSI adapter which is backed not by real storage, but by memory. Running modinfo scsi_debug gives an overview of the parameters. So this command:

modprobe scsi_debug add_host=5 max_luns=10 num_tgts=2 dev_size_mb=16

spawns 100 disks (5x10x2) of 16MB size for us, as seen via cat /proc/partitions. In detail:

- 5 SCSI hosts get emulated (add_host=5)
- 10 LUNs for each target (max_luns=10)
- 2 targets for each host (num_tgts=2)

These options should be chosen carefully, as udev rules are run on new block devices, so high load can be seen for high numbers of disks. You can also use scsi_debug to test device-mapper-multipath.

On a side note, the number of visible disk devices can explode quickly in the real world. Imagine a SAN storage exporting 100 LUNs to a system. The SAN connects with 4 ports to a fabric. We have 2 fiber switches in front of the SAN storage, and then our RHEL system with 2 fiber ports. This results in the 100 LUNs appearing as 1600 block devices on the RHEL system. Thousands of disk devices quickly become a problem when booting - so LUN and path numbers should be considered carefully.
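The device count from this example can be reproduced with a few lines of shell arithmetic. All numbers are taken from the paragraph above; only the variable names are made up.

```shell
# Path count for the SAN example; the numbers come from the text
luns=100
storage_ports=4
switches=2
host_ports=2

# Every LUN is visible once per combination of storage port, switch and host port
paths_per_lun=$((storage_ports * switches * host_ports))
block_devices=$((luns * paths_per_lun))

echo "paths per LUN: $paths_per_lun"
echo "block devices: $block_devices"
```

Changing a single factor, for example halving the number of storage ports, shows how quickly the total reacts.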

Making huge block devices available

Suppose you want to investigate the behaviour with huge block devices. For example, how much data will the XFS filesystem occupy for itself on a 10TB block device?

We already used losetup to map a file to a block device. What happens with a sparse file as the backend? A normal file simply contains a sequence of bytes. Sparse files have holes between the sequences of bytes, where no data has been written. The benefit over writing a sequence of zeros is that these holes are a special file system feature, and use up much less space.

Sparse files can for example be created with compiled C code, with dd, or with truncate.

With this, we can create a 10TB sparse file which on the file system takes up just some KB. We can also map that with losetup to a block device: the device will look like a normal 10TB disk. As soon as we write to the block device, the underlying file will grow.
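As a sketch of the truncate variant, the two helpers below create a sparse file and compare its apparent size with the space that is really allocated. `make_sparse` and `sparse_report` are hypothetical helper names; the stat format options assume GNU coreutils, as shipped with RHEL.

```shell
# make_sparse FILE SIZE: create (or resize) FILE to SIZE without writing data
make_sparse() {
    truncate -s "$2" "$1"
}

# sparse_report FILE: print the apparent size and the bytes really allocated
sparse_report() {
    apparent=$(stat -c %s "$1")   # file length in bytes
    blocks=$(stat -c %b "$1")     # number of allocated blocks
    unit=$(stat -c %B "$1")       # bytes per block as counted by %b
    echo "apparent=$apparent allocated=$((blocks * unit))"
}

# Example:
#   make_sparse myfile 10T && sparse_report myfile
```

Running sparse_report again after writing to the file, or to a loop device backed by it, shows the allocated value growing while the apparent size stays the same.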

So, in case you wondered how much data XFS occupies on the 10TB disk after creating the filesystem:

$ dd if=/dev/zero of=myfile bs=1 count=1 seek=10T
$ du -sh myfile
4.0k myfile
$ losetup --show -f myfile
[..]
$ mkfs.xfs /dev/loop0
$ du -sh myfile
2.0G myfile

These tools and sparse files come in quite handy. Logical Volume Manager (LVM) offers thin provisioning, which can be seen as the enterprise-grade counterpart. Just be sure to carefully monitor how full your backend devices are: with thin provisioning, the system presents bigger block devices than the backend provides, so the backend must never be allowed to fill up completely, as writes can then no longer be carried out.
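One possible way to keep an eye on thin pool usage is to filter the output of lvs. The sketch below is not a complete monitoring solution: `thin_usage_alert` is a hypothetical helper, and "vg0" in the example is a placeholder volume group name.

```shell
# thin_usage_alert THRESHOLD
# Reads "name data_percent" pairs on stdin and prints the volumes which are
# at or above THRESHOLD percent. Lines without a percentage (volumes which
# are not thin provisioned) are skipped.
thin_usage_alert() {
    awk -v limit="$1" 'NF >= 2 && $2 + 0 >= limit + 0 { print $1 " is " $2 "% full" }'
}

# Example (as root):
#   lvs --noheadings -o lv_name,data_percent vg0 | thin_usage_alert 80
```

Run from cron or a systemd timer, such a check can warn early, before the backend fills up.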

A further way to “provide bigger block devices than the backend has” is Virtual Data Optimizer (VDO). VDO is part of RHEL, and reduces the amount of data at the block level through deduplication and compression. With this, a 10TB backend can be presented as 15TB, so if your data is nicely compressible, you can store 15TB of data there.

Block device high availability and mirroring

How to prevent data loss from failing disks? RAID is one way.

With a software RAID, Linux sees the disks as single devices. The software RAID layer assembles the devices into a new block device; mdadm, for example, creates a device /dev/md0.

When the RAID is implemented in hardware, Linux sees just a single device, and is not aware of the underlying hardware RAID. Both software and hardware RAID have their strong and weak points - if in doubt, we can help you to find the best approach for your requirements.

As for software RAID, mdadm is a stable and proven implementation in RHEL, allowing RAIDs over disks, both internal and external ones. LVM has also implemented this functionality for some years; details are here.
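To practice replacing a failed disk with mdadm on disposable devices, a sequence along these lines could be used. This is a sketch under assumptions: the device names are placeholders (for example loop devices created with losetup), and the helper names are made up. By default the commands are only printed; set DRY_RUN=0 and run as root to execute them.

```shell
# Dry-run sketch: build a RAID1 and replace a failed member.
# MD and the member device names are assumptions.
DRY_RUN=${DRY_RUN:-1}
MD=${MD:-/dev/md0}

# Print the command in dry-run mode, execute it otherwise
run() {
    if [ "$DRY_RUN" = 1 ]; then echo "would run: $*"; else "$@"; fi
}

# raid_create DEV1 DEV2: assemble two devices into a mirror
raid_create() {
    run mdadm --create "$MD" --level=1 --raid-devices=2 "$1" "$2"
}

# raid_replace OLD NEW: mark OLD as failed, remove it, then add NEW
raid_replace() {
    run mdadm "$MD" --fail "$1" &&
    run mdadm "$MD" --remove "$1" &&
    run mdadm "$MD" --add "$2"
}

# Example:
#   raid_create /dev/loop0 /dev/loop1
#   raid_replace /dev/loop1 /dev/loop2
```

While the new member is syncing, cat /proc/mdstat shows the rebuild progress.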

If SAN storage is connected, then most likely the SAN is internally doing RAID, to protect data against disk failure. When interacting with SAN, we are likely to see the LUNs available as many disks, and use dm-multipath to abstract all of these disk-devices to the single LUNs which the SAN actually exports. dm-multipath allows us here to survive failure of components (fiber cables, fiber switches, etc.) as well as to balance I/O over the available paths.

Balancing performance vs. price

We seem to be in the last part of the era of spinning disks. They are big, cheap and (compared to newer technology) slow. Technologies like NVMe and SSD are faster, but more expensive. Using dm-cache can help to get the best of both worlds: with dm-cache, we can put NVMe devices in front of disk devices, and have the NVMe act as a cache. Details are here and here.

Integrity checks: has data been modified?

If you store data via a block device on a hard disk, have you ever wondered what would happen if a read operation returned something other than what was written?

Hard disk vendors specify the mean time between failures for their disks, and with the ever increasing disk sizes, data integrity is something to consider.

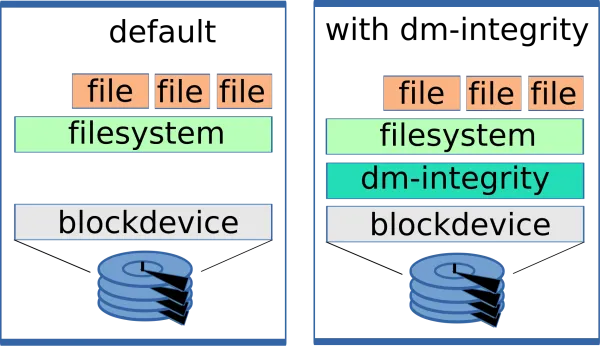

If we run a plain file system like XFS on the hard disk, and the disk returns modified bytes, then the file system is unlikely to notice. XFS supports checksums over metadata, but not over the stored data itself.

To counter this, Device Mapper integrity (dm-integrity) can be used on newer RHEL 8 versions. With this layer on top of the hard disk device, written data is stored with a checksum, which allows us to detect when the disk returns incorrect data.
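The idea of detecting modified data via per-block checksums can be illustrated in user space. The sketch below is not how dm-integrity is implemented; it only shows the principle. `block_sums` is a hypothetical helper, and it works on regular files, for example the backing file of a loop device.

```shell
# block_sums FILE [BLOCKSIZE]: print "<block number> <sha256>" for each block
block_sums() {
    file=$1
    bs=${2:-4096}
    size=$(stat -c %s "$file")
    # Round up, so that a partial last block is covered as well
    count=$(( (size + bs - 1) / bs ))
    i=0
    while [ "$i" -lt "$count" ]; do
        sum=$(dd if="$file" bs="$bs" skip="$i" count=1 2>/dev/null | sha256sum | cut -d' ' -f1)
        echo "$i $sum"
        i=$((i + 1))
    done
}

# Example:
#   block_sums myfile > sums.before
#   ... later ...
#   block_sums myfile | diff sums.before -
```

Any block whose content has changed in the meantime shows up in the diff with its block number.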

We can also conveniently use dm-integrity together with LUKS, which is a layer to encrypt block devices. When installing Linux on a laptop, using LUKS is recommended.

DIF/DIX: integrity checks over all layers

Suppose we are worried about data corruption on the full stack, the whole way until the data gets written to the block device: what could be done about that?

In today’s setups, many layers can be involved when data moves: an application in a KVM guest writes data, it passes through filesystem and LVM in the guest, on to the hypervisor, which writes to LUNs on SAN storage. If data gets mangled, all of these layers need to be looked at.

DIF/DIX is an approach to protect against modification across these layers: when the application writes data, an extra checksum is attached, the Data Integrity Field (DIF). That checksum stays with the data through all layers, until eventually the storage verifies the DIF, and stores both the data and the DIF. When reads occur, the checksum is also verified by the storage device. This is a bit like dm-integrity, but with the verification directly done in the application layer.

From the application side, just a few applications (for example Oracle databases) support DIF/DIX, and also just a few storage vendors. Red Hat is not certifying DIF/DIX compatibility, but working with partners to ensure that DIF/DIX is possible, so the Linux layers can deal with it.

In the future, more applications and storage solutions might start to support DIF/DIX. Details are here.

Diagnostics

Let’s also consider some diagnostics around block devices. Both cat /proc/partitions and lsblk are often used to get an overview of the system’s block devices.

The command iostat from the sysstat package provides details on the I/O operations on block devices, throughput of the device, and estimations of the device saturation. When a device gets saturated, further investigation might make sense.
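The raw counters behind such statistics are exposed by the kernel in /proc/diskstats. As a rough sketch, the hypothetical helper `disk_bytes` below turns the sector counters into bytes read and written since boot; it does not replace iostat, which computes rates over intervals.

```shell
# disk_bytes [FILE]: print bytes read and written per device since boot.
# The input has the format of /proc/diskstats: field 3 is the device name,
# field 6 the sectors read, field 10 the sectors written. These counters
# use 512-byte sectors, independent of the device's real sector size.
disk_bytes() {
    awk '{ printf "%s read=%.0f written=%.0f\n", $3, $6 * 512, $10 * 512 }' \
        "${1:-/proc/diskstats}"
}

# Example:
#   disk_bytes | grep -w sda
```

Calling it twice with a pause in between and comparing the values gives a crude throughput figure for the interval.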

The blktrace command generates traces of I/O traffic, and iotop shows details of the throughput. Performance Co-Pilot (PCP) is our default solution to show live throughput, and to create archive files for later examination. With Redis and Grafana, these metrics can also be nicely visualized.

With the bcc suite, we can run code in kernel space which helps us very much in the block layers:

- bcc/trace: Who/which process is executing specific functions against block devices?
- bcc/biosnoop: Which process is accessing the block device, how many bytes are accessed, and what is the latency for answering the requests?

Summary

As we have seen, the Linux world provides many tools and technologies around block devices, and new options become available almost weekly. Creating block devices for testing is easy, which makes it simple to try things out. These technologies can be combined, allowing great flexibility.

Stacking layers together once might work, but it is also important that the full stack gets assembled again after reboots and RHEL updates. If in doubt, please reach out to your Technical Account Manager (TAM) or Red Hat support to confirm whether combinations are tested and recommended.

About the author

Christian Horn is a Senior Technical Account Manager at Red Hat. After working with customers and partners since 2011 at Red Hat Germany, he moved to Japan, focusing on mission critical environments. Virtualization, debugging, performance monitoring and tuning are among the returning topics of his daily work. He also enjoys diving into new technical topics, and sharing the findings via documentation, presentations or articles.
