Some people view Kubernetes management platforms as "just another layer" in the stack or, worse, as additional complexity. When "keep the lights on" and "get this application deployed quickly" are your main metrics and the basis of your key performance indicators (KPIs), that's logical.

But that's not accurate once you dive deeper into your applications and their dependencies. When deciding where to host your applications, you must consider that they may later need to be migrated or used in hybrid cloud environments. This means you need to architect for portability.

This is where a well-orchestrated Kubernetes management platform is valuable. It takes work off your teams by making it simpler to resolve external dependencies so that your teams can focus on the application's key functionalities.

How to measure portability

Portability time objective (PTO) is the maximum time that is acceptable for moving applications and data. One example is migrating from on-premises to the cloud. PTO is a well-known KPI to help organizations establish governance in their cloud strategy.

Business scenarios include:

- Design for no PTO: You want the quickest possible solution. You don't care about the ability to port, and you are willing to accept lock-in risk.

- Design for a PTO of < 1 month: You want a sustainable solution with the flexibility to run in multiple locations on multiple cloud providers and therefore need portability.

- Design for a PTO of 3-6 months: You've decided that the solution needs to be portable with a PTO of 3-6 months. Your target could be another public cloud provider.

PTO is a metric to consider as part of your migration efforts. It is often worth combining multiple application dependencies in a platform to influence your PTO.
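As a rough illustration of how moving dependencies into the platform can influence PTO, the sketch below sums hypothetical migration efforts and compares the total with a PTO target. Every name and number here (`Dependency`, `migration_days`, the day counts, the 30-day target) is an assumption made up for the example, and it simplifies by treating dependencies handled inside the platform as costing no extra migration effort.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Dependency:
    name: str
    migration_days: int  # hypothetical effort to re-create it at the target
    in_platform: bool    # True if it moves with the platform configuration


def estimated_migration_days(app_days: int, deps: list[Dependency]) -> int:
    """Application effort plus effort for each dependency outside the platform."""
    return app_days + sum(d.migration_days for d in deps if not d.in_platform)


def meets_pto(app_days: int, deps: list[Dependency], pto_days: int) -> bool:
    """Check whether the estimated effort fits within the PTO target."""
    return estimated_migration_days(app_days, deps) <= pto_days


# Illustrative estimates only; real figures depend on your environment.
deps_external = [
    Dependency("DNS", 10, False),
    Dependency("certificates", 10, False),
    Dependency("load balancer", 5, False),
    Dependency("monitoring and logging", 15, False),
]
# The same dependencies, now resolved inside the platform.
deps_platform = [Dependency(d.name, d.migration_days, True) for d in deps_external]

PTO_DAYS = 30  # roughly the "PTO of < 1 month" scenario

print(estimated_migration_days(10, deps_external))  # 50
print(meets_pto(10, deps_external, PTO_DAYS))       # False
print(meets_pto(10, deps_platform, PTO_DAYS))       # True
```

The point of the sketch is the comparison, not the numbers: the same application misses or meets the same target depending on how many dependencies have to be rebuilt at the destination.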

Options for managing Kubernetes services

Maintaining Kubernetes can be difficult. Many books, articles, and blogs will teach you to self-manage Kubernetes. Other solutions, including Red Hat OpenShift, reduce the load on your teams. Taking this idea further, Kubernetes container management platforms are available as managed services, such as Red Hat OpenShift Service on AWS (ROSA).

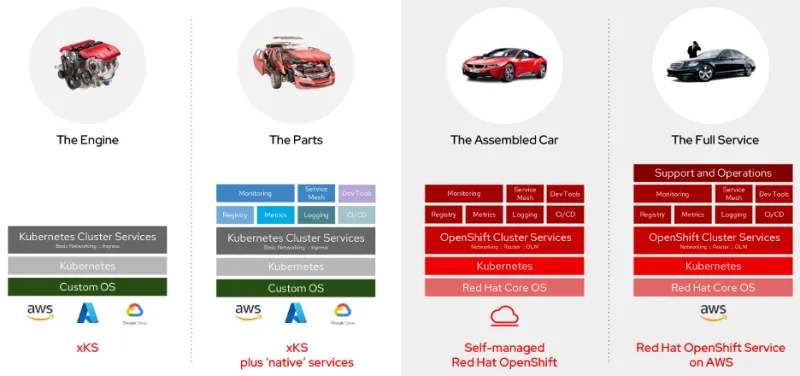

Every Kubernetes deployment looks different because moving applications to production requires additional components, including the underlying operating system, the container engine, logging, monitoring, DNS, certificate management, node scaling, and more. Rather than just an "assembled car," ROSA integrates the whole stack and provides a "taxi service" for building and running your applications.

This diagram shows the differences between Kubernetes on its own (the car's engine), with additional services (parts), self-managed OpenShift (the car), and ROSA (the taxi service).

A hands-on example

This article describes a way to achieve PTO using ROSA. It uses Google Cloud Platform's microservices demo, but you could use any simple web application. Google's example of a whole shop application is well suited for this case because it shows how application teams can roll out a complete application with all DNS and TLS certificates. These are close to real-life dependency requirements.

Install the managed OpenShift platform

ROSA is available on the AWS Marketplace from the AWS console, alongside other native AWS services. The installation is pretty straightforward; see the ROSA quickstart for guidance.

ROSA provides:

- A trusted enterprise Kubernetes

- Built-in security features

- The ability to add services with the click of a button using the Operator Framework

ROSA offloads Kubernetes base administration tasks and responsibilities to your provider of choice. This feature is also available with other hyperscalers. See the ROSA service definition for information on the policies and service definitions in the managed service.

Roll out the example application

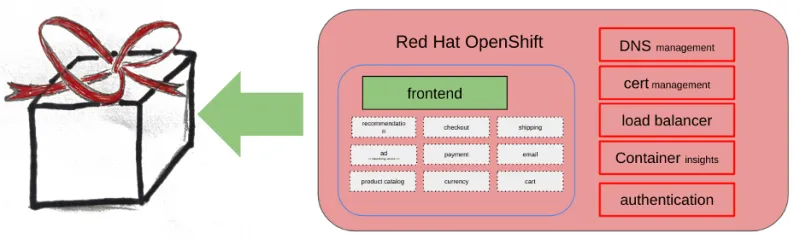

Once your OpenShift environment is up and running, you'll have an application architecture like the one in the diagram above.

There are still some external dependencies. Your hyperscaler's tools (which will differ from platform to platform) will handle these. These include:

- Certificate management

- Load balancing

- Container insights (such as monitoring, logging, and autoscaling)

You want to resolve these external dependencies to gain independence, which will help you simplify migration.

Your OpenShift environment with a default Google microservice is up and running. The process takes about three hours, with an hour spent waiting while the complete platform is provisioned in the background. The basic setup can take a full work day if you don't have experience setting up ROSA.

Replace external dependencies

Red Hat OpenShift comes with many Operators. This example scenario keeps things simple and replaces certain external dependencies:

- DNS management: For your application, you want to use an external DNS provider's service to have a specific DNS domain like shop.<your-domain> with each of your deployments. Read "A little bit of security is what I want" for more on enabling your developers to keep DNS management separate from application deployment.

- Certificate management: You want to integrate with an external certificate authority (CA) to request and manage certificates.

- Load balancer: You want this to happen automatically rather than being an external dependency.

- Container insights: This shouldn't be dependent on provider-specific services.

- Authentication: Leverage your enterprise authentication to control and secure access to the platform with minimal effort in case you migrate your app.

Your deployment should look like the diagram below to use the platform's power with PTO in mind.

Once configured in OpenShift, those additional services can be migrated along with your deployment(s) to any other provider. The effort you spend to set this up now will be saved with future rollouts.
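One way to keep track of this is a small inventory that records, for each dependency in the list above, whether it is still provider-specific or already handled by the platform. The sketch below is only an illustration: the labels, the `remaining_provider_dependencies` helper, and the choice of which dependencies have been moved are hypothetical, not a description of this article's actual setup.

```python
from __future__ import annotations

EXTERNAL = "provider-specific"
PLATFORM = "platform-managed"


def remaining_provider_dependencies(inventory: dict[str, str]) -> list[str]:
    """Return the dependencies that would need rework at a new provider."""
    return sorted(name for name, owner in inventory.items() if owner == EXTERNAL)


# Starting point: every dependency is handled by the hyperscaler's tools.
before = {
    "DNS management": EXTERNAL,
    "certificate management": EXTERNAL,
    "load balancer": EXTERNAL,
    "container insights": EXTERNAL,
    "authentication": EXTERNAL,
}

# Hypothetical intermediate state: three dependencies moved into the platform.
after = dict(before)
for name in ("DNS management", "certificate management", "authentication"):
    after[name] = PLATFORM

print(remaining_provider_dependencies(before))
print(remaining_provider_dependencies(after))
# ['container insights', 'load balancer']
```

An empty result would mean nothing in the inventory is tied to a single provider anymore.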

Integrate your enterprise authentication provider

You don't want to use a local authentication provider. You need all authentication and authorization to move with your deployment whenever you migrate your application to another location.

OpenShift provides the ability to add authentication to your platform. This demo uses Google as the identity provider. Following an automation-first approach, in which all customizations are made through an API or the command line, permits smoother migrations to another environment. For example, adding Google as the identity provider looks like this:

rosa create idp --type=google --client-id=<ID>.apps.googleusercontent.com --client-secret=<SECRET> --mapping-method=claim --hosted-domain=redhat.com --cluster=winkelschleifer --name=Google-RedHat

To keep this example simple, I used OpenShift's capabilities for authorization. You grant a user the dedicated-admin role on the cluster with:

rosa grant user dedicated-admin --user=<user1>@redhat.com --cluster=winkelschleifer

The goal was to secure the deployment by adding authentication, and OpenShift's built-in capabilities cover it. Because the authentication provider is configured in OpenShift, that configuration can be migrated to another environment with minimal effort.
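To make the automation-first idea concrete, the two commands above can be generated from parameters so the same configuration is repeatable on another cluster. This is a minimal sketch: the helper names, the cluster names, and the example values are hypothetical, and it uses only the `rosa` subcommands and flags already shown in this article. It prints the commands rather than running them.

```python
from __future__ import annotations

import shlex


def idp_command(cluster: str, client_id: str, client_secret: str,
                hosted_domain: str, name: str) -> list[str]:
    """Build the `rosa create idp` call for a Google identity provider."""
    return [
        "rosa", "create", "idp",
        "--type=google",
        f"--client-id={client_id}",
        f"--client-secret={client_secret}",
        "--mapping-method=claim",
        f"--hosted-domain={hosted_domain}",
        f"--cluster={cluster}",
        f"--name={name}",
    ]


def grant_admin_command(cluster: str, user: str) -> list[str]:
    """Build the `rosa grant user dedicated-admin` call for one user."""
    return [
        "rosa", "grant", "user", "dedicated-admin",
        f"--user={user}",
        f"--cluster={cluster}",
    ]


# Hypothetical cluster names; the secret is a placeholder, not a real value.
for cluster in ("cluster-a", "cluster-b"):
    print(shlex.join(idp_command(cluster, "<ID>.apps.googleusercontent.com",
                                 "<SECRET>", "example.com", "Google-Example")))
    print(shlex.join(grant_admin_command(cluster, "user1@example.com")))
```

Returning argument lists instead of a single string means the commands could later be passed to `subprocess.run` without shell quoting issues, and in practice the client secret would come from a secrets store rather than from source code.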

DNS and certificate management

In "A little bit of security is what I want," I focused on the technical details of how OpenShift Operators interact and enable you to roll out applications. This approach helps you follow agile principles, especially autonomy, as the platform and the Operators help you meet security requirements.

A key aspect is separating the duties of the platform administrators from the development teams.

While the SRE team in charge of the platform prepares the Operators, the development teams can roll out their applications.

You can now rely on the platform for DNS and certificate management. Frequent deployments on any platform are manageable because the deployment target no longer matters.

Wrap up

The pieces that add to migration costs include:

- DNS record management

- Certificate management

- Load balancer management

- Secrets integration

- Scaling

- Identity provider (IDP) integration

A Kubernetes platform like OpenShift puts all these bits and pieces together in a proper package that can be deployed in a completely automated manner, reducing the lead time to prepare environments to a minimum.

You can delegate these preparation topics to OpenShift and dramatically reduce your migration efforts. This approach puts quicker migrations and multicloud portability within reach with minimal resource investments.

About the author

Manfred is working as a Senior Solution Architect at Red Hat. His focus areas are customers in the automotive industry. His career started in the 90s working with FreeBSD and C programming. Over the years, the size of his projects has grown. Today, he's happy to see how state-of-the-art approaches help customer teams deploy fast and securely.
