In my previous article, How to back up Kubernetes persistent volumes with the VolSync add-on, I described using VolSync to back up persistent volumes in Kubernetes. This article explains how to use Submariner, which is also a Red Hat Advanced Cluster Management (ACM) for Kubernetes add-on. As Submariner's website explains:

Submariner enables direct networking between Pods and Services in different Kubernetes clusters, either on-premises or in the cloud.

Why Submariner?

As Kubernetes gains adoption, teams are finding they must deploy and manage multiple clusters to facilitate features like geo-redundancy, scale, and fault isolation for their applications. With Submariner, your applications and services can span multiple cloud providers, datacenters, and regions.

Submariner is completely open source and designed to be network plugin (CNI) agnostic.

What Submariner provides

- Cross-cluster L3 connectivity using encrypted or unencrypted connections

- Service Discovery across clusters

- subctl, a command-line utility that simplifies deployment and management

- Support for interconnecting clusters with overlapping Classless Inter-Domain Routing (CIDRs)

This article serves a few purposes. I'll show the Submariner installation process first and then change the VolSync configuration I covered in my previous article. Specifically, I'm replacing the load balancer (such as MetalLB) that exposed VolSync with Submariner, which makes networking between pods in different clusters easier.

Assume you have a limited number of external IP addresses you can assign. With the MetalLB solution, each ReplicationDestination would need an ExternalIP resource. If another team manages the IP space in your organization, it might take some time to get these IPs. If your cluster is directly on the internet (not typical), you may need to purchase additional IPs.

Submariner eliminates this IP problem and solves several other issues. The configuration steps in this article are based on section 1.1.2.1 of the documentation on the Red Hat ACM site.


You must have the following prerequisites before using Submariner:

- You need a credential to access the hub cluster with cluster-admin permissions.

- IP connectivity must be configured between the Gateway nodes. When connecting two clusters, at least one of the clusters must be accessible to the Gateway node using the public or private IP address designated to the Gateway node. See Submariner NAT traversal for more information.

- If you are using OVN-Kubernetes, clusters must be at Red Hat OpenShift Container Platform (OCP) version 4.11 or later.

- If your OCP clusters use OpenShift SDN CNI, the firewall configuration across all nodes in each of the managed clusters must allow 4800/UDP in both directions.

- The firewall configuration must allow 4500/UDP and 4490/UDP on the gateway nodes for establishing tunnels between the managed clusters.

Note: This is configured automatically when your clusters are deployed in an Amazon Web Services, Google Cloud Platform, Microsoft Azure, or Red Hat OpenStack environment, but must be configured manually for clusters on other environments and for the firewalls that protect private clouds.

- The managedclustername must follow the DNS label standard defined in RFC 1123 and meet the following requirements:

  - Contain 63 characters or fewer

  - Contain only lowercase alphanumeric characters or hyphens (-)

  - Start with an alphanumeric character

  - End with an alphanumeric character
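The naming rules above can be checked before you import or label a cluster. The following is a minimal sketch in POSIX shell; the function name is_valid_cluster_name and the sample names are assumptions made for illustration and are not part of ACM or Submariner.

```shell
#!/bin/sh
# Hypothetical helper: check a managed cluster name against the
# RFC 1123 DNS label rules listed above.
is_valid_cluster_name() {
  name=$1
  # Must contain between 1 and 63 characters.
  [ "${#name}" -ge 1 ] && [ "${#name}" -le 63 ] || return 1
  case $name in
    # Reject anything other than lowercase alphanumerics or '-'.
    *[!abcdefghijklmnopqrstuvwxyz0123456789-]*) return 1 ;;
    # Must start and end with an alphanumeric character.
    -*|*-) return 1 ;;
  esac
  return 0
}

# Sample names (illustrative only).
for candidate in spoke1 sno-spoke1 Spoke_2 -spoke3; do
  if is_valid_cluster_name "$candidate"; then
    echo "$candidate: valid"
  else
    echo "$candidate: invalid"
  fi
done
```

The character class is spelled out in full rather than written as a-z so that the result does not depend on the shell's locale settings.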

All of my clusters are currently bare-metal single-node OpenShift (SNO) clusters, so each SNO host is its cluster's gateway node. A site-to-site VPN provides direct connectivity between the clusters. These clusters run OCP 4.11 with OVN-Kubernetes.

You must open some additional, required ports if there is a firewall between the clusters:

- IPsec NATT:

  - Default value: 4500/UDP

  - Customizable

- VXLAN:

  - Default value: 4800/UDP

  - Not customizable

- NAT discovery port:

  - Default value: 4490/UDP

  - Not customizable
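How you open these ports depends entirely on the firewall in use. As one hedged illustration, assuming the firewall is a Linux host running firewalld (an assumption; the article does not say what sits between the clusters), this sketch only prints the commands for the three default ports so you can review them before running anything.

```shell
#!/bin/sh
# Default Submariner ports from the list above. Adjust 4500/udp if you
# customized the IPsec NATT port.
submariner_ports="4500/udp 4800/udp 4490/udp"

# Print (do not execute) firewalld commands for each port.
# Assumption: the firewall host uses firewalld.
print_firewalld_rules() {
  for port in $submariner_ports; do
    echo "firewall-cmd --permanent --add-port=$port"
  done
  echo "firewall-cmd --reload"
}

print_firewalld_rules
```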

I will cover the following topics:

- Installing the Submariner add-on to spoke1/spoke2 clusters

- Enabling Service Discovery

- Changing VolSync to use ClusterIP instead of LoadBalancer


1. Install the Submariner add-on to the spoke1 and spoke2 clusters

I will deploy Globalnet, a component that supports overlapping CIDRs in connected clusters. It is required because I used the default settings for my Services/ClusterIPs on each cluster. The default setting is 172.30.0.0/16. I use a virtual network and NAT (provided by Globalnet) to allow the services on each cluster to talk.

You can find the YAML code for this article in my GitHub repo.

- Create the ManagedClusterSet resource called submariner. spoke1 and spoke2 will become members of it. Apply the resource from the hub cluster.

apiVersion: cluster.open-cluster-management.io/v1beta2
kind: ManagedClusterSet
metadata:
  name: submariner

oc apply -f mcs-submariner.yaml

- Now, create the broker configuration on the hub.

apiVersion: submariner.io/v1alpha1
kind: Broker
metadata:
  name: submariner-broker
  namespace: submariner-broker
  labels:
    cluster.open-cluster-management.io/backup: submariner
spec:
  globalnetEnabled: true

oc apply -f submariner-broker.yaml

- Label spoke1 and spoke2 as part of the submariner ManagedClusterSet created in step 1.

oc label managedclusters spoke1 "cluster.open-cluster-management.io/clusterset=submariner" --overwrite

oc label managedclusters spoke2 "cluster.open-cluster-management.io/clusterset=submariner" --overwrite

- Create the Submariner configuration for spoke1 and spoke2. This also runs from the hub. The VXLAN driver works best in my environment; I assume this is due to an issue with double encryption/encapsulation with my site-to-site VPN.

apiVersion: submarineraddon.open-cluster-management.io/v1alpha1
kind: SubmarinerConfig
metadata:
  name: submariner
  namespace: spoke1
spec:
  cableDriver: vxlan

oc apply -f submariner-config-spoke1.yaml

apiVersion: submarineraddon.open-cluster-management.io/v1alpha1
kind: SubmarinerConfig
metadata:
  name: submariner
  namespace: spoke2
spec:
  cableDriver: vxlan

oc apply -f submariner-config-spoke2.yaml

- Create the submariner ManagedClusterAddOn for spoke1 and spoke2 on the hub.

apiVersion: addon.open-cluster-management.io/v1alpha1
kind: ManagedClusterAddOn
metadata:
  name: submariner
  namespace: spoke1
spec:
  installNamespace: submariner-operator

oc apply -f mca-spoke1.yaml

apiVersion: addon.open-cluster-management.io/v1alpha1
kind: ManagedClusterAddOn
metadata:
  name: submariner
  namespace: spoke2
spec:
  installNamespace: submariner-operator

oc apply -f mca-spoke2.yaml

- After you run these two commands, check the status.

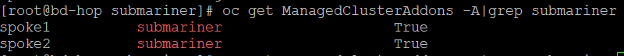

oc get ManagedClusterAddons -A|grep submariner

To confirm that the ManagedClusterAddOn is enabled, make sure the AVAILABLE column shows True for submariner on both spoke1 and spoke2.

Next, check if the appropriate workloads were enabled on spoke1 and spoke2 to support Submariner.

oc -n spoke1 get managedclusteraddons submariner -oyaml

Wait a few minutes and check the conditions.

- Ensure that the SubmarinerAgentDegraded condition shows False.

- Ensure that the SubmarinerConnectionDegraded condition shows False.
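Instead of reading through the full YAML, you can pull out just these two conditions with a JSONPath filter. This is a minimal sketch; the function name submariner_addon_conditions is hypothetical, and it assumes you are logged in to the hub cluster with oc.

```shell
#!/bin/sh
# Hypothetical helper: print the two Submariner health conditions for one
# managed cluster. The argument is the managed cluster's namespace on the hub.
submariner_addon_conditions() {
  cluster=$1
  for cond in SubmarinerAgentDegraded SubmarinerConnectionDegraded; do
    # Select the status of the condition whose type matches $cond.
    status=$(oc -n "$cluster" get managedclusteraddons submariner \
      -o jsonpath="{.status.conditions[?(@.type==\"$cond\")].status}")
    # An empty result means the condition has not been reported yet.
    echo "$cluster $cond=${status:-unknown}"
  done
}

# Usage (requires a logged-in oc session on the hub):
#   submariner_addon_conditions spoke1
#   submariner_addon_conditions spoke2
```

Both lines should end in False once the add-on has settled. A value of unknown usually just means the agent has not reported yet, so wait and run it again.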


Another way to check the status is with the subctl utility. The following commands download it and install the binary in /usr/local/bin so that you can run it from your bastion or jump host:

# Install podman if not already installed

yum install podman -y

# Log in to the Red Hat registry

podman login registry.redhat.io

# Download image and extract tar.xz file to /tmp

oc image extract registry.redhat.io/rhacm2/subctl-rhel8:v0.14 --path="/dist/subctl-v0.14*-linux-amd64.tar.xz":/tmp/ --confirm

# Extract the tar.xz archive

tar -C /tmp/ -xf /tmp/subctl-v0.14*-linux-amd64.tar.xz

# Copy subctl binary to /usr/local/bin and change permissions

install -m744 /tmp/subctl-v0.14*/subctl-v0.14*-linux-amd64 /usr/local/bin/subctl

From one of the clusters in the ManagedClusterSet (I picked spoke2), run the following command:

subctl show connections

This command shows that sno-spoke1 is connected to spoke2.


2. Enable ServiceDiscovery

The documentation provides the following example to test whether ServiceDiscovery is working.

1. Begin with spoke2. The following commands download an unprivileged Nginx workload to the default project/namespace and expose it as a service on port 8080:

oc -n default create deployment nginx --image=nginxinc/nginx-unprivileged:stable-alpine

oc -n default expose deployment nginx --port=8080

Export the nginx service so that Service Discovery makes it available to the other clusters in the ClusterSet:

subctl export service --namespace default nginx
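Exporting a service with subctl creates a ServiceExport resource with the same name and namespace as the service. If you prefer to keep that resource in version control with your other YAML, the sketch below prints an equivalent manifest. The function name service_export_manifest is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print a ServiceExport manifest.
# $1 = namespace, $2 = service name
service_export_manifest() {
  cat <<EOF
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  name: $2
  namespace: $1
EOF
}

# Usage (on the cluster that hosts the service, here spoke2):
#   service_export_manifest default nginx | oc apply -f -
```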

2. Change to spoke1 and attempt to reach this service. Create a nettest project and then a deployment.

oc new-project nettest

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nettest
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nettest
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nettest
        deploymentconfig: nettest
    spec:
      containers:
        - name: nettest
          image: quay.io/submariner/nettest
          command:
            - sleep
            - infinity
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: Always
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext: {}
      schedulerName: default-scheduler
      imagePullSecrets: []
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600
  paused: false

oc create -f deployment.yaml

Find the name of the pod and remote shell (RSH) into it.

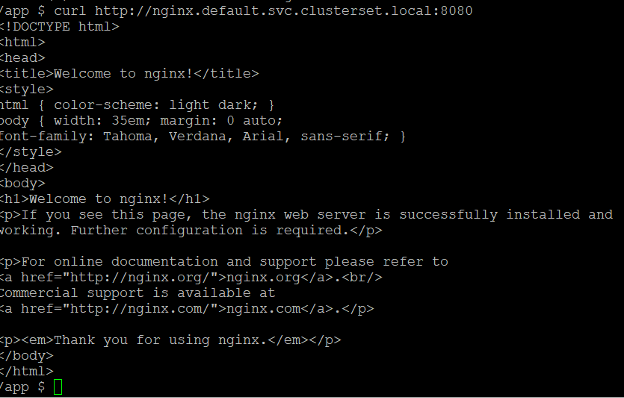

This output proves that network connectivity and DNS resolutions work across this ClusterSet.
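If you want to repeat that check without an interactive shell, the sketch below runs curl inside the nettest deployment against the exported service's ClusterSet DNS name. The function name test_clusterset_service is hypothetical, and the sketch assumes the nettest deployment from the previous step is running on spoke1 and that its image includes curl.

```shell
#!/bin/sh
# Hypothetical helper: request an exported service through its
# <service>.<namespace>.svc.clusterset.local name from the nettest pod.
# $1 = service name, $2 = service namespace, $3 = port
test_clusterset_service() {
  svc=$1
  ns=$2
  port=$3
  # Run curl in a pod of the nettest deployment (nettest namespace).
  oc -n nettest exec deployment/nettest -- \
    curl -sS --max-time 10 "http://$svc.$ns.svc.clusterset.local:$port"
}

# Usage (logged in to spoke1):
#   test_clusterset_service nginx default 8080
```

A returned Nginx welcome page indicates that both DNS resolution and the cross-cluster data path work. A DNS error points at Service Discovery, while a timeout points at the tunnel.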

3. Change VolSync to use ClusterIP instead of LoadBalancer

In my VolSync article, I used a load balancer (MetalLB) to expose the ReplicationDestination resource. I will change this to use ClusterIP and export the service across the ClusterSet.

1. Review the replicationDestination service on spoke2. In my cluster, the project is called myopenshiftblog.

oc project myopenshiftblog

oc get svc

Since the service is of type LoadBalancer, edit the ReplicationDestination resource and set serviceType: ClusterIP.

# Get appropriate replicationDestination if there is more than one

oc get replicationDestination

oc edit replicationDestination myopenshiftblog
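The same change can be made non-interactively with a merge patch, which is easier to script than oc edit. This is a sketch that assumes the rsync-based ReplicationDestination from the previous article, where the field lives at spec.rsync.serviceType. The function name set_destination_service_type is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: set spec.rsync.serviceType on a ReplicationDestination.
# $1 = namespace, $2 = ReplicationDestination name, $3 = service type
set_destination_service_type() {
  ns=$1
  name=$2
  type=$3
  oc -n "$ns" patch replicationdestination "$name" --type merge \
    -p "{\"spec\":{\"rsync\":{\"serviceType\":\"$type\"}}}"
}

# Usage (logged in to spoke2):
#   set_destination_service_type myopenshiftblog myopenshiftblog ClusterIP
```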

2. Export the service with subctl. Specify the namespace and the name of the VolSync service.

# Get the svc name

oc get svc

subctl export service --namespace myopenshiftblog volsync-rsync-dst-myopenshiftblog

There should be another svc exposed in addition to the regular svc.

oc get svc

A 241.1.255.252 GlobalNetIP was assigned.

3. Test connectivity from the spoke1 cluster to this resource.

Assuming you still have the nettest pod deployed, run the following from the RSH prompt:

dig volsync-rsync-dst-myopenshiftblog.myopenshiftblog.svc.clusterset.local

The address now resolves.

4. On spoke1, point the ReplicationSource at the new DNS-based ClusterSet name (volsync-rsync-dst-myopenshiftblog.myopenshiftblog.svc.clusterset.local). Edit the rsync.address field in the spec:

oc project myopenshiftblog

oc edit replicationsource myopenshiftblog
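As with the destination, a merge patch can replace the interactive edit. This sketch assumes the rsync-based ReplicationSource from the previous article, where the address lives at spec.rsync.address. The function name set_source_address is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: set spec.rsync.address on a ReplicationSource.
# $1 = namespace, $2 = ReplicationSource name, $3 = destination address
set_source_address() {
  ns=$1
  name=$2
  address=$3
  oc -n "$ns" patch replicationsource "$name" --type merge \
    -p "{\"spec\":{\"rsync\":{\"address\":\"$address\"}}}"
}

# Usage (logged in to spoke1):
#   set_source_address myopenshiftblog myopenshiftblog \
#     volsync-rsync-dst-myopenshiftblog.myopenshiftblog.svc.clusterset.local
```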

5. Since I scheduled this replication to run every three minutes, a sync should start shortly. Check the result:

oc get replicationsource myopenshiftblog

This example shows a successful sync using the new DNS name (provided by Submariner and Globalnet).
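To see only the timing fields rather than the whole resource, you can read them from the ReplicationSource status with JSONPath. This is a sketch; the function name show_sync_status is hypothetical, and the status fields are empty until the first sync has completed.

```shell
#!/bin/sh
# Hypothetical helper: print the last and next sync times of a
# ReplicationSource. $1 = namespace, $2 = ReplicationSource name
show_sync_status() {
  ns=$1
  name=$2
  oc -n "$ns" get replicationsource "$name" \
    -o jsonpath='last={.status.lastSyncTime} next={.status.nextSyncTime}{"\n"}'
}

# Usage (logged in to spoke1):
#   show_sync_status myopenshiftblog myopenshiftblog
```

A lastSyncTime that advances after you changed the address suggests that the sync is now going through the ClusterSet DNS name.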


Wrap up

Submariner provides an alternative to the VolSync configuration I covered in my previous article. It enables pod and service networking across clusters, which makes it another option alongside solutions such as the MetalLB load balancer.

This originally appeared on myopenshiftblog and is republished with permission.

About the author

Keith Calligan is an Associate Principal Solutions Architect on the Tiger Team specializing in the Red Hat OpenShift Telco, Media, and Entertainment space. He has approximately 25 years of professional experience in many aspects of IT with an emphasis on operations-type duties. He enjoys spending time with family, traveling, cooking, Amateur Radio, and blogging. Two of his blogging sites are MyOpenshiftBlog and MyCulinaryBlog.
