Kuryr and OpenShift on OpenStack

OpenShift on OpenStack

Many cloud applications are moving to containers while many others are running on virtual instances, leading to the need for containers and VMs to coexist in the same infrastructure. Red Hat OpenShift running on top of OpenStack covers this use case as an on-prem solution, providing in turn high levels of automation and scalability.

OpenShift takes advantage of the OpenStack APIs, integrating with OpenStack services such as Cinder, Neutron, and Octavia.

Introduction to Kuryr

Kuryr is a CNI plugin that uses Neutron and Octavia to provide networking for pods and services. It is primarily designed for OpenShift clusters running on OpenStack virtual machines. Because pods are connected directly to Neutron networks, there is no second overlay inside the VMs, which improves network performance.

Kuryr is recommended for OpenShift deployments on encapsulated OpenStack tenant networks, i.e., whenever VXLAN, GRE, or GENEVE is required. In those deployments it avoids double encapsulation: running an encapsulated OpenShift SDN over an already encapsulated OpenStack network. Conversely, implementing Kuryr does not make sense when provider networks, tenant VLANs, or a third-party commercial SDN such as Cisco ACI or Juniper Contrail are in use.
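To make the cost of double encapsulation concrete, here is a minimal sketch of the header overhead alone. It assumes VXLAN over IPv4 at both layers and a 1500-byte underlay MTU; the MTU value and the function name `inner_mtu` are illustrative assumptions, not settings taken from our test environment. It does not model the CPU cost of encapsulating twice, which is typically the larger factor.

```python
# Bytes added by one VXLAN encapsulation over IPv4 (standard header sizes).
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14
VXLAN_OVERHEAD = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET


def inner_mtu(underlay_mtu: int, encapsulation_layers: int) -> int:
    """Return the MTU left for workloads after N nested VXLAN layers."""
    return underlay_mtu - encapsulation_layers * VXLAN_OVERHEAD


if __name__ == "__main__":
    underlay = 1500  # assumed underlay MTU, for illustration only
    # One layer: pods attached directly to an encapsulated Neutron network.
    print("single encapsulation:", inner_mtu(underlay, 1))
    # Two layers: an encapsulated pod overlay on top of the Neutron overlay.
    print("double encapsulation:", inner_mtu(underlay, 2))
```

Under these assumptions, each extra layer removes another 50 bytes of usable payload per packet, on top of the additional processing every packet incurs.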

We tested Kuryr to check how it increases the networking performance when running OpenShift on OpenStack, when compared to using OpenShift SDN. In this article we cover aspects of the performance improvements by showing test results when using Kuryr as the SDN to run OpenShift on OpenStack.

OpenShift on OpenStack Architecture

Architecture Overview

There are many supported ways to configure OpenShift and OpenStack to work together. Consult the OpenStack Platform 13 Director Installation and Usage Guide and the OpenShift Container Platform 3.11 product documentation for detailed installation instructions. For this article we deployed an OpenShift on OpenStack cluster in a standard way and focused our efforts on testing the performance.

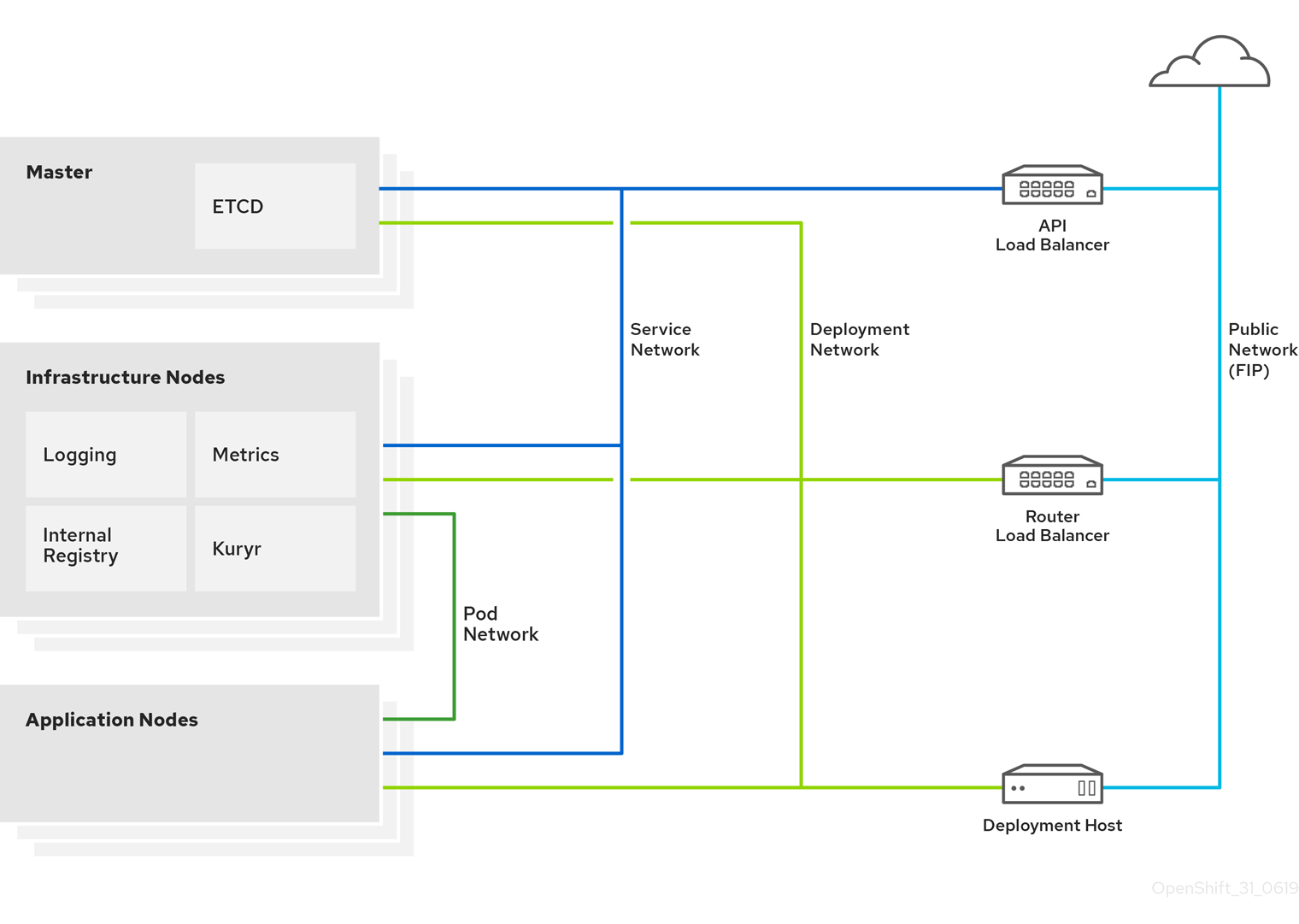

Figure 1. OpenShift on OpenStack Architecture Overview.

Networking Diagram

The following diagram depicts the deployed reference architecture for OpenShift on OpenStack, showing the relationships between components, roles, and networks.

Figure 2. OpenShift on OpenStack Networking Diagram.

A reference architecture for Red Hat OpenShift Container Platform 3.11 on Red Hat OpenStack Platform 13 has been released, which details the installation process and a prescriptive configuration, capturing recommendations from Red Hat field consultants.

Kuryr Architecture

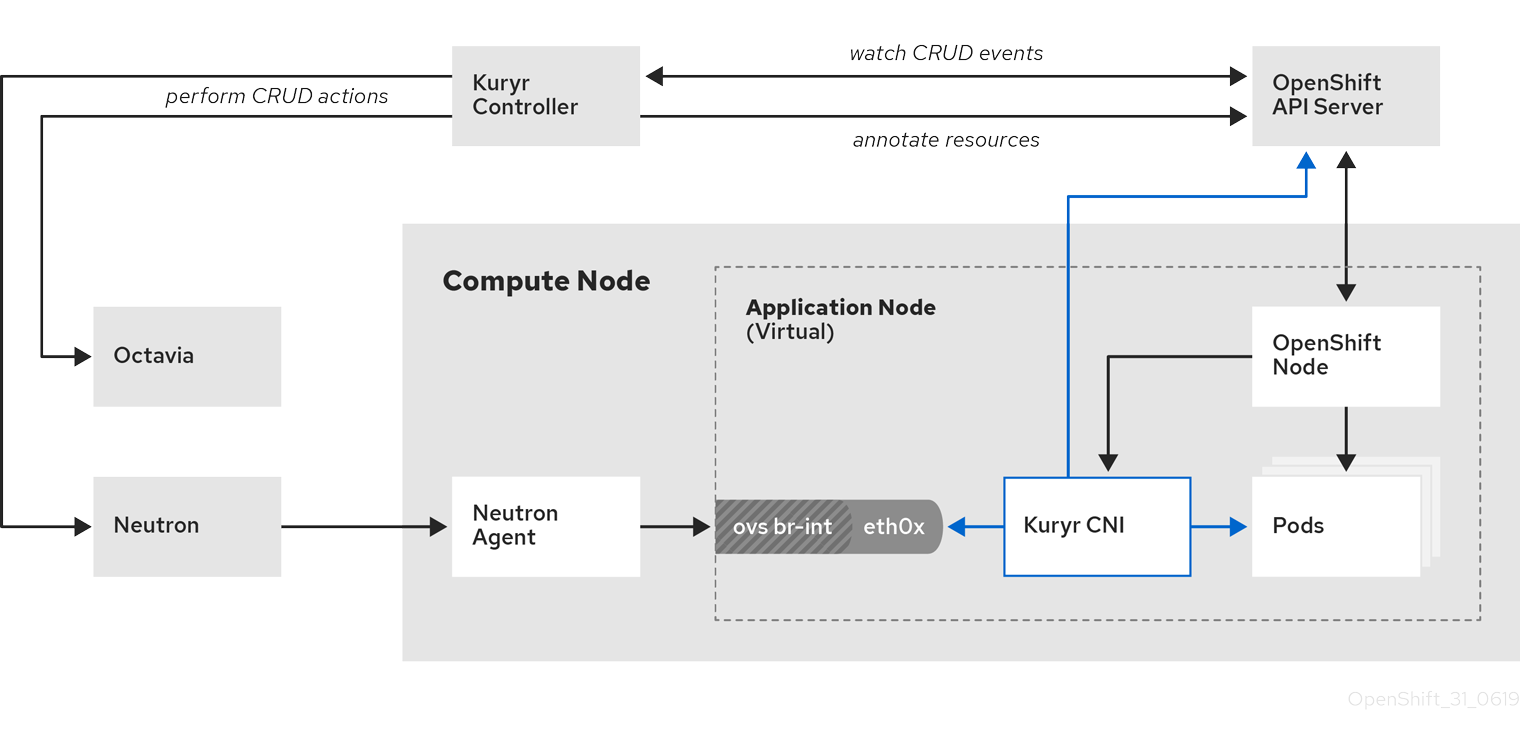

Kuryr components are deployed as pods in the kuryr namespace. The kuryr-controller is a single-container service pod that runs on an infrastructure node and is managed as an OpenShift deployment. The kuryr-cni container, managed as a daemonset, installs and configures the Kuryr CNI driver on each of the OpenShift masters, infrastructure nodes, and compute nodes.

The Kuryr controller watches the OpenShift API server for pod, service and namespace create, update and delete events. It maps the OpenShift API calls to corresponding objects in Neutron and Octavia. This means that every network solution that implements the Neutron trunk port functionality can be used to back OpenShift via Kuryr. This includes open source solutions such as OVS and OVN as well as Neutron-compatible commercial SDNs. That is also the reason the OpenvSwitch firewall driver is a prerequisite instead of the ovs-hybrid firewall driver when using OVS.
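The watch-and-map behavior described above can be sketched as a small dispatch table. This is an illustration only and not Kuryr's actual code: the `Event` class, `RESOURCE_MAP`, and `plan_action` are hypothetical names, and the specific mapping (pod to Neutron port, service to Octavia load balancer, namespace to Neutron network) is an assumption that depends on which Kuryr drivers are configured. The event types ADDED, MODIFIED, and DELETED are the ones the Kubernetes watch API reports.

```python
from dataclasses import dataclass

# Assumed mapping from OpenShift resource kind to (OpenStack service, object).
RESOURCE_MAP = {
    "Pod": ("neutron", "port"),
    "Service": ("octavia", "loadbalancer"),
    "Namespace": ("neutron", "network"),
}

# Kubernetes watch event types and the action they would trigger.
ACTIONS = {"ADDED": "create", "MODIFIED": "update", "DELETED": "delete"}


@dataclass(frozen=True)
class Event:
    """A simplified watch event (hypothetical structure)."""
    type: str
    kind: str
    name: str


def plan_action(event: Event) -> str:
    """Describe the OpenStack-side action a controller would take."""
    backend, resource = RESOURCE_MAP[event.kind]
    action = ACTIONS[event.type]
    return f"{backend}: {action} {resource} for {event.kind.lower()} '{event.name}'"


if __name__ == "__main__":
    for ev in (
        Event("ADDED", "Pod", "web-1"),
        Event("ADDED", "Service", "web"),
        Event("DELETED", "Namespace", "demo"),
    ):
        print(plan_action(ev))
```

A real controller would call the Neutron and Octavia APIs instead of returning a string; the sketch only shows the shape of the translation.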

Figure 3. Kuryr Architecture.

See the Kuryr Kubernetes Integration Design, written by the Kuryr developers, for additional details.

Performance benchmarking Kuryr vs OpenShift SDN

While Kuryr offers network performance gains by the very nature of its architecture, one needs testing and data to characterize the network performance and confirm the hypothesis. This is exactly where performance engineering comes into the picture. Over the past several weeks, we’ve deployed an OpenShift on OpenStack cluster using Red Hat OpenStack Director and openshift-ansible. We’ve run extensive performance tests using both OpenShift SDN and Kuryr across a range of OpenStack Neutron backends to compare and contrast the network performance.

Hardware Setup

OpenStack was deployed with 1 undercloud node, 3 controllers and 11 compute nodes. On top of that, a bastion instance, a DNS instance, 3 OCP masters, 4 OCP workers and 1 infra node were deployed using openshift-ansible. A total of 14 servers with Intel Skylake processors (32 cores, 64 threads) with 376G of memory were used to build this test environment. OpenShift masters had 16 VCPUs and 32G memory while the worker nodes were given 8 VCPUs and 16G of memory.

Software Setup

Red Hat OpenStack Platform version 13 provided the foundation on which OpenShift Container Platform 3.11 was deployed. A VXLAN overlay was used at the OpenStack level, with the tenant network on a 25G NIC. For the Neutron backend, ML2/OVS and ML2/OVN were both tested. For ML2/OVS, OpenStack Neutron had the OpenvSwitch firewall driver configured and the trunk extension enabled. OpenShift was deployed with and without Kuryr to compare the performance offered by Kuryr deployments with those using OpenShift SDN.

Results

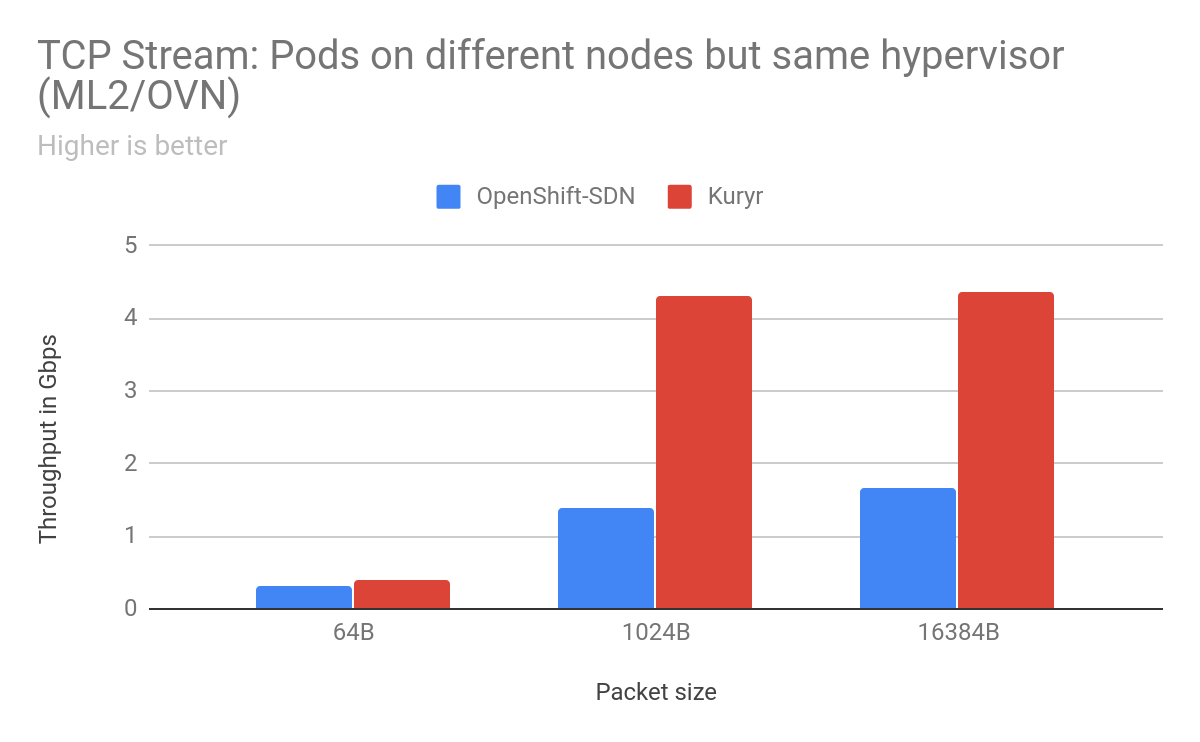

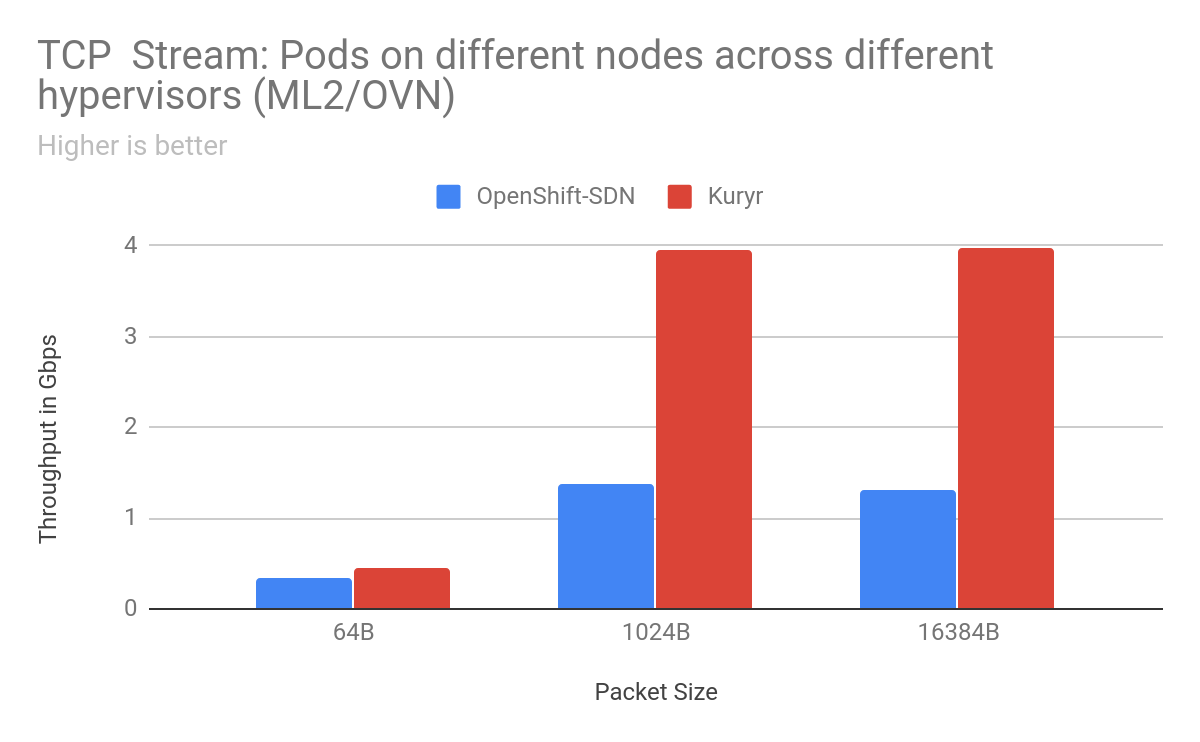

An image with the network performance testing tool uperf installed was used to spin up pods and run tests for TCP and UDP traffic profiles, for both pod-to-pod and pod-to-service traffic. The scenarios covered sender and receiver pods on the same worker node, on different worker nodes on the same OpenStack hypervisor, and on different worker nodes spread across OpenStack hypervisors. For each traffic profile, throughput and latency were measured for packet sizes ranging from 64B to 16K, giving us a test matrix that covers a broad range of real-world use cases.
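The shape of such a test matrix can be sketched as a cross product of the dimensions described above. This is an illustration, not the harness we used: the variable names are hypothetical, only the packet sizes explicitly mentioned in this article (64B, 1024B, 16K) are listed rather than the full range, and a full cross product may include combinations that were not actually run.

```python
from itertools import product

# Dimensions named in the article; packet sizes are an illustrative subset.
TRAFFIC_PATHS = ["pod-to-pod", "pod-to-service"]
PROTOCOLS = ["TCP", "UDP"]
TEST_TYPES = ["stream", "request-response"]
PLACEMENTS = [
    "same worker node",
    "different worker nodes, same hypervisor",
    "different worker nodes, separate hypervisors",
]
PACKET_SIZES_BYTES = [64, 1024, 16384]

# Every combination of the dimensions above is one test case.
test_matrix = list(
    product(TRAFFIC_PATHS, PROTOCOLS, TEST_TYPES, PLACEMENTS, PACKET_SIZES_BYTES)
)

if __name__ == "__main__":
    print(f"{len(test_matrix)} test cases")
    for path, proto, test_type, placement, size in test_matrix[:3]:
        print(f"{path} | {proto} {test_type} | {placement} | {size}B")
```

Each case would then be run once per SDN (OpenShift SDN and Kuryr) and per Neutron backend so that results can be compared like for like.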

In the pod-to-pod tests, the traffic flows from a client pod to a server pod, whereas in the pod-to-service tests, the traffic flows from client pods to a service that is backed by the server pods. The stream tests are designed such that a client connects to a server using TCP and then sends data as fast as possible. This gives a good measure of how much bandwidth can be used between the client and server. The Request-Response tests, on the other hand, are used to measure latency, as they stress the connection establishment and teardown aspects.

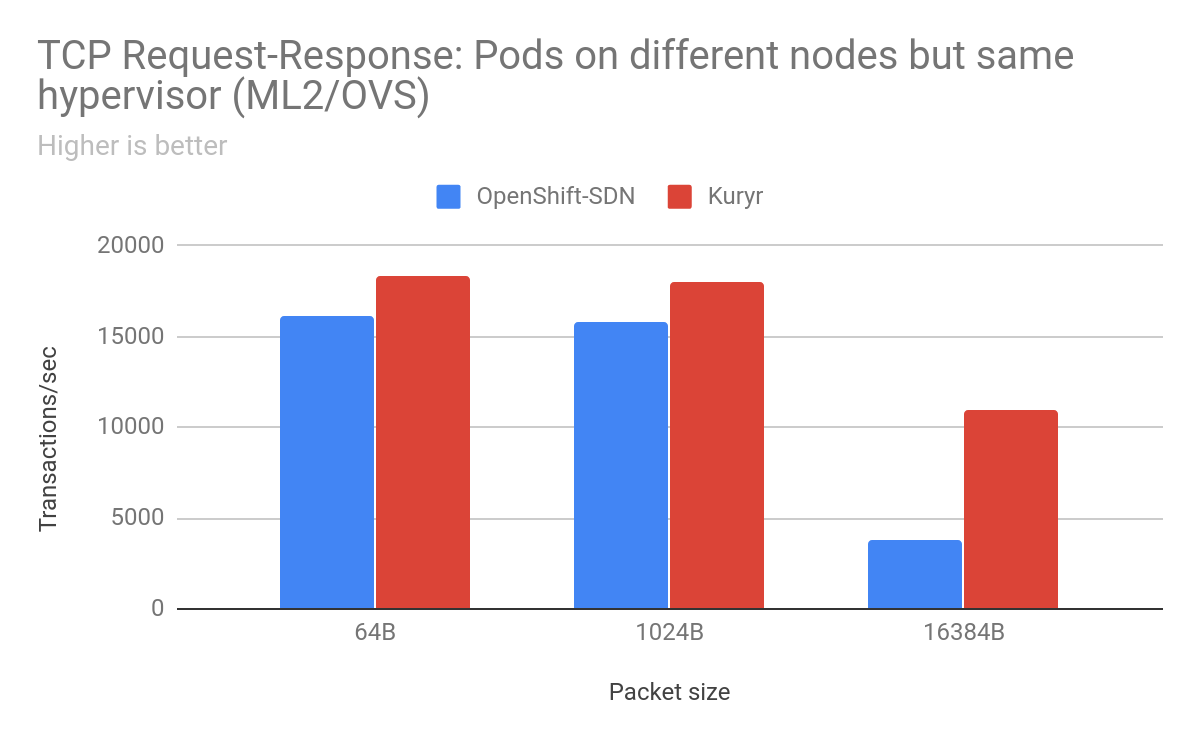

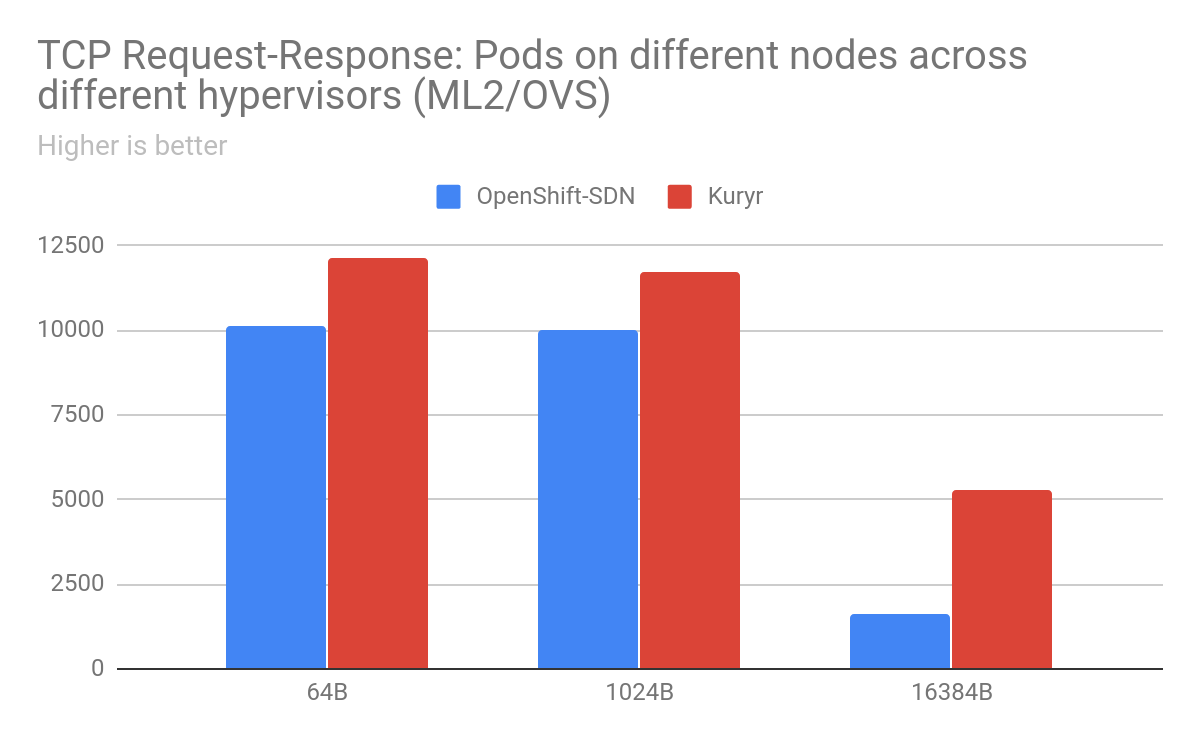

For pod-to-pod traffic, with ML2/OVS as the backend for OpenStack, the scenarios that saw the most improvement for TCP traffic were those in which the sender and receiver pods were on separate worker nodes. We saw anywhere from 2% to 900% improvement in throughput with Kuryr, depending on packet size and arrangement of worker nodes. With 16KB packets between pods on worker nodes placed on separate OpenStack hypervisors, there was a gain of 904% with Kuryr. Latency is also much lower with Kuryr when pods are on different worker nodes. Improvements in TCP latency range from 13% for 64B packets between pods across worker nodes on the same hypervisor to 220% between pods on different worker nodes across separate hypervisors. However, when pods are on the same worker node, latency with Kuryr is higher than with OpenShift SDN because, with OpenShift SDN, traffic does not need to leave the worker node.

For UDP traffic, although the gains are not as high as seen with TCP, we see gains in the range of the low teens up to 30% across a variety of packet sizes and scenarios.

However, during performance testing we identified that Kuryr performed worse than OpenShift SDN for UDP when traffic has to go across OpenStack hypervisors. This is being investigated by the Neutron and Kuryr engineering teams. It should also be noted that latency suffers with Kuryr when compared to OpenShift SDN when pods are on the same worker node, as traffic never leaves the worker node in the case of OpenShift SDN.

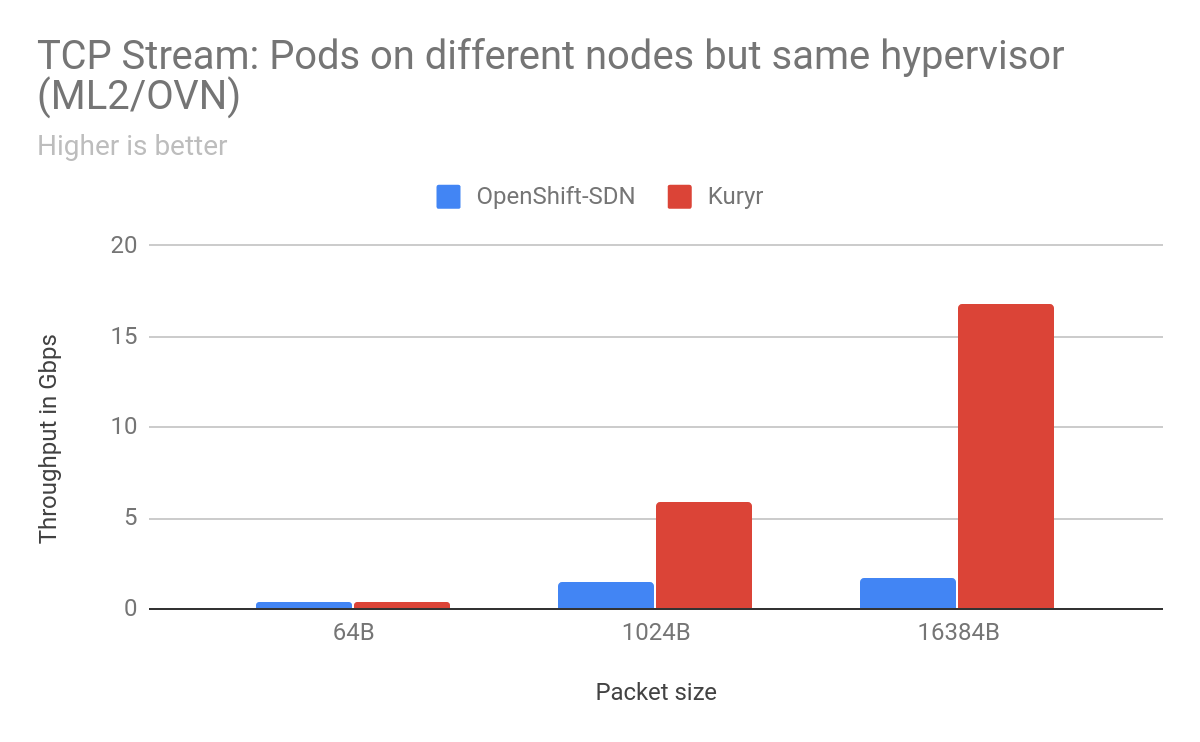

With ML2/OVN as the Neutron backend, we saw similar improvements with Kuryr in the case of TCP stream traffic between pods on different worker nodes, both on the same hypervisor and on separate hypervisors. The TCP Request-Response results with ML2/OVN also show a trend similar to Kuryr on ML2/OVS, exhibiting lower latency. However, UDP performance is still lower with Kuryr than with OpenShift SDN, which is being investigated as noted earlier.

Next, we move on to pod-to-service benchmarking, which is important in the context of how applications communicate with each other. Here too, Kuryr outperforms OpenShift SDN in the most common scenarios for TCP stream. However, TCP latency is higher with Kuryr than with OpenShift SDN due to the extra network hop needed to go through the Octavia LoadBalancer VM.

Conclusions

Overall, Kuryr provides a significant boost in pod-to-pod network performance. As an example, we went from 0.5 Gbps to 5 Gbps pod-to-pod on a 25 Gigabit link for the common case of 1024B TCP packets when worker nodes were spread across separate OpenStack hypervisors. With Kuryr, we are able to achieve higher throughput, satisfying application needs for better bandwidth while at the same time achieving better utilization of our high-bandwidth NICs.
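As a worked example of how the improvement percentages in this article can be read, the following sketch computes the relative gain and the link utilization for the 1024B TCP case quoted above. The function names are hypothetical, and the formula (gain relative to the OpenShift SDN baseline) is our assumption about how such percentages are usually derived.

```python
def improvement_percent(baseline_gbps: float, new_gbps: float) -> float:
    """Relative throughput gain over the baseline, in percent."""
    return 100 * (new_gbps - baseline_gbps) / baseline_gbps


def link_utilization_percent(throughput_gbps: float, link_gbps: float) -> float:
    """Share of the link capacity that the measured throughput uses."""
    return 100 * throughput_gbps / link_gbps


if __name__ == "__main__":
    sdn_gbps, kuryr_gbps, link_gbps = 0.5, 5.0, 25.0  # figures quoted above
    gain = improvement_percent(sdn_gbps, kuryr_gbps)
    print(f"throughput gain with Kuryr: {gain:.0f}%")
    for label, value in (("OpenShift SDN", sdn_gbps), ("Kuryr", kuryr_gbps)):
        used = link_utilization_percent(value, link_gbps)
        print(f"{label}: {used:.0f}% of the 25 Gigabit link")
```

Read this way, a tenfold increase in throughput corresponds to a 900% improvement, which is in line with the upper end of the TCP gains reported in the results.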

About the authors

Sai is a Senior Engineering Manager at Red Hat, leading global teams focused on the performance and scalability of Kubernetes and OpenShift. With over a decade of deep expertise in infrastructure benchmarking, cluster tuning, and real-world workload optimization, Sai has driven critical initiatives to ensure OpenShift scales seamlessly across diverse deployment footprints, including bare metal, cloud, and edge environments.

Sai and his team are committed to making Kubernetes the premier platform for running mission-critical workloads across industries. A strong advocate for open source, he has played a pivotal role in bringing performance and scale testing tools like kube-burner into the CNCF ecosystem. He holds 8 U.S. patents in systems and performance engineering and has published at leading conferences focused on systems performance and cloud infrastructure.

Sai is passionate about mentoring engineers, fostering open collaboration, and advancing the state of performance engineering within the cloud-native community.
