A Virtual Private Cloud (VPC) is a private cloud environment hosted within a shared public cloud infrastructure. It provides an isolated set of compute, storage, and network resources, enabling multiple organizations or groups to host services on a shared public cloud without compromising privacy or security.

The primary responsibility of the cloud service provider is to ensure robust security, privacy, reliability, and resiliency for the tenants. Additionally, the VPC allows tenants to dynamically scale resources as needed, offering flexibility and cost-efficiency without requiring upfront infrastructure investment or ownership.

Key components of a VPC

There are three considerations when providing isolation and privacy in a VPC:

- Administrative control of the allocated infrastructure resources

- Control plane isolation: No shared routing or forwarding tables

- Data privacy: only the tenant that owns the VPC can see or access its data

Each VPC provides isolation for its tenant within an infrastructure cluster or site, and a tenant may have multiple VPC instances across multiple sites and clusters.

VPC network connectivity

VPC connectivity can be divided into three categories:

- Connectivity within the VPC

- Inter-VPC connectivity within the same cluster

- Intra-VPC and inter-VPC connectivity across clusters or sites

Connectivity within the VPC

If you treat an OpenStack project as the equivalent of a VPC, a project can contain multiple tenant networks (private networks) and subnets for deploying VM workloads. Workloads on the same tenant network typically communicate at Layer 2, while workloads on different tenant networks are connected through Neutron routers. Tenant networks in OpenStack are akin to private networks, while provider networks are akin to public networks.

Workloads deployed on tenant networks can be assigned floating IP addresses to make them reachable from external networks. VM workloads on provider networks have external network reachability by default.
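
The reachability rules above can be summarized in a small model. This is a minimal sketch that does not use any OpenStack API: the `Network`, `Router`, and `VM` classes, the `path` and `externally_reachable` helpers, and all names and addresses are hypothetical, and it ignores security groups, subnets, and routing details.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Network:
    name: str
    provider: bool = False  # provider networks: externally reachable by default


@dataclass
class Router:
    name: str
    attached: set = field(default_factory=set)  # networks with an interface on this router


@dataclass
class VM:
    name: str
    network: str
    floating_ip: Optional[str] = None


def path(a: VM, b: VM, routers: list) -> Optional[str]:
    """Describe how two VMs in the same project reach each other, or return None."""
    if a.network == b.network:
        return "L2"  # same tenant network: switched at Layer 2
    for r in routers:
        if {a.network, b.network} <= r.attached:
            return f"L3 via {r.name}"  # routed by a router attached to both networks
    return None  # no router joins the two networks


def externally_reachable(vm: VM, networks: dict) -> bool:
    # Provider networks are reachable by default; tenant networks need a floating IP.
    return networks[vm.network].provider or vm.floating_ip is not None


# Usage with hypothetical names and documentation-range addresses.
nets = {n.name: n for n in (Network("web"), Network("db"), Network("ext", provider=True))}
r1 = Router("r1", {"web", "db"})
web = VM("web-1", "web", floating_ip="203.0.113.10")
db = VM("db-1", "db")
print(path(web, db, [r1]))             # L3 via r1
print(externally_reachable(db, nets))  # False
```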

Inter-VPC connectivity

An organization often requires either a single VPC with multiple VPC instances or multiple VPCs within a shared cloud infrastructure. An organization may need multiple VPCs in the same shared cloud for microsegmentation, to maintain isolation between groups or entities within the organization (such as Marketing, Finance, and Engineering).

In the context of Red Hat OpenStack Services on OpenShift, each project can be considered a logical construct representing a unique VPC. A one-to-one mapping between virtual routing and forwarding (VRF) instances and VPCs provides isolation and control over which VPCs can talk to each other, so you can apply policy to the network connectivity between VPCs. Essentially, VRFs provide the necessary control plane and data plane isolation between VPCs.

For example, network resources (tenant networks or provider networks) in Project 1/VPC1 belong to VRF1, and network resources in Project 2/VPC2 belong to VRF2. With Border Gateway Protocol (BGP) and Ethernet VPN (EVPN), the route distinguisher (RD) keeps each VRF's routes unique, and the import and export of route targets (RTs) determines whether workloads in VPC1 and VPC2 can talk to one another.
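
The import/export logic that decides whether two VPCs can communicate can be sketched as follows. This is a simplified model, not a BGP implementation: the `Vrf` class, the `imports_from` and `can_talk` helpers, and the RD and RT values are hypothetical, and real deployments also involve route policies and best-path selection.

```python
from dataclasses import dataclass, field


@dataclass
class Vrf:
    name: str
    rd: str  # route distinguisher: makes this VRF's prefixes unique in BGP, no policy role
    export_rts: set = field(default_factory=set)  # RTs attached to routes this VRF advertises
    import_rts: set = field(default_factory=set)  # RTs whose routes this VRF installs


def imports_from(receiver: Vrf, sender: Vrf) -> bool:
    # receiver installs sender's routes if any exported RT is on receiver's import list
    return bool(sender.export_rts & receiver.import_rts)


def can_talk(a: Vrf, b: Vrf) -> bool:
    # Two-way traffic needs routes in both directions.
    return imports_from(a, b) and imports_from(b, a)


# Hypothetical RD and RT values.
vrf1 = Vrf("VRF1", "192.0.2.1:1", {"65000:1"}, {"65000:1"})
vrf2 = Vrf("VRF2", "192.0.2.1:2", {"65000:2"}, {"65000:2"})
print(can_talk(vrf1, vrf2))  # False: each VPC imports only its own RT

# Policy change: let VPC1 and VPC2 reach each other by cross-importing RTs.
vrf1.import_rts.add("65000:2")
vrf2.import_rts.add("65000:1")
print(can_talk(vrf1, vrf2))  # True
```

Keeping import and export separate also allows one-way designs, such as a shared-services VRF whose routes are imported by several VPCs that never import each other.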

Intra-VPC connectivity (different cluster or site)

Intra-VPC communication across sites or clusters relies on VPN overlays between the sites or clusters. The VPN can be based on multiprotocol label switching (MPLS), or on BGP-EVPN with a virtual extensible local area network (VxLAN) data plane, as long as data plane and control plane separation for VPC traffic is maintained across the wide area network (WAN).

For example, VPC1/Project 1 workloads in Region 1 talk to VPC1/Project 1 workloads in Region 2 over a VPN tunnel between the regions. Within each cluster, control plane and data plane separation is maintained using unique VRFs with a BGP-EVPN+VxLAN overlay, while the data center gateway (DC-GW) may use a different VPN overlay on the WAN. In this case, VPC1 has an instance in each region.

Communication between sites or clusters depends on the DC-GW.
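
One way to reason about the DC-GW's role is as a mapping from each site's VPC to a WAN VPN instance. The sketch below checks that such a mapping preserves separation. It assumes, for simplicity, exactly one WAN VPN per VPC; the `check_stitching` helper, site names, and VPN identifiers are hypothetical and do not correspond to any gateway's configuration syntax.

```python
from collections import defaultdict


def check_stitching(gw_map: dict) -> list:
    """gw_map maps (site, vpc) -> WAN VPN instance, as configured on each DC-GW.

    Assumes one WAN VPN per VPC. Returns a list of problems; an empty list means
    VPCs stay separated on the WAN and each VPC is joined across its sites.
    """
    vpn_to_vpcs = defaultdict(set)
    vpc_to_vpns = defaultdict(set)
    for (_site, vpc), vpn in gw_map.items():
        vpn_to_vpcs[vpn].add(vpc)
        vpc_to_vpns[vpc].add(vpn)

    problems = []
    for vpn, vpcs in vpn_to_vpcs.items():
        if len(vpcs) > 1:  # two VPCs share one WAN VPN: isolation is lost
            problems.append(f"{vpn} mixes traffic from {sorted(vpcs)}")
    for vpc, vpns in vpc_to_vpns.items():
        if len(vpns) > 1:  # one VPC's sites land in different WAN VPNs
            problems.append(f"{vpc} is split across {sorted(vpns)}")
    return problems


good = {
    ("Region 1", "VPC1"): "wan-vpn-a",
    ("Region 2", "VPC1"): "wan-vpn-a",
    ("Region 1", "VPC2"): "wan-vpn-b",
}
print(check_stitching(good))  # []

bad = {**good, ("Region 2", "VPC2"): "wan-vpn-a"}
for problem in check_stitching(bad):
    print(problem)
```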

Inter-VPC connectivity (different cluster or site)

Inter-VPC connectivity is communication between VM workloads that belong to different VPCs, whereas intra-VPC connectivity is communication between VMs that belong to the same VPC.

For example, VPC1/Project 1 workloads in Region 1 talk to VPC2/Project 2 workloads in Region 2 over the VPN tunnel between the regions. Within each cluster, control plane and data plane separation is maintained using unique VRFs with a BGP-EVPN+VxLAN overlay, whereas the DC-GW may use a different VPN overlay on the WAN.
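
The two axes used in these sections (same or different VPC, same or different site) can be captured in a short classifier. This is an illustrative sketch only; the `Workload` type, the `classify` function, and the example names are hypothetical.

```python
from typing import NamedTuple


class Workload(NamedTuple):
    name: str
    vpc: str   # VPC / OpenStack project, for example "VPC1"
    site: str  # region, cluster, or site


def classify(src: Workload, dst: Workload) -> str:
    scope = "intra-VPC" if src.vpc == dst.vpc else "inter-VPC"
    if src.site == dst.site:
        return f"{scope}, same site"
    # Cross-site traffic leaves the cluster through the DC-GW and the WAN VPN.
    return f"{scope}, across sites via DC-GW"


app = Workload("app-1", "VPC1", "Region 1")
db = Workload("db-1", "VPC2", "Region 2")
print(classify(app, db))  # inter-VPC, across sites via DC-GW
```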

Security groups and firewall-as-a-service

Security groups and firewall-as-a-service (FWaaS) are an important part of VPC functionality: they define security policies and allow only authorized access to the infrastructure resources and applications associated with the VPC. Security groups let you define rules at the virtual machine (VM) instance and VM port level. While this is sufficient for securing individual VMs, it does not scale well: every VM workload needs its own robust and comprehensive set of security group rules, and rules applied independently to each instance can become inconsistent or insufficient for maintaining an effective security posture.

This creates the need for a layered approach: use FWaaS to apply firewall rules at a higher level, to groups of resources and applications, and use security groups to apply specific rules at the instance level.

FWaaS policies can be shared at the project level; that is, a policy can be applied to any router port that belongs to the project, which gives the firewall rules a wider scope. This helps apply common rules consistently within the project and reduces the likelihood of human error.

FWaaS also lets you use third-party next-generation firewall (NGFW) plugins for capabilities beyond access control lists (ACLs), such as deep packet inspection (DPI), intrusion prevention (IPS), and intrusion detection and prevention (IDP), enabling a stronger security posture for a virtualized infrastructure environment.
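
The layered model of a shared project policy plus instance-specific security groups can be sketched as two checks in sequence. This is a simplified model, not the Neutron FWaaS or security group API: the rule classes and helper functions are hypothetical, the default deny at the end of the firewall policy is an assumption, and connection tracking (both mechanisms are stateful in practice) is not modeled.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass
class Packet:
    src: str
    dst_port: int
    protocol: str


@dataclass
class FwRule:
    """Project-level firewall rule; rules are evaluated in order, first match wins."""
    action: str                     # "allow" or "deny"
    protocol: Optional[str] = None  # None matches any protocol
    src_cidr: str = "0.0.0.0/0"
    dst_port: Optional[int] = None  # None matches any port

    def matches(self, p: Packet) -> bool:
        return ((self.protocol is None or self.protocol == p.protocol)
                and ip_address(p.src) in ip_network(self.src_cidr)
                and (self.dst_port is None or self.dst_port == p.dst_port))


@dataclass
class SgRule:
    """Instance-level security group rule; allow-only, so no match means drop."""
    protocol: str
    port: int
    remote_cidr: str = "0.0.0.0/0"


def fwaas_allows(policy: List[FwRule], p: Packet) -> bool:
    for rule in policy:
        if rule.matches(p):
            return rule.action == "allow"
    return False  # assumed default deny when no rule matches


def sg_allows(rules: List[SgRule], p: Packet) -> bool:
    return any(r.protocol == p.protocol and r.port == p.dst_port
               and ip_address(p.src) in ip_network(r.remote_cidr)
               for r in rules)


def admitted(policy: List[FwRule], sg: List[SgRule], p: Packet) -> bool:
    # Inbound via a router port: the shared project policy is checked first,
    # then the VM's own security group at its port.
    return fwaas_allows(policy, p) and sg_allows(sg, p)


# Hypothetical project-wide policy and per-VM security group.
project_policy = [
    FwRule("deny", "tcp", dst_port=23),             # no Telnet anywhere in the project
    FwRule("allow", "tcp", src_cidr="10.0.0.0/8"),  # internal TCP only
]
web_sg = [SgRule("tcp", 443)]
print(admitted(project_policy, web_sg, Packet("10.1.2.3", 443, "tcp")))  # True
print(admitted(project_policy, web_sg, Packet("10.1.2.3", 22, "tcp")))   # False: no SG rule
```

The project policy enforces the common rules once for every VM behind the router, while each security group only needs the rules specific to its workload.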

DNS as a service

One of the key aspects of the VPC construct is localization of services for the workloads deployed in the VPC. When cloud service access is local to the VPC, the deployment design must ensure that the data path to those cloud services does not go through an external network. Local resolvers, along with global server load balancing (GSLB) services that direct clients to local cloud service instances, can be used to increase efficiency and optimize network bandwidth. Distributed DNS-as-a-service (DNSaaS), with DNS resolvers local to the VPC, plays an important role in such designs. DNS resolvers that are aware of availability zones (AZs) are critical to keeping DNS queries, and the resolution to service endpoints, local.
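
The AZ-aware selection step can be sketched as a filter over a service catalog. This is only the answer-selection logic, not a DNS server; the `CATALOG` contents, service names, zones, and the `resolve` helper are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical catalog: service name -> [(endpoint IP, availability zone), ...]
CATALOG: Dict[str, List[Tuple[str, str]]] = {
    "objects.internal": [("10.0.1.10", "az1"), ("10.0.2.10", "az2")],
    "db.internal": [("10.0.2.20", "az2")],
}


def resolve(name: str, client_az: str) -> List[str]:
    """Return endpoint addresses, keeping answers in the client's AZ when possible."""
    endpoints = CATALOG.get(name, [])
    local = [ip for ip, az in endpoints if az == client_az]
    # Fall back to endpoints in other AZs only when no local endpoint exists.
    return local or [ip for ip, _ in endpoints]


print(resolve("objects.internal", "az1"))  # ['10.0.1.10']
print(resolve("db.internal", "az1"))       # ['10.0.2.20']
```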

Mapping VPC to Red Hat OpenStack Services on OpenShift constructs

Mapping a VPC feature to Red Hat OpenStack Services on OpenShift is often a matter of conceptualizing the functionality you're trying to achieve and understanding which component provides it. The following table lists some common functions and how each is provided in Red Hat OpenStack Services on OpenShift and in a VPC:

| Functionality | Red Hat OpenStack Services on OpenShift | VPC |
|---|---|---|
| Private IPv4 addresses, floating IPs (FIPs) for public IPv4 with NAT, public IPv6 addresses | Tenant network | VPC subnets |
| Connectivity across tenant networks / subnets | vRouter (Neutron router) | Route tables |
| NAT, external network connectivity | OVN gateway | Internet gateway, transit gateway |
| Site-to-site VPN | BGP-EVPN | Transit gateway VPN |
| Cloud to on-premises | Multi-site BGP | Transit gateway, Direct Connect, transit gateway peering |
| VPC / tenant peering across sites | DCN, multi-site BGP | VPC peering (backbone, encrypted) |
| IPsec support | DC-GW | Transit gateway |
| Third-party service support | Neutron ML2 plugin | PrivateLink |
| SD-WAN | ML2 plugin, DC-GW | Transit gateway |
| Load balancing, ECMP across gateways | OVN gateway (multiple Networker nodes) | Transit gateway |

VPC connectivity to external networks and multi-cloud scenarios

The above topology shows the data path for VM workload connectivity in a multi-tenant environment where each tenant belongs to a separate VRF (virtual routing and forwarding table). Between compute nodes, the overlay uses VxLAN in the data plane, with BGP-EVPN as the control plane that establishes the appropriate VxLAN tunnels. VM workloads deployed on provider networks send traffic directly to the leaf (top-of-rack, ToR) switches over the VxLAN overlay. VM workloads deployed on tenant networks, however, first send traffic through a Geneve tunnel to the OVN GW; from there, the data path to the external DC uses a VxLAN overlay, established with BGP-EVPN, between the two OVN GW devices.
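
In the VxLAN data plane, tenant separation is carried by the 24-bit VXLAN network identifier (VNI) in each packet's header. The sketch below builds and parses the 8-byte VXLAN header defined in RFC 7348 and maps the VNI back to a VRF. The VRF-to-VNI assignments and helper names are hypothetical, and the Geneve leg and the BGP-EVPN control plane are not modeled.

```python
import struct

# Hypothetical VRF-to-L3-VNI assignments; real values come from the EVPN design.
L3_VNI = {"VRF1": 10001, "VRF2": 10002}
VRF_BY_VNI = {vni: vrf for vrf, vni in L3_VNI.items()}


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header (RFC 7348) with the I flag set."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Byte 0: flags (0x08 = VNI valid), bytes 1-3 reserved,
    # bytes 4-6: VNI, byte 7 reserved.
    return struct.pack("!B3xI", 0x08, vni << 8)


def vrf_of(header: bytes) -> str:
    """Recover the tenant VRF a receiving tunnel endpoint would use for this packet."""
    if len(header) != 8 or not header[0] & 0x08:
        raise ValueError("not a valid VXLAN header")
    vni = int.from_bytes(header[4:7], "big")
    return VRF_BY_VNI[vni]


print(vrf_of(vxlan_header(L3_VNI["VRF2"])))  # VRF2
```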

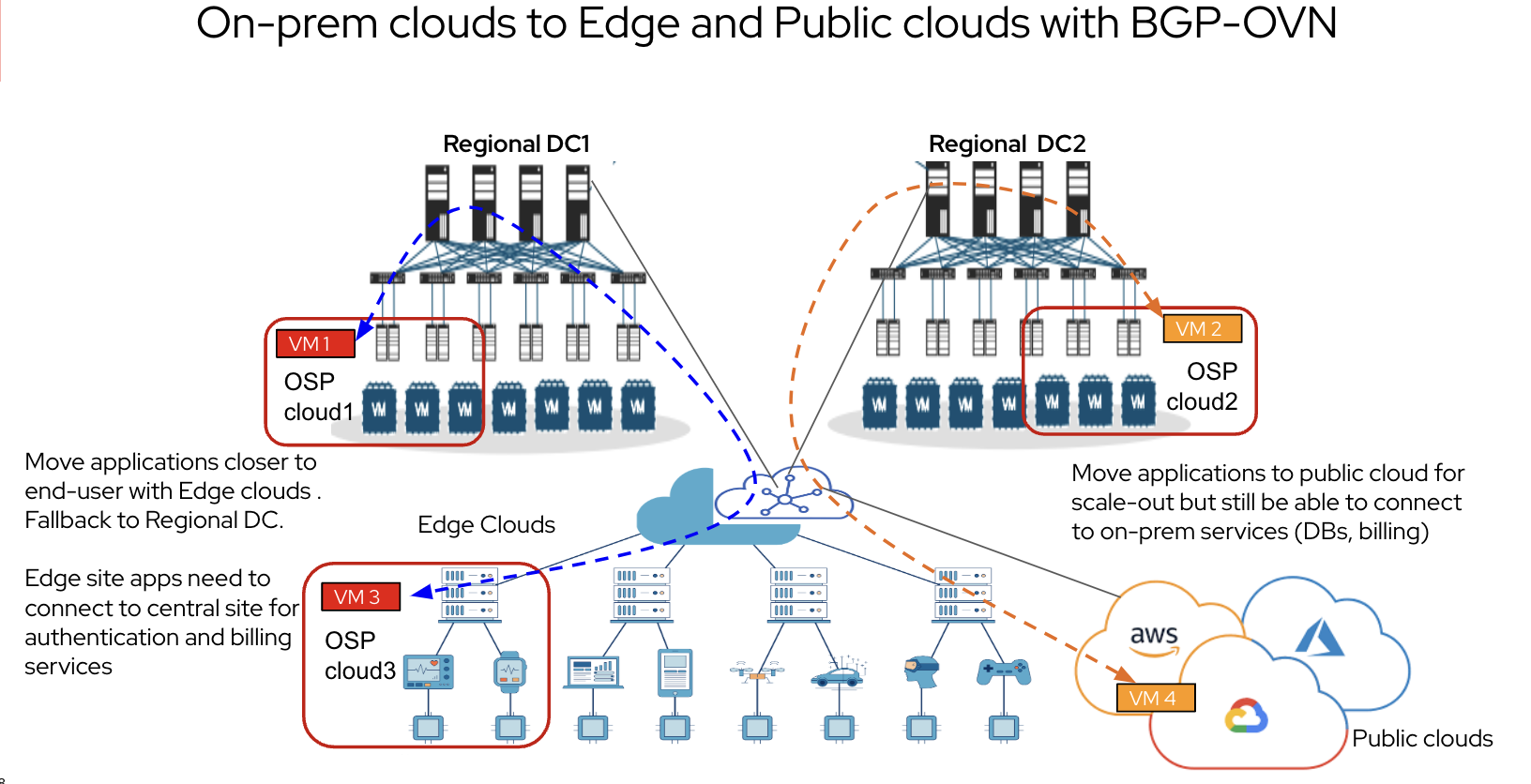

This diagram illustrates how deploying end-to-end (E2E) BGP between different clouds (private and public) enables multi-cloud connectivity. BGP is used for layer 3 (L3) forwarding within the OpenStack deployment as well, providing E2E connectivity based on L3.

Similarly, BGP or BGP-EVPN can be used for connectivity and forwarding from OpenStack compute nodes to a DC gateway. WAN connectivity can then be established between the DC-GWs, or the software-defined wide area network (SD-WAN) gateways, that are part of the DC fabric.
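
With end-to-end L3, each router forwards using the routes it has learned over BGP, choosing the most specific matching prefix. The sketch below shows that longest-prefix-match step. The routes, next-hop names, and the assumption that compute nodes advertise /32 host routes for VMs are illustrative only, and BGP best-path selection among routes for the same prefix is not modeled.

```python
from ipaddress import ip_address, ip_network

# Hypothetical routes learned over BGP: (prefix, next hop).
BGP_ROUTES = [
    ("0.0.0.0/0", "dc-gw"),         # default route toward the DC gateway and WAN
    ("10.10.0.0/16", "leaf-1"),     # aggregate for one rack
    ("10.10.3.7/32", "compute-3"),  # host route advertised for a single VM
]


def next_hop(dst: str) -> str:
    addr = ip_address(dst)
    candidates = [(ip_network(p), nh) for p, nh in BGP_ROUTES if addr in ip_network(p)]
    # Longest prefix match: the most specific covering route wins.
    _, nh = max(candidates, key=lambda c: c[0].prefixlen)
    return nh


print(next_hop("10.10.3.7"))  # compute-3
print(next_hop("192.0.2.1"))  # dc-gw
```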

Building a better cloud

A VPC provides an isolated set of compute, storage, and network resources that enables multiple organizations to host services on a shared public cloud without compromising privacy or security. Red Hat OpenStack Services on OpenShift helps you build a robust infrastructure for that purpose, and more features are in the works to help you get the most out of whatever cloud deployment you choose.


About the author

Experienced Product Manager renowned for driving innovation in network and cloud technologies. Expertise in strategic planning, competitive analysis, and product development. Skilled leader fostering customer success and shaping transformative strategies for progressive organizations committed to excellence.

Highly engaged in the pioneering stages of ETSI NFV standards and OPNFV initiatives, specializing in active monitoring and ensuring reliability in virtualized network deployments.
