In every release of our products, the User Experience Design team at Red Hat (UXD) prioritizes work that directly addresses customer feedback and insights. For a nascent product like RHEL AI, we have had to be creative about gathering that feedback. For this release, our feedback channels included upstream community engagement, direct feedback from users via Slack, interviews with internal team members, interviews with AI users and enthusiasts who may be interested in adopting RHEL AI, and analysis of our competitive landscape. These insights informed our design work for the RHEL AI 1.4 release and beyond.

We are excited to highlight a few major new features:

- Document conversion - Users can now upload PDFs, HTML, ASCII documents, and other file types, which are automatically converted to markdown.

- Model chat evaluation - Allows users to evaluate model responses.

Contribution Experience

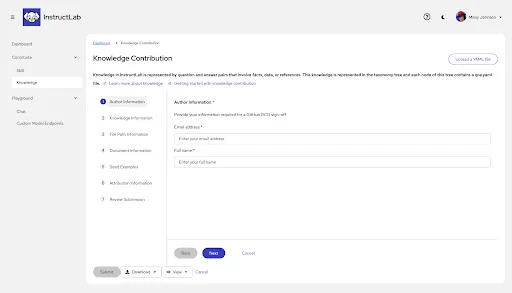

The Contribution Experience, now in Dev Preview, is a graphical user interface (GUI) that helps subject matter experts craft their knowledge submissions without needing to understand YAML. It lowers the technical expertise required to contribute knowledge and improve model responses. For more context and background, check out the blog post our Engineering colleagues wrote in December.

Let’s dive into a few specific examples of areas that we focused on improving for Dev Preview!

Formatting Knowledge

What we heard:

Editing YAML remains a significant pain point, and most users continue to report challenges with it. It is especially difficult for non-technical subject matter experts to navigate. Entering the data is a daunting task, and it is not always clear which fields are required and which are optional. Hand-editing also often leads to errors such as improper indentation and trailing whitespace.
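To make these failure modes concrete, here is a minimal Python sketch of the kind of check a contributor otherwise has to perform by eye: it flags trailing whitespace and tab characters used for indentation (YAML does not allow tabs in indentation). The function name `find_yaml_pitfalls` and the sample knowledge-file fragment are hypothetical illustrations, not part of RHEL AI or InstructLab tooling.

```python
def find_yaml_pitfalls(text: str) -> list[tuple[int, str]]:
    """Return (line_number, problem) pairs for common hand-editing mistakes.

    Hypothetical helper for illustration only; it does not parse YAML.
    """
    problems: list[tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        # Trailing spaces or tabs are invisible in most editors but fail strict linters.
        if line != line.rstrip(" \t"):
            problems.append((lineno, "trailing whitespace"))
        # Isolate the leading indentation and check it for tab characters,
        # which YAML does not permit for indentation.
        stripped = line.lstrip(" \t")
        indent = line[: len(line) - len(stripped)]
        if "\t" in indent:
            problems.append((lineno, "tab used for indentation"))
    return problems


# Illustrative fragment: line 2 ends with a space, line 4 is indented with a tab.
sample = (
    "version: 3\n"
    "domain: astronomy \n"
    "seed_examples:\n"
    "\t- context: The Sun is a star.\n"
)

for lineno, problem in find_yaml_pitfalls(sample):
    print(f"line {lineno}: {problem}")
```

Neither mistake is visible at a glance, which is part of why a guided form that never exposes raw YAML removes this whole class of errors.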

How we responded for 1.4:

We continue to iterate on the knowledge contribution UI, reducing points of friction without affecting model responses or performance. In this iteration, UXD recommended converting the long form into a wizard that guides users through the process step by step, so they can be confident that they are making progress at each stage.

In addition, when documents are uploaded, they will automatically be converted to markdown.

Add an Endpoint to Chat with a Model

To enable users to chat with their own custom models, we are continuing to improve the custom model endpoint experience.

To access custom models, users need to set up a dedicated "chat" endpoint within the platform. A chat endpoint accepts messages in a conversational format and returns responses that maintain context throughout the interaction. Setting one up involves specifying the desired model, the authentication details, and the API endpoint URL that chat requests are sent to.
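As a rough illustration of how those three pieces fit together, the sketch below builds (but does not send) a chat request. It assumes the model is served behind an OpenAI-compatible chat completions API, a common convention among model servers such as vLLM; the `/v1/chat/completions` path, the `ChatEndpoint` class, and `build_chat_request` are assumptions for illustration, not the RHEL AI implementation.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass
class ChatEndpoint:
    """Hypothetical record of what a user supplies when adding a custom model endpoint."""
    url: str         # base URL of the model server (assumed)
    model_name: str  # the desired model
    api_key: str     # authentication detail


def build_chat_request(
    endpoint: ChatEndpoint,
    history: list[dict[str, str]],
    user_message: str,
) -> urllib.request.Request:
    """Build, but do not send, a chat request that carries the whole conversation."""
    # Resending prior turns with each new message is what lets the model keep
    # context; `history + [...]` builds a new list and leaves `history` unchanged.
    messages = history + [{"role": "user", "content": user_message}]
    body = json.dumps({"model": endpoint.model_name, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=endpoint.url.rstrip("/") + "/v1/chat/completions",  # assumed OpenAI-style path
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {endpoint.api_key}",
        },
        method="POST",
    )


endpoint = ChatEndpoint(url="http://localhost:8000", model_name="my-custom-model", api_key="example-key")
history = [
    {"role": "user", "content": "Name a planet."},
    {"role": "assistant", "content": "Mars."},
]
# "it" only makes sense because the earlier turns travel with the request.
req = build_chat_request(endpoint, history, "How far is it from the Sun?")
# urllib.request.urlopen(req) would send it; that step is omitted here.
```

The follow-up question refers back to an earlier answer, which is why the conversation history, not just the latest message, is part of every request.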

What we heard:

In our first iteration, the list of created endpoints (called a list view) lacked the context users needed to make effective use of each endpoint and chat with their custom models. There was also no clear connection to the chat with a model experience, and users asked us to bring the two workflows closer together.

Improvements we are considering for the future:

- Health check status - Add a health check status column to help users quickly identify issues with their endpoints' availability.

- Actions - Add a button for users to quickly enable or disable an endpoint connection, and provide an overflow menu with a PatternFly kebab icon for Edit and Delete.

- Details - Add a side drawer that can be opened to see additional details like the last time the API key changed and a link to the Taxonomy path.

- URL - Add a copy icon so the user can quickly copy the URL.

- Default sort - Sort endpoints by creation date by default, with the most recently created at the top.

Chat with a Model

There are many points in the experience when a user may want to be able to chat with a model. For example:

- To understand gaps in the model's responses, answering the question "Does the model have the data I am looking for?"

- To compare base model responses to custom model responses, answering the question "Did I make the model better?"

Ultimately, a user may want to compare a base model, a model fine-tuned with the InstructLab fine-tuning capabilities, and an InstructLab fine-tuned model combined with our RAG capabilities.

What we learned:

The first iteration was a very basic chatbot experience. It lacked the ability to view source information or take action on model responses, it was isolated from the model endpoint workflow, and it did not offer speech-to-text for accessibility.

How we responded for 1.4:

Improvements to the chat interface include the ability to add a custom model endpoint directly from this workflow.

Improvements we are considering for the future:

- Ability to review the sources used for a model's response and to take action on it (like, dislike, copy, download, and regenerate)

- Use voice input to create a message instead of typing it

- Provide additional in-context help

What’s Next

Lots of exciting improvements and new features are in the pipeline:

- Synthetic data generation and training

- Evaluating the newly fine-tuned model against the base model

- Complete end-to-end tuning without ever needing to use a CLI

Connect With Us

We continue to evolve RHEL AI with a customer-first mindset, so be on the lookout for more enhancements in future releases. Please sign up to participate in future research and share your RHEL AI experiences.

Additionally, please join our InstructLab community, check out our upstream UI (where a subset of these features will be available), and share your thoughts. We would love to connect with users like you.

About the authors

Design is a team sport, and as a principal designer on the Red Hat UXD team, Missy works closely with teams to solve complex problems and design meaningful, inclusive experiences. She joined Red Hat in January 2021. Currently, Missy is focused on RHEL AI and InstructLab, aiming to bridge the gap between the highly technical world of AI and less technical users.
