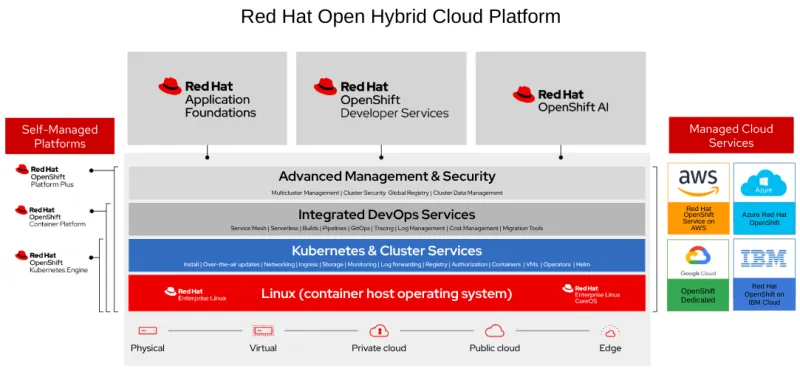

Red Hat OpenShift networking is much more than just Kubernetes networking. Built on Red Hat Enterprise Linux (RHEL), it is a suite of tightly integrated Red Hat products and capabilities that together provide a state-of-the-art networking ecosystem for Kubernetes applications that require zero trust networking, network observability, virtual machine networking, multi-cluster management, service mesh, and more.

One of the most important components of that Kubernetes networking ecosystem is the Container Network Interface (CNI) plugin, which assigns IP addresses to pods within a cluster, configures routes to establish network connectivity, and enforces security policies between pods. In Red Hat OpenShift networking, the default CNI plugin is OVN-Kubernetes, a full-featured, modern implementation of advanced networking capabilities that is trusted by a wide range of customers, from telecommunications providers delivering 5G cellular networks to the world's leading banks running financial services infrastructure.

The pod network is typically built as an overlay network that abstracts away the underlying physical network, providing a common networking fabric across a variety of private on-premises and public cloud platforms. Commonly, every pod receives a unique IP address in a layer 3 network, and the CNI plugin routes traffic between pods regardless of which physical node in the cluster each pod is placed on.
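
To make the layer 3 model concrete, here is a minimal Python sketch of how a cluster-wide pod CIDR can be carved into per-node subnets, so that any pod IP maps back to the node hosting it. The 10.128.0.0/14 cluster network and /23 host prefix mirror OpenShift's installer defaults as we understand them; the node names and both helper functions are hypothetical, and this is an illustration of the addressing idea, not how OVN-Kubernetes itself stores or allocates subnets.

```python
import ipaddress

# Assumed cluster-wide pod CIDR and per-node prefix length (OpenShift
# install-config defaults as we understand them; adjust to your cluster).
CLUSTER_NETWORK = ipaddress.ip_network("10.128.0.0/14")
HOST_PREFIX = 23


def allocate_node_subnets(nodes):
    """Hypothetical helper: give each node its own /23 slice, in order."""
    subnets = CLUSTER_NETWORK.subnets(new_prefix=HOST_PREFIX)
    return {node: next(subnets) for node in nodes}


def node_for_pod_ip(pod_ip, node_subnets):
    """Return the node whose subnet contains pod_ip, or None if unassigned."""
    addr = ipaddress.ip_address(pod_ip)
    for node, subnet in node_subnets.items():
        if addr in subnet:
            return node
    return None


if __name__ == "__main__":
    nodes = ["worker-0", "worker-1", "worker-2"]  # hypothetical node names
    table = allocate_node_subnets(nodes)
    for node, subnet in table.items():
        print(node, subnet, subnet.num_addresses)
    print(node_for_pod_ip("10.128.2.17", table))  # worker-1
```

With a /14 cluster network and a /23 host prefix, this scheme yields 2^(23-14) = 512 node subnets of 512 addresses each; the overlay then only needs to know which node owns which subnet to route any pod-to-pod packet.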

In a multi-tenant environment, that baseline indiscriminate connectivity between pods is undesirable for compliance and security reasons. Kubernetes Network Policy (along with its Admin Network Policy and Baseline Admin Network Policy enhancements) is an important tool for restricting pod-to-pod traffic within and across namespaces (the Kubernetes tenant boundary). However, because all tenants still share the same network, a single policy misconfiguration could expose traffic to bad actors.
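
As an illustration of how such a policy narrows the default any-to-any connectivity, the following Python sketch builds a standard `networking.k8s.io/v1` NetworkPolicy that denies all ingress to pods in a namespace except traffic from pods in that same namespace, and prints it as JSON, which `oc apply -f -` accepts. The namespace and policy names are hypothetical. Note that the isolation depends entirely on this manifest being correct, which is exactly the misconfiguration risk described above.

```python
import json


def same_namespace_only_policy(namespace, name="allow-same-namespace"):
    """Build a NetworkPolicy that admits ingress only from pods in `namespace`.

    An empty podSelector under spec selects every pod in the namespace; an
    empty podSelector inside `from` (with no namespaceSelector) matches every
    pod in that same namespace, so ingress from other namespaces is dropped.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


if __name__ == "__main__":
    # "tenant-a" is a hypothetical tenant namespace.
    print(json.dumps(same_namespace_only_policy("tenant-a"), indent=2))
```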

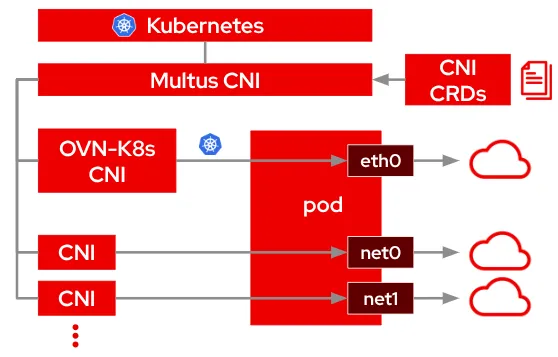

To mitigate this problem, Red Hat OpenShift networking teams helped establish the Kubernetes Network Plumbing Working Group to address low-level networking issues in Kubernetes. This group developed standards for attaching multiple networks to pods in Kubernetes, centered on the Multus CNI plugin, which enables pods to have multiple network interfaces configured from a standard Custom Resource Definition (CRD), the NetworkAttachmentDefinition.
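
A NetworkAttachmentDefinition (`k8s.cni.cncf.io/v1`) carries a CNI configuration in its `spec.config` field, serialized as a JSON string rather than a nested object. The sketch below builds one in Python, assuming a MACVLAN secondary network on a host interface named `eth1` with `host-local` IPAM; the network name, namespace, interface, and subnet are all hypothetical placeholders.

```python
import json


def network_attachment_definition(name, namespace, cni_config):
    """Wrap a CNI config dict in a Multus NetworkAttachmentDefinition.

    Multus expects spec.config to be a JSON *string*, not a nested object.
    """
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"config": json.dumps(cni_config)},
    }


# Hypothetical MACVLAN secondary network: the macvlan plugin creates the
# interface, and a separate IPAM plugin (host-local) hands out addresses.
macvlan_config = {
    "cniVersion": "0.3.1",
    "name": "macvlan-net",
    "type": "macvlan",
    "master": "eth1",  # assumed host NIC name
    "mode": "bridge",
    "ipam": {"type": "host-local", "subnet": "192.168.10.0/24"},
}

if __name__ == "__main__":
    nad = network_attachment_definition("macvlan-net", "tenant-a", macvlan_config)
    print(json.dumps(nad, indent=2))
```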

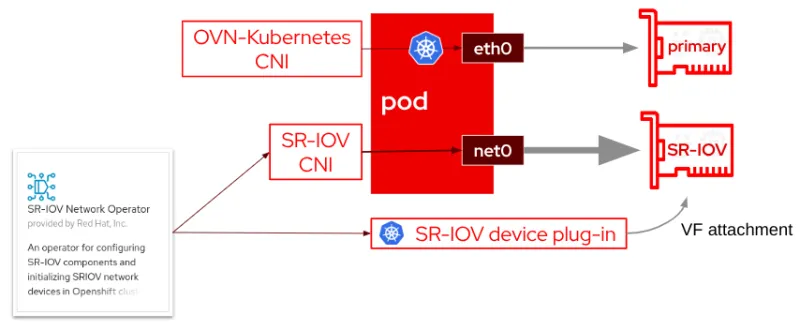

Typically in Kubernetes, each pod has a single network interface (apart from loopback). The pod's eth0 interface is configured by the default (primary) CNI plugin and carries both control and data plane traffic. Using Multus, you can create a multi-homed pod with multiple interfaces, each potentially configured by a different secondary CNI plugin that provides features beyond the default CNI plugin's capabilities. For example, a cluster administrator could keep Kubernetes control plane traffic on the pod's eth0 interface but configure a secondary interface with high-speed SR-IOV for streaming data.
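
A pod asks Multus for extra interfaces through the `k8s.v1.cni.cncf.io/networks` annotation, which can hold a JSON list naming NetworkAttachmentDefinitions and the interface name to use inside the pod. The Python sketch below builds such a pod manifest; the pod, namespace, image, and the `sriov-stream` network are hypothetical, and a real SR-IOV attachment also involves device resource requests that this sketch omits.

```python
import json

# Annotation key Multus reads to decide which extra networks to attach.
NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"


def multi_homed_pod(name, namespace, image, networks):
    """Build a pod manifest that asks Multus for extra interfaces.

    `networks` is a list of (network_name, interface_name) pairs referring to
    NetworkAttachmentDefinitions in the pod's namespace. eth0 is still wired
    by the cluster's default CNI plugin; each entry adds one more interface.
    """
    selection = [{"name": net, "interface": ifname} for net, ifname in networks]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {NETWORKS_ANNOTATION: json.dumps(selection)},
        },
        "spec": {"containers": [{"name": "app", "image": image}]},
    }


if __name__ == "__main__":
    # Hypothetical: "sriov-stream" would be an SR-IOV NetworkAttachmentDefinition
    # created by the cluster administrator.
    pod = multi_homed_pod(
        "streamer",
        "tenant-a",
        "registry.example.com/streamer:latest",
        [("sriov-stream", "net1")],
    )
    print(json.dumps(pod, indent=2))
```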

While Multus secondary interfaces provide a way to isolate traffic onto a secondary network, limitations remain and can present new challenges. For one, they add operational complexity. Also, the secondary CNI plugins available are typically focused on a fairly narrow set of functionality (for instance, MACVLAN network configuration only), often requiring additional CNI plugins (for IPAM, for example) to make the secondary network usable. The OVN-Kubernetes CNI plugin can be used for both primary and secondary networks to mitigate that problem. Fundamentally, the issue with secondary interfaces is that they are not "first-class citizens" in Kubernetes networking, and limitations still exist (for example, they have limited integration with Kubernetes services).
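
The plugin-sprawl point can be seen directly in CNI configuration: the runtime executes the plugin named by `type`, and a plugin such as MACVLAN then delegates addressing to a second IPAM plugin named by `ipam.type`. The Python sketch below lists the plugins a single, non-chained config relies on, contrasting a MACVLAN config using Whereabouts IPAM with an OVN-Kubernetes layer 2 secondary network config. The OVN-Kubernetes field names reflect OpenShift's secondary-network documentation as we understand it and should be checked against your release; all network names and subnets are hypothetical.

```python
def plugins_invoked(cni_config):
    """List the plugin binaries a single (non-chained) CNI config relies on.

    The runtime executes the plugin named by "type"; that plugin in turn
    delegates address assignment to the IPAM plugin named by "ipam.type",
    if one is configured.
    """
    plugins = [cni_config["type"]]
    ipam = cni_config.get("ipam", {})
    if "type" in ipam:
        plugins.append(ipam["type"])
    return plugins


# MACVLAN only builds the interface; addressing needs a second plugin.
macvlan = {
    "cniVersion": "0.3.1",
    "name": "macvlan-net",
    "type": "macvlan",
    "master": "eth1",  # assumed host NIC name
    "ipam": {"type": "whereabouts", "range": "192.168.20.0/24"},
}

# OVN-Kubernetes secondary layer 2 network: subnet handling is built in.
ovn_layer2 = {
    "cniVersion": "0.3.1",
    "name": "l2-net",
    "type": "ovn-k8s-cni-overlay",
    "topology": "layer2",
    "subnets": "10.100.200.0/24",
    "netAttachDefName": "tenant-a/l2-net",  # <namespace>/<NAD name>
}

if __name__ == "__main__":
    print(plugins_invoked(macvlan))     # ['macvlan', 'whereabouts']
    print(plugins_invoked(ovn_layer2))  # ['ovn-k8s-cni-overlay']
```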

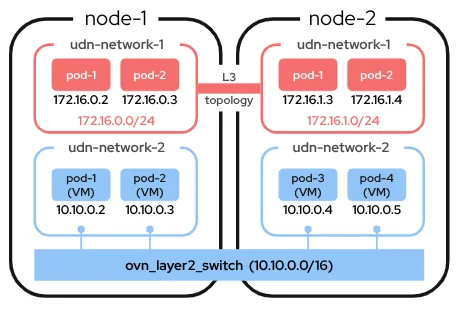

To directly address these limitations of Multus secondary networks, particularly for advanced network functionality, segmentation, and isolation, Red Hat OpenShift developed a foundational Kubernetes networking enhancement called user-defined networks.

What is a user-defined network?

A user-defined network (UDN) supports seamless integration between OpenShift's OVN-Kubernetes cluster network and existing external networks, and enables targeted networking solutions that span that boundary. UDN improves the flexibility and segmentation capability of the default layer 3 Kubernetes pod network by enabling custom layer 2, layer 3, and localnet network segments that act as either primary or secondary networks for container pods and virtual machines. Because it is built on OpenShift's default OVN-Kubernetes networking, UDN provides a set of network semantics that network administrators and applications are already familiar with. UDN augments OpenShift's existing Multus-enabled secondary CNI capability by providing a comparable experience and feature set across all network segments.
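
For a sense of what declaring a segment looks like, the Python sketch below builds a namespaced `UserDefinedNetwork` manifest for a layer 2 or layer 3 topology, plus a namespace labeled for a primary UDN. The `k8s.ovn.org/v1` field names (`topology`, `layer2`/`layer3`, `role`, `subnets`, `hostSubnet`) and the `k8s.ovn.org/primary-user-defined-network` namespace label reflect the OVN-Kubernetes API as we understand it for OpenShift 4.18; verify them against your release's documentation. Localnet segments are out of scope here, and all names and CIDRs are hypothetical.

```python
import ipaddress
import json


def primary_udn_namespace(name):
    """Namespace carrying the label we assume a primary UDN requires."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {"k8s.ovn.org/primary-user-defined-network": ""},
        },
    }


def user_defined_network(name, namespace, topology, cidr, role="Primary", host_subnet=24):
    """Build a UserDefinedNetwork manifest (assumed k8s.ovn.org/v1 schema)."""
    ipaddress.ip_network(cidr)  # raises ValueError on a malformed CIDR
    if role not in ("Primary", "Secondary"):
        raise ValueError(f"unsupported role: {role}")
    if topology == "Layer2":
        # One flat segment spanning all nodes: just a list of CIDRs.
        spec = {"topology": "Layer2", "layer2": {"role": role, "subnets": [cidr]}}
    elif topology == "Layer3":
        # Routed segment: each node gets a /host_subnet slice of the CIDR.
        spec = {
            "topology": "Layer3",
            "layer3": {"role": role, "subnets": [{"cidr": cidr, "hostSubnet": host_subnet}]},
        }
    else:
        raise ValueError(f"unsupported topology: {topology}")
    return {
        "apiVersion": "k8s.ovn.org/v1",
        "kind": "UserDefinedNetwork",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


if __name__ == "__main__":
    print(json.dumps(primary_udn_namespace("tenant-a"), indent=2))
    print(json.dumps(user_defined_network("udn-1", "tenant-a", "Layer2", "10.100.0.0/24"), indent=2))
```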

UDN uniquely supports common virtual machine (VM) networking use cases, such as assigning a VM a static IP address that persists for the VM's lifetime, and providing a layer 2 primary pod network so that VMs can live migrate between nodes. These capabilities are fully integrated with Red Hat OpenShift Virtualization.
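
The key idea behind a lifetime-stable VM address is that the lease belongs to the VM, not to whichever pod is currently running it. The Python sketch below is a toy allocator illustrating that idea; the class and its methods are hypothetical and are not how OVN-Kubernetes or OpenShift Virtualization implement IPAM. A live migration starts a new pod on another node, and because the lease is keyed by the VM's identity and the layer 2 segment spans nodes, the new pod can keep the same address.

```python
import ipaddress


class PersistentIPAllocator:
    """Toy allocator that ties an address to a VM, not to the pod running it."""

    def __init__(self, cidr):
        self._free = list(ipaddress.ip_network(cidr).hosts())
        self._leases = {}  # "namespace/vm-name" -> address

    def address_for(self, namespace, vm_name):
        """Return the VM's existing lease, or allocate the next free address."""
        key = f"{namespace}/{vm_name}"
        if key not in self._leases:
            if not self._free:
                raise RuntimeError("subnet exhausted")
            self._leases[key] = self._free.pop(0)
        return self._leases[key]

    def release(self, namespace, vm_name):
        """Return the address to the pool only when the VM itself is deleted."""
        addr = self._leases.pop(f"{namespace}/{vm_name}", None)
        if addr is not None:
            self._free.append(addr)


if __name__ == "__main__":
    ipam = PersistentIPAllocator("10.100.0.0/24")
    before = ipam.address_for("tenant-a", "vm-db")  # pod on the source node
    after = ipam.address_for("tenant-a", "vm-db")   # new pod after live migration
    print(before, after, before == after)  # 10.100.0.1 10.100.0.1 True
```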

UDN aims to provide a consistent experience across all network segments, and the feature set introduced in OpenShift 4.18 is the beginning of that journey. Over the next releases, we will introduce support for more features that make UDN more useful and usable, starting with support for Admin Network Policy and OpenShift Routes on UDN segments.

Kubernetes defined the modern application deployment model, and OpenShift has been the premier Kubernetes platform. OpenShift’s legacy of Kubernetes leadership and innovation continues with the addition of UDN, as Red Hat OpenShift networking takes a bold step to once again redefine Kubernetes networking for the next generation of applications.


About the authors

Marc Curry is a Distinguished Product Manager in Red Hat's Hybrid Platform Business Unit, specializing in the networking architecture, performance, and scalability of the OpenShift Container Platform.

With over 20 years at Red Hat, Marc has held various technical roles, including serving as a Solutions Architect focused on open-source solutions for the telecommunications industry. His expertise builds upon a strong foundation in scientific and high-performance computing.

Feng leads the networking engineering organization in OpenShift, responsible for all networking components, including CNI (OVN-Kubernetes), Ingress, network observability, service mesh (OSSM), and Connectivity Link (RHCL).
