Introduction

In this blog, I describe the Gatekeeper integration with Red Hat Advanced Cluster Management for Kubernetes, focusing on the value that Red Hat Advanced Cluster Management provides by integrating Gatekeeper. Gatekeeper is an open source project that enables unified, context-aware policy enforcement and can evaluate the compliance of Kubernetes resources against policies. It uses Open Policy Agent (OPA), a general-purpose policy engine, with Rego as the policy language.

Continue reading to learn about the following aspects and components to integrate Gatekeeper with Red Hat Advanced Cluster Management:

- Create the Red Hat Advanced Cluster Management Gatekeeper operator policy to install and monitor the Gatekeeper Operator.

- Create a policy to configure which namespaces Gatekeeper handles, and to set cluster-wide configuration options.

- Create policies that distribute Gatekeeper rules onto your clusters.

- Learn how to monitor the Gatekeeper-related policies in Red Hat Advanced Cluster Management.

Integration: High-level overview

View the following diagram for a visual of the integration:

Notice the policies that are handled by the Kubernetes configuration policy controller. In theory, you can put all Gatekeeper-related configuration into a single policy; the options are discussed later in this blog.

Support for Gatekeeper Operator in the context of Red Hat Advanced Cluster Management

In this blog, we use and test the Gatekeeper Operator from the upstream project. The Operator can be used without Red Hat Advanced Cluster Management; however, Red Hat Advanced Cluster Management provides tighter integration and verification of the use cases.

Note: Red Hat Advanced Cluster Management 2.2 fully supports the Gatekeeper Operator for OpenShift 4.6+ clusters. Review our documentation for more information.

Install Gatekeeper as Operator using policies

Use the Gatekeeper operator policy to install the community version of Gatekeeper. Use Red Hat Advanced Cluster Management features such as PlacementRules to distribute the policy to the clusters where you want it applied.
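The following is a minimal sketch of how placement works for such a policy. It assumes that the Gatekeeper Operator is already installed on the managed clusters and only shows how a Gatekeeper instance could be wrapped in a Policy and distributed with a PlacementRule and PlacementBinding. The resource names, the `default` namespace, the `environment: dev` cluster label, and the Gatekeeper spec fields are illustrative assumptions; the Gatekeeper operator policy in the policy-collection repository also handles the Operator installation and may differ in detail.

```yaml
# Hypothetical sketch: distribute a Gatekeeper instance to selected clusters.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-gatekeeper-instance      # hypothetical name
  namespace: default                    # assumption: policy namespace on the hub
spec:
  remediationAction: enforce
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: gatekeeper-instance
        spec:
          remediationAction: enforce
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operator.gatekeeper.sh/v1alpha1
                kind: Gatekeeper
                metadata:
                  name: gatekeeper
                spec:
                  # Assumption: field names follow the Gatekeeper Operator API.
                  validatingWebhook: Enabled
                  webhook:
                    # Assumption: emits the admission events that an
                    # admission check policy can look for.
                    emitAdmissionEvents: Enabled
---
apiVersion: apps.open-cluster-management.io/v1
kind: PlacementRule
metadata:
  name: placement-policy-gatekeeper-instance
  namespace: default
spec:
  clusterConditions:
    - status: "True"
      type: ManagedClusterConditionAvailable
  clusterSelector:
    matchExpressions:
      - key: environment                # hypothetical cluster label
        operator: In
        values:
          - dev
---
apiVersion: policy.open-cluster-management.io/v1
kind: PlacementBinding
metadata:
  name: binding-policy-gatekeeper-instance
  namespace: default
placementRef:
  name: placement-policy-gatekeeper-instance
  kind: PlacementRule
  apiGroup: apps.open-cluster-management.io
subjects:
  - name: policy-gatekeeper-instance
    kind: Policy
    apiGroup: policy.open-cluster-management.io
```

Changing the `clusterSelector` is enough to roll the same policy out to a different set of clusters; the policy itself stays unchanged.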

Configure namespaces to be excluded

Once you have installed the Operator, apply the policy-gatekeeper-config-exclude-namespaces.yaml policy to configure the namespaces that you do not want Gatekeeper to handle. Check out the Exempting Namespaces from Gatekeeper README for more information.

List the namespaces that you want to exclude. View the following YAML example where several OpenShift and Open Cluster Management namespaces are excluded:

objectDefinition:
  apiVersion: config.gatekeeper.sh/v1alpha1
  kind: Config
  metadata:
    name: config
    namespace: openshift-gatekeeper-system
  spec:
    match:
      - excludedNamespaces:
          - hive
          - kube-system
          - kube-public
          - openshift-kube-apiserver
          - openshift-monitoring
          - open-cluster-management-agent
          - open-cluster-management
          - open-cluster-management-agent-addon
          - openshift-sdn
          - openshift-machine-config-operator
          - openshift-machine-api
          - openshift-ingress-operator
          - openshift-ingress
          - sdn-controller
          - openshift-cluster-csi-drivers
          - openshift-kube-controller-manager-operator
          - openshift-kube-controller-manager
          ....
        processes:
          - '*'
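The snippet above starts at `objectDefinition`. As a sketch, assuming the enclosing Policy, PlacementRule, and PlacementBinding follow the usual Red Hat Advanced Cluster Management pattern, the configuration policy that carries this Config could look like the following. The policy name is hypothetical and the namespace list is shortened.

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: ConfigurationPolicy
metadata:
  name: gatekeeper-config-exclude-namespaces   # hypothetical name
spec:
  remediationAction: enforce
  severity: low
  object-templates:
    - complianceType: musthave
      objectDefinition:
        apiVersion: config.gatekeeper.sh/v1alpha1
        kind: Config
        metadata:
          name: config
          namespace: openshift-gatekeeper-system
        spec:
          match:
            - excludedNamespaces:       # shortened list for illustration
                - hive
                - kube-system
                - open-cluster-management
              processes:
                - '*'                   # exclude from all Gatekeeper processes
```

Setting `processes` to `'*'` excludes the listed namespaces from every Gatekeeper process; the Gatekeeper documentation also describes listing individual processes, such as `audit` or `webhook`, to exclude a namespace from only part of the enforcement.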

Write and distribute Gatekeeper policies with Red Hat Advanced Cluster Management

In this section, I demonstrate and describe how to write a policy for Gatekeeper.

As a first step, create a ConstraintTemplate and a Constraint. The constraint template defines the Rego code that enforces the policy and the schema of the parameters that a constraint passes to that code; the constraint then applies the template to specific resources. View the following examples:

- A constraint template that includes the rego parameter:

  objectDefinition:
    apiVersion: templates.gatekeeper.sh/v1beta1
    kind: ConstraintTemplate
    metadata:
      name: k8sminreplicacount
    spec:
      crd:
        spec:
          names:
            kind: K8sMinReplicaCount
          validation:
            openAPIV3Schema:
              properties:
                min:
                  type: integer
      targets:
        - target: admission.k8s.gatekeeper.sh
          rego: |
            package k8sminreplicacount

            violation[{"msg": msg, "details": {"missing_replicas": missing}}] {
              provided := input.review.object.spec.replicas
              required := input.parameters.min
              missing := required - provided
              missing > 0
              msg := sprintf("you must provide %v more replicas", [missing])
            }

- A concrete constraint which defines that every deployment in the deploymenttest namespace must have at least 5 replicas:

  apiVersion: constraints.gatekeeper.sh/v1beta1
  kind: K8sMinReplicaCount
  metadata:
    name: deployment-must-have-min-replicas
  spec:
    match:
      kinds:
        - apiGroups:
            - apps
          kinds:
            - Deployment
        - apiGroups:
            - autoscaling
          kinds:
            - Scale
      namespaces:
        - deploymenttest
    parameters:
      min: 5

- An audit template to check for violations in existing resources:

  metadata:
    name: policy-gatekeeper-audit
  spec:
    remediationAction: inform # will be overridden by remediationAction in parent policy
    severity: low
    object-templates:
      - complianceType: musthave
        objectDefinition:
          apiVersion: constraints.gatekeeper.sh/v1beta1
          kind: K8sMinReplicaCount
          metadata:
            name: deployment-must-have-min-replicas
          status:
            totalViolations: 0

- An admission template to check for violation events (annotated with event_type: violation) that are generated when requests violating the previously defined constraint are denied:

  metadata:
    name: policy-gatekeeper-admission
  spec:
    remediationAction: inform # will be overridden by remediationAction in parent policy
    severity: low
    object-templates:
      - complianceType: mustnothave
        objectDefinition:
          apiVersion: v1
          kind: Event
          metadata:
            namespace: openshift-gatekeeper-system
            annotations:
              constraint_action: deny
              constraint_kind: K8sMinReplicaCount
              constraint_name: deployment-must-have-min-replicas
              event_type: violation

Put the elements together into a single policy

As mentioned before, you can put all Gatekeeper-related configuration into a single policy; however, this is not a best practice. Instead, it is recommended to use one policy that combines the previously mentioned elements you want to implement for a given rule. After all of the resources have been defined, include them in a Red Hat Advanced Cluster Management configuration policy. You can either define one ConfigurationPolicy and add all four definitions to its object-templates section, or create one ConfigurationPolicy for each of the four resources. Creating a configuration policy for each resource has advantages; for example, the Red Hat Advanced Cluster Management console shows more clearly which resources of the policy are non-compliant.
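The one-ConfigurationPolicy-per-resource layout could be sketched as follows, assuming the resources from the previous section. Each entry under `policy-templates` is a separate ConfigurationPolicy, so the console reports compliance for each one individually. The policy name and the `default` namespace are hypothetical, the ConstraintTemplate entry is omitted for brevity, and the PlacementRule and PlacementBinding follow the same pattern as any other policy.

```yaml
# Hypothetical sketch: one Policy, one ConfigurationPolicy per Gatekeeper resource.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-gatekeeper-minreplicacount   # hypothetical name
  namespace: default                        # assumption: policy namespace on the hub
spec:
  remediationAction: inform   # overrides remediationAction in each template
  disabled: false
  policy-templates:
    # The ConstraintTemplate gets its own ConfigurationPolicy in the same way
    # (omitted here for brevity).
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-gatekeeper-constraint
        spec:
          remediationAction: inform
          severity: low
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: constraints.gatekeeper.sh/v1beta1
                kind: K8sMinReplicaCount
                metadata:
                  name: deployment-must-have-min-replicas
                spec:
                  match:
                    kinds:
                      - apiGroups: ["apps"]
                        kinds: ["Deployment"]
                      - apiGroups: ["autoscaling"]
                        kinds: ["Scale"]
                    namespaces: ["deploymenttest"]
                  parameters:
                    min: 5
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-gatekeeper-audit
        spec:
          remediationAction: inform
          severity: low
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: constraints.gatekeeper.sh/v1beta1
                kind: K8sMinReplicaCount
                metadata:
                  name: deployment-must-have-min-replicas
                status:
                  totalViolations: 0
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-gatekeeper-admission
        spec:
          remediationAction: inform
          severity: low
          object-templates:
            - complianceType: mustnothave
              objectDefinition:
                apiVersion: v1
                kind: Event
                metadata:
                  namespace: openshift-gatekeeper-system
                  annotations:
                    constraint_action: deny
                    constraint_kind: K8sMinReplicaCount
                    constraint_name: deployment-must-have-min-replicas
                    event_type: violation
```

Because the parent `remediationAction` overrides every template, keep in mind that `enforce` would not only create the constraint but would also make the `mustnothave` admission template delete matching events.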

Policies, configuration policies, and object-templates

Let’s discuss the use case further to see how the policy works:

First, let’s try to create a deployment that violates the defined constraints. Download the deploymenttest_noprobe_missingreplicas.yaml file before you run the following command:

oc apply -f deploymenttest_noprobe_missingreplicas.yaml

Notice that the Gatekeeper admission webhook denies the request because the deployment violates the following three rules:

- Liveness probe must be present

- Readiness probe must be present

- At least five replicas must be present (as previously mentioned)

View the following error messages returned by the Gatekeeper admission webhook:

[denied by deployment-must-have-min-replicas] you must provide 2 more replicas): error when creating "deploymenttest_noprobe_missingreplicas.yaml": admission webhook "validation.gatekeeper.sh" denied the request: [denied by containerlivenessprobenotset] Deployment/gatekeepertest: container 'nginx' has no livenessProbe. See: https://docs.openshift.com/container-platform/latest/applications/application-health.html

[denied by containerlivenessprobenotset] Deployment/gatekeepertest: container 'nginx' has no livenessProbe. See: https://docs.openshift.com/container-platform/latest/applications/application-health.html

[denied by containerreadinessprobenotset] Deployment/gatekeepertest: container 'nginx' has no readinessProbe. See: https://docs.openshift.com/container-platform/4.4/applications/application-health.html

[denied by containerreadinessprobenotset] Deployment/gatekeepertest: container 'nginx' has no readinessProbe. See: https://docs.openshift.com/container-platform/4.4/applications/application-health.html

After that, let’s create a deployment which passes the validation, and then let’s scale the deployment to 3 replicas:

oc scale deployment my-application-web --replicas=3 -n deploymenttest

Then run the following command to patch the deployment to 3 replicas:

oc patch deployment my-application-web -n deploymenttest -p '{"spec": {"replicas": 3}}'

Note that with the current setup, the patch request is denied and generates a violation event, while the scale operation succeeds. Continue reading for an explanation of why scaling the replicas is possible, and how to configure Gatekeeper to also detect and forbid the scale operation.
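For reference, a Deployment that passes the validation in this walkthrough could look like the following sketch. It assumes that the liveness and readiness rules only check that each container defines the probes. The name my-application-web and the deploymenttest namespace come from the commands above; the container name, image, port, and probe settings are hypothetical.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-application-web
  namespace: deploymenttest
spec:
  replicas: 5                  # satisfies the min: 5 constraint
  selector:
    matchLabels:
      app: my-application-web
  template:
    metadata:
      labels:
        app: my-application-web
    spec:
      containers:
        - name: nginx
          # Hypothetical image, pinned to a tag instead of latest.
          image: nginxinc/nginx-unprivileged:1.20
          ports:
            - containerPort: 8080
          livenessProbe:       # satisfies the liveness probe rule
            httpGet:
              path: /
              port: 8080
          readinessProbe:      # satisfies the readiness probe rule
            httpGet:
              path: /
              port: 8080
```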

Now let’s log in to the Red Hat Advanced Cluster Management console to view the status of the defined policy. In the following image, you can see that two of the four resource templates are not compliant:

Further explanation:

The first non-compliant template is the audit template. The constraint reports at least one violation (totalViolations > 0), while the audit template requires totalViolations: 0. Once you scale the deployment back to 5 replicas, the template becomes compliant again.

Click the View history link for more details about the violation.

The second non-compliant template is the admission template, because violation events exist in the openshift-gatekeeper-system namespace. These events were generated when requests to create or modify the Deployment object were denied.

You can either delete this event manually or wait until the event is automatically purged after the default value of 2 hours (event-ttl).

oc delete event -n openshift-gatekeeper-system my-application-web.165ba7be7e8eebf7

After the violation event is removed, the configuration policy becomes compliant again.

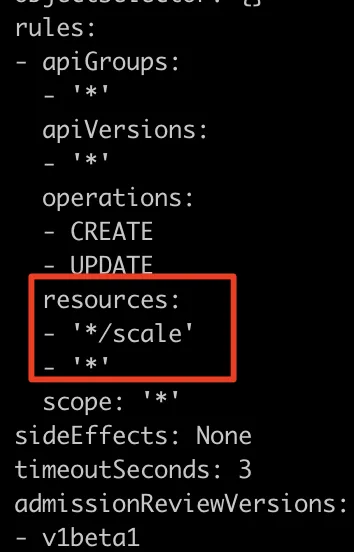

Modifications to make scale operations work

As previously demonstrated, it is possible to scale the deployment to 3 replicas. The policy detected the violation, but why was it possible to perform the scale operation at all?

The oc scale command changes the replica count through the scale subresource of the Deployment, which the Gatekeeper validating webhook does not intercept by default. To cover this case, you need to modify the Gatekeeper configuration and its related policies to include Scale, which is handled as a subresource.

Make sure that the constraint in the Gatekeeper policy matches the Scale kind by including the following entry under spec.match.kinds:

- apiGroups:
    - autoscaling
  kinds:
    - Scale

You also need to modify the ValidatingWebhookConfiguration object so that its rules include the scale subresource. Run the following command:

oc edit ValidatingWebhookConfiguration gatekeeper-validating-webhook-configuration

After you complete the modifications, scaling the deployment to fewer than 5 replicas is no longer allowed. The denied request generates the violation event described earlier in the openshift-gatekeeper-system namespace, which the admission template detects.
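As a sketch of that edit, assuming the Gatekeeper webhook's default rule matches all resources with `resources: ["*"]` (which, in Kubernetes webhook rules, does not include subresources), you could add the scale subresource of Deployments to the rule. Only the `rules` section is shown, and the exact rule layout may differ in your Gatekeeper version.

```yaml
# Excerpt of gatekeeper-validating-webhook-configuration (rules only).
webhooks:
  - name: validation.gatekeeper.sh
    rules:
      - apiGroups: ["*"]
        apiVersions: ["*"]
        operations: ["CREATE", "UPDATE"]
        resources:
          - "*"                  # all resources, but no subresources
          - "deployments/scale"  # added: intercept scale requests on Deployments
```

Using `"*/scale"` instead of `"deployments/scale"` would intercept the scale subresource of every resource type, which is broader than this use case needs.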

Recap of Gatekeeper-related policies in the policy-collection repository

The following Gatekeeper-related policies are available in the policy-collection repository:

- Gatekeeper operator policy: As discussed, this is the foundation to install Gatekeeper for the integration.

- Gatekeeper container image with the latest tag: Use this Gatekeeper policy to prevent containers in deployable resources from using images with the latest tag.

- Gatekeeper liveness probe not set: Use this Gatekeeper policy to require pods to have a liveness probe.

- Gatekeeper readiness probe not set: Use this Gatekeeper policy to require pods to have a readiness probe.

- Gatekeeper allowed external IPs: Use the Gatekeeper allowed external IPs policy to define external IPs that can be applied to a managed cluster.
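To illustrate what a rule such as the latest-tag check can look like, here is a sketch of a constraint based on the disallowed-tags template from the upstream gatekeeper-library. It assumes that the K8sDisallowedTags ConstraintTemplate is installed; the policy in the policy-collection repository may implement the check differently. The constraint name and match scope are hypothetical.

```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sDisallowedTags
metadata:
  name: container-image-must-not-have-latest-tag   # hypothetical name
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
    namespaces: ["deploymenttest"]   # hypothetical scope
  parameters:
    tags: ["latest"]                 # image tags to reject
    exemptImages: []                 # images that skip the check
```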

Enabling mutation

I also want to introduce two policies that use the new Gatekeeper mutation feature (mutating webhooks, alpha). The following policies are available in the community folder of the policy-collection repository:

- Gatekeeper mutation policy (owner annotation): Use the Gatekeeper mutation policy to set the owner annotation on pods.

  Note: Gatekeeper controllers must be installed to use the Gatekeeper policy. For more information, see the Gatekeeper documentation.

- Gatekeeper mutation policy (image pull policy): Use the Gatekeeper mutation policy to set or update the image pull policy on your pods.
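The following sketch shows the two kinds of mutation resources, modeled on the examples in the Gatekeeper mutation documentation. It assumes a Gatekeeper version that serves the alpha mutation API (mutations.gatekeeper.sh/v1alpha1) with the mutating webhook enabled. The resource names, the owner value `admin`, and the `Always` pull policy are illustrative; the community policies may use different values.

```yaml
# Add an "owner" annotation to pods (hypothetical value).
apiVersion: mutations.gatekeeper.sh/v1alpha1
kind: AssignMetadata
metadata:
  name: demo-annotation-owner
spec:
  match:
    scope: Namespaced
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
  location: "metadata.annotations.owner"
  parameters:
    assign:
      value: "admin"
---
# Set imagePullPolicy on every container of a pod.
apiVersion: mutations.gatekeeper.sh/v1alpha1
kind: Assign
metadata:
  name: demo-image-pull-policy
spec:
  applyTo:
    - groups: [""]
      kinds: ["Pod"]
      versions: ["v1"]
  match:
    scope: Namespaced
    kinds:
      - apiGroups: ["*"]
        kinds: ["Pod"]
  location: "spec.containers[name:*].imagePullPolicy"
  parameters:
    assign:
      value: Always
```

AssignMetadata only adds labels or annotations and does not overwrite an existing value, whereas Assign modifies fields outside of metadata and therefore needs the `applyTo` section.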

Summary

We are continuously adding more examples to our policy-collection repository and welcome contributions from the open source community. Learn more about contributing by reviewing the guidelines in the Contributing policies document.

