We’re on a journey - with you

In OpenShift 4.13 we’ll be supporting customization of the RHEL CoreOS (RHCOS) image. That includes things like third-party agent software and even out-of-tree kernel drivers. We’re also going to be supporting a simplified interface for managing configuration files. Before we get to the details of what’s new, it’s worth taking a look at where we started.

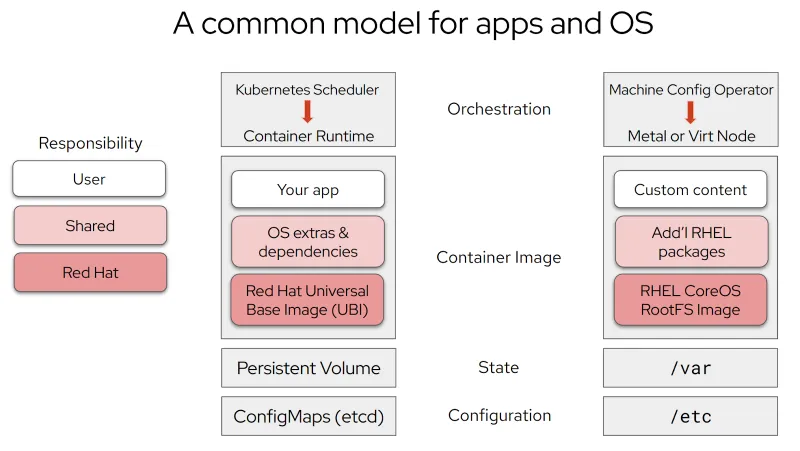

A key theme of OpenShift 4 is an opinionated and automated approach, starting from installation to management to the operating system. Using Kubernetes operators to manage the platform itself, we introduced the Machine Config Operator and brought the Kube API down to the OS-level. Your machine’s /etc configuration became declarative objects in etcd. Kernel command-line options could now be managed with oc or kubectl. Exciting times!

Now, the operating system is simply another component of the cluster—versioned, tested, and shipped alongside the rest of the platform. To make the cluster even more manageable and predictable, all additional software running on the nodes should be delivered as containers. No exceptions. We made cluster safety our highest virtue and keeping things cleanly separated between what Red Hat provides and what customers provide supports this virtue. Compared to OpenShift 3, this architecture reduced cluster administrator toil substantially and has brought a vastly simplified deployment and more reliable upgrade process to the platform.

But over time, many compelling scenarios have come directly from our customers and partners that required more technical flexibility than this model offered. Third-party system agents and kernel modules were a common requirement. Containers don’t offer isolation if a system agent needs to run as root on the node, and many agents aren’t containerized by their vendors. Other common requests were for additional RHEL packages that might be of use only to a small percentage of customers. All legitimate use cases, but only a select few could be accommodated. Adding packages to the base image increases the image size and attack surface for all customers, so the bar for adding new packages is quite high.

We also heard from some customers that MachineConfigs were a bit of a learning curve for their administrators. Skilled RHEL admins had to learn a new layer of abstraction and one that could get in the way of their operations and troubleshooting, particularly in critical situations.

OpenShift 3 users had nearly complete freedom to manage their RHEL nodes and do all these things, but we knew from experience that this freedom came with a lot of life cycle problems. How could we bring back flexibility without configuration drift and complexity?

Evolving RHEL CoreOS

Engineering decided to take a page, rather directly, out of containerized application development. The central idea of CoreOS Layering is that we put the OS root filesystem image in a standard OCI container image. To be clear, the OS is not running as a container; RHCOS still boots using a kernel and systemd as before. Rather, we’re using containers as an image format for updating physical and virtual machine disks. In other words, rpm-ostree can now use container images as a transport format for updating root filesystems.

It’s a simple idea but it opens up a lot of possibilities. By using OCI containers, we unlock the use of the same industry-standard tooling you are already using in and around your Kubernetes environments. Suddenly all these tools interoperate with CoreOS node base images: registries, security scanners, build tools, even container engines. They all just work. Now you can take an OS base image and describe all your modifications in a Dockerfile (aka Containerfile). Note: while RHCOS images aren’t intended to be run as containers, they actually can be, and it’s a super easy way to test or inspect an image.
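Because the base image is an ordinary OCI image, inspecting it can be as simple as running a one-off command inside it. The following is a minimal sketch, assuming podman is installed and you have pull access to the image. The function name `image_query` is a hypothetical helper made up for illustration, not part of any Red Hat tooling.

```shell
#!/bin/sh
# Run a one-off command inside an OS image without booting it.
# Usage: image_query IMAGE COMMAND [ARGS...]
# (image_query is a hypothetical helper name used only for this sketch.)
image_query() {
    if [ "$#" -lt 2 ]; then
        echo "usage: image_query IMAGE COMMAND [ARGS...]" >&2
        return 2
    fi
    # --rm discards the container afterwards. Nothing is booted here: the
    # image's kernel and systemd are not running, we only read its files.
    podman run --rm "$@"
}

# Example (the image reference is a placeholder):
#   image_query quay.io/fedora/fedora-coreos:stable rpm -q kernel
```

This is handy for checking which package versions a base or customized image carries before rolling it out to any node.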

Let’s talk a little more about what’s not changing. RHCOS remains an image-based edition of RHEL. It is not an assortment of packages at various patch levels. Each RHEL CoreOS image is a set of packages at a specific revision level, shipped as a unit. Building your customizations on top of this atomic unit makes it easy to stay as close as possible to the exact same units we test every day in our CI system.

The application container layering model has been incredibly successful in part by having a clear separation of responsibilities and this has informed the development of CoreOS Layering. By adopting this model, customizing the OS becomes just like building your application containers.

For application containers, you start with a base image, supported by a vendor or a project, very often add some additional distro dependencies, and then your custom content. Then you build the image, push it to a registry, and deploy it to Kubernetes. Likewise, with RHEL CoreOS, you start with a base image, perhaps add a third-party service, which in turn may pull in some additional RHEL packages, and drop in configuration files. Build the image, push it, and deploy it with the Machine Config Operator. Then, just like Kubernetes orchestrates a rolling rebase of your application containers, the Machine Config Operator triggers a rolling rebase of nodes into the new image.

Comparing the app state and configuration to the operating system isn’t perfect, but there are some analogs of /var and /etc in the Kubernetes realm.

So how does this really work?

Let’s say that you wanted to try a test kernel from CentOS Stream. You might have a Containerfile like:

    # Get RHCOS base image of target cluster `oc adm release info --image-for rhel-coreos`
    FROM quay.io/openshift-release/ocp-release@sha256...

    # Enable cliwrap; this is currently required to intercept some command invocations made from
    # kernel scripts.
    RUN rpm-ostree cliwrap install-to-root /

    # Replace the kernel packages. Note as of RHEL 9.2+, there is a new kernel-modules-core that needs
    # to be replaced.
    RUN rpm-ostree override replace https://mirror.stream.centos.org/9-stream/BaseOS/x86_64/os/Packages/kernel-{,core-,modules-,modules-core-,modules-extra-}5.14.0-295.el9.x86_64.rpm && \
        ostree container commit

SOURCE: https://github.com/openshift/rhcos-image-layering-examples/blob/master/override-kernel/Containerfile

Now you can build your custom RHCOS container image using podman. Then you push it to your container registry and take note of the image’s SHA256 digest. Finally, you tell the cluster to roll out the new image by applying a MachineConfig specifying the location of the container image and its digest:

    apiVersion: machineconfiguration.openshift.io/v1
    kind: MachineConfig
    metadata:
      labels:
        machineconfiguration.openshift.io/role: worker
      name: os-layer-hotfix
    spec:
      osImageURL: quay.io/my-registry/custom-image@sha256:306b606615dcf8f0e5e7d87fee3

Note: we add the hash because it’s critical that the pools be consistent. If we allowed updating by tag, the image could change mid-update.
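The build, push, and rollout steps described above can be sketched as a pair of shell functions. This is an illustration under stated assumptions, not a supported procedure: it assumes podman and oc are installed and logged in, that the Containerfile is in the current directory, and that the repository name, the `latest` working tag, and the function names `build_and_push` and `render_machineconfig` are all placeholders chosen for this example.

```shell
#!/bin/sh
# Sketch of the off-cluster flow: build, push, pin by digest, roll out.
# Function names, repository, and tag are placeholders for illustration.

# Build the Containerfile in the current directory and push the result.
# Prints the pushed image reference pinned by digest (repo@sha256:...).
build_and_push() {
    repo=$1    # e.g. quay.io/my-registry/custom-image
    digestfile=$(mktemp) || return 1
    # Send podman's own output to stderr so stdout carries only the result.
    podman build -t "$repo:latest" . >&2 &&
        podman push --digestfile "$digestfile" "$repo:latest" >&2 ||
        { rm -f "$digestfile"; return 1; }
    printf '%s@%s\n' "$repo" "$(cat "$digestfile")"
    rm -f "$digestfile"
}

# Print a MachineConfig that points a pool at the image.
# Refuses references that are not pinned by digest.
render_machineconfig() {
    name=$1 role=$2 image=$3
    case $image in
        *@sha256:*) ;;
        *) echo "image must be pinned by digest: $image" >&2; return 1 ;;
    esac
    cat <<EOF
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: $role
  name: $name
spec:
  osImageURL: $image
EOF
}

# Example rollout to the worker pool:
#   image=$(build_and_push quay.io/my-registry/custom-image) &&
#       render_machineconfig os-layer-hotfix worker "$image" | oc apply -f -
#   oc get machineconfigpool worker    # watch the rolling update progress
```

Rejecting tag-only references in `render_machineconfig` mirrors the note above: the digest is what keeps every node in the pool on the same image.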

We’ve also introduced this model upstream in Fedora CoreOS. Here is an interesting single-server example that hints at the range of configuration possibilities now available:

    # This example uses Ansible to configure firewalld to set up a node-local firewall suitable as recommended for use as an OpenShift 4 worker.
    # However, this is intended to generalize to using Ansible as well as firewalld for generic tasks.
    FROM quay.io/fedora/fedora-coreos:stable
    ADD configure-firewall-playbook.yml .

    # Install firewalld; also install ansible, use it to run a playbook, then remove it
    # so it doesn't take up space persistently.
    # TODO: Need to also remove ansible-installed dependencies
    RUN rpm-ostree install firewalld ansible && \
        ansible-playbook configure-firewall-playbook.yml && \
        rpm -e ansible && \
        ostree container commit

SOURCE: https://github.com/coreos/layering-examples/blob/main/ansible-firewalld/Containerfile

This Containerfile pulls the latest fedora-coreos:stable base image and copies in an Ansible playbook. We then install firewalld and Ansible, and run the firewall configuration playbook in offline mode. With the system configured, we remove Ansible, delete some cached repo data, and commit the final container image.

Why are we removing Ansible? Because we don’t need to run Ansible in-place. Under this model, if we wanted to change the open ports, for example, we would not reach out and change the configuration on each node. Rather, we edit the playbook, rebuild the image, and rebase our server to that new image. We’ve learned over the years that redeploying the state you want is far less error prone than trying to cover all the possible in-place upgrade scenarios.

By using this architecture with pools of machines, we can allow robust customization, yet still inhibit configuration drift because each machine in the pool is being reliably rebased to the same image.
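On a standalone Fedora CoreOS server like the one in the firewalld example, the rebase step can be sketched as follows. In an OpenShift cluster the Machine Config Operator performs this for you, so this applies only to the single-server case. The function names and the image reference are placeholders made up for illustration; the `ostree-unverified-registry:` prefix is the rpm-ostree transport for pulling an image from a registry without signature verification.

```shell
#!/bin/sh
# Print the rpm-ostree rebase target for a container image in a registry.
# The ostree-unverified-registry: prefix skips signature verification, so
# prefer a digest-pinned reference from a registry you trust.
# (rebase_target and rebase_to_image are hypothetical helper names.)
rebase_target() {
    printf 'ostree-unverified-registry:%s\n' "$1"
}

# Stage the image as the next boot target. The running system is not
# modified; the new root filesystem takes effect after a reboot.
rebase_to_image() {
    rpm-ostree rebase "$(rebase_target "$1")"
}

# Example, run as root on the server (the image reference is a placeholder):
#   rebase_to_image quay.io/my-registry/fcos-firewall@sha256:... &&
#       systemctl reboot
```

Changing the open ports then means editing the playbook, rebuilding and pushing the image, and repeating the rebase with the new reference.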

Today

In OpenShift 4.13 we are supporting off-cluster builds, where you provide a build environment and a container registry to host the machine images. Customizations to the base image, including installing packages and directly dropping in configuration files, will be supported. [Please note: we are not deprecating the MachineConfig API].

This should be done with some care. When you enable off-cluster builds, you are taking ownership of defining the image for each MachineConfigPool. If you don’t define a new image, RHCOS will not be updated. You have the wheel.

While it may be tempting to avoid image updates and the resulting rolling reboot, consider that the further you fall behind what we ship together as a unit (meaning the OCP platform containers and RHCOS), the further you are from what we test. That may not matter every time. In fact, there is some version skew associated with the rollout of every update. But over time, it increases the chances that you will see issues that other customers may not be reporting, because few or none have the particular combination of OCP containers and RHCOS that you are running. This is particularly true of CRI-O and the kubelet, which are part of the RHCOS image and work with the API server, which is a control plane pod. Keeping close also minimizes the amount of change in any given update that you deploy.
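One way to notice when a custom image has fallen behind is to compare the base image named in your Containerfile with the RHCOS image of the cluster's current release. The sketch below assumes oc is logged in to the target cluster and reuses the `oc adm release info --image-for rhel-coreos` command from the Containerfile comment earlier. The function names are hypothetical, and the simple `FROM` parsing assumes a single-stage Containerfile with no `--platform` option.

```shell
#!/bin/sh
# Print the image reference on the first FROM line of a Containerfile.
# Assumes the form "FROM <image>" with no --platform option.
# (containerfile_base and base_is_current are hypothetical helper names.)
containerfile_base() {
    awk 'toupper($1) == "FROM" { print $2; exit }' "$1"
}

# Compare the Containerfile's base with the RHCOS image of the cluster's
# current release. Returns 0 on a match, 1 when the base differs, and 2
# when the cluster could not be queried.
base_is_current() {
    want=$(oc adm release info --image-for rhel-coreos) || return 2
    have=$(containerfile_base "$1")
    if [ "$have" = "$want" ]; then
        echo "base image matches the cluster release"
    else
        echo "base image differs from the cluster release:" >&2
        echo "  Containerfile: $have" >&2
        echo "  cluster:       $want" >&2
        return 1
    fi
}

# Example: run after each cluster update, before deciding whether to rebuild.
#   base_is_current ./Containerfile || echo "time to rebuild the custom image"
```

A check like this could run in the same pipeline that builds the image, so a cluster update becomes a prompt to rebuild rather than something discovered later.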

Of course, as per our normal policy, if any customizations appear to be related to the problem you are calling support about, you may be asked to try reproducing with those customizations disabled.

The road ahead

Our top two priorities are:

- On-cluster builds. The batteries-included version. This removes the need to maintain separate infrastructure for building and storing machine container images. Eventually we want deployment of customized images to be as simple as managing stock RHCOS images is today.

- Custom first-boot images. Today the deployment architecture of OpenShift still requires the use of unmodified first-boot images when installing a new cluster or scaling out a new node. That means that for now, you won’t be able to add a device driver that may be required for bootstrap. For example, if you want to install RHCOS to a storage or networking device that only has an out-of-tree driver, that is not possible yet.

Beyond that, we will look to enhance the on-cluster build process with more automation knobs, integrate with the OpenShift web console, and pull in as much customer feedback as we can. If you have questions about CoreOS Layering or anything else RHCOS-related, please reach out to your account team and let’s talk!

About the authors

Mark joined Red Hat in 2014 and is part of the RHEL and OpenShift product management teams. Before that, he had experience as: a sysadmin, a support tech for multiple Linux distros, a consulting architect and an engineering partnership manager. He loves discussing image-based operating systems, edge scenarios, and container engines. Outside of work, Mark enjoys going to see punk rock and other weird music shows in tiny clubs.
