Micro frontends enable teams to work more efficiently by allowing them to build and deploy independently. They have a clean separation of concerns, and they give development teams ownership of the end-to-end user experience.

This article explores a simple micro frontend project to show how you can structure, build, deploy, and integrate product features into an existing monolithic application. Its goal is to help you understand the why and the how of micro frontends.

Project overview

The example application I explore here is called Practice Question Service. In this scenario, a team supports multiple educational course websites. The application lets students create practice questions as they move through the course material and then use those questions for review when preparing for exams.

A user story for this feature might be: "As a student who is reading through course material, I come across a piece of key information that I want to save for review. I'm able to easily create a practice question that contains the following information: question, answer, and notes."

Development challenges

In this scenario, typical development challenges for teams working with a monolithic application might be:

- Limited deployments: Monoliths typically, though not always, opt for long sprints between deployments. This reduces the risk of new features introducing regressions to the monolith's extensive feature set, but it makes it more difficult for teams to plan and launch new features.

- Feature collision: When every feature lives in one bucket, a change to one feature can introduce regressions in other functionality (you'd be surprised how often this happens). This is why core teams try to limit the number of new features they introduce concurrently.

- Limited technology stack: With monoliths, you have to work with the available tools. Integrating new technologies within the monolith is often a significant lift. Also, introducing new technology to the monolith means more responsibility for the team dedicated to ensuring uptime.

- Ownership: When you have large teams supporting a monolith, the responsibility for a feature may bounce from one developer to another. This sounds good on the surface but often leads to developers having to maintain product features they aren't entirely familiar with.

The micro frontend model

The micro frontend model aims to solve these problems by enabling many small, cross-functional teams to build and deploy product features independently of the core monolith. Each team has its own technology stack, its own deployment pipeline, and a dedicated group of people to support the product feature's core mission.

Now let's take a look at the example micro frontend.

The repo

The two discrete pieces of the project are the backend and frontend.

The frontend is responsible for any user experience you need to provide to the customers and product administrators.

The backend is a purely headless service. This is the API responsible for receiving requests from the frontend components. It can also be responsible for communicating with other backend services. For my backend, I chose Hasura, an open source GraphQL engine, but you could do the same thing with similar tools.

The two pieces for the Practice Question Service are in the components and hasura directories:

- Frontend (components): The components directory contains one or more components that serve as the integration point between the course website and the product feature (practice questions). Any user experience (UX) you need to provide gets composed and delivered in these components. In this case, they are a collection of web components, but they could be built with React, Angular, Vue, or a similar framework. Whatever you pick, make sure the components integrate seamlessly not only with your monolithic application but also with other micro frontends (which is why I chose web components).

- Backend (hasura): Hasura is an open source GraphQL engine that enables me to quickly build a robust API for frontends. Again, the technology stack is up to you; use whatever best suits your team's needs. Just make sure that it is API-first and easily deployable. All configuration changes to your backend should be described in code so that they can be deployed together with the frontend.

Communication

When the practice question components are placed in the monolith, they should understand how to communicate with the backend out of the box. They immediately ping the backend service to ensure it's operational and begin fetching any data required to set themselves up. The frontend components also understand how to create data. In this case, the component knows how to take the question, answer, and notes text fields and send them to the backend service for storage.
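As a rough sketch of what that exchange could look like, the TypeScript below builds the request a component might send to a GraphQL backend to store a new question. The `practice_questions` table, the `insert_practice_questions_one` mutation, the returned `id` field, and the example endpoint are assumptions made for illustration; they are not taken from the demo repo.

```typescript
// Fields the component collects from the student, per the user story.
interface PracticeQuestion {
  question: string;
  answer: string;
  notes: string;
}

// A plain description of an HTTP request that the component could hand to fetch().
interface GraphQLRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical mutation: assumes the backend tracks a "practice_questions" table
// with an "id" column. Adjust the names to match your own schema.
const CREATE_QUESTION = `
  mutation CreateQuestion($object: practice_questions_insert_input!) {
    insert_practice_questions_one(object: $object) { id }
  }`;

function buildCreateRequest(endpoint: string, input: PracticeQuestion): GraphQLRequest {
  return {
    url: endpoint,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // GraphQL over HTTP sends the query text and its variables as one JSON document.
    body: JSON.stringify({ query: CREATE_QUESTION, variables: { object: input } }),
  };
}
```

In a browser, the component could call `buildCreateRequest(endpoint, fields)` and pass the resulting `url`, `method`, `headers`, and `body` to `fetch`. Keeping the request builder separate from the network call makes it easy to test without a running backend.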

The components also know how to handle errors coming back from the backend. If the backend responds to a new submission with an error, the component should understand exactly what to do with the error. In this case, it shows a dialog message saying there is an issue creating the question and prompting the user to try again.
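One way to picture that error handling is as a small function that turns the backend's response into the state the component should display. This is a minimal sketch, assuming a GraphQL backend that reports failures in an `errors` array; the `SubmitState` type, the `toSubmitState` name, and the dialog wording are hypothetical.

```typescript
// Shape of a GraphQL response: "errors" is present when the operation failed.
interface GraphQLResponse<T> {
  data?: T | null;
  errors?: { message: string }[];
}

// What the component should show after a submission.
type SubmitState =
  | { kind: "saved" }
  | { kind: "error"; dialog: string };

function toSubmitState<T>(response: GraphQLResponse<T>): SubmitState {
  const hasErrors = (response.errors?.length ?? 0) > 0;
  const hasData = response.data != null;
  if (hasErrors || !hasData) {
    // Keep backend details out of the dialog; show a fixed, user-facing message.
    return {
      kind: "error",
      dialog: "There was an issue creating the question. Please try again.",
    };
  }
  return { kind: "saved" };
}
```

Because the function covers both outcomes explicitly, the component always knows what to render, whether the submission succeeded or not.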

Deployments

Deployments will vary greatly depending on the technology stack you choose for your micro frontend and on your governance around how new features get deployed to your monoliths. That's too large a topic for one article. The good news is that micro frontends are, by nature, very flexible, so they can fit into whatever deployment strategy your team decides on.

In the Practice Question Service, it looks something like this:

The backend service is deployed to a managed cloud service (in this example, Hasura.io). The frontend components get published to npmjs.org, and unpkg.com then automatically serves the published components from a content delivery network (CDN).
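To make the CDN step concrete, here is a small sketch of how the URL for a published file could be derived. unpkg serves files at `https://unpkg.com/<package>@<version>/<file>`; the package name and file path in the usage note are placeholders, not the real names from the demo.

```typescript
// Builds the unpkg CDN URL for a file inside a published npm package.
function unpkgUrl(pkg: string, version: string, file: string): string {
  const path = file.replace(/^\/+/, ""); // tolerate a leading slash in the file path
  return `https://unpkg.com/${pkg}@${version}/${path}`;
}
```

For example, `unpkgUrl("practice-question-components", "1.0.0", "/dist/practice-question.js")` returns `https://unpkg.com/practice-question-components@1.0.0/dist/practice-question.js`. Pinning an exact version in the URL lets the host application decide when to pick up a new release of the components.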

Integration

The integration point between the Practice Question Service and the existing application could not be easier. The application is responsible for three things:

- Registering the components

- Specifying the location where it should render the components

- Setting necessary environment variables

In this demo, you add the Practice Question Service to a demo page in the repo.

First, register the practice question component with the site by importing it using a script tag.

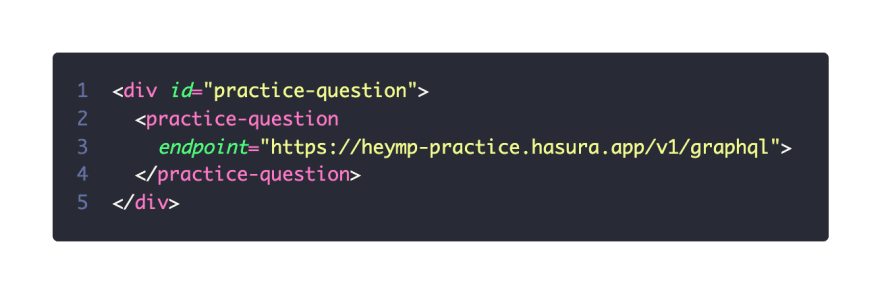

Now that you've registered the component, you need to specify where it should render in the application. Web components allow you to do this easily.

Now that you've registered the component and placed it in the application, you can see that it's working, but it isn't yet communicating with the backend.

The only other thing the application must provide to the component is the location of the live backend, in the form of a URL. This handoff of environment variables between the application and the component is equivalent to what happens on the server, where the server is responsible for setting runtime environment variables for the applications it runs. This is precisely what you're doing here with the endpoint variable.
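Taken together, the three responsibilities amount to very little markup. The sketch below generates it as a string so the pieces are easy to see. The `practice-question` tag name, the `endpoint` attribute, and loading the script as a module are assumptions for illustration; check the component's own documentation for the real names.

```typescript
// Escapes characters that would break out of a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Returns the markup a host page needs: a script tag that registers the
// component, and an element that marks where it renders and carries the
// backend endpoint.
function integrationMarkup(scriptUrl: string, endpoint: string): string {
  return [
    `<script type="module" src="${escapeAttr(scriptUrl)}"></script>`,
    `<practice-question endpoint="${escapeAttr(endpoint)}"></practice-question>`,
  ].join("\n");
}
```

Calling `integrationMarkup` with the CDN URL of the component script and the URL of the backend yields two lines of HTML, one per responsibility beyond the endpoint value itself, which is all the host application has to own.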

And that's it! You successfully integrated your micro frontend.

A world of possibilities

This article doesn't even begin to scratch the surface of this topic, but I hope it illuminates the basic principles of micro frontends. Micro frontends open up a world of possibilities to teams that struggle to release maintainable product features quickly.

This model provides a clear separation of concerns. It enables teams to increase ownership and independence without compromising the stability of existing applications. As a longtime maintainer of monolithic applications, I'm excited about micro frontends, and I believe they are the future of building web applications.

You can find the code for this demo in my GitHub repo. Please give me a shoutout on Twitter if you have any questions.

This originally appeared on Dev.to and is republished with permission.

About the author

Michael is a Principal Front-end Developer on the Digital UX team at Red Hat. He is a maintainer of the open source design system PatternFly Elements. As a long-time Drupal community member, he has been evangelizing component-based architecture as a means of making monolithic applications maintainable. He focuses primarily on microservices, micro frontends, and web component technologies.
