MLRun is an open source machine learning operations (MLOps) orchestration framework designed to build, manage and scale ML applications. As orchestration and automation are core to the Red Hat portfolio, we work to enable the community with more effective and efficient machine learning tools to grow their artificial intelligence (AI) workloads. In this article, we will install and deploy the MLRun Framework on a Red Hat OpenShift cluster to showcase the diversity of what the OpenShift ecosystem and the communities around it can provide in the world of AI.

MLRun’s MLOps framework

MLRun serves as an open MLOps framework, facilitating the development and management of continuous machine learning (ML) applications throughout their lifecycle. It integrates with your development and CI/CD environment, automating the delivery of production data, ML pipelines and online applications, which in turn helps to reduce engineering effort, time to production and computation resources. Its orchestration allows for greater flexibility and efficiency when scaling and managing intelligent applications for production. MLRun also fosters collaboration and accelerates continuous improvement by breaking down silos between data, ML, software and DevOps/MLOps teams.

Prerequisites

For this walkthrough, you will need OpenShift 4.14, Helm and the MLRun Helm repository. If you do not have an OpenShift subscription, you can use the Developer Sandbox with a 30-day trial. Adding the MLRun Helm repository is covered in this tutorial, so no further action is needed beforehand.
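Before starting, it can be handy to confirm that the command-line tools are on your PATH. The following is a minimal sketch, not part of the original walkthrough; the function name `require_tools` is our own.

```shell
# Check that each named command is available on PATH.
# Prints every missing tool to stderr and returns non-zero if any is absent.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For this walkthrough you would call it as `require_tools oc helm`.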

It’s important to note that we’ll be running MLRun on OpenShift 4.14, which runs Kubernetes 1.27 under the hood. The latest version of Kubernetes that MLRun officially fully supports is 1.26. Although we did not run into any issues with 1.27, it’s worth noting that at this time it is not fully supported. If it is important for you to align with their supported Kubernetes version, you can always use OpenShift 4.13 which ships with Kubernetes 1.26.
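If you want to check a cluster against that support boundary, a small helper can compare the Kubernetes minor version with 1.26. This is only a sketch: the function and variable names are hypothetical, and the version string is assumed to look like the `v1.27.x` value that `oc version` reports as the Kubernetes version.

```shell
# Highest Kubernetes minor version this article describes as fully supported by MLRun.
MAX_SUPPORTED_MINOR=26

# Succeeds when the given version string (for example "v1.27.6") has a
# minor version at or below MAX_SUPPORTED_MINOR.
k8s_minor_supported() {
  version=${1#v}            # strip a leading "v"
  rest=${version#*.}        # drop the major part, leaving e.g. "27.6"
  minor=${rest%%.*}         # keep the text before the next dot, e.g. "27"
  minor=${minor%%[!0-9]*}   # strip any non-digit suffix such as "+"
  [ -n "$minor" ] && [ "$minor" -le "$MAX_SUPPORTED_MINOR" ]
}
```

For example, `k8s_minor_supported "v1.27.6" || echo "newer than the fully supported version"` would print the warning on OpenShift 4.14.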

Preparing for the MLRun install

Let’s get things started by creating an OpenShift project in which MLRun will reside:

$ oc new-project mlrun

Now using project "mlrun" on server "https://api.ocp4.multicluster.io:6443".

You can add applications to this project with the 'new-app' command. For example, try:

oc new-app rails-postgresql-example

to build a new example application in Ruby. Or use kubectl to deploy a simple Kubernetes application:

kubectl create deployment hello-node --image=registry.k8s.io/e2e-test-images/agnhost:2.43 -- /agnhost serve-hostname

Because of how MLRun has been set up, its various components expect to run under specific user IDs (UIDs). To allow for that, we’ll have to grant the anyuid security context constraint (SCC) to the corresponding service accounts.
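The individual commands that follow can also be written as a loop. The sketch below assumes an sh or bash shell and the same service account names used in this section; the function name `grant_anyuid` and the explicit `-n` namespace argument are our additions.

```shell
# Service accounts taken from the individual commands in this section.
MLRUN_SERVICE_ACCOUNTS="default minio-sa state-metrics ml-pipeline-persistenceagent minio grafana argo mlrun-db mlrun-api monitoring-admission monitoring-operator monitoring-prometheus"

# Grant the anyuid SCC to every listed service account in the given
# namespace (defaults to "mlrun"). Stops at the first failing command.
grant_anyuid() {
  namespace=${1:-mlrun}
  for sa in $MLRUN_SERVICE_ACCOUNTS; do
    oc adm policy add-scc-to-user anyuid -z "$sa" -n "$namespace" || return 1
  done
}
```

Running `grant_anyuid mlrun` should have the same effect as the twelve commands listed one by one.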

$ oc adm policy add-scc-to-user anyuid -z default

$ oc adm policy add-scc-to-user anyuid -z minio-sa

$ oc adm policy add-scc-to-user anyuid -z state-metrics

$ oc adm policy add-scc-to-user anyuid -z ml-pipeline-persistenceagent

$ oc adm policy add-scc-to-user anyuid -z minio

$ oc adm policy add-scc-to-user anyuid -z grafana

$ oc adm policy add-scc-to-user anyuid -z argo

$ oc adm policy add-scc-to-user anyuid -z mlrun-db

$ oc adm policy add-scc-to-user anyuid -z mlrun-api

$ oc adm policy add-scc-to-user anyuid -z monitoring-admission

$ oc adm policy add-scc-to-user anyuid -z monitoring-operator

$ oc adm policy add-scc-to-user anyuid -z monitoring-prometheus

Installing MLRun

MLRun uses Helm to deploy to Kubernetes-based environments. Let’s start off by adding the MLRun Helm repo with the following command and updating with the latest repository available:

$ helm repo add mlrun-ce https://mlrun.github.io/ce

"mlrun-ce" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "mlrun-ce" chart repository

Update Complete. ⎈Happy Helming!⎈

We’ll make sure the MLRun repo was added by listing our available repositories.

$ helm repo list

NAME URL

mlrun-ce https://mlrun.github.io/ce
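If you would rather script this check than read the listing by eye, the output of `helm repo list` can be piped through a small filter. This is a sketch; the function name `repo_registered` is hypothetical, and it assumes the two-column NAME/URL layout shown above.

```shell
# Reads `helm repo list` output on stdin and succeeds when a repository
# with the given name (first argument) points at the given URL (second argument).
repo_registered() {
  awk -v name="$1" -v url="$2" '
    NR > 1 && $1 == name && $2 == url { found = 1 }  # skip the header row
    END { exit (found ? 0 : 1) }
  '
}
```

A possible invocation is `helm repo list | repo_registered mlrun-ce https://mlrun.github.io/ce && echo "repo is registered"`.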

Now, we’ll install the MLRun chart from that repository into our OpenShift cluster:

$ helm --namespace mlrun \
    install mlrun-ce \
    --wait \
    --timeout 960s \
    --set kube-prometheus-stack.enabled=false \
    mlrun-ce/mlrun-ce

NAME: mlrun-ce

LAST DEPLOYED: Mon Dec 11 14:00:51 2023

NAMESPACE: mlrun

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

You're up and running !

1. Jupyter UI is available at:

http://localhost:30040

2. Nuclio UI is available at:

http://localhost:30050

3. MLRun UI is available at:

http://localhost:30060

4. MLRun API is exposed externally at:

http://localhost:30070

5. Minio API is exposed externally at:

http://localhost:30080

6. Minio UI is available at:

http://localhost:30090

Credentials:

username: minio

password: minio123

7. Pipelines UI is available at:

http://localhost:30100

Happy MLOPSing !!! :]

Accessing MLRun UI

Now that we have deployed the Helm chart, we need to be able to access the MLRun user interface (UI), so let’s create some routes to the various components that were installed:
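The individual `oc expose` commands that follow can also be wrapped in a loop that prints each resulting URL. The sketch below assumes an sh or bash shell and that each route takes the name of its service, as is the case for the mlrun-ui route used later; the function name `expose_mlrun_services` is our own.

```shell
# Services taken from the individual `oc expose` commands in this section.
MLRUN_SERVICES="mlrun-jupyter nuclio-dashboard mlrun-ui mlrun-api minio-console minio ml-pipeline-ui"

# Create a route for each service in the given namespace (defaults to
# "mlrun"), then print the plain-HTTP URL built from the route's host.
expose_mlrun_services() {
  namespace=${1:-mlrun}
  for svc in $MLRUN_SERVICES; do
    oc expose "service/$svc" -n "$namespace" || return 1
    host=$(oc get route "$svc" -n "$namespace" -o jsonpath='{.spec.host}') || return 1
    echo "$svc: http://$host"
  done
}
```

Calling `expose_mlrun_services mlrun` would create the same seven routes and list where each one can be reached.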

$ oc expose service/mlrun-jupyter

$ oc expose service/nuclio-dashboard

$ oc expose service/mlrun-ui

$ oc expose service/mlrun-api

$ oc expose service/minio-console

$ oc expose service/minio

$ oc expose service/ml-pipeline-ui

To access the MLRun UI, you’ll need to point your browser to the mlrun-ui route you just created via HTTP. We can use the following command to get the route URL:

$ oc get route mlrun-ui

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD

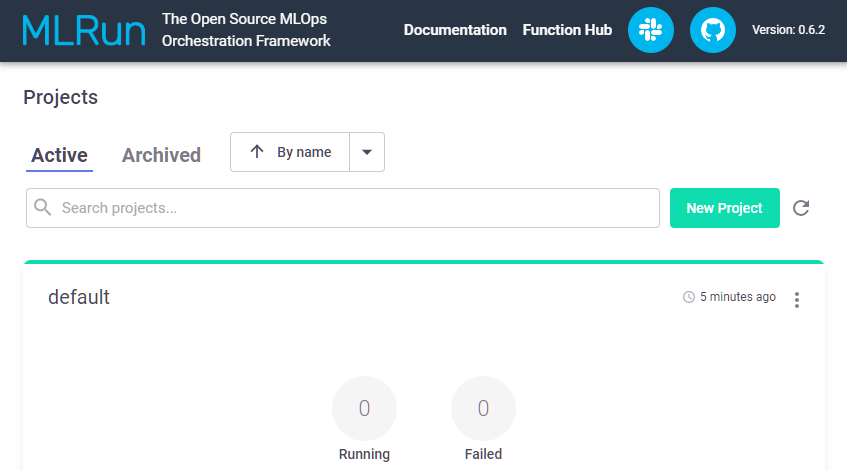

mlrun-ui mlrun-ui-mlrun.apps.ocp4.example.com mlrun-ui http NoneWe’ll take the address http://mlrun-ui-mlrun.apps.ocp4.example.com and enter that into any browser. Be sure you are using HTTP and not HTTPS. You should now see the MLRun Community Edition UI resolve and it should look something like this:

We have successfully run MLRun on our OpenShift cluster! It's as simple as that. Now you have the MLRun open MLOps framework ready to use on OpenShift. For more information on MLRun, you can visit the project website at https://www.mlrun.org/.

If you would like to try out OpenShift, you can with our Developer Sandbox using a no-cost trial. Learn more about OpenShift and our AI initiatives.
