Overview

Policies in Red Hat Advanced Cluster Management for Kubernetes (RHACM) can define desired states for managed clusters within a fleet. Multiple policies are often required to accomplish one desired end result, such as installing and configuring an operator. The policy framework provides the ability to logically organize multiple objects into one policy, and multiple policies into a policy set, but previously it had not provided a way to apply policies in a specific order.

In the 2.7 release of RHACM, new fields were added to the policy framework so that policy authors can declare when a policy should be applied, based on the compliance of other policies on that managed cluster. For example, by using the spec.dependencies and spec.policy-templates[*].extraDependencies fields, an author can ensure that a custom resource definition and the operator managing it are installed before attempting to create an instance of that custom resource. This can help reduce the load on the Kubernetes API server, and reduce violation noise inside a cluster.

Continue reading for a walk-through of how to use spec.dependencies and spec.policy-templates[*].extraDependencies to orchestrate the activation of policies in two use cases. In the first example, policies are used to install the Strimzi operator and create a Kafka cluster, with policy dependencies ensuring that actions are taken in the correct order. The second example shows how to activate a policy when something is non-compliant, demonstrating a possible approach to automatically remediating violations.

Policy dependency overview

Before using the new fields, it is important to understand how the policy resources are configured. The dependencies field defines requirements for everything in a policy, while the extraDependencies field defines extra requirements for one specific policy template. Both have the same schema: each is a list of objects with the following fields:

- apiVersion
- kind
- name
- namespace
- compliance

For more information about each of the fields, see the documentation. The namespace and compliance fields deserve extra discussion. The right value for the namespace field can vary because dependencies are checked on each managed cluster, and objects like configuration policies usually exist within the cluster namespace (which has a unique name per cluster). To address this, leave the namespace field in a dependency empty so that the framework uses the cluster namespace dynamically.

The compliance field is the condition the policy is waiting for. When the target object's status.compliant field matches the value specified here, the dependency is considered satisfied. When the target object does not exist or its status does not match, the policy defining the dependency is in a Pending state.
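As a minimal sketch of the schema described above, a single dependency entry might look like the following. The name example-prerequisite is hypothetical and used only for illustration; the same shape applies whether the entry sits under spec.dependencies or under a template's extraDependencies.

```yaml
# Hypothetical dependency entry (the name is an assumption, not from a real policy)
- apiVersion: policy.open-cluster-management.io/v1
  kind: ConfigurationPolicy
  name: example-prerequisite
  namespace: ""            # left empty so the cluster namespace is used
  compliance: Compliant    # the state this dependency waits for
```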

Example installing Kafka with Strimzi

Strimzi provides a way to create and manage Apache Kafka® instances on a Kubernetes cluster using an operator. Using policies to manage an object that is itself managed by an operator is a common pattern, and it inherently involves dependencies. For this example, the policies should be written to ensure that the operator is installed before the object it manages.

Here is a policy to install the operator into the myapp namespace:

apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: myapp-strimzi-operator
  namespace: default
spec:
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-strimzi-operatorgroup
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1
                kind: OperatorGroup
                metadata:
                  name: myapp-strimzi
                  namespace: myapp
                spec:
                  targetNamespaces:
                    - myapp
                  upgradeStrategy: Default
    - extraDependencies:
        - apiVersion: policy.open-cluster-management.io/v1
          kind: ConfigurationPolicy
          name: myapp-strimzi-operatorgroup
          namespace: ""
          compliance: Compliant
      objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-strimzi-subscription
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1alpha1
                kind: Subscription
                metadata:
                  name: strimzi-kafka-operator
                  namespace: myapp
                spec:
                  channel: stable
                  installPlanApproval: Automatic
                  name: strimzi-kafka-operator
                  source: community-operators
                  sourceNamespace: openshift-marketplace
                  startingCSV: strimzi-cluster-operator.v0.32.0
    - extraDependencies:
        - apiVersion: policy.open-cluster-management.io/v1
          kind: ConfigurationPolicy
          name: myapp-strimzi-subscription
          namespace: ""
          compliance: Compliant
      objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-strimzi-csv-check
        spec:
          remediationAction: inform
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: operators.coreos.com/v1alpha1
                kind: ClusterServiceVersion
                metadata:
                  namespace: myapp
                spec:
                  displayName: Strimzi
                status:
                  phase: Succeeded
                  reason: InstallSucceeded

For simplicity, this policy assumes that the myapp namespace already exists, and that the Strimzi operator is included in a catalog source named community-operators in the openshift-marketplace namespace (which is the case when using Red Hat OpenShift Container Platform). It is possible to put those details into additional policies, and use dependencies to ensure they are applied first.
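As a rough sketch of that idea, the namespace could be created by its own policy. The policy name myapp-namespace is an assumption made for this illustration and does not appear in the original example.

```yaml
# Hypothetical policy that creates the myapp namespace
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: myapp-namespace   # assumed name, for illustration only
  namespace: default
spec:
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-namespace
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: Namespace
                metadata:
                  name: myapp
```

The operator policy could then wait for it by adding a dependencies list under its spec, along these lines:

```yaml
# Fragment for the spec of myapp-strimzi-operator (sketch)
dependencies:
  - apiVersion: policy.open-cluster-management.io/v1
    kind: Policy
    name: myapp-namespace   # the hypothetical policy above
    namespace: default
    compliance: Compliant
```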

The extraDependencies field is used in this operator policy so that the templates are applied in order. The Subscription is not created until the OperatorGroup exists, and the ClusterServiceVersion is not checked for until after the Subscription has been created. Note that each dependency uses an empty namespace field, because the configuration policies are in the cluster namespace.

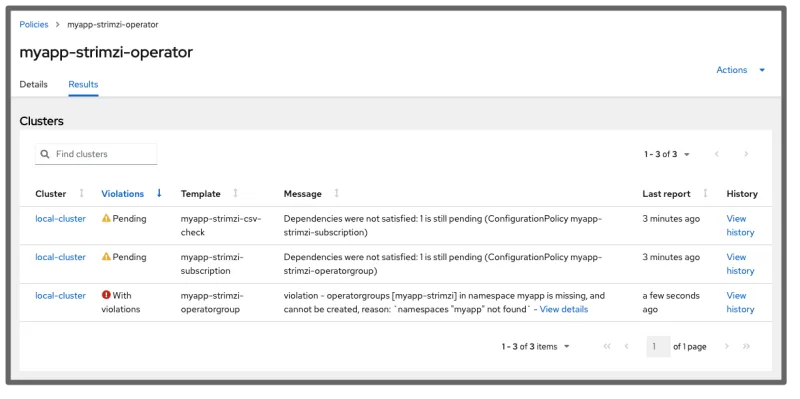

If the namespace has not been created yet, the other templates are displayed as Pending in the RHACM Governance console, and the status messages in the table describe why the templates are pending. View the following image:

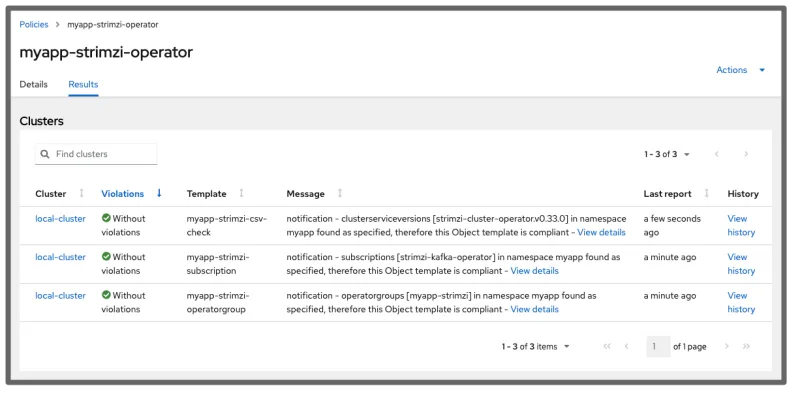

After the namespace is created and the installation proceeds, everything becomes compliant after a few minutes:

After the operator is installed, another policy creates a Kafka instance. This policy uses the dependencies field to be applied only after the operator is successfully installed. View the following policy:

apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: myapp-kafka
  namespace: default
spec:
  disabled: false
  dependencies:
    - apiVersion: policy.open-cluster-management.io/v1
      kind: Policy
      name: myapp-strimzi-operator
      namespace: default
      compliance: Compliant
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: myapp-kafka
        spec:
          remediationAction: enforce
          severity: medium
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: kafka.strimzi.io/v1beta2
                kind: Kafka
                metadata:
                  name: my-cluster
                  namespace: myapp
                spec:
                  kafka:
                    config:
                      offsets.topic.replication.factor: 3
                      transaction.state.log.replication.factor: 3
                      transaction.state.log.min.isr: 2
                      default.replication.factor: 3
                      min.insync.replicas: 2
                      inter.broker.protocol.version: "3.3"
                    storage:
                      type: ephemeral
                    listeners:
                      - name: plain
                        port: 9092
                        type: internal
                        tls: false
                      - name: tls
                        port: 9093
                        type: internal
                        tls: true
                    version: 3.3.1
                    replicas: 3
                  entityOperator:
                    topicOperator: {}
                    userOperator: {}
                  zookeeper:
                    storage:
                      type: ephemeral
                    replicas: 3

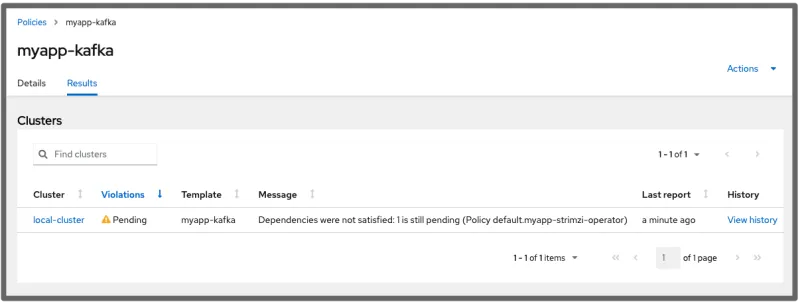

Before the operator policy becomes compliant, the Kafka instance policy is marked as Pending:

After everything is created, both policies are compliant:

Example refreshing a certificate

Configuration policies can be set to enforce mode, automatically remediating violations by applying changes to objects inside the cluster, but other policy types do not always have this ability. For example, certificate policies can identify when a certificate is about to expire, but cannot refresh it by themselves.

This example uses a hypothetical container image that can be used in a Kubernetes Job to refresh a certificate. Such an image might be created by an application administrator to automate certificate refreshes in a given namespace.

This example policy uses a policy dependency to create a job when any certificate in the myapp namespace is going to expire within six hours (6h):

apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: certificate-with-remediation
  namespace: default
spec:
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: CertificatePolicy
        metadata:
          name: expiring-myapp-certificates
        spec:
          namespaceSelector:
            include: ["myapp"]
          remediationAction: inform
          severity: low
          minimumDuration: 6h
    - extraDependencies:
        - apiVersion: policy.open-cluster-management.io/v1
          compliance: NonCompliant
          kind: CertificatePolicy
          name: expiring-myapp-certificates
          namespace: ""
      ignorePending: true
      objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: refresh-myapp-certificates
        spec:
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: batch/v1
                kind: Job
                metadata:
                  name: refresh-myapp-certificates
                  namespace: myapp
                spec:
                  template:
                    spec:
                      containers:
                        - name: refresher
                          image: quay.io/myapp/magic-cert-refresher:v1
                          command: ["refresh"]
                      restartPolicy: Never
                  backoffLimit: 2
          remediationAction: enforce
          pruneObjectBehavior: DeleteAll
          severity: low

When a policy template has a dependency that is not satisfied, it is usually marked as Pending, and the policy is not considered to be Compliant. To make this policy Compliant when nothing needs to be done, set ignorePending: true in the template.

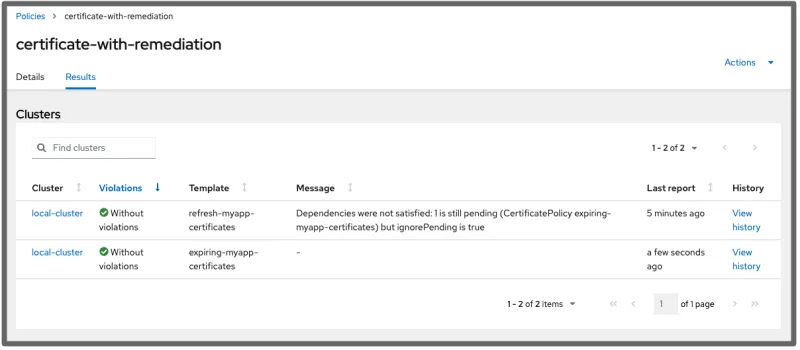

When no actions need to be taken on the cluster, the policy is compliant in the console. View the following image:

The Message details describe that the configuration policy is Pending, but considered compliant:

This policy also uses the pruneObjectBehavior: DeleteAll setting, where the configuration-policy-controller deletes the job when the template is inactive. This way, each time the configuration policy is activated, it creates a new job, as opposed to finding the job from the previous run.

Conclusion

Policy dependencies can be a powerful tool allowing policy authors to define relationships between objects managed by policies. This can help cluster administrators more quickly identify the blocking step in a group of policies that need to be applied in a certain order. Additionally, the feature is flexible enough to allow interesting remediation workflows to be automated when a policy becomes non-compliant.

The Strimzi and Kafka example policies in this blog can be expanded to also create and configure persistent storage for the cluster. That configuration can be one or more separate policies, but is likely to be just one additional policy dependency, for example one that checks whether a PersistentVolume exists. Since the dependency does not specify how that volume is created or provisioned, different provisioners can be used on different clusters.
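One possible shape for such a check is sketched below, following the same pattern as the ClusterServiceVersion check earlier. The template name myapp-pv-check is hypothetical, and a real check would likely match on more than the kind (for example, labels or a storage class) so that it is satisfied only by a suitable volume.

```yaml
# Sketch: an inform-only template that looks for any PersistentVolume
- objectDefinition:
    apiVersion: policy.open-cluster-management.io/v1
    kind: ConfigurationPolicy
    metadata:
      name: myapp-pv-check   # hypothetical name
    spec:
      remediationAction: inform
      severity: medium
      object-templates:
        - complianceType: musthave
          objectDefinition:
            apiVersion: v1
            kind: PersistentVolume
```

The Kafka template could then list it in extraDependencies if both templates are in the same policy:

```yaml
# Fragment for the myapp-kafka template (sketch)
extraDependencies:
  - apiVersion: policy.open-cluster-management.io/v1
    kind: ConfigurationPolicy
    name: myapp-pv-check   # the hypothetical check above
    namespace: ""
    compliance: Compliant
```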

The certificate remediation example policy in this blog can also be expanded to create a Tekton PipelineRun instead of a simple job. That pipeline might identify which certificates need to be refreshed, and supply that information to another Task. This process can be reused in multiple applications, while keeping the policy dependency relatively simple.

I look forward to seeing what use-cases the policy-collection community finds for this feature. Visit the policy-collection community!
