The real estate bubble of 2008 sent shockwaves around the world. The event was so significant that the US economy was only this year determined to have recovered to pre-bubble levels. It also set off shockwaves of another kind: regulatory ones.

To address these regulatory needs on Wall Street, Red Hat Storage teamed up with Hortonworks to build an enterprise-grade big data risk management solution. At the recent Strata+Hadoop World, Vamsi Chemitiganti (chief architect, financial services) presented the solution in a session, which you can watch at the bottom of this post.

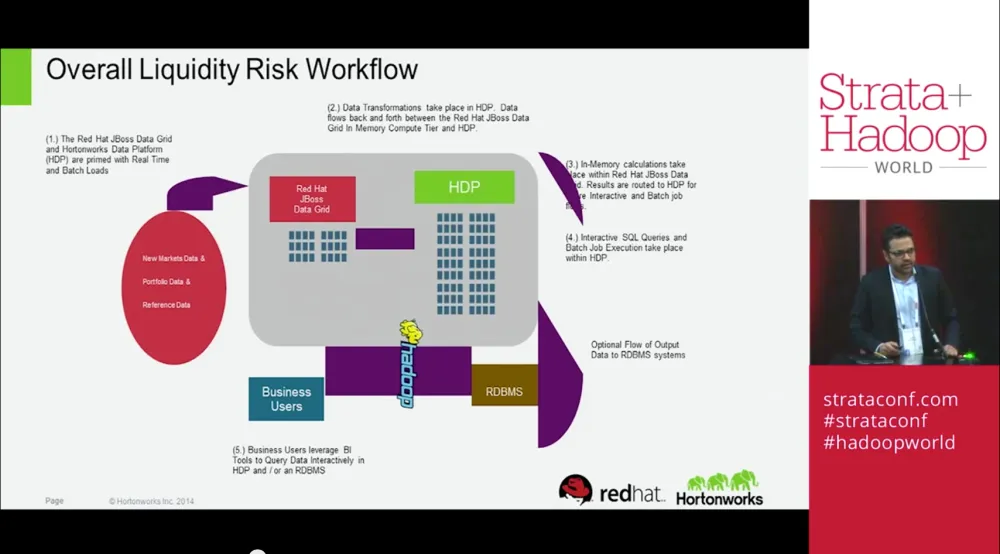

The slide does a great job of breaking down the workflow. To spare your eyes, here are the steps:

- The Red Hat JBoss Data Grid and Hortonworks Data Platform (HDP) are primed with real-time and batch loads.

- Data transformations take place in HDP. Data flows back and forth between HDP and the Red Hat JBoss Data Grid in-memory compute tier.

- In-memory calculations take place within Red Hat JBoss Data Grid. Results are routed to HDP, where interactive and batch jobs run.

- Interactive SQL queries and batch job execution take place within HDP.

- Business users leverage business intelligence tools to query data interactively in HDP and/or a relational database management system (RDBMS).
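
To make the flow of data between the two tiers easier to picture, here is a toy sketch in plain Python. It is not the solution presented in the session and uses no Red Hat or Hortonworks API: a dict stands in for the in-memory compute tier, lists stand in for the batch tier, and the `RiskPipeline` class, the trade fields (`id`, `desk`, `notional`) and the per-desk exposure sum are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict


class RiskPipeline:
    """Toy model of the workflow; every name here is hypothetical."""

    def __init__(self):
        self.in_memory_grid = {}   # stand-in for the in-memory compute tier
        self.batch_store = []      # stand-in for raw data held in the batch tier
        self.batch_results = []    # stand-in for results routed to the batch tier

    def load(self, trades):
        """Step 1: prime both tiers with the same incoming records."""
        for trade in trades:
            self.in_memory_grid[trade["id"]] = dict(trade)
            self.batch_store.append(dict(trade))

    def transform(self):
        """Step 2: normalize records in the batch tier, then sync the grid."""
        for trade in self.batch_store:
            trade["desk"] = trade["desk"].strip().upper()
            self.in_memory_grid[trade["id"]] = dict(trade)

    def calculate_exposure(self):
        """Step 3: compute in memory, then route the result to the batch tier."""
        exposure = defaultdict(float)
        for trade in self.in_memory_grid.values():
            exposure[trade["desk"]] += trade["notional"]
        result = dict(exposure)
        self.batch_results.append(result)
        return result

    def query(self, desk):
        """Steps 4 and 5: an interactive lookup against the latest result."""
        if not self.batch_results:
            return 0.0
        return self.batch_results[-1].get(desk, 0.0)


if __name__ == "__main__":
    pipeline = RiskPipeline()
    pipeline.load([
        {"id": 1, "desk": " rates ", "notional": 100.0},
        {"id": 2, "desk": "Rates", "notional": 50.0},
        {"id": 3, "desk": "fx", "notional": 25.0},
    ])
    pipeline.transform()
    pipeline.calculate_exposure()
    print(pipeline.query("RATES"))
```

In a real deployment each of these in-process structures would be a separate distributed system; the sketch only mirrors the order of the steps in the slide.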

Check out the full session right here:
